ICN strives to automate the installation of the local cluster controller to the greatest degree possible, an approach called "zero touch installation". Most of the work is done simply by booting up the jump host (local controller). Once booted, the controller is fully provisioned and begins to inspect and provision the baremetal servers until the cluster is entirely configured.

This document shows, step by step, how to configure the network and the deployment architecture for the ICN blueprint (BP).

Apache license v2.0

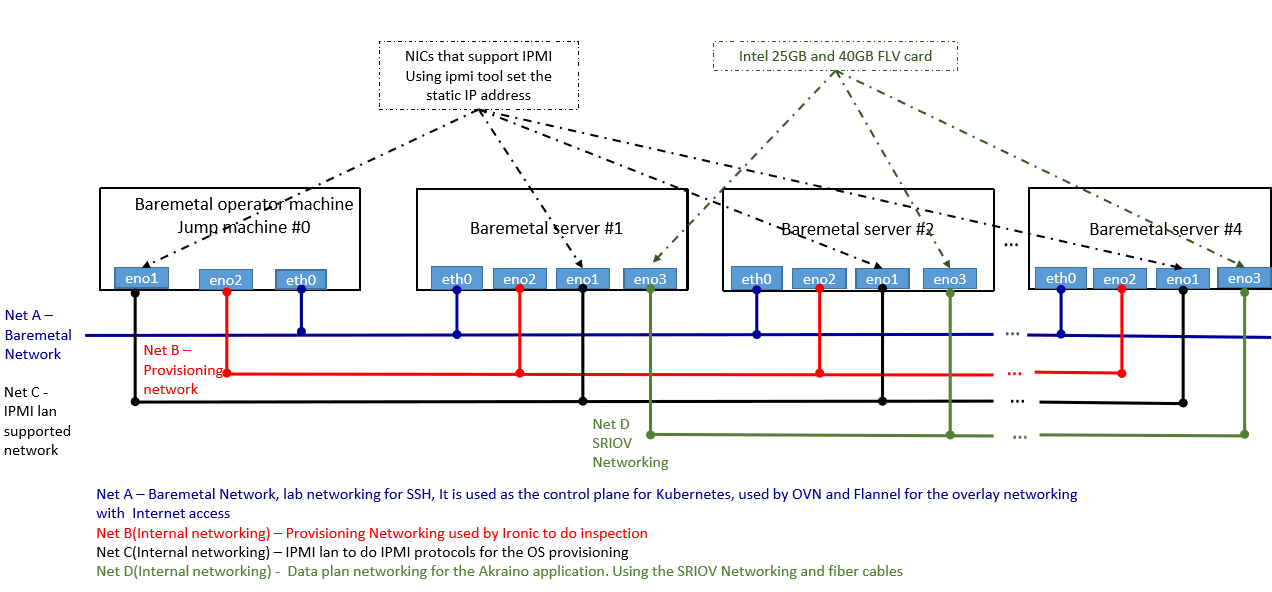

The local controller is provisioned with the Metal3 Baremetal Operator and Ironic, which enable the provisioning of baremetal servers. The controller has three network connections to the baremetal servers: network A connects the baremetal servers, network B is a private network used to provision the baremetal servers, and network C is the IPMI network, used for control during provisioning. In addition, the baremetal hosts connect to network D, the SR-IOV network.

In some deployment models, Net A and Net C can be combined into a single network. In that case, the developer must manage IP addresses carefully so that Net A addresses do not collide with the servers' IPMI addresses.
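When Net A and Net C are combined, one way to avoid address collisions is to confirm, before provisioning, that no IPMI address falls inside the Net A DHCP pool. The following is a minimal bash sketch, assuming the pool is a single contiguous IPv4 range; the function names and the pool boundaries used in the example are hypothetical and not part of ICN.

```bash
#!/bin/bash
# Hypothetical helper (not part of ICN): detect IPMI addresses that fall
# inside the Net A DHCP pool when Net A and Net C share one network.

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) + (b << 16) + (c << 8) + d ))
}

# Return 0 if address $1 lies within the inclusive range [$2, $3].
ip_in_range() {
  local ip start end
  ip=$(ip_to_int "$1")
  start=$(ip_to_int "$2")
  end=$(ip_to_int "$3")
  (( ip >= start && ip <= end ))
}

# Usage: check_ipmi_outside_pool POOL_START POOL_END IPMI_ADDR...
# Prints each IPMI address that collides with the pool; returns 1 if any do.
check_ipmi_outside_pool() {
  local pool_start=$1 pool_end=$2 rc=0 addr
  shift 2
  for addr in "$@"; do
    if ip_in_range "$addr" "$pool_start" "$pool_end"; then
      echo "collision: IPMI address $addr is inside the Net A DHCP pool"
      rc=1
    fi
  done
  return $rc
}
```

For example, "check_ipmi_outside_pool 10.10.10.100 10.10.10.200 10.10.10.11 10.10.10.12" checks the two IPMI addresses from the sample node inventory against a hypothetical pool of .100 to .200 and prints nothing, because neither address collides.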

There are two main components in the ICN infrastructure: the local controller and the compute K8s cluster.

The local controller resides on the jump server and runs the Metal3 operator, the Binary Provisioning Agent (BPA) operator, and the BPA REST API controller.

The compute K8s cluster runs the actual workloads and is installed on the baremetal nodes.

The all-in-one VM-based deployment requires a server with at least 32 GB of RAM and 32 CPUs.

Recommended hardware: servers with 64 GB of memory, 32 CPUs, and SR-IOV network cards.

The jump server must be pre-installed with Ubuntu 18.04.
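The hardware and OS requirements can be checked on the jump server before starting. The sketch below is a hypothetical helper, not an ICN script; it assumes "CPU" means logical CPUs as reported by nproc, and it reads MemTotal from /proc/meminfo.

```bash
#!/bin/bash
# Hypothetical pre-flight check for the jump server (not part of ICN).
# Defaults follow this guide: 32 CPUs and 32 GB RAM minimum for the
# all-in-one VM deployment, and Ubuntu 18.04 on the jump server.

# Return 0 if cpus >= min_cpus and mem_gib >= min_mem_gib.
meets_min() {
  local cpus=$1 mem_gib=$2 min_cpus=$3 min_mem_gib=$4
  (( cpus >= min_cpus && mem_gib >= min_mem_gib ))
}

# Return 0 if the given os-release file describes Ubuntu 18.04.
is_ubuntu_1804() {
  local file=${1:-/etc/os-release}
  # Subshell, so sourcing the file does not leak variables into the caller.
  ( . "$file" && [ "$ID" = "ubuntu" ] && [ "$VERSION_ID" = "18.04" ] )
}

# Usage: preflight [MIN_CPUS] [MIN_MEM_GB]
preflight() {
  local min_cpus=${1:-32} min_mem_gib=${2:-32}
  local cpus mem_kib mem_gib
  cpus=$(nproc)
  mem_kib=$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)
  # MemTotal is slightly below the installed size, so round up to whole GiB.
  mem_gib=$(( (mem_kib + 1048575) / 1048576 ))
  echo "CPUs: $cpus, memory: ${mem_gib} GiB"
  meets_min "$cpus" "$mem_gib" "$min_cpus" "$min_mem_gib" ||
    { echo "below ${min_cpus} CPUs / ${min_mem_gib} GB"; return 1; }
  is_ubuntu_1804 || { echo "not Ubuntu 18.04"; return 1; }
}
```

Run "preflight" to check the VM deployment minimum, or "preflight 32 64" to check against the recommended 64 GB of memory.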

The ICN BP has no additional prerequisites.

The jump server must be installed with Ubuntu 18.04 server and have three distinct networks, as shown in figure 1.

Local controller: at least three network interfaces.

Baremetal hosts: four network interfaces, including one IPMI interface.

Four or more hubs, with cabling, to connect four networks.

| Hostname | CPU Model | Memory | Storage | 1GbE: NIC#, VLAN (connected to Extreme 480 switch) | 10GbE: NIC#, VLAN, network (connected to IZ1 switch) |
|---|---|---|---|---|---|
| Jump | Intel 2xE5-2699 | 64GB | 3TB (SATA) | IF0: VLAN 110 (DMZ) | IF2: VLAN 112 (Private) |


The ICN R2 release supports Ubuntu 18.04. The ICN BP installs all required software during "make install".

Refer to figure 1 for all the network requirements of the ICN BP.

Make sure you have three distinct networks, Net A, Net B, and Net C, as shown in figure 1. The local controller uses Net B and Net C to provision the operating system on the baremetal servers.

Tested configuration:

| Hostname | CPU Model | Memory | Storage | 1GbE: NIC#, VLAN (connected to Extreme 480 switch) | 10GbE: NIC#, VLAN, network (connected to IZ1 switch) |
|---|---|---|---|---|---|
| node1 | Intel 2xE5-2699 | 64GB | 3TB (SATA) | IF0: VLAN 110 (DMZ) | IF2: VLAN 112 (Private) |
| node2 | Intel 2xE5-2699 | 64GB | 3TB (SATA) | IF0: VLAN 110 (DMZ) | IF2: VLAN 112 (Private) |
| node3 | Intel 2xE5-2699 | 64GB | 3TB (SATA) | IF0: VLAN 110 (DMZ) | IF2: VLAN 112 (Private) |

The local controller installs all the software on the compute servers, from the operating system up to the software required to bring up the Kubernetes cluster.

The ICN BP checks all the preconditions and execution requirements for both the baremetal and the VM deployments.

Installation is a two-step process, and everything starts with one command: "make install".

The user must provide the IPMI information of each edge server to be connected to the local controller by editing the node JSON sample file icn/deploy/metal3/scripts/nodes.json.sample, as shown below. To add more nodes, add another node object to the "nodes" array.

```json
{
  "nodes": [
    {
      "name": "edge01-node01",
      "ipmi_driver_info": {
        "username": "admin",
        "password": "admin",
        "address": "10.10.10.11"
      },
      "os": {
        "image_name": "bionic-server-cloudimg-amd64.img",
        "username": "ubuntu",
        "password": "mypasswd"
      }
    },
    {
      "name": "edge01-node02",
      "ipmi_driver_info": {
        "username": "admin",
        "password": "admin",
        "address": "10.10.10.12"
      },
      "os": {
        "image_name": "bionic-server-cloudimg-amd64.img",
        "username": "ubuntu",
        "password": "mypasswd"
      }
    }
  ]
}
```
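Before running "make install", it can help to sanity-check the edited node inventory. This bash sketch is hypothetical, not an ICN script: it assumes python3 is installed (it ships with Ubuntu 18.04) for JSON validation, and that each key and its string value sit together as in the sample, so grep can find duplicate node names or IPMI addresses.

```bash
#!/bin/bash
# Hypothetical sanity check for the node inventory (not part of ICN).
# Usage: check_nodes_json [FILE]   (run from the icn directory)
check_nodes_json() {
  local file=${1:-deploy/metal3/scripts/nodes.json.sample}
  # 1. The file must be syntactically valid JSON.
  if ! python3 -m json.tool "$file" > /dev/null; then
    echo "$file: invalid JSON"
    return 1
  fi
  # 2. Node names and IPMI addresses must be unique. The pattern requires a
  #    quote right before the key, so "image_name"/"username" do not match.
  local key dups rc=0
  for key in name address; do
    dups=$(grep -o "\"$key\": *\"[^\"]*\"" "$file" | sort | uniq -d)
    if [ -n "$dups" ]; then
      echo "$file: duplicate $key: $dups"
      rc=1
    fi
  done
  return $rc
}
```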

The network configuration file, "user_config.sh", is in the top-level icn directory:

```bash
#!/bin/bash

#Local controller - Bootstrap cluster DHCP connection
#BS_DHCP_INTERFACE defines the interfaces, to which ICN DHCP deployment will bind
#e.g. export BS_DHCP_INTERFACE="ens513f0"
export BS_DHCP_INTERFACE=

#BS_DHCP_INTERFACE_IP defines the IPAM for the ICN DHCP to be managed.
#e.g. export BS_DHCP_INTERFACE_IP="172.31.1.1/24"
export BS_DHCP_INTERFACE_IP=

#Edge Location Provider Network configuration
#Net A - Provider Network
#If provider having specific Gateway and DNS server details in the edge location
#export PROVIDER_NETWORK_GATEWAY="10.10.110.1"
export PROVIDER_NETWORK_GATEWAY=
#export PROVIDER_NETWORK_DNS="8.8.8.8"
export PROVIDER_NETWORK_DNS=

#Ironic Metal3 settings for provisioning network
#Interface to which Ironic provision network to be connected
#Net B - Provisioning Network
#e.g. export IRONIC_INTERFACE="enp4s0f1"
export IRONIC_INTERFACE=

#Ironic Metal3 setting for IPMI LAN Network
#Interface to which Ironic IPMI LAN should bind
#Net C - IPMI LAN Network
#e.g. export IRONIC_IPMI_INTERFACE="enp4s0f0"
export IRONIC_IPMI_INTERFACE=

#Interface IP for the IPMI LAN, ICN verifies whether the LAN connection is active
#e.g. export IRONIC_IPMI_INTERFACE_IP="10.10.110.20"
#Net C - IPMI LAN Network
export IRONIC_IPMI_INTERFACE_IP=
```
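A quick check after sourcing user_config.sh can catch empty settings before "make install". This is a hypothetical helper, not part of ICN; treating the five non-provider variables as mandatory, and requiring separate interfaces for Net B and Net C, are assumptions based on the three-network layout in figure 1. The PROVIDER_NETWORK_* settings are left optional because they are only needed when the lab DHCP server omits gateway and DNS details.

```bash
#!/bin/bash
# Hypothetical check (not part of ICN). Which variables are mandatory is an
# assumption, not something ICN itself enforces.
REQUIRED_VARS=(
  BS_DHCP_INTERFACE
  BS_DHCP_INTERFACE_IP
  IRONIC_INTERFACE
  IRONIC_IPMI_INTERFACE
  IRONIC_IPMI_INTERFACE_IP
)

check_user_config() {
  local var rc=0
  for var in "${REQUIRED_VARS[@]}"; do
    # ${!var} expands to the value of the variable whose name is in $var.
    if [ -z "${!var}" ]; then
      echo "user_config.sh: $var is empty"
      rc=1
    fi
  done
  # Net B (provisioning) and Net C (IPMI) should be separate interfaces.
  if [ -n "$IRONIC_INTERFACE" ] &&
     [ "$IRONIC_INTERFACE" = "$IRONIC_IPMI_INTERFACE" ]; then
    echo "user_config.sh: IRONIC_INTERFACE and IRONIC_IPMI_INTERFACE are the same"
    rc=1
  fi
  return $rc
}
```

Typical use from the icn directory: "source user_config.sh && check_user_config".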

After configuring the node inventory file and the settings file, run "make install" from the top-level icn directory, as shown below:

```console
root@pod11-jump:# git clone "https://gerrit.akraino.org/r/icn"
Cloning into 'icn'...
remote: Counting objects: 69, done
remote: Finding sources: 100% (69/69)
remote: Total 4248 (delta 13), reused 4221 (delta 13)
Receiving objects: 100% (4248/4248), 7.74 MiB | 21.84 MiB/s, done.
Resolving deltas: 100% (1078/1078), done.
root@pod11-jump:# cd icn/
root@pod11-jump:# vim Makefile
root@pod11-jump:# make install
```

The following steps occur once the "make install" command is run.

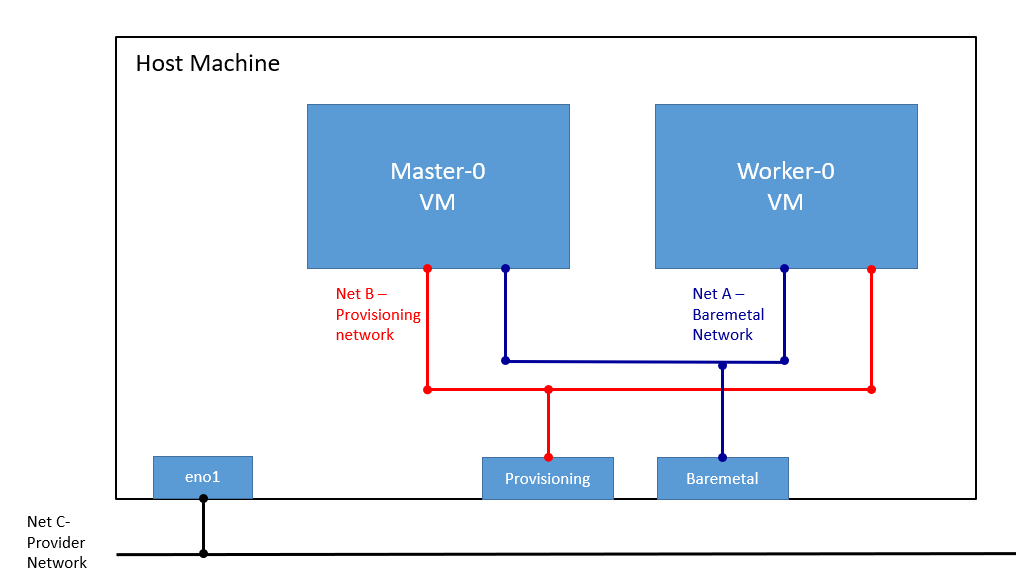

The virtual deployment is used for the development environment. It uses the Metal3 virtual deployment to create VMs that boot with PXE. The VM Ansible scripts generate the node inventory file in /opt/ironic. No settings are required from the user to deploy the virtual deployment.

No snapshot is implemented in ICN R2.

The host server (jump host) must be installed with Ubuntu 18.04. The virtual deployment installs all the VMs and then installs the K8s clusters on them. As with the baremetal deployment, a single command is used: run "make vm_install" to install the virtual deployment.

"make verify_all" installs two VMs, named master-0 and worker-0, with 8 GB RAM and 8 vCPUs, installs a K8s cluster on the VMs using the ICN BPA operator, and installs the ICN BPA REST API verifier. The BPA operator installs the multi-cluster KUD to bring up Kubernetes with all add-ons and plugins.

The ICN blueprint checks the whole setup in both the baremetal and the VM deployments. The verify script checks that Metal3 has provisioned the OS on each baremetal node, polling with a timeout period of 60 sec and an interval of 30 sec. The BPA operator verifier checks whether the KUD installation is complete by issuing a plain curl command to the Kubernetes cluster installed in the baremetal and VM setups.
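The timeout-and-interval polling used by the verify step can be illustrated with a small bash function. This is a hypothetical sketch of the pattern, not the ICN verify script; the name wait_until and its argument order are invented for illustration.

```bash
#!/bin/bash
# Hypothetical polling helper (not the ICN verify script).
# Usage: wait_until TIMEOUT_SEC INTERVAL_SEC COMMAND [ARGS...]
# Runs COMMAND every INTERVAL_SEC seconds until it succeeds (returns 0)
# or TIMEOUT_SEC seconds have elapsed (returns 1).
wait_until() {
  local timeout=$1 interval=$2
  shift 2
  # SECONDS is a bash builtin counter of seconds since the shell started.
  local deadline=$(( SECONDS + timeout ))
  until "$@"; do
    if (( SECONDS >= deadline )); then
      echo "timed out after ${timeout}s: $*" >&2
      return 1
    fi
    sleep "$interval"
  done
  return 0
}
```

For example, "wait_until 60 30 kubectl get nodes" retries every 30 seconds for up to 60 seconds, the values quoted for the verify script.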

Baremetal verifier: run "make bm_verifer" to verify the baremetal deployment.

VM verifier: run "make vm_verifier" to verify the virtual deployment.

For development, use the virtual deployment; it takes up to 10 minutes to bring up the virtual BMC VMs with PXE boot.

The virtual deployment works well for developing the BPA operator and the Metal3 installation scripts.

No images are provided in the ICN R2 release.

No post-deployment configuration is required in the ICN R2 release.

A Linux Foundation ID is required to file a bug against ICN: https://jira.akraino.org/projects/ICN/issues

The command "make vm_clean_all" uninstalls all the components of the virtual deployment.

Error messages are explicit, and all messages are captured in the logs folder.

No packages are maintained in ICN R2.

Not applicable.

Not applicable.

Not applicable.

How do I set up IPMI?

First, make sure ipmitool is installed on your servers; if it is not, install it with "apt install ipmitool".

Then check the IPMI LAN information of each server with the command "ipmitool lan print 1".

If that command does not show the IPMI information, set up a static IP address for IPMI.
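A static IPMI address can be set with ipmitool's "lan set" subcommands. The wrapper below is a hypothetical sketch, not an ICN script; it assumes LAN channel 1 (the channel queried by "ipmitool lan print 1") and must run as root on the server whose BMC is being configured. Your BMC may use a different channel, and the address, netmask and gateway in the example are placeholders.

```bash
#!/bin/bash
# Hypothetical wrapper (not part of ICN) around standard ipmitool commands.
# Usage: set_ipmi_static CHANNEL IP NETMASK GATEWAY
# Set IPMITOOL=echo to print the commands instead of running them.
set_ipmi_static() {
  local channel=$1 ip=$2 netmask=$3 gateway=$4
  local tool=${IPMITOOL:-ipmitool}
  # Switch the BMC from DHCP to a static address, then set address,
  # netmask and default gateway; stop at the first failing step.
  $tool lan set "$channel" ipsrc static &&
    $tool lan set "$channel" ipaddr "$ip" &&
    $tool lan set "$channel" netmask "$netmask" &&
    $tool lan set "$channel" defgw ipaddr "$gateway" &&
    $tool lan print "$channel"   # show the resulting configuration
}
```

For example: "set_ipmi_static 1 10.10.10.11 255.255.255.0 10.10.10.1". The address should then match the "address" entry for that node in the node inventory file.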

The BMC web console URL is not working?

The cause can be hard to pin down. If the URL is not available, check the output of "ipmitool bmc info" to find the issue.
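When the web console is unreachable, querying the BMC over the network with the same credentials as in the node inventory helps separate a BMC problem from a network problem. This is a hypothetical helper, not part of ICN; it assumes the BMC supports IPMI v2.0 (ipmitool's lanplus interface).

```bash
#!/bin/bash
# Hypothetical helper (not part of ICN): query a BMC remotely.
# Usage: check_bmc_remote ADDRESS USER PASSWORD
# Set IPMITOOL=echo to print the command instead of running it.
check_bmc_remote() {
  local address=$1 user=$2 password=$3
  local tool=${IPMITOOL:-ipmitool}
  # Same "bmc info" query as on the server, but sent over the LAN.
  $tool -I lanplus -H "$address" -U "$user" -P "$password" bmc info
}
```

If "ipmitool bmc info" works locally on the server but "check_bmc_remote 10.10.10.11 admin admin" (sample values from the node inventory) fails, the BMC's LAN settings or the Net C path are the more likely culprits. Passing -P exposes the password in the process list; ipmitool's -E option, which reads the password from the IPMI_PASSWORD environment variable, avoids that.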

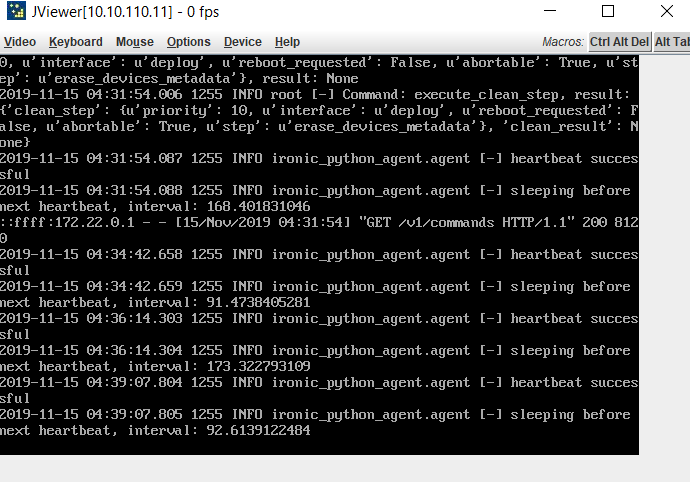

No change in BMH state: the host has been in the provisioning state for more than 40 minutes?

Metal3 provisioning of a baremetal node generally takes 20 to 30 minutes. Check the Ironic logs and the baremetal operator logs to see the state of the nodes. The "openstack baremetal node" commands show the full state of each node, from power to storage.
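When a host appears stuck, capturing both the Metal3 and the Ironic view of the nodes in one place makes the two easier to compare and to attach to a JIRA issue. The sketch below is hypothetical, not an ICN script; it assumes kubectl can reach the local controller's cluster and that the openstack CLI with the baremetal plugin is already configured to talk to the local Ironic API.

```bash
#!/bin/bash
# Hypothetical diagnostics helper (not part of ICN).
# Usage: collect_bmh_diag [OUTPUT_DIR]
# KUBECTL / OPENSTACK may be overridden, e.g. set to "echo" for a dry run.
collect_bmh_diag() {
  local outdir=${1:-bmh-diag-$(date +%Y%m%d-%H%M%S)}
  local kubectl=${KUBECTL:-kubectl} openstack=${OPENSTACK:-openstack}
  mkdir -p "$outdir" || return 1
  # BareMetalHost resources as seen by the Metal3 baremetal operator.
  $kubectl get baremetalhosts --all-namespaces -o wide > "$outdir/bmh.txt" 2>&1
  # Ironic's view of every node, including power and provisioning state.
  $openstack baremetal node list --long > "$outdir/ironic-nodes.txt" 2>&1
  # Print the directory so the caller knows where the output went.
  echo "$outdir"
}
```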

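Why is the provider network configuration required?

Generally, the provider network's DHCP server in a lab provides the router and DNS server details. In some lab setups, the DHCP server does not provide this information, so the gateway and DNS server must be set explicitly with PROVIDER_NETWORK_GATEWAY and PROVIDER_NETWORK_DNS in user_config.sh.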
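To find out whether a lab DHCP server supplies this information, check a host whose only DHCP-configured interface is on Net A. This hypothetical sketch (not part of ICN) assumes Ubuntu 18.04 with systemd-resolved, where /etc/resolv.conf lists only the local stub resolver (127.0.0.53), so it reads the upstream servers from /run/systemd/resolve/resolv.conf instead.

```bash
#!/bin/bash
# Hypothetical check (not part of ICN): did DHCP on Net A supply a default
# gateway and a DNS server? Returns 1 and names the user_config.sh setting
# to fill in when either is missing.
# Usage: check_provider_dhcp [RESOLV_FILE]   (IP_CMD overrides "ip")
check_provider_dhcp() {
  local resolv=${1:-/run/systemd/resolve/resolv.conf}
  local ip_cmd=${IP_CMD:-ip} rc=0
  if [ -z "$($ip_cmd route show default)" ]; then
    echo "no default route: set PROVIDER_NETWORK_GATEWAY in user_config.sh"
    rc=1
  fi
  if ! grep -q '^nameserver' "$resolv" 2> /dev/null; then
    echo "no DNS server: set PROVIDER_NETWORK_DNS in user_config.sh"
    rc=1
  fi
  return $rc
}
```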

/*
* Copyright 2019 Intel Corporation, Inc
*
* Licensed under the Apache License, Version 2.0 (the "License");
* you may not use this file except in compliance with the License.
* You may obtain a copy of the License at
*
* http://www.apache.org/licenses/LICENSE-2.0
*
* Unless required by applicable law or agreed to in writing, software
* distributed under the License is distributed on an "AS IS" BASIS,
* WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
* See the License for the specific language governing permissions and
* limitations under the License.
*/