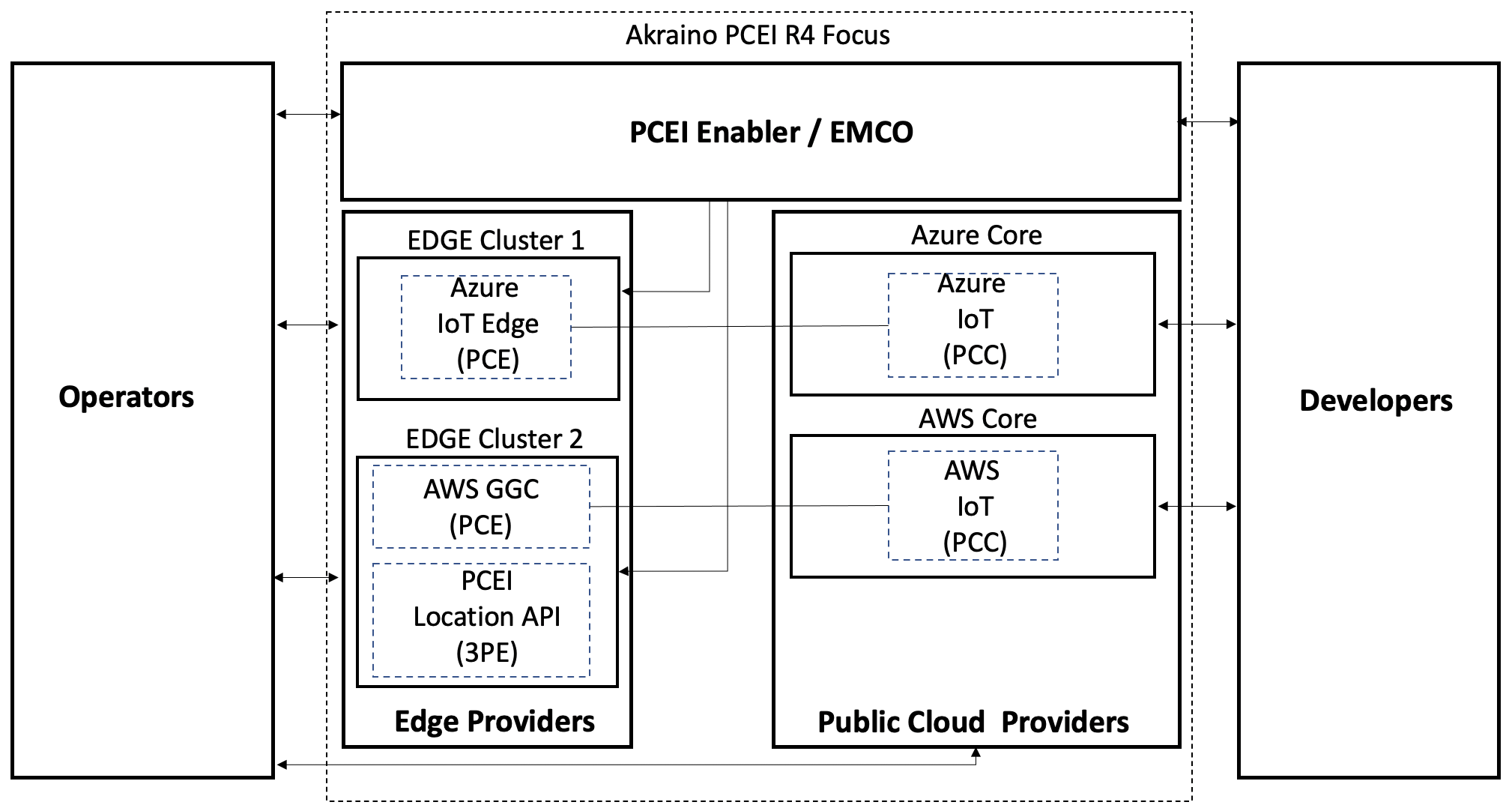

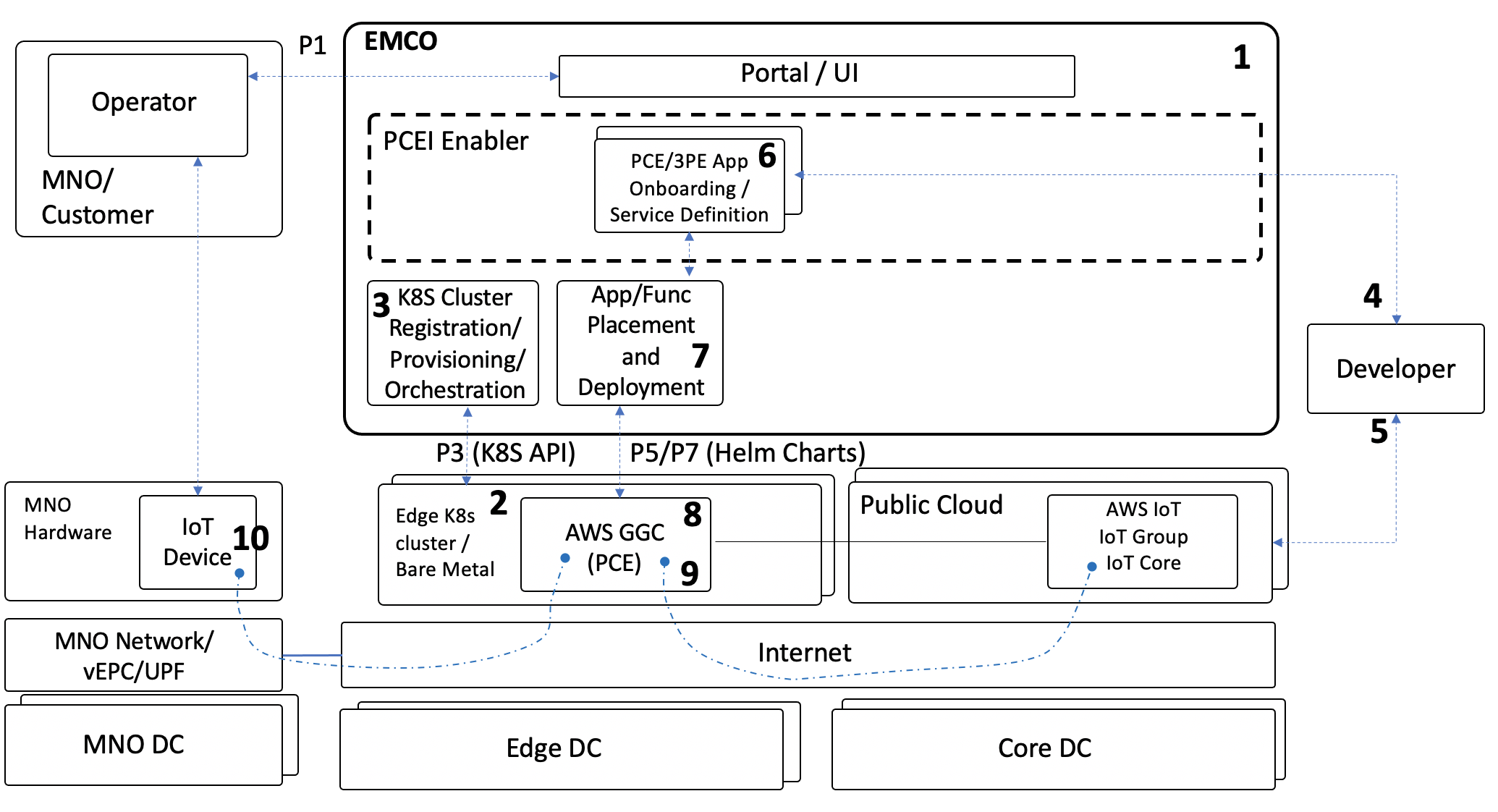

This document describes the use of the Public Cloud Edge Interface (PCEI), implemented on top of the Edge Multi-Cluster Orchestrator (EMCO), to deploy Public Cloud Edge (PCE) Apps from multiple clouds (Azure and AWS) and a 3rd-Party Edge (3PE) App (an implementation of the ETSI MEC Location API App). It also covers the end-to-end operation of the deployed PCE Apps using a simulated Low Power Wide Area (LPWA) IoT client.

Individuals/entities that:

Entities that:

Entities that:

Entities that:

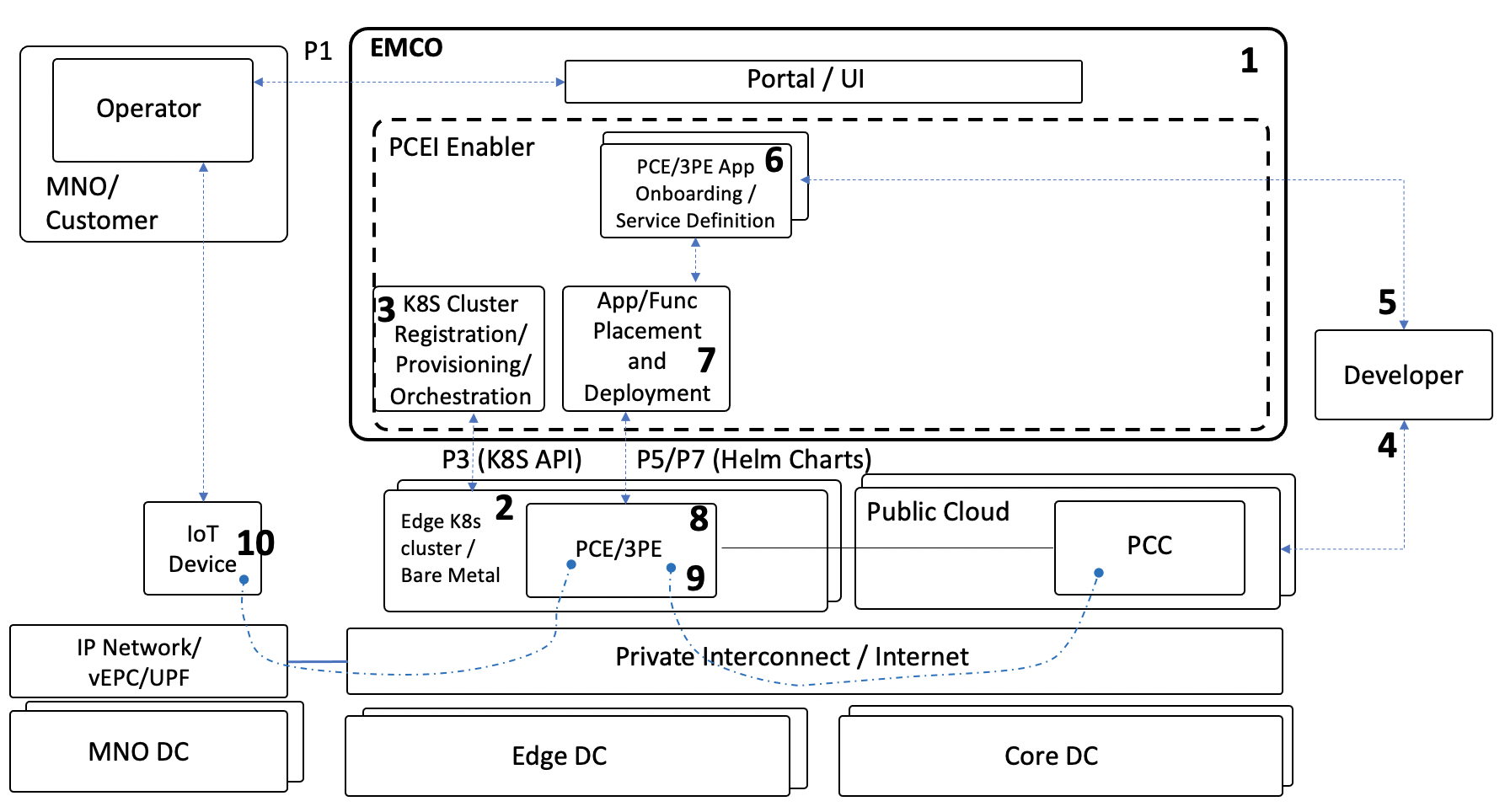

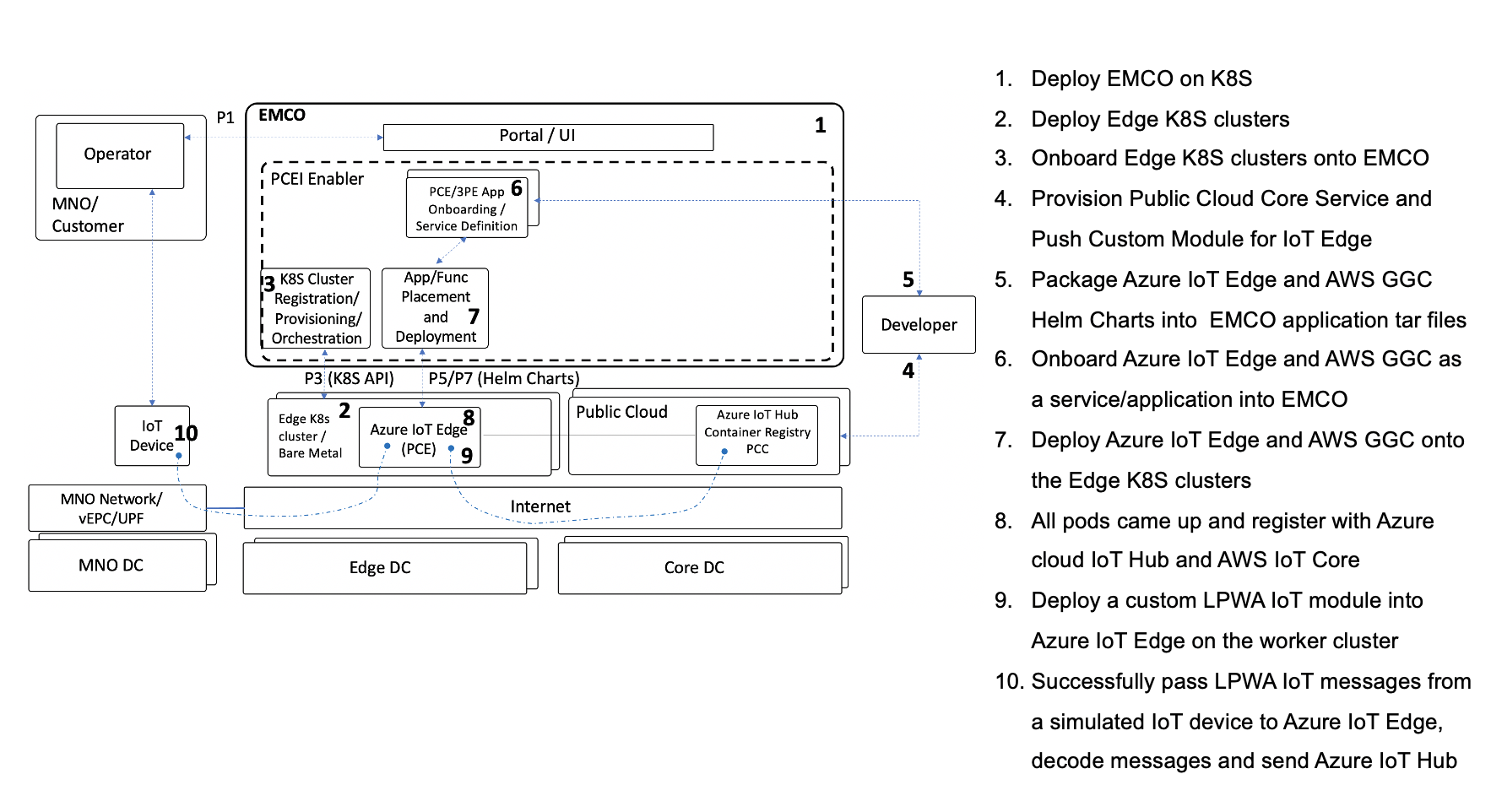

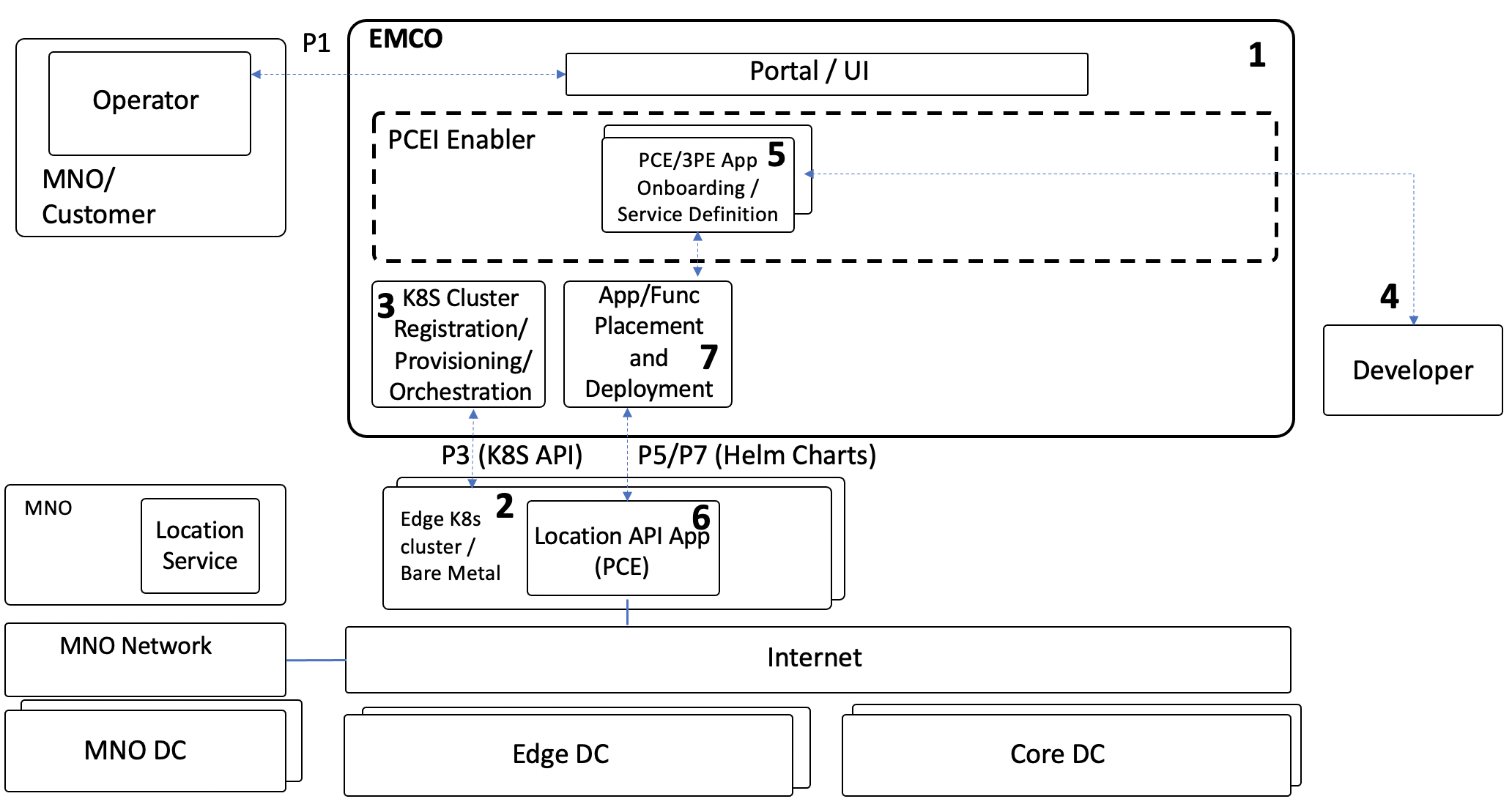

The end-to-end validation environment and flow of steps is shown below:

Description of components of the end-to-end validation environment:

Note that P1, P3, P5/P7 Reference Points are shown to illustrate alignment with general PCEI architecture.

The end-to-end PCEI validation steps are described below:

For performing Steps 1 and 2 please refer to the PCEI R4 Installation Guide.

Please refer to the PCEI R4 Installation Guide to deploy EMCO and Edge K8S Clusters.

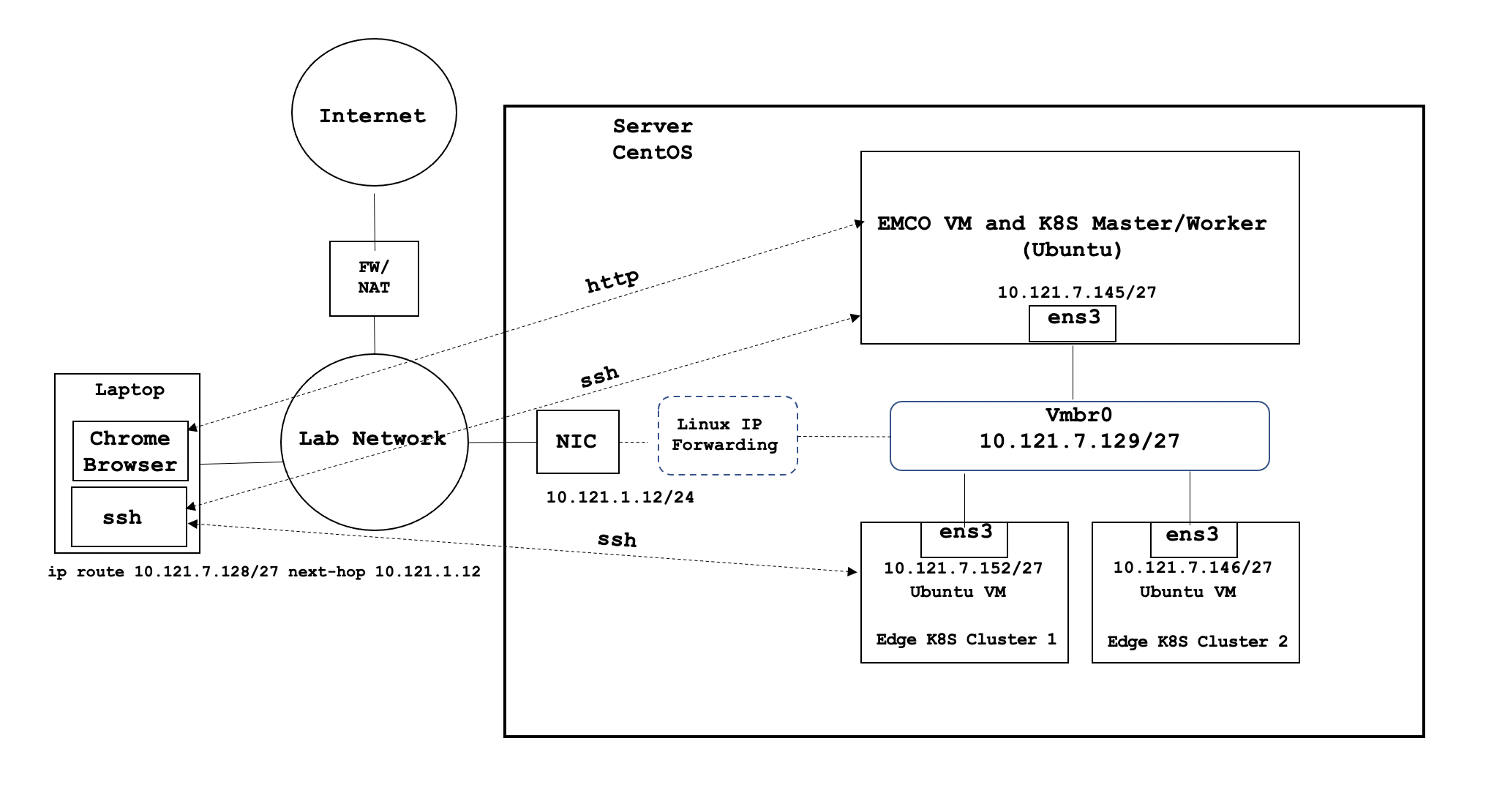

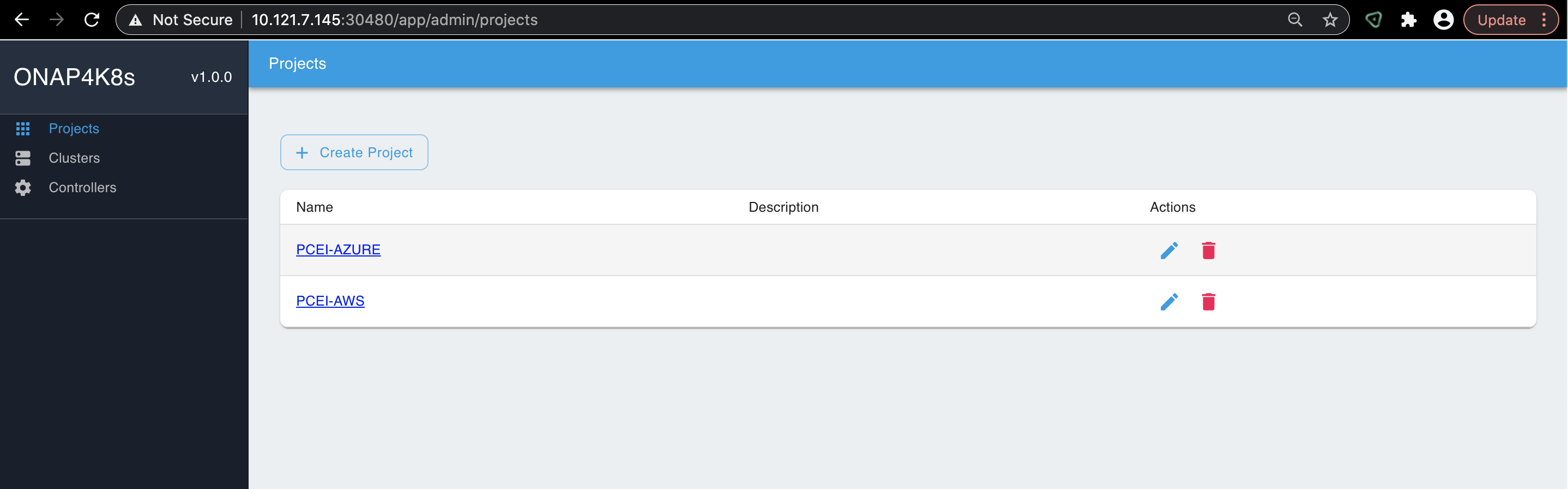

Access EMCO UI via a Web Browser as shown in the diagram below (all IP addresses shown are used as examples):

To access the EMCO cluster and the Edge K8S cluster via ssh directly from a laptop, as shown in the diagram above, copy the ssh key used in the PCEI installation to your local machine:

    ### Copy the id_rsa key from the deployment host to be able to ssh to the EMCO and cluster VMs
    sftp onaplab@10.121.1.12
    get ~/.ssh/id_rsa pcei-emco

    ### ssh to VMs
    ssh -i pcei-emco onaplab@10.121.7.145

Connect to the VNC server, start the Browser (Chrome) and point it to the EMCO VM (amcop-vm-01) IP address and port 30480:

http://10.121.7.145:30480

You should be able to connect to EMCO UI as shown below:

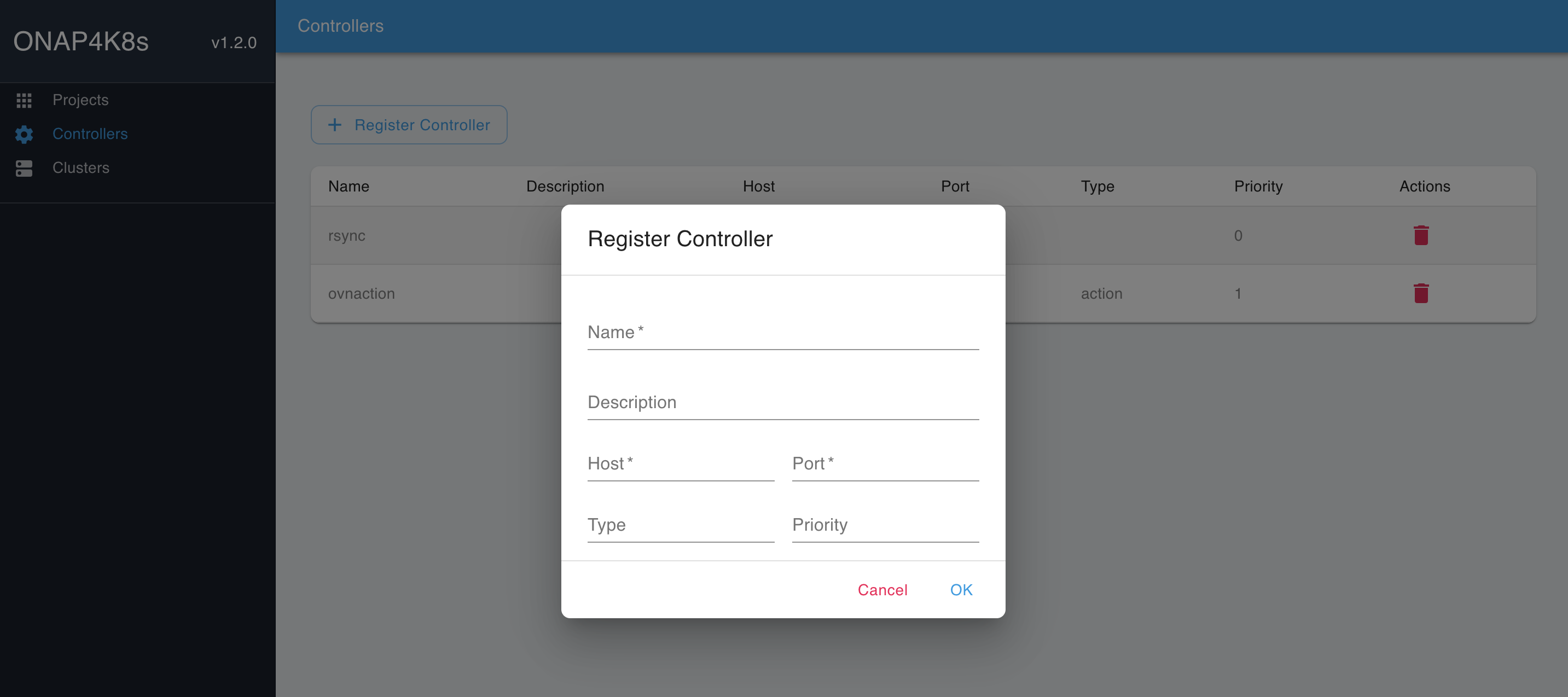

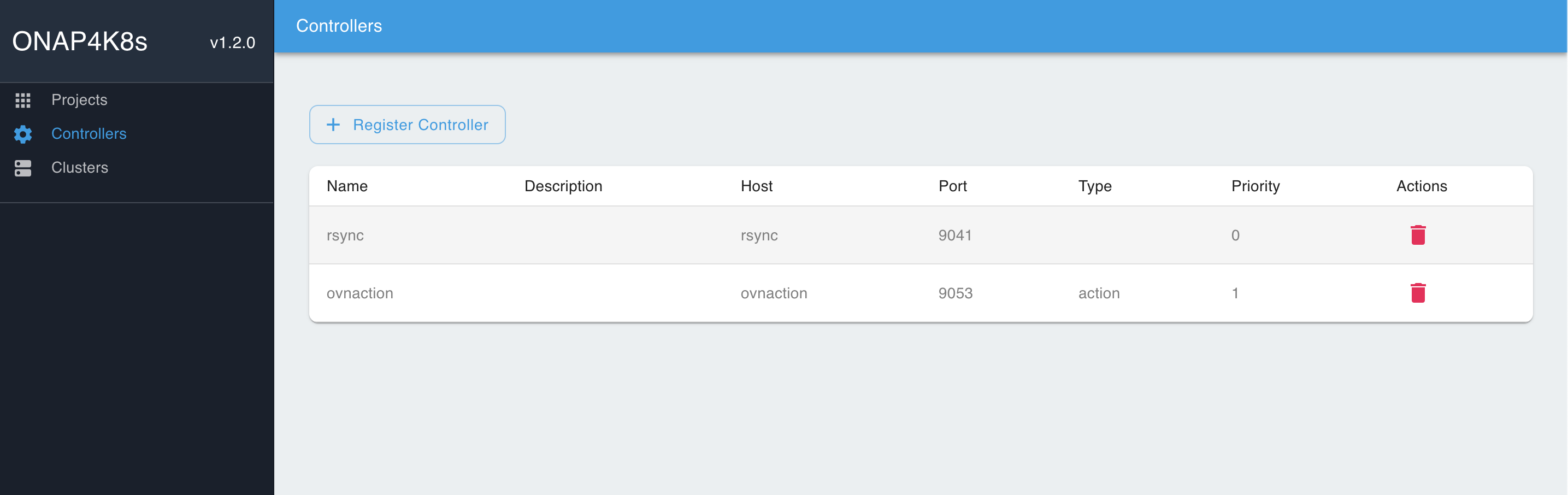

The following Network Controllers must be provisioned in EMCO:

    ### Add controllers
    rsync:
      host: rsync
      port: 9041
      type: <leave blank, not required>
      priority: <leave blank, not required>

    ovnaction:
      host: ovnaction
      port: 9053
      type: action
      priority: 1

To provision Network Controllers, please use the parameters shown above and EMCO UI "Controllers → Register Controllers" tab:

Fill in the parameters for the "rsync" and the "ovnaction" controllers:

To register the two Edge K8S Clusters created during PCEI installation (refer to the PCEI R4 Installation Guide), the cluster configuration files need to be copied from the corresponding VMs to the machine that is used to access EMCO UI. Make sure that the ssh key used to access the VMs is present on the machine to which the cluster config files are being copied. The example below assumes that the cluster config files are copied to the Host Server used to deploy the EMCO and Edge Cluster VMs. Alternatively, the ssh key and the config files can be copied to a laptop.

    # Determine VM IP addresses:
    [onaplab@os12 ~]$ sudo virsh list --all
     Id    Name                           State
    ----------------------------------------------------
     6     amcop-vm-01                    running
     9     edge_k8s-1                     running
     10    edge_k8s-2                     running

    [onaplab@os12 ~]$ sudo virsh domifaddr edge_k8s-1
     Name       MAC address          Protocol     Address
    -------------------------------------------------------------------------------
     vnet1      52:54:00:19:96:72    ipv4         10.121.7.152/27

    [onaplab@os12 ~]$ sudo virsh domifaddr edge_k8s-2
     Name       MAC address          Protocol     Address
    -------------------------------------------------------------------------------
     vnet2      52:54:00:c0:47:8b    ipv4         10.121.7.146/27

    sftp -i pcei-emco onaplab@10.121.7.146
    cd .kube
    get config kube-config-edge-k8s-2

    sftp -i pcei-emco onaplab@10.121.7.152
    cd .kube
    get config kube-config-edge-k8s-1
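When the VM addresses need to be looked up repeatedly, the `virsh domifaddr` output can be parsed with a few lines of Python. The sketch below is only an illustration: the function names are hypothetical, and it assumes the four-column data rows shown in the sample output above.

```python
# Sample text copied from the "virsh domifaddr edge_k8s-1" output in this guide.
SAMPLE = """\
 Name       MAC address          Protocol     Address
-------------------------------------------------------------------------------
 vnet1      52:54:00:19:96:72    ipv4         10.121.7.152/27
"""


def parse_domifaddr(output):
    """Return a list of (interface, mac, protocol, ip, prefix_len) tuples."""
    rows = []
    for line in output.splitlines():
        fields = line.split()
        # Data rows have exactly four columns and an address in CIDR form;
        # the header row splits into five words and the separator into one.
        if len(fields) == 4 and "/" in fields[3]:
            name, mac, proto, cidr = fields
            ip, prefix = cidr.split("/")
            rows.append((name, mac, proto, ip, int(prefix)))
    return rows


def first_ipv4(output):
    """Return the first IPv4 address found, or None if there is none."""
    for _name, _mac, proto, ip, _prefix in parse_domifaddr(output):
        if proto == "ipv4":
            return ip
    return None


if __name__ == "__main__":
    print(first_ipv4(SAMPLE))
```

The returned address is the one to pass to `sftp -i pcei-emco onaplab@<address>` when fetching the cluster config file.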

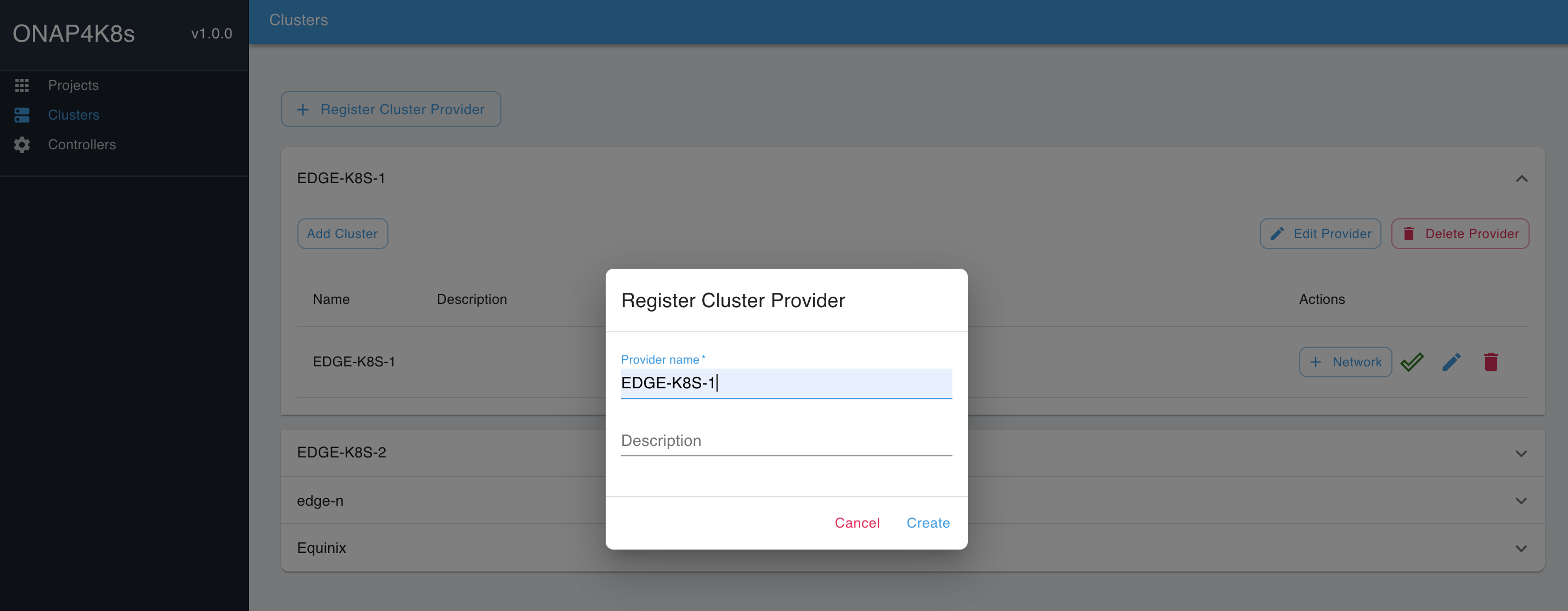

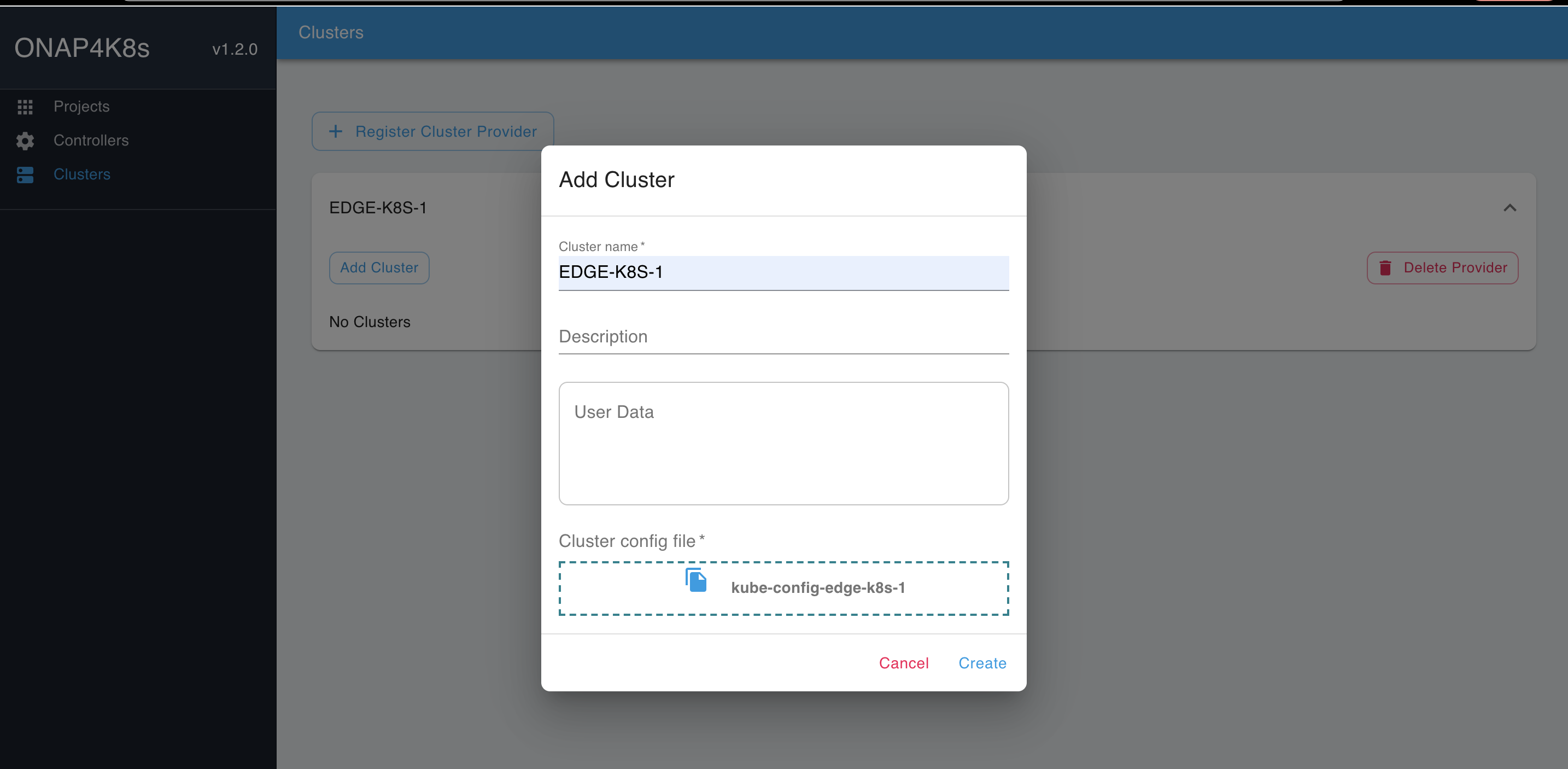

Using EMCO UI "Cluster → Register Cluster Provider" tabs, provision Cluster Providers for EDGE-K8S-1 and EDGE-K8S-2 clusters:

Click on the Cluster Provider and click on "Add Cluster". Fill in the Cluster Name and add the config file downloaded earlier. Repeat for the two clusters EDGE-K8S-1 and EDGE-K8S-2:

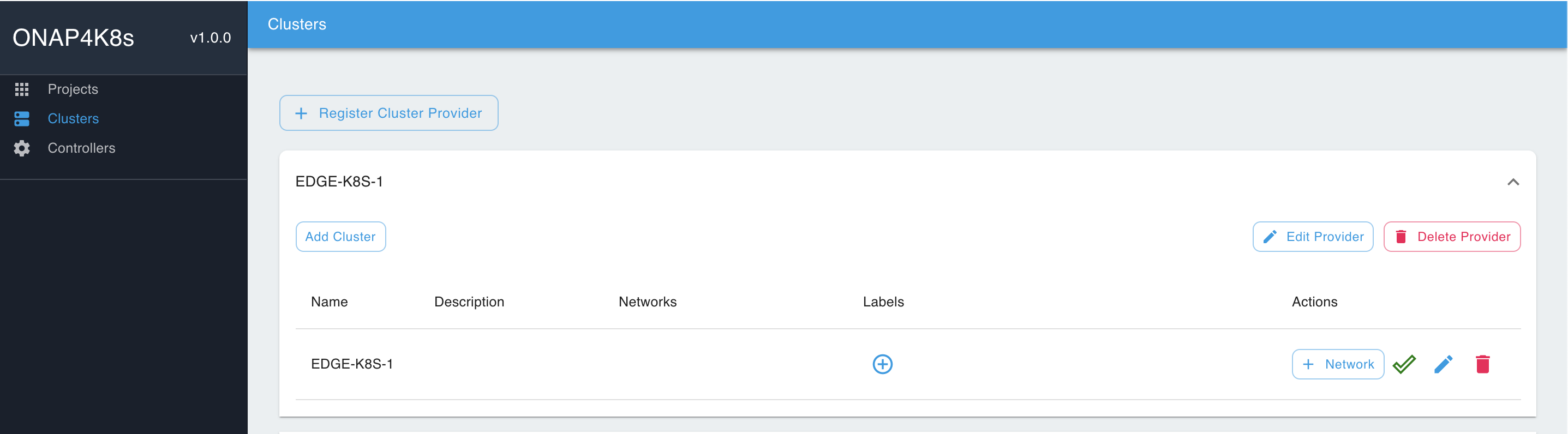

You should see the following result:

At this point the edge clusters have been registered with the orchestrator and are ready for placing PCE and 3PE apps.

The deployment of Azure IoT Edge cloud native application as a PCE involves the following steps:

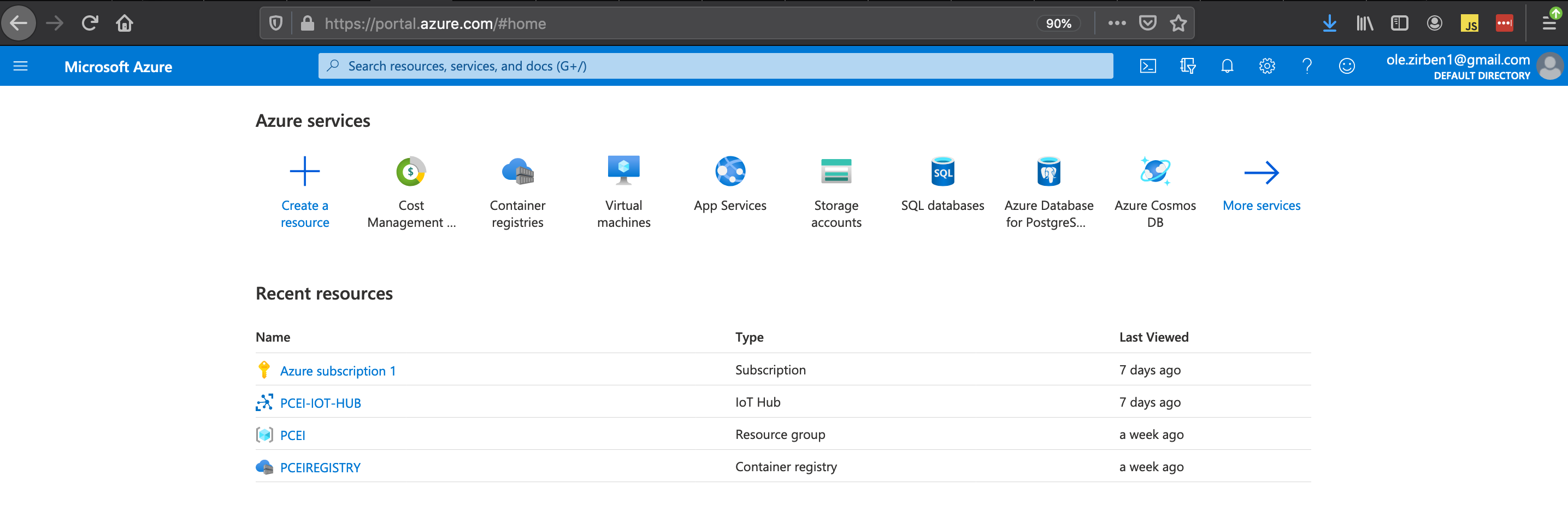

Login to your Azure Portal and add Subscription, IoT Hub and Container Registry Resources:

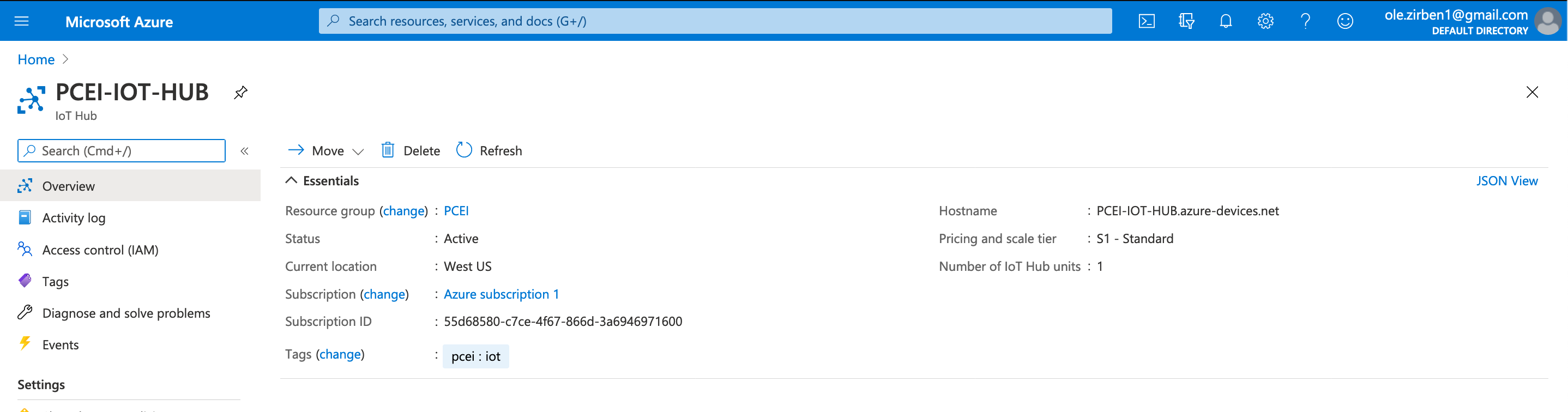

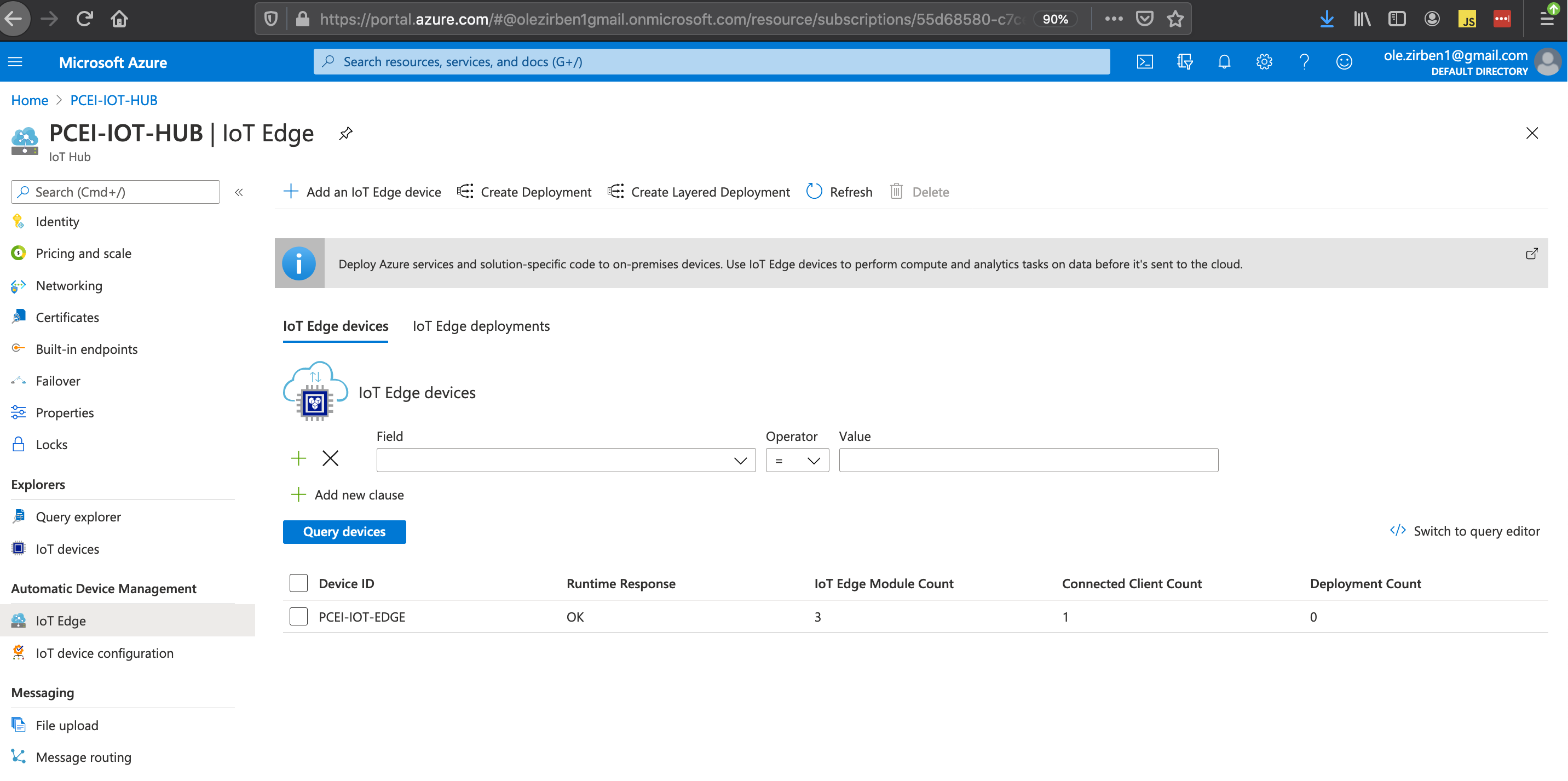

Provision IoT Hub:

Provision IoT Edge under IoT Hub:

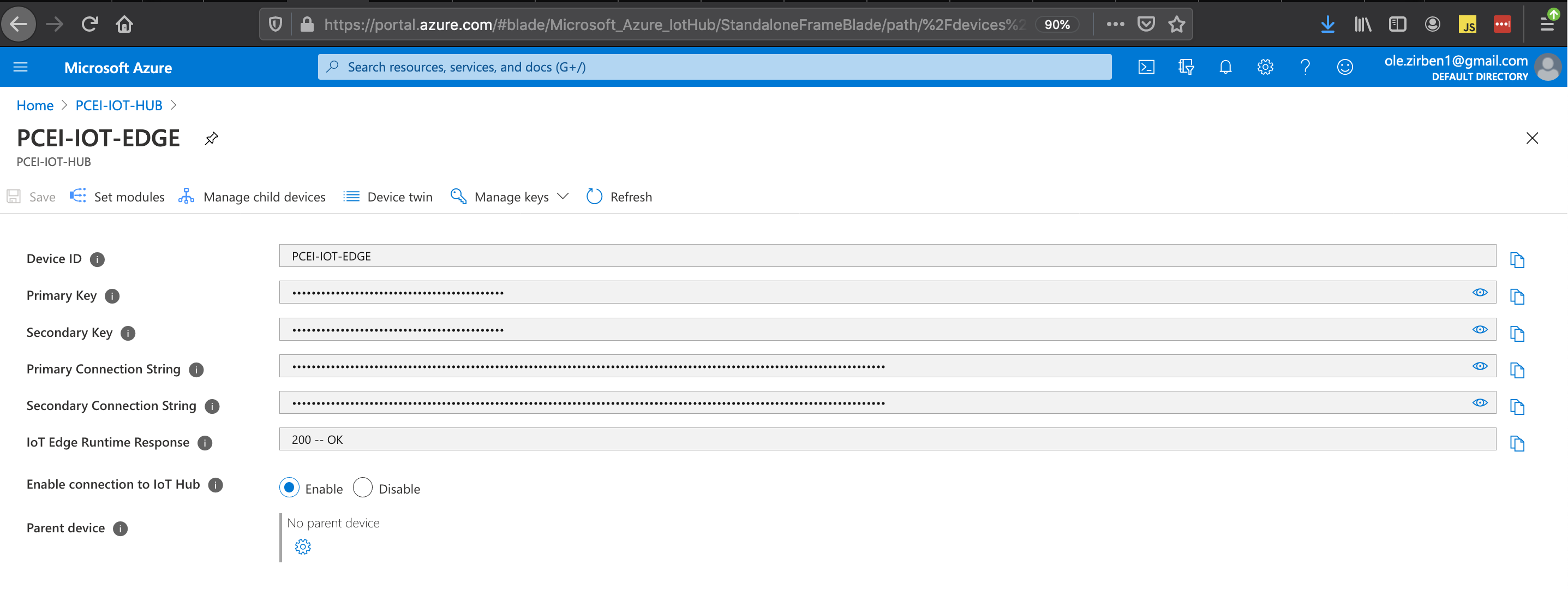

Copy the "Primary Connection String" from the IoT Edge parameters. This string will be used later in the values.yaml file for the Azure IoT Edge Helm Charts.
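Before pasting the connection string into values.yaml, it can be worth checking that nothing was lost while copying it. The sketch below assumes the commonly documented device connection string layout of semicolon-separated `HostName=...;DeviceId=...;SharedAccessKey=...` pairs; the function names and the example values are hypothetical.

```python
REQUIRED_KEYS = ("HostName", "DeviceId", "SharedAccessKey")


def parse_connection_string(conn_str):
    """Split 'Key=Value;Key=Value' into a dict, keeping '=' inside values."""
    parts = {}
    for item in conn_str.strip().split(";"):
        if not item:
            continue
        # partition() splits on the first '=' only, so base64 padding
        # at the end of the shared access key is preserved.
        key, sep, value = item.partition("=")
        if not sep:
            raise ValueError("malformed segment: %r" % item)
        parts[key] = value
    return parts


def check_device_connection_string(conn_str):
    """Raise ValueError if a required field is missing or empty."""
    parts = parse_connection_string(conn_str)
    missing = [key for key in REQUIRED_KEYS if not parts.get(key)]
    if missing:
        raise ValueError("missing fields: " + ", ".join(missing))
    return parts


if __name__ == "__main__":
    # Made-up example value, not a real credential.
    example = "HostName=example-hub.azure-devices.net;DeviceId=edge-1;SharedAccessKey=abc123=="
    print(sorted(check_device_connection_string(example)))
```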

Use this link for information on developing custom software modules for Azure IoT Edge:

The example below is optional. It shows how to build a custom module for Azure IoT Edge to read and decode Low Power IoT messages from a simulated LPWA IoT device. Follow the above link to:

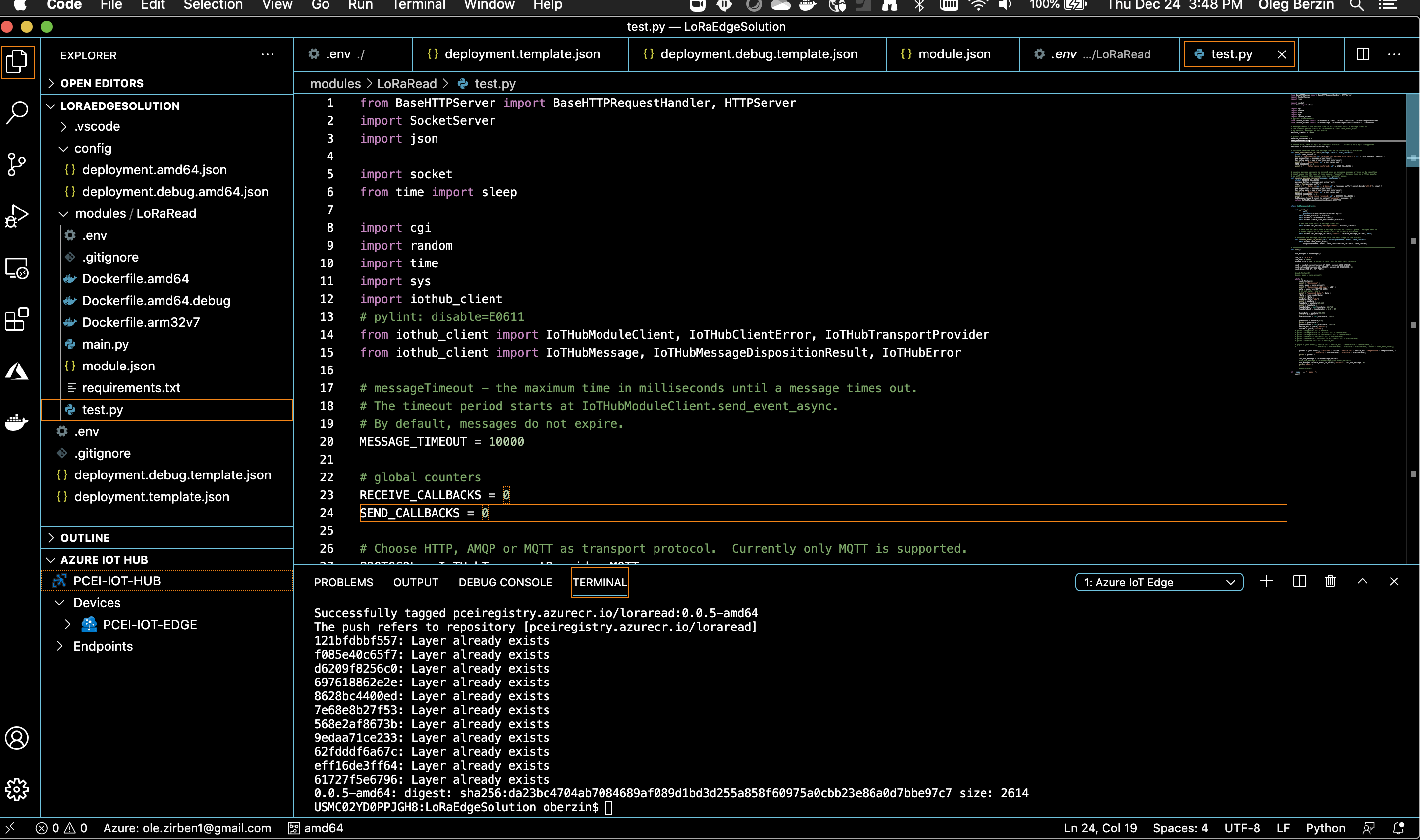

The steps below show how to build a custom IoT module for Azure IoT Edge using the "LoRaEdgeSolution" code from the PCEI repo:

Download PCEI repo to the machine that has VSC and Docker installed (per above instructions):

    git clone "https://gerrit.akraino.org/r/pcei"
    cd pcei
    ls -l
    total 0
    drwxr-xr-x  8 oberzin  staff  256 Dec 24 15:44 LoRaEdgeSolution
    drwxr-xr-x  3 oberzin  staff   96 Dec 24 15:44 iotclient
    drwxr-xr-x  5 oberzin  staff  160 Dec 24 15:44 locationAPI

Using VSC open the LoRaEdgeSolution folder that was downloaded from PCEI repo.

Add required credentials for Azure Container Registry (ACR) using .env file.

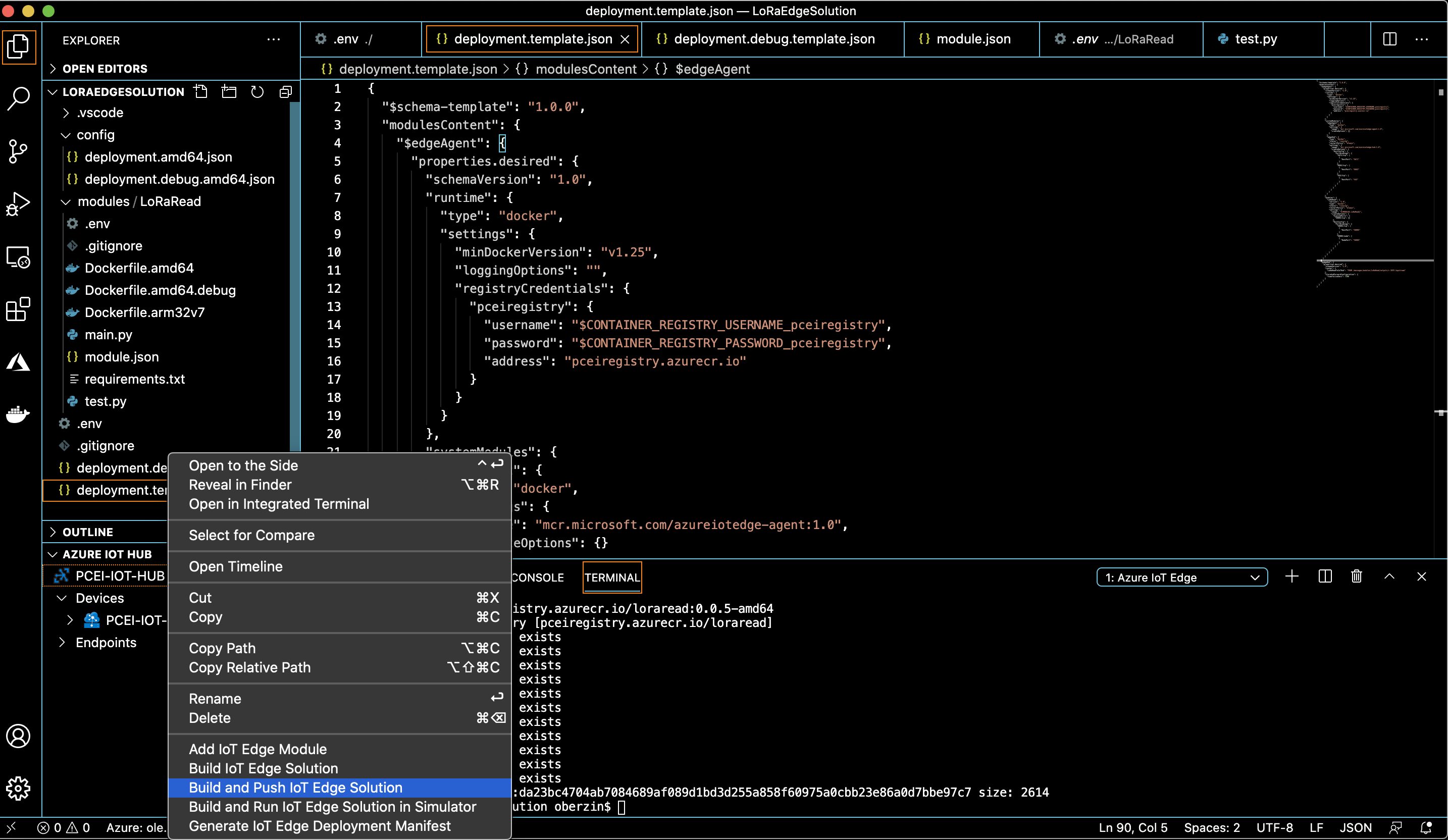

Build and push the solution to ACR as shown below. Right-click on "deployment.template.json":

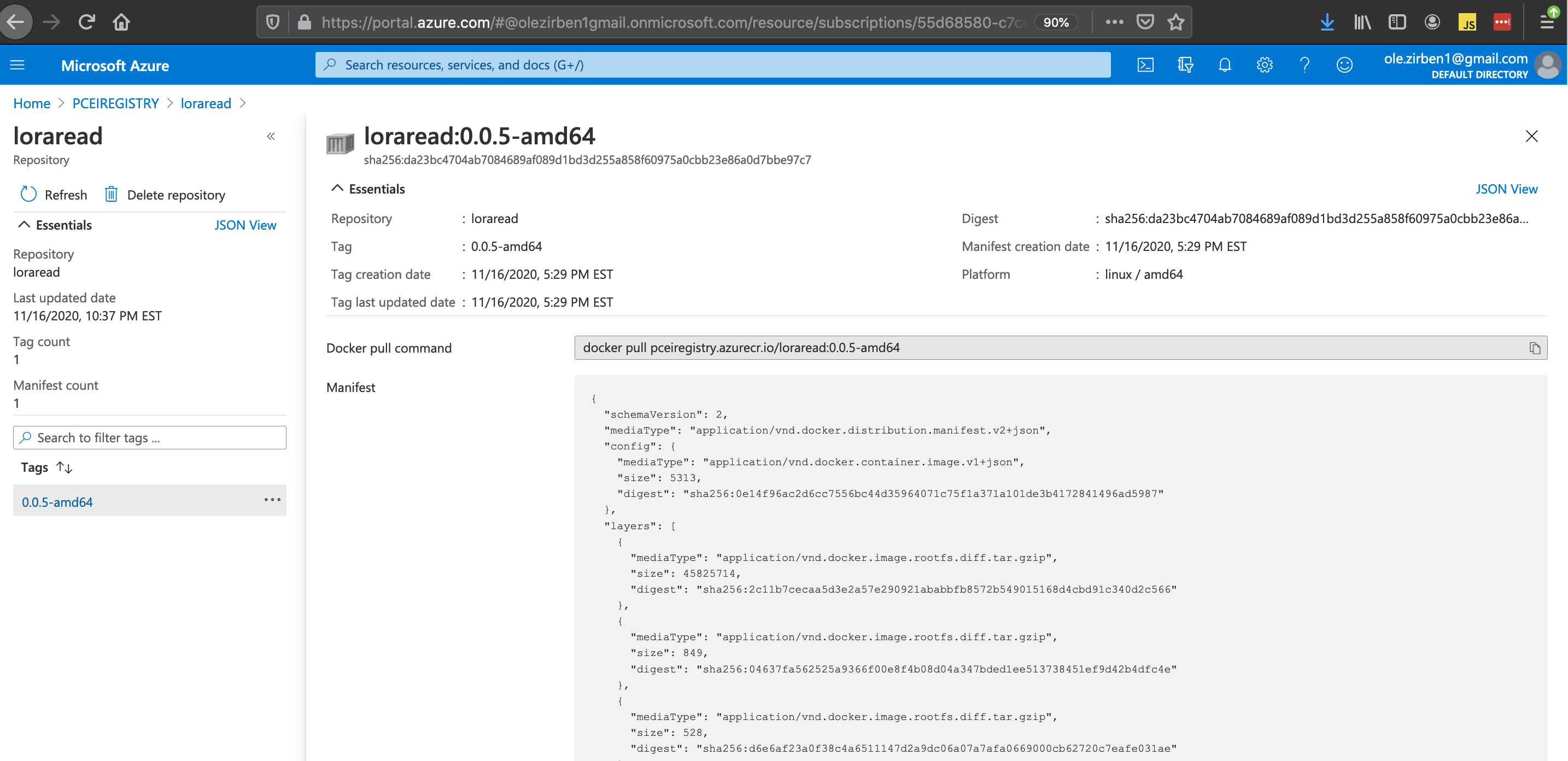

The docker image for the custom module should now be visible in Azure Cloud ACR:

Use this link to understand the architecture of Azure IoT Edge cloud native application:

https://microsoft.github.io/iotedge-k8s-doc/architecture.html

A local laptop can be used to package Helm charts for Azure IoT Edge. Alternatively the Host Server itself can be used to package the Helm charts for Azure IoT Edge.

Clone Azure IoT Edge from github:

NOTE: Due to Azure IoT Edge on K8S being in preview stage, please use the below URL to clone the Helm charts:

https://github.com/Azure/iotedge/tree/release/1.1-k8s-preview

    git clone https://github.com/Azure/iotedge
    cd iotedge/kubernetes/charts/
    mkdir azureiotedge1
    cp -a edge-kubernetes/. azureiotedge1/
    cd azureiotedge1
    ls -al
    total 24
    drwxrwxr-x. 3 onaplab onaplab    79 Dec 24 13:14 .
    drwxrwxr-x. 5 onaplab onaplab    77 Dec 24 13:14 ..
    -rw-rw-r--. 1 onaplab onaplab   137 Dec 24 13:02 Chart.yaml
    -rw-rw-r--. 1 onaplab onaplab   333 Dec 24 13:02 .helmignore
    drwxrwxr-x. 2 onaplab onaplab   220 Dec 24 13:02 templates
    -rw-rw-r--. 1 onaplab onaplab 14226 Dec 24 13:02 values.yaml

Modify values.yaml file to specify the "Primary Connection String" from Azure Cloud generated during IoT Hub/IoT Edge provisioning.

Modify values.yaml to set the LoadBalancer port mapping for iotedged and edgeAgent pods

    vi values.yaml

    # Change the line below and save the file
    provisioning:
      source: "manual"
      deviceConnectionString: "PASTE PRIMARY CONNECTION STRING FROM AZURE IOT HUB / IOT EDGE SCREEN"
      dynamicReprovisioning: false

    # Set LoadBalancer port mapping
    service:
      name: iotedged
      type: LoadBalancer

    edgeAgent:
      containerName: edgeagent
      image:
        repository: azureiotedge/azureiotedge-agent
        tag: 0.1.0-beta9
        pullPolicy: Always
      hostname: "localhost"
      env:
        authScheme: 'sasToken'
        # Set this to one of "LoadBalancer", "NodePort", or "ClusterIP" to tell the
        # IoT Edge runtime how you want to expose mapped ports as Services.
        portMappingServiceType: 'LoadBalancer'

Create a tar file with Azure IoT Edge Helm Charts:

    # Make sure to change to the "charts" directory
    cd ..
    pwd
    /home/onaplab/iotedge/kubernetes/charts

    # Archive the "azureiotedge1" directory. Be sure to use the "azureiotedge1.tar" file name.
    tar -czvf azureiotedge1.tar azureiotedge1/
    azureiotedge1/
    azureiotedge1/.helmignore
    azureiotedge1/Chart.yaml
    azureiotedge1/templates/
    azureiotedge1/templates/NOTES.txt
    azureiotedge1/templates/_helpers.tpl
    azureiotedge1/templates/edge-rbac.yaml
    azureiotedge1/templates/iotedged-config-secret.yaml
    azureiotedge1/templates/iotedged-deployment.yaml
    azureiotedge1/templates/iotedged-proxy-config.yaml
    azureiotedge1/templates/iotedged-pvc.yaml
    azureiotedge1/templates/iotedged-service.yaml
    azureiotedge1/values.yaml

    ls -al
    total 12
    drwxrwxr-x. 5 onaplab onaplab  102 Dec 24 13:23 .
    drwxrwxr-x. 4 onaplab onaplab   31 Dec 24 13:02 ..
    drwxrwxr-x. 3 onaplab onaplab   79 Dec 24 13:14 azureiotedge1
    -rw-rw-r--. 1 onaplab onaplab 8790 Dec 24 13:23 azureiotedge1.tar
    drwxrwxr-x. 3 onaplab onaplab   79 Dec 24 13:14 edge-kubernetes
    drwxrwxr-x. 3 onaplab onaplab   60 Dec 24 13:02 edge-kubernetes-crd

Copy the infrastructure profile tar file to your home directory from the Host Server. If using a local laptop, copy this file from the Host Server. This file is needed to define the Service and the App in EMCO:

    cd
    cp /home/onaplab/amcop_deploy/aarna-stream/cnf/vfw_helm/profile.tar.gz .
    ls -l
    total 220088
    drwxrwxr-x.  9 onaplab onaplab       138 Nov 24 06:53 aarna-stream
    drwxrwxr-x.  3 onaplab onaplab        56 Nov 24 06:52 amcop_deploy
    -rw-r--r--.  1 onaplab onaplab 225356880 Nov 23 14:58 amcop_install_v1.0.zip
    drwxrwxr-x. 22 onaplab onaplab      4096 Dec 24 13:02 iotedge
    -rw-rw-r--.  1 onaplab onaplab       263 Nov 23 17:13 netdefault.xml
    -rw-rw-r--.  1 onaplab onaplab      1098 Dec 26 12:19 profile.tar.gz

Due to limitations in the current EMCO implementation the following step must be performed manually:

SSH to EDGE-K8S-1 VM:

    # Determine VMs IP
    [onaplab@os12 ~]$ sudo virsh domifaddr edge_k8s-1
     Name       MAC address          Protocol     Address
    -------------------------------------------------------------------------------
     vnet1      52:54:00:19:96:72    ipv4         10.121.7.152/27

    # ssh from your laptop
    ssh -i pcei-emco onaplab@10.121.7.152

Deploy Azure CRD:

    helm install edge-crd --repo https://edgek8s.blob.core.windows.net/staging edge-kubernetes-crd
    NAME: edge-crd
    LAST DEPLOYED: Thu Dec 24 21:43:12 2020
    NAMESPACE: default
    STATUS: deployed
    REVISION: 1
    TEST SUITE: None

    kubectl get crd
    NAME                                          CREATED AT
    edgedeployments.microsoft.azure.devices.edge  2020-12-24T21:43:13Z

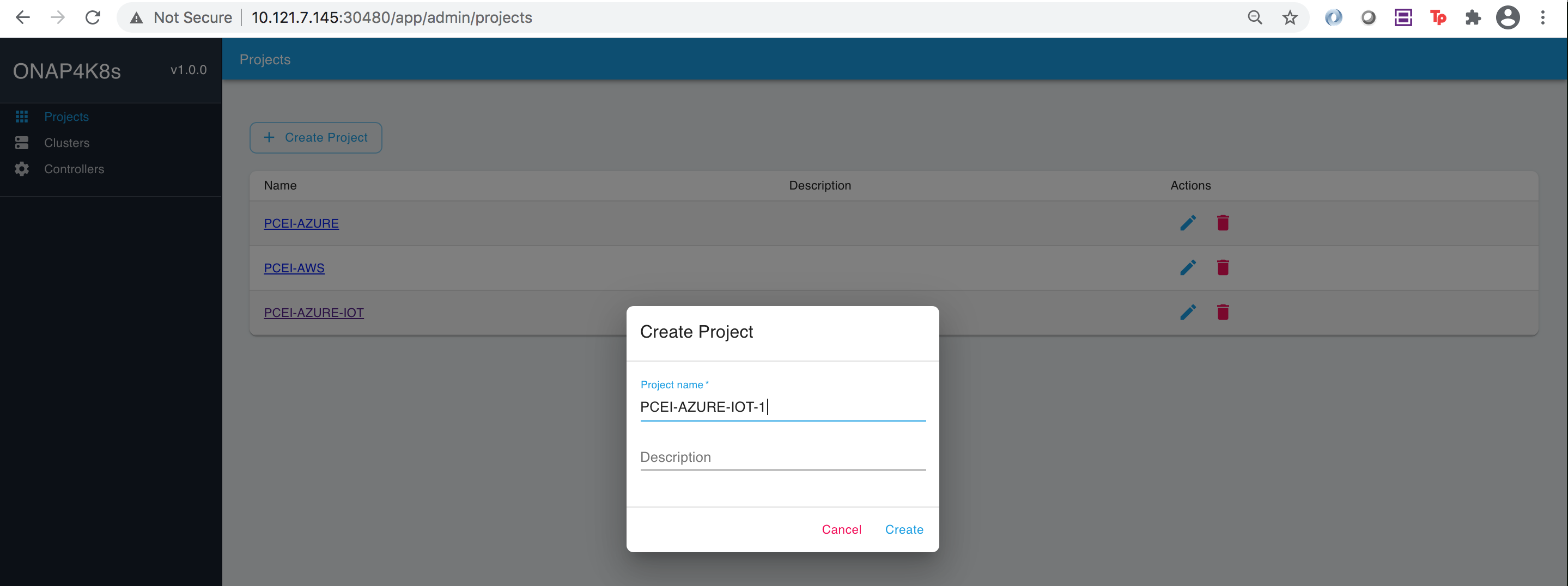

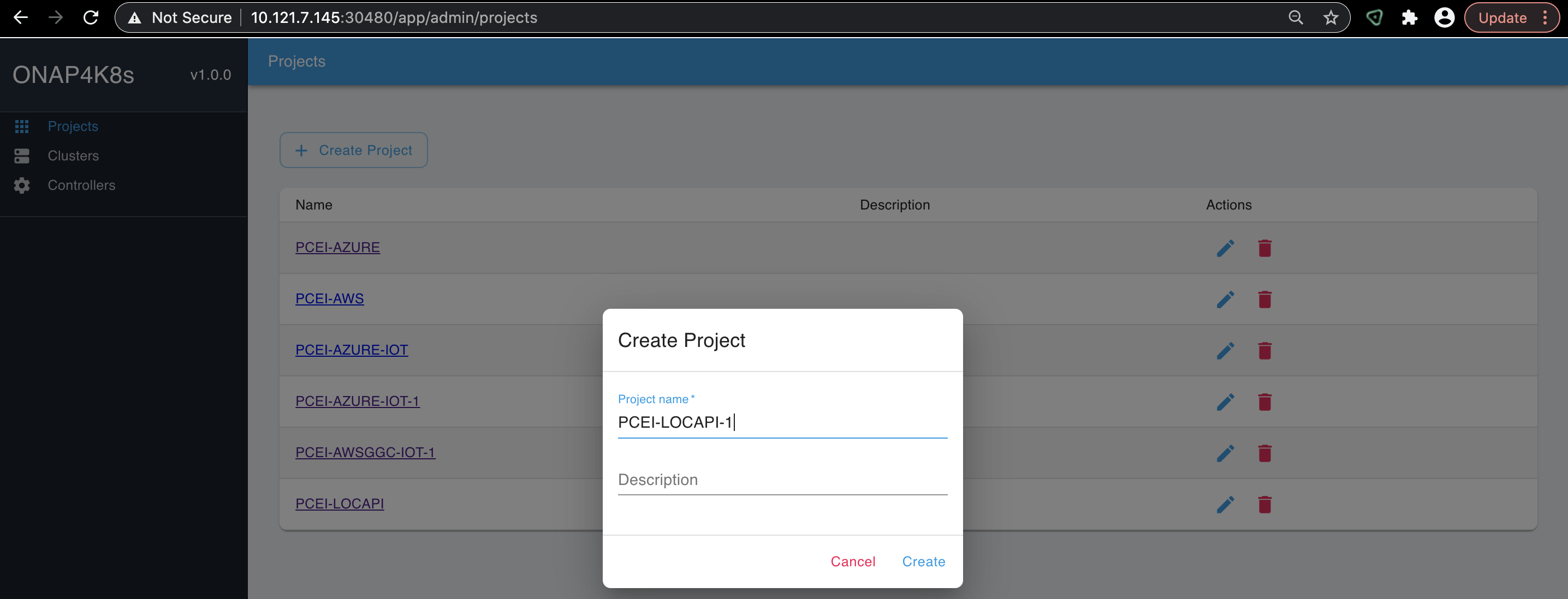

To define Azure IoT Edge Service in PCEI, connect to EMCO UI and select "Projects → Add Project":

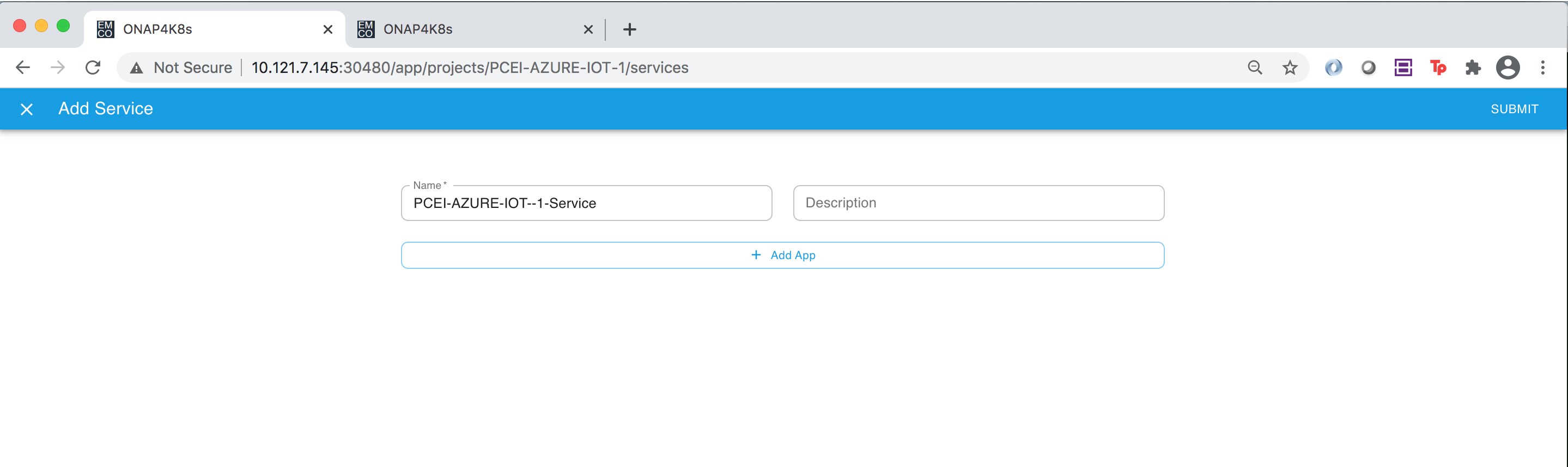

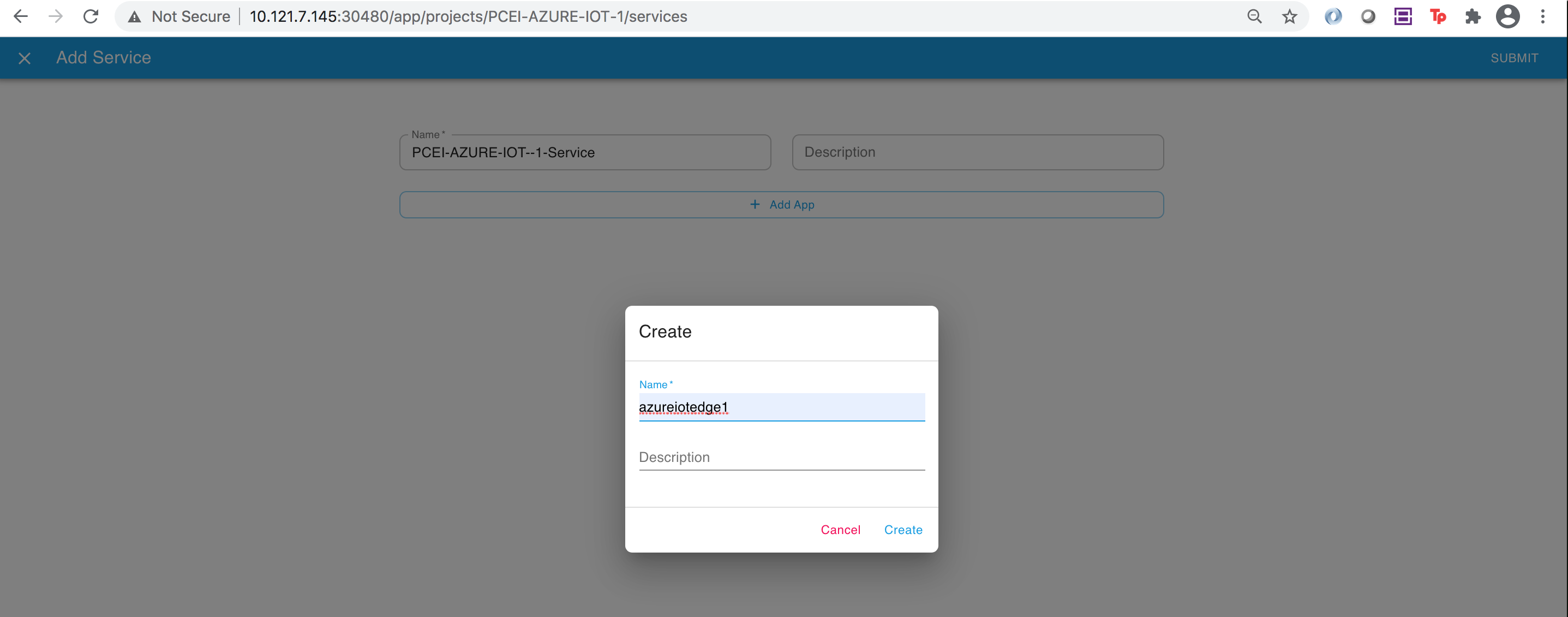

Select the project you just created (PCEI-AZURE-IOT-1 in the above example), select "Add Service", specify the Name and click on "Add App":

Click on "Add App" to specify the App Name.

IMPORTANT NOTE: Please ensure that the "App Name" string matches the name of the tar file (without the .tar extension) created earlier with the Helm charts for Azure IoT Edge ("azureiotedge1" in this example).
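The naming rule above can be checked before uploading the archive. The sketch below uses only Python's standard `tarfile` module; the helper names are hypothetical. It also reports the archive's top-level directory, which in this guide's example happens to be the same string. Whether EMCO requires the top-level directory to match is not stated here and is treated as an assumption.

```python
import os
import tarfile


def package_chart(chart_dir, out_dir):
    """Create <name>.tar (gzip-compressed, like 'tar -czvf') from chart_dir."""
    name = os.path.basename(os.path.normpath(chart_dir))
    archive = os.path.join(out_dir, name + ".tar")
    with tarfile.open(archive, "w:gz") as tar:
        tar.add(chart_dir, arcname=name)
    return archive


def app_name_for(archive_path):
    """Return the App Name implied by the archive file name."""
    name, ext = os.path.splitext(os.path.basename(archive_path))
    if ext != ".tar":
        raise ValueError("expected a .tar file, got: " + archive_path)
    return name


def top_level_entries(archive_path):
    """Return the sorted set of top-level names stored in the archive."""
    with tarfile.open(archive_path, "r:*") as tar:
        return sorted({member.split("/")[0] for member in tar.getnames()})
```

For the archive built earlier, `app_name_for("azureiotedge1.tar")` yields the string to type into the "App Name" field.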

Click "Create".

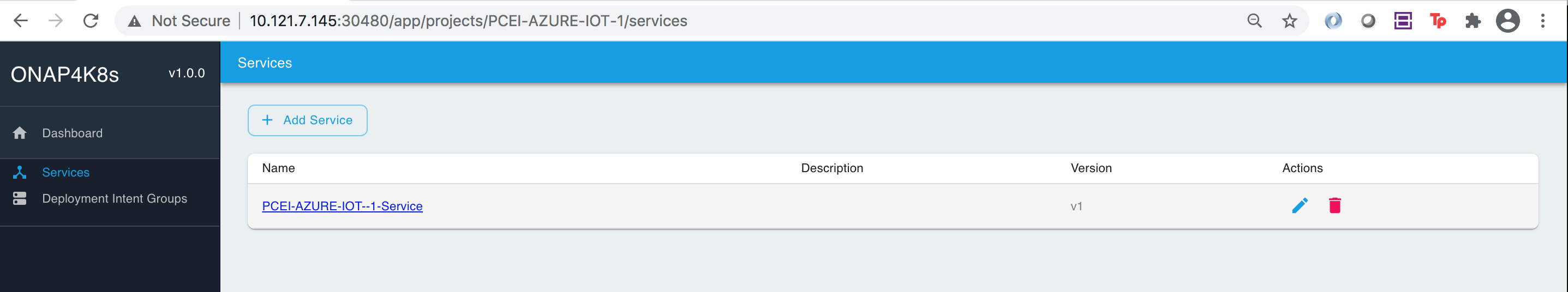

You should see the screen below:

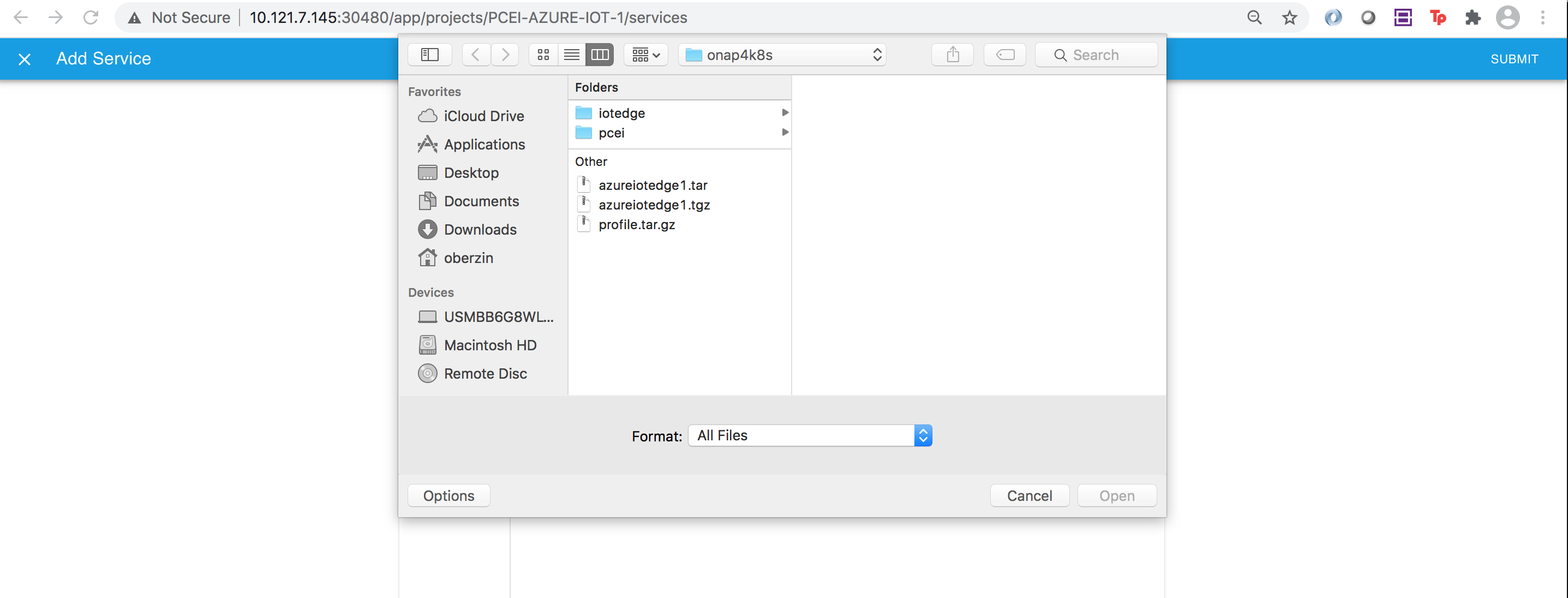

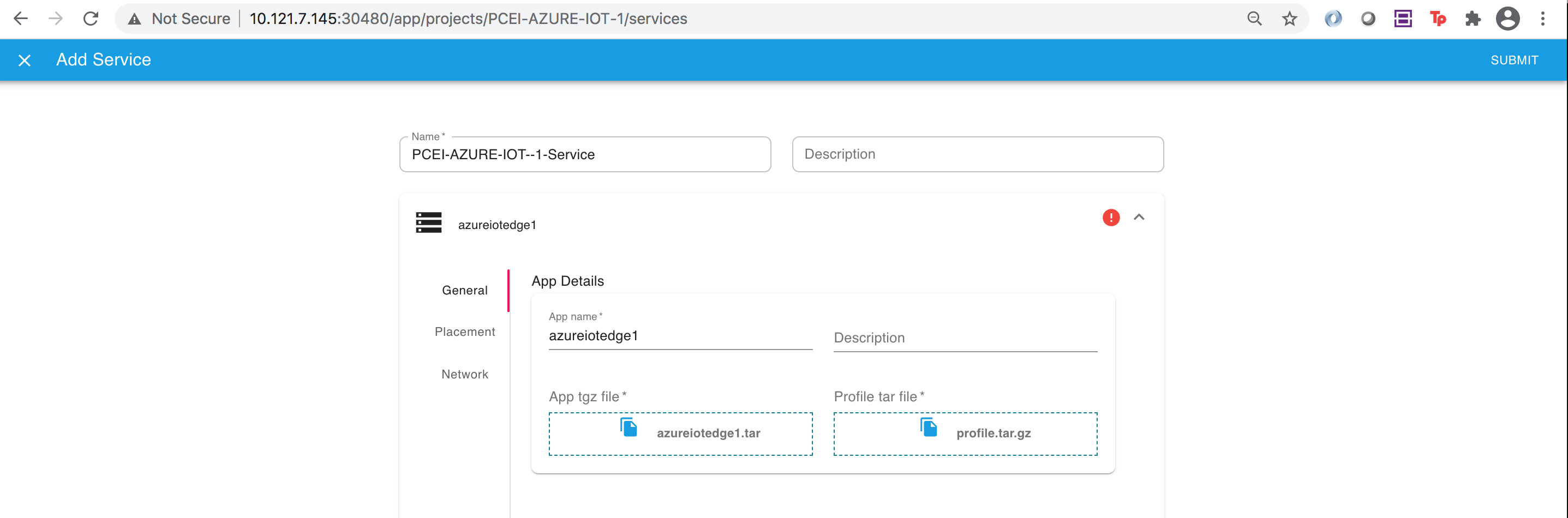

Click on the "azureiotedge1" Service and attach application definition files. Add App file "azureiotedge1.tar" and profile file "profile.tar.gz". DO NOT CLICK "SUBMIT".

In order to select the tar file please use the "Options" button on the Mac and select "All files":

Add the App tar file and the Profile tar file

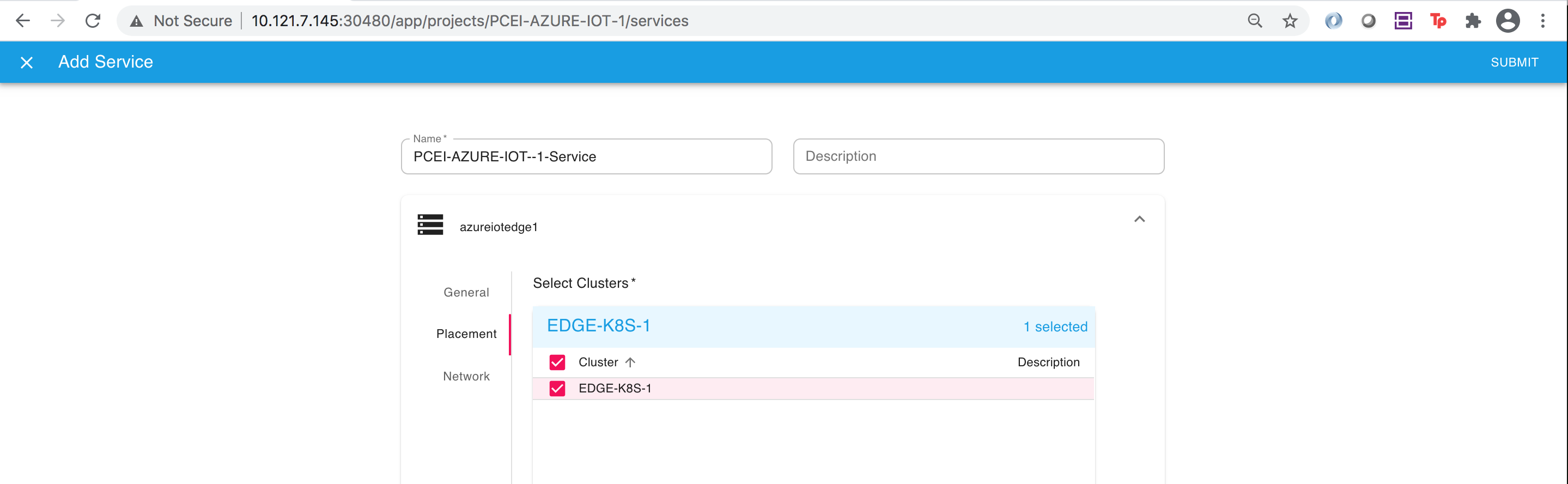

Select the "Placement" tab on the left.

Select the "EDGE-K8S-1" cluster that was registered earlier:

Click "SUBMIT" in the upper right corner:

After clicking "SUBMIT" you should see the screen below:

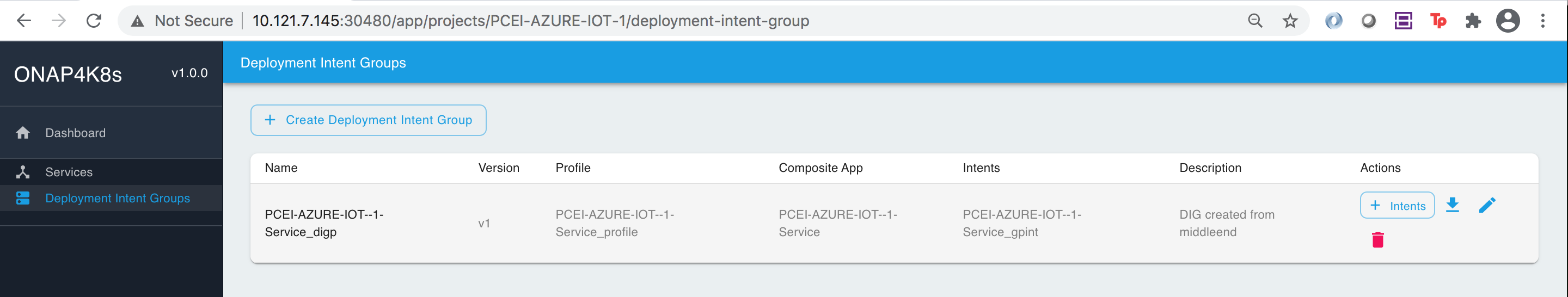

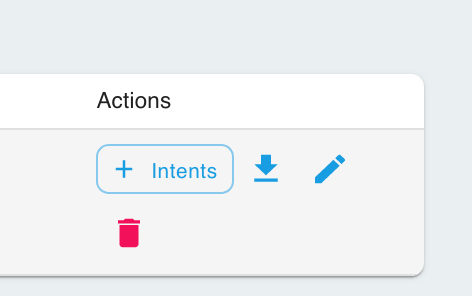

To deploy Azure IoT Edge App onto the Edge K8S Cluster (EDGE-K8S-1 in this example), select "Deployment Intent Groups" tab on the left side of the previous screen:

Click on the Blue Down Arrow on the right to deploy Azure IoT Edge App:

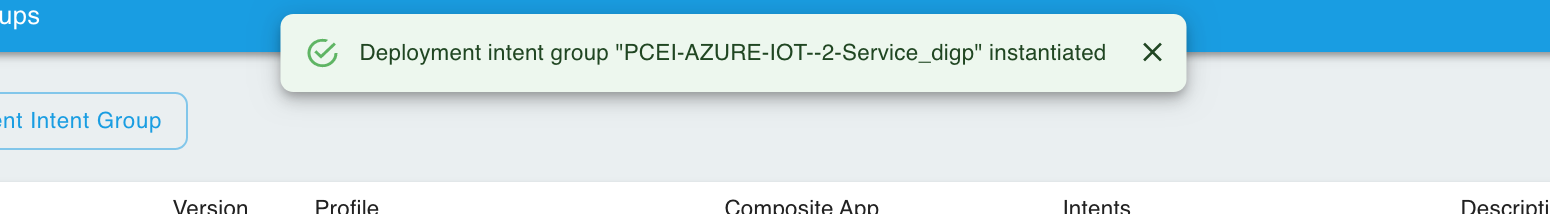

After successful deployment, you should see a message in GREEN stating that the deployment has been successful.

SSH to the EDGE-K8S-1 VM and verify the Azure IoT Edge pods started:

    ssh onaplab@10.121.7.152

    # Verify Azure IoT Edge pods are Running in the default namespace
    kubectl get pods
    NAME                         READY   STATUS    RESTARTS   AGE
    edgeagent-59cf45d8b9-tc5g9   2/2     Running   1          2m8s
    edgehub-97dc4fdc8-t5qhf      2/2     Running   0          110s
    iotedged-6d9dcf4757-h474r    1/1     Running   0          2m17s
    loraread-d4d79b867-2ft2v     2/2     Running   0          110s

    # Verify services. Note the TCP port for the loraread-xxxxx-yyyy pod (31230 in the example below):
    kubectl get svc
    NAME             TYPE           CLUSTER-IP      EXTERNAL-IP   PORT(S)                                       AGE
    awsggc-service   NodePort       10.244.1.182    <none>        8883:30883/TCP                                9d
    edgehub          LoadBalancer   10.244.12.125   <pending>     5671:30252/TCP,8883:31342/TCP,443:30902/TCP   3m
    iotedged         LoadBalancer   10.244.36.143   <pending>     35000:32275/TCP,35001:32560/TCP               32d
    kubernetes       ClusterIP      10.244.0.1      <none>        443/TCP                                       32d
    loraread         LoadBalancer   10.244.3.167    <pending>     50005:31230/TCP

To verify Azure IoT Edge end-to-end operation, perform the following tasks:

On the Host Server list the IP address for the edge_k8s-1 VM:

    sudo virsh domifaddr edge_k8s-1
     Name       MAC address          Protocol     Address
    -------------------------------------------------------------------------------
     vnet1      52:54:00:19:96:72    ipv4         10.121.7.152/27

    ssh onaplab@10.121.7.152
    kubectl get svc loraread
    NAME       TYPE           CLUSTER-IP     EXTERNAL-IP   PORT(S)           AGE
    loraread   LoadBalancer   10.244.3.167   <pending>     50005:31230/TCP   8m28s
    onaplab@localhost:~$
    onaplab@localhost:~$ logout
    Connection to 10.121.7.152 closed.
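The port the IoT client needs is the second number in the `PORT(S)` column (31230 for `50005:31230/TCP`). As a small illustration, the column can be parsed as follows; the function names are hypothetical and the parser assumes the default `kubectl get svc` column order shown above.

```python
# Sample text copied from the "kubectl get svc loraread" output in this guide.
SAMPLE = """\
NAME       TYPE           CLUSTER-IP     EXTERNAL-IP   PORT(S)           AGE
loraread   LoadBalancer   10.244.3.167   <pending>     50005:31230/TCP   8m28s
"""


def node_ports(ports_column):
    """Map service port -> node port for a value like '50005:31230/TCP'."""
    mapping = {}
    for entry in ports_column.split(","):
        port_part, _sep, _proto = entry.partition("/")
        if ":" not in port_part:
            continue  # entries such as '443/TCP' expose no node port
        service_port, node_port = port_part.split(":")
        mapping[int(service_port)] = int(node_port)
    return mapping


def find_service_ports(kubectl_output, service_name):
    """Return the port mapping for service_name, or {} if it is not listed."""
    for line in kubectl_output.splitlines():
        fields = line.split()
        # Columns: NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) [AGE]
        if len(fields) >= 5 and fields[0] == service_name:
            return node_ports(fields[4])
    return {}


if __name__ == "__main__":
    print(find_service_ports(SAMPLE, "loraread"))
```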

    # Clone PCEI repo to a local directory:
    git clone "https://gerrit.akraino.org/r/pcei"
    cd pcei/iotclient/
    ls -l
    total 8
    -rw-r--r--  1 oberzin  108493823  3230 Dec 26 16:15 STM32SimAzureEMCO.py

    # Run the LPWA IoT Client. Specify the IP address (be sure to enclose the IP address in double quotes) of the edge_k8s-1 VM and the port number for the loraread service:
    python2 STM32SimAzureEMCO.py
    ENTER SERVER IPv4: "10.121.7.152"
    ENTER SERVER PORT: 31230
    31230
    SENDING...
    2020-12-26T16:20:47-99
    d2
    62
    21
    ######## COUNT: 1
    {u'txtime': u'2020-12-26T16:20:47-99', u'datarate': 3, u'ack': u'false', u'seqno': 60782, u'pdu': u'007321E7016700d2026862', u'devClass': u'A', u'snr': 10.75, u'devEui': u'0004A30B001BAAAA', u'rssi': -39, u'gwEui': u'00250C00010003A9', u'joinId': 90, u'freq': 903.5, u'port': 3, u'channel': 6}
    CLOSED
    SEND RESULT: None
    NEXT INTERVAL
    2020-12-26T16:20:58-25
    dc
    62
    21
    ######## COUNT: 2
    {u'txtime': u'2020-12-26T16:20:58-25', u'datarate': 3, u'ack': u'false', u'seqno': 60782, u'pdu': u'007321E7016700dc026862', u'devClass': u'A', u'snr': 10.75, u'devEui': u'0004A30B001BAAAA', u'rssi': -39, u'gwEui': u'00250C00010003A9', u'joinId': 90, u'freq': 903.5, u'port': 3, u'channel': 6}
    CLOSED
    SEND RESULT: None

Note that the LPWA IoT Client is generating Temperature, Humidity and Pressure readings in the encoded format: u'pdu': u'007321E7016700d2026862'.
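The sample PDU is consistent with a Cayenne LPP style layout: a channel byte, a type byte, then a big-endian value, with type `0x73` for barometric pressure (0.1 hPa units), `0x67` for temperature (0.1 °C units) and `0x68` for relative humidity (0.5 % units). This reading is inferred from the sample values, not taken from the client's source code, and the function name below is hypothetical. Under that assumption, an encoder can be sketched as:

```python
import binascii
import struct


def encode_pdu(pressure_hpa, temperature_c, humidity_pct):
    """Encode three readings as a hex string (assumed Cayenne LPP layout)."""
    payload = b"".join([
        # channel 0, type 0x73: unsigned 16-bit, 0.1 hPa per unit
        struct.pack(">BBH", 0, 0x73, int(round(pressure_hpa * 10))),
        # channel 1, type 0x67: signed 16-bit, 0.1 degC per unit
        struct.pack(">BBh", 1, 0x67, int(round(temperature_c * 10))),
        # channel 2, type 0x68: unsigned 8-bit, 0.5 % per unit
        struct.pack(">BBB", 2, 0x68, int(round(humidity_pct * 2))),
    ])
    return binascii.hexlify(payload).decode("ascii")


if __name__ == "__main__":
    print(encode_pdu(867.9, 21.0, 49.0))
```

With 867.9 hPa, 21.0 °C and 49 % as inputs, the result matches the first sample PDU above when compared case-insensitively.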

On the EDGE-K8S-1 cluster (edge_k8s-1 VM), verify that the Azure IoT Edge LoRaRead pod is receiving LPWA IoT messages and decoding their contents:

ssh onaplab@10.121.7.152

kubectl get pods

NAME READY STATUS RESTARTS AGE

edgeagent-59cf45d8b9-tc5g9 2/2 Running 1 26m

edgehub-97dc4fdc8-t5qhf 2/2 Running 0 26m

iotedged-6d9dcf4757-h474r 1/1 Running 0 26m

loraread-d4d79b867-2ft2v 2/2 Running 0 26m

kubectl logs loraread-d4d79b867-2ft2v loraread

Listening

('Connection address:', ('10.244.64.1', 7117))

('received data:', '{\n "ack": "false", \n "channel": 6, \n "datarate": 3, \n "devClass": "A", \n "devEui": "0004A30B001BAAAA", \n "freq": 903.5, \n "gwEui": "00250C00010003A9", \n "joinId": 90, \n "pdu": "007321E7016700d2026862", \n "port": 3, \n "rssi": -39, \n "seqno": 60782, \n "snr": 10.75, \n "txtime": "2020-12-26T16:20:47-99"\n}')

{u'txtime': u'2020-12-26T16:20:47-99', u'datarate': 3, u'ack': u'false', u'seqno': 60782, u'pdu': u'007321E7016700d2026862', u'devClass': u'A', u'snr': 10.75, u'devEui': u'0004A30B001BAAAA', u'rssi': -39, u'gwEui': u'00250C00010003A9', u'joinId': 90, u'freq': 903.5, u'port': 3, u'channel': 6}

007321E7016700d2026862

00d2

62

21E7

{"Pressure": 867, "TIMESTAMP": "2020-12-26T16:20:47-99", "Humidity": 49, "Temperature": 69.80000000000001, "Device EUI": "0004A30B001BAAAA"}

sent!

Listening

Confirmation[0] received for message with result = OK

Properties: {}

Total calls confirmed: 1

('Connection address:', ('10.244.64.1', 24020))

('received data:', '{\n "ack": "false", \n "channel": 6, \n "datarate": 3, \n "devClass": "A", \n "devEui": "0004A30B001BAAAA", \n "freq": 903.5, \n "gwEui": "00250C00010003A9", \n "joinId": 90, \n "pdu": "007321E7016700dc026862", \n "port": 3, \n "rssi": -39, \n "seqno": 60782, \n "snr": 10.75, \n "txtime": "2020-12-26T16:20:58-25"\n}')

{u'txtime': u'2020-12-26T16:20:58-25', u'datarate': 3, u'ack': u'false', u'seqno': 60782, u'pdu': u'007321E7016700dc026862', u'devClass': u'A', u'snr': 10.75, u'devEui': u'0004A30B001BAAAA', u'rssi': -39, u'gwEui': u'00250C00010003A9', u'joinId': 90, u'freq': 903.5, u'port': 3, u'channel': 6}

007321E7016700dc026862

00dc

62

21E7

{"Pressure": 867, "TIMESTAMP": "2020-12-26T16:20:58-25", "Humidity": 49, "Temperature": 71.6, "Device EUI": "0004A30B001BAAAA"}

sent!

Listening

Confirmation[0] received for message with result = OK

Properties: {}

Total calls confirmed: 2


Note that the Azure IoT Edge PCE App is decoding the IoT Client readings from the Low Power encoding into clear text JSON format and forwarding the decoded readings to the Azure IoT Hub in the core cloud:

{"Pressure": 867, "TIMESTAMP": "2020-12-26T16:20:58-25", "Humidity": 49, "Temperature": 71.6, "Device EUI": "0004A30B001BAAAA"} sent!
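To make the decoding step concrete, the sketch below unpacks the sample PDU under the assumption that it follows a Cayenne LPP style layout (type `0x73` pressure in 0.1 hPa, `0x67` temperature in 0.1 °C, `0x68` humidity in 0.5 %). That layout is inferred from the sample values in this guide; it is not the LoRaRead module's actual source, and the function names are hypothetical.

```python
import binascii
import struct


def decode_pdu(pdu_hex):
    """Decode a hex PDU into a dict of readings (assumed Cayenne LPP layout)."""
    data = binascii.unhexlify(pdu_hex)
    readings = {}
    i = 0
    while i < len(data):
        data_type = data[i + 1]  # data[i] is the channel number, unused here
        if data_type == 0x73:    # barometric pressure, unsigned, 0.1 hPa
            (raw,) = struct.unpack(">H", data[i + 2:i + 4])
            readings["pressure_hpa"] = raw / 10.0
            i += 4
        elif data_type == 0x67:  # temperature, signed, 0.1 degC
            (raw,) = struct.unpack(">h", data[i + 2:i + 4])
            readings["temperature_c"] = raw / 10.0
            i += 4
        elif data_type == 0x68:  # relative humidity, unsigned, 0.5 %
            readings["humidity_pct"] = data[i + 2] / 2.0
            i += 3
        else:
            raise ValueError("unsupported data type: 0x%02x" % data_type)
    return readings


def to_fahrenheit(temperature_c):
    """Convert degrees Celsius to degrees Fahrenheit."""
    return temperature_c * 9.0 / 5.0 + 32.0


if __name__ == "__main__":
    readings = decode_pdu("007321E7016700dc026862")
    print(readings, to_fahrenheit(readings["temperature_c"]))
```

For the second sample message this gives 867.9 hPa, 22.0 °C and 49 % humidity, which agrees with the Pressure, Temperature (71.6 after conversion to Fahrenheit) and Humidity fields in the log above, allowing for the pod truncating the pressure to an integer.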

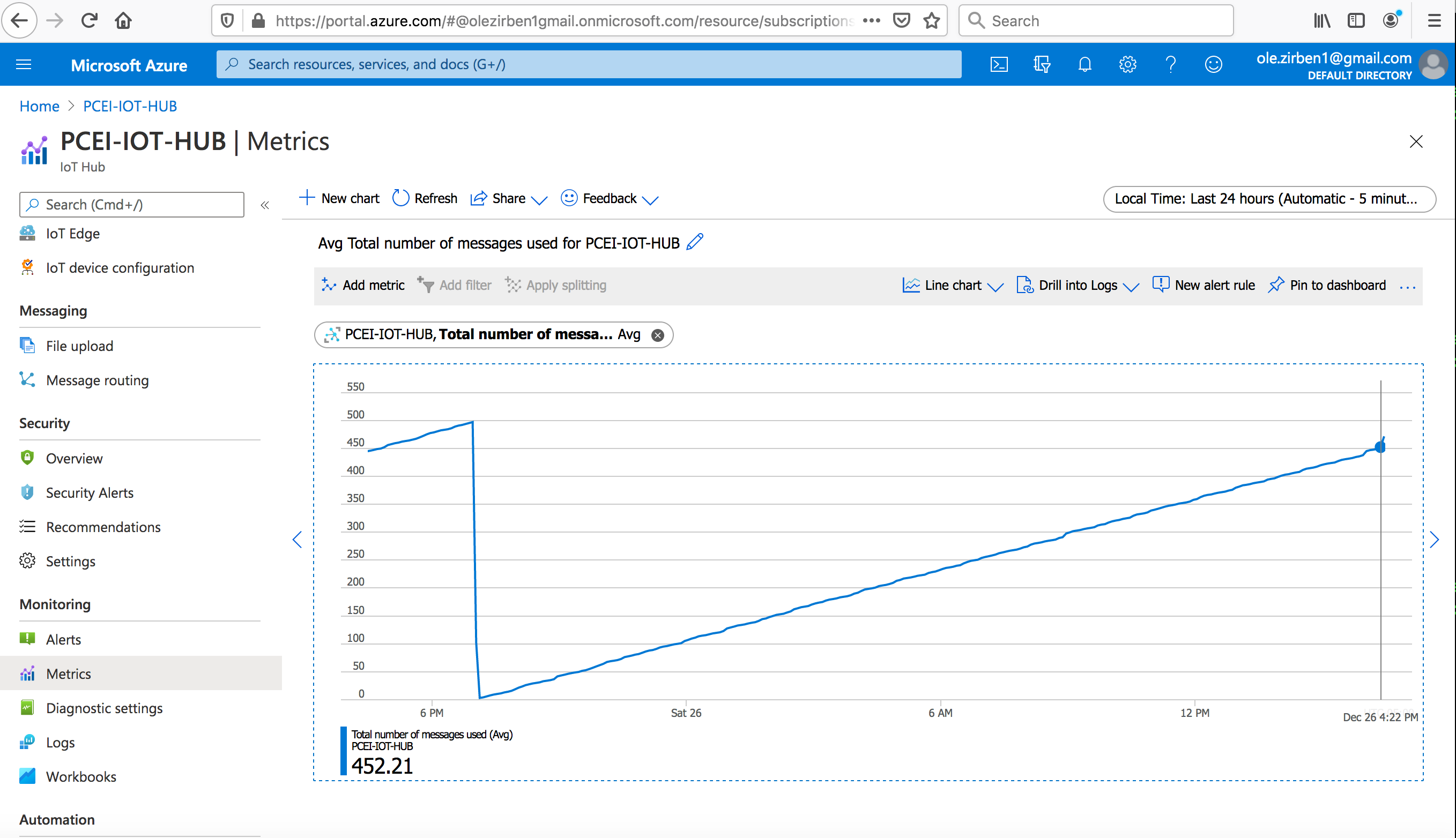

Verify that the IoT message count is increasing in Azure Portal for the IoT Edge:

The process of deploying AWS GreenGrass Core with PCEI is similar to the process described for Azure IoT Edge.

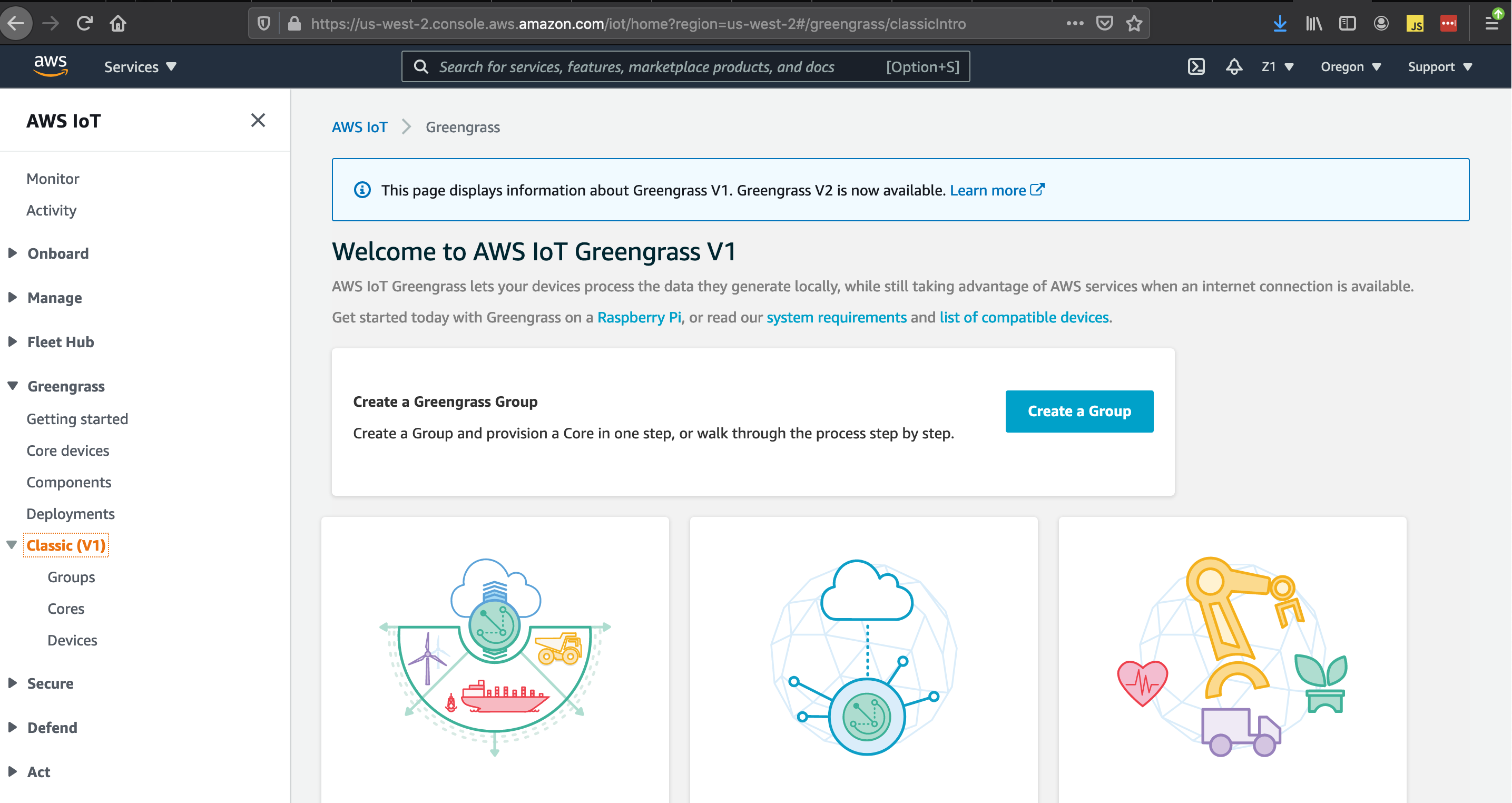

Login to AWS Console and select AWS IoT service.

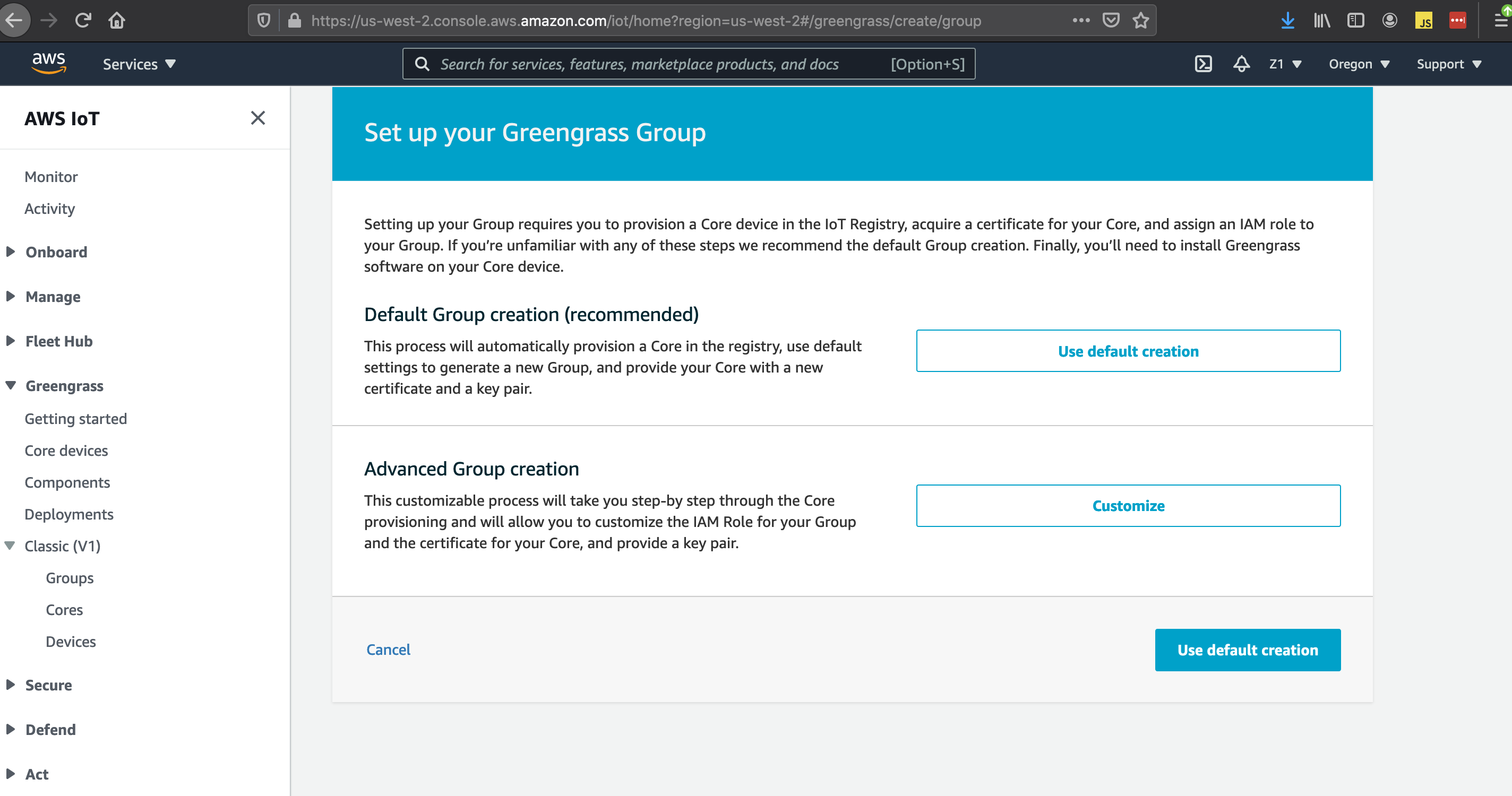

Select "Greengrass" on the left hand side, select "Classic (V1)":

Create a Group by clicking on "Create a Group":

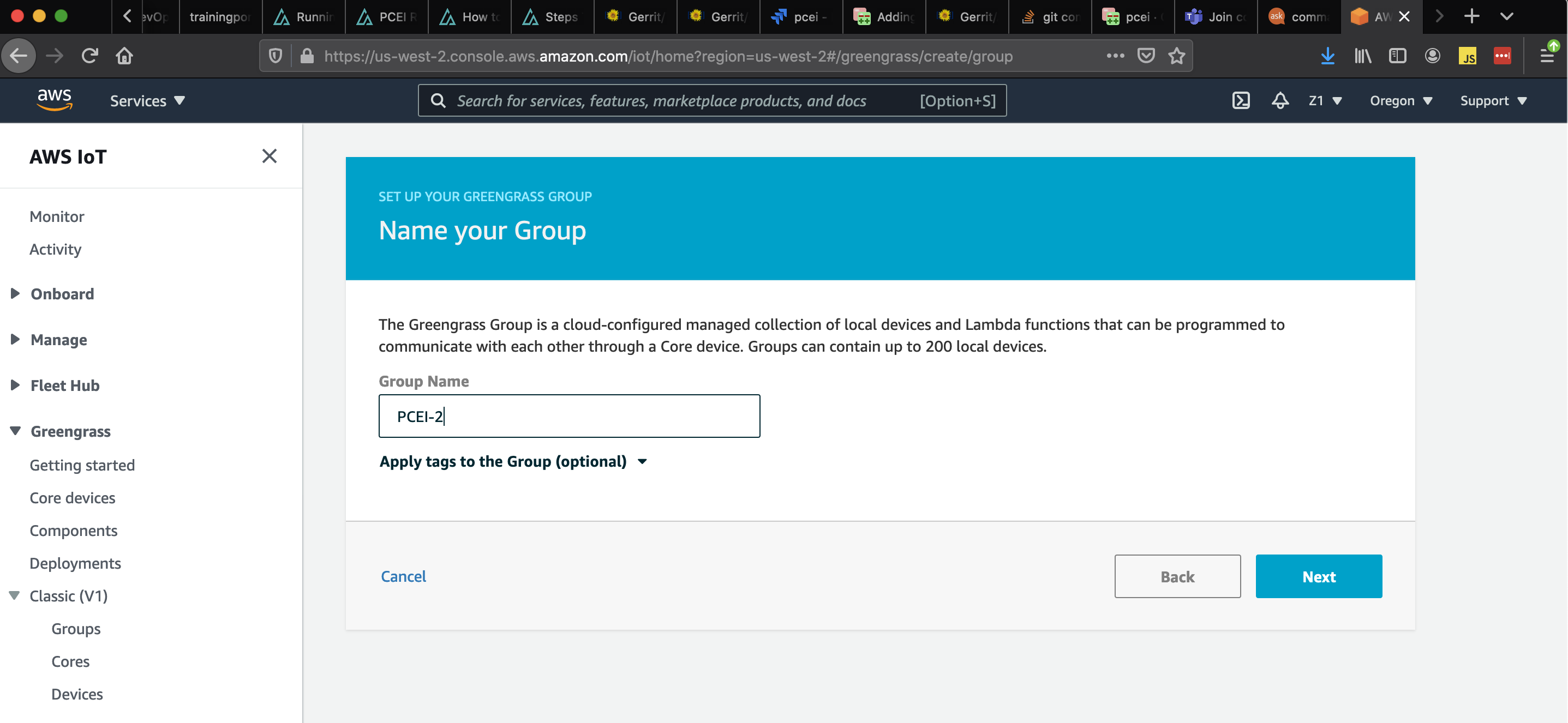

Select "Use default creation" and specify the Group Name:

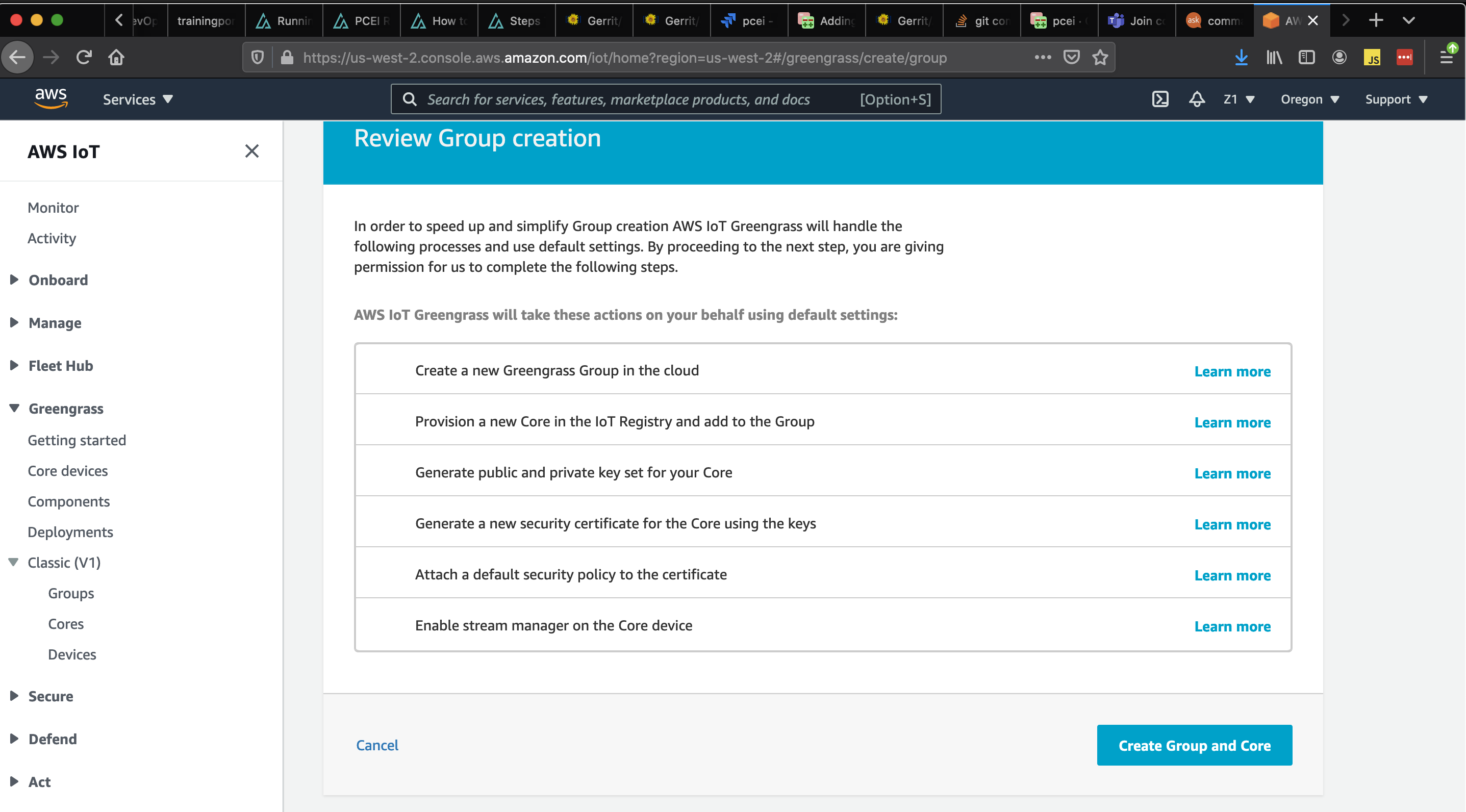

Confirm the "Core Function" and create the Group and Core:

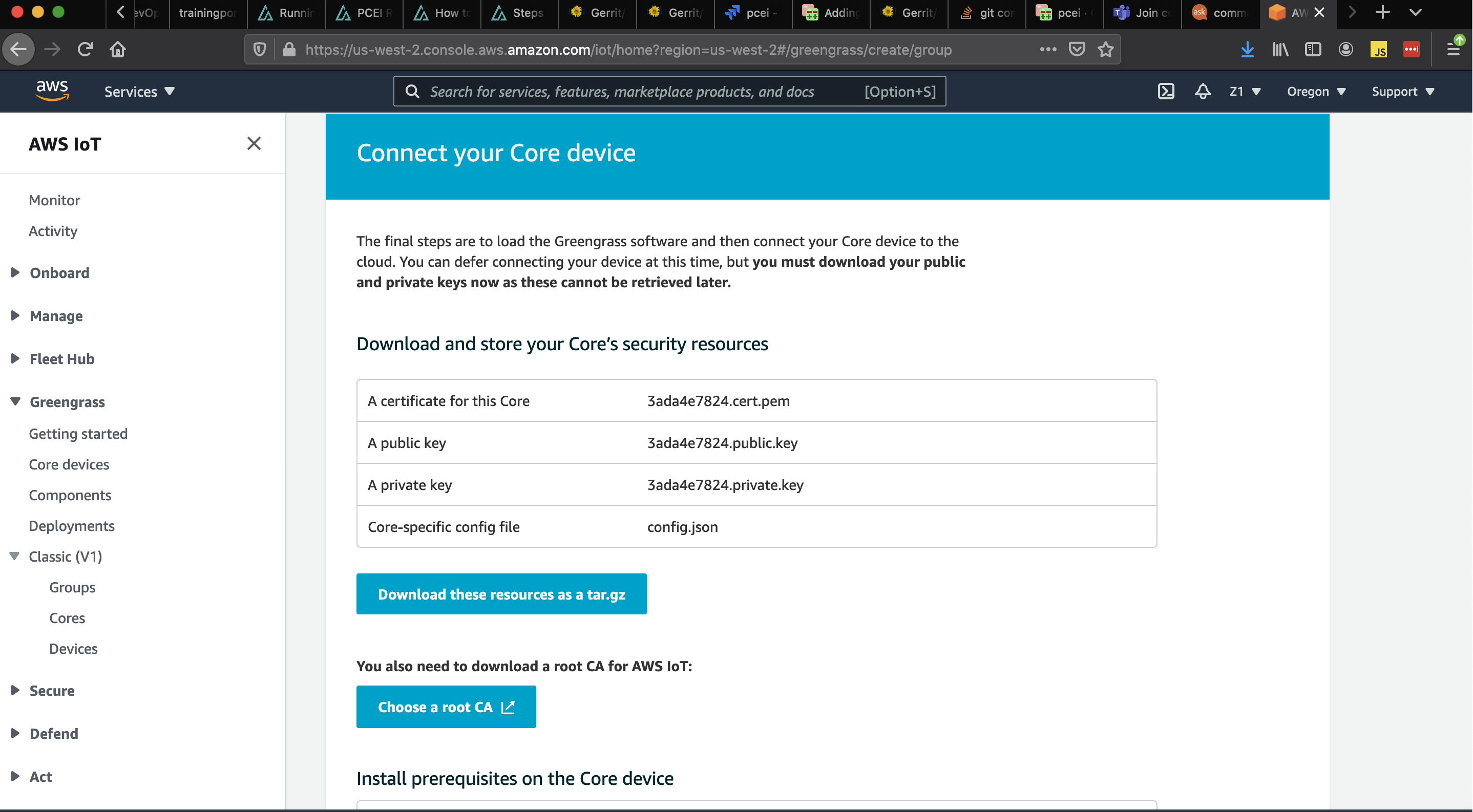

Download the Core Security Resources tar file to your local directory:

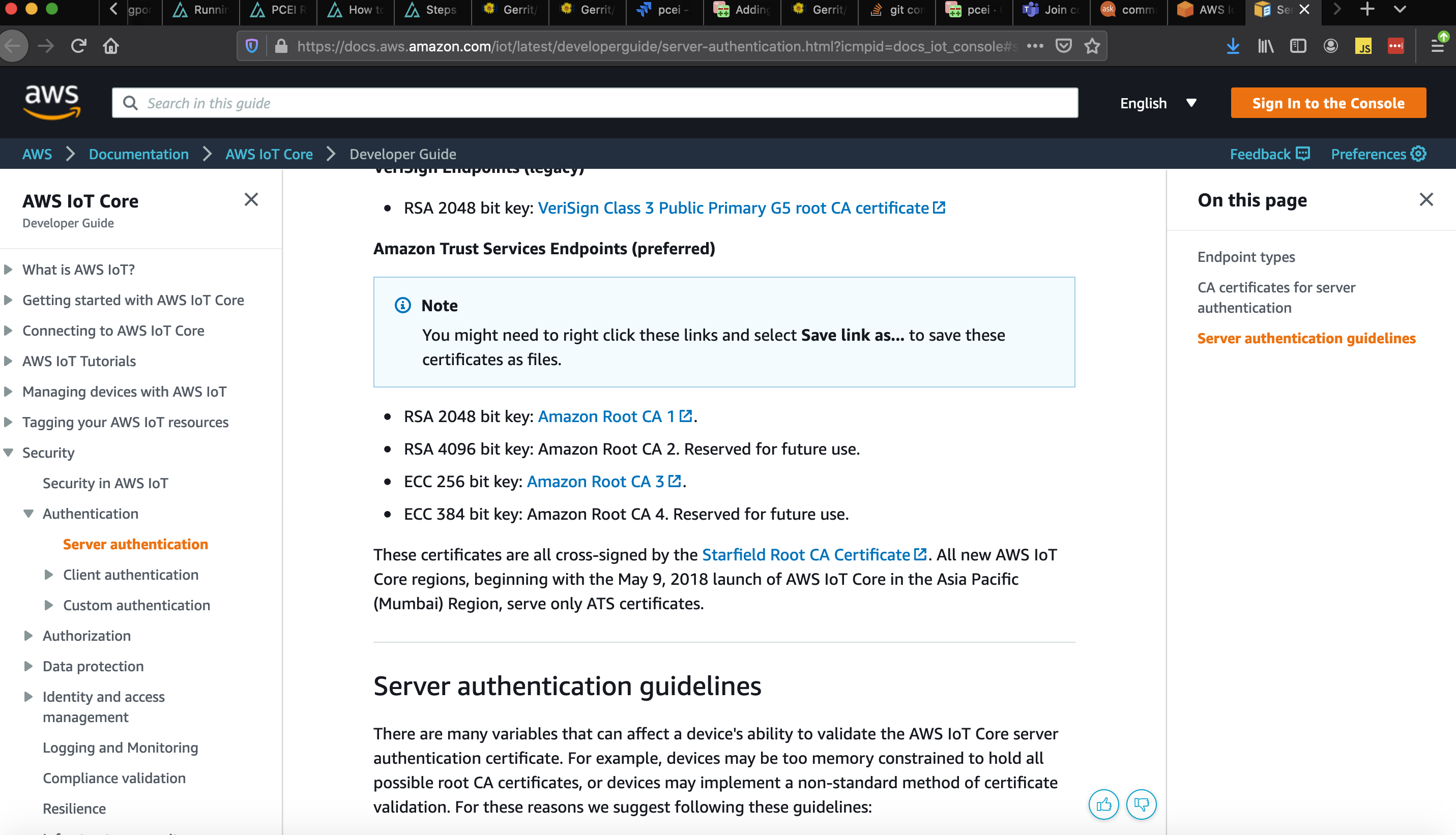

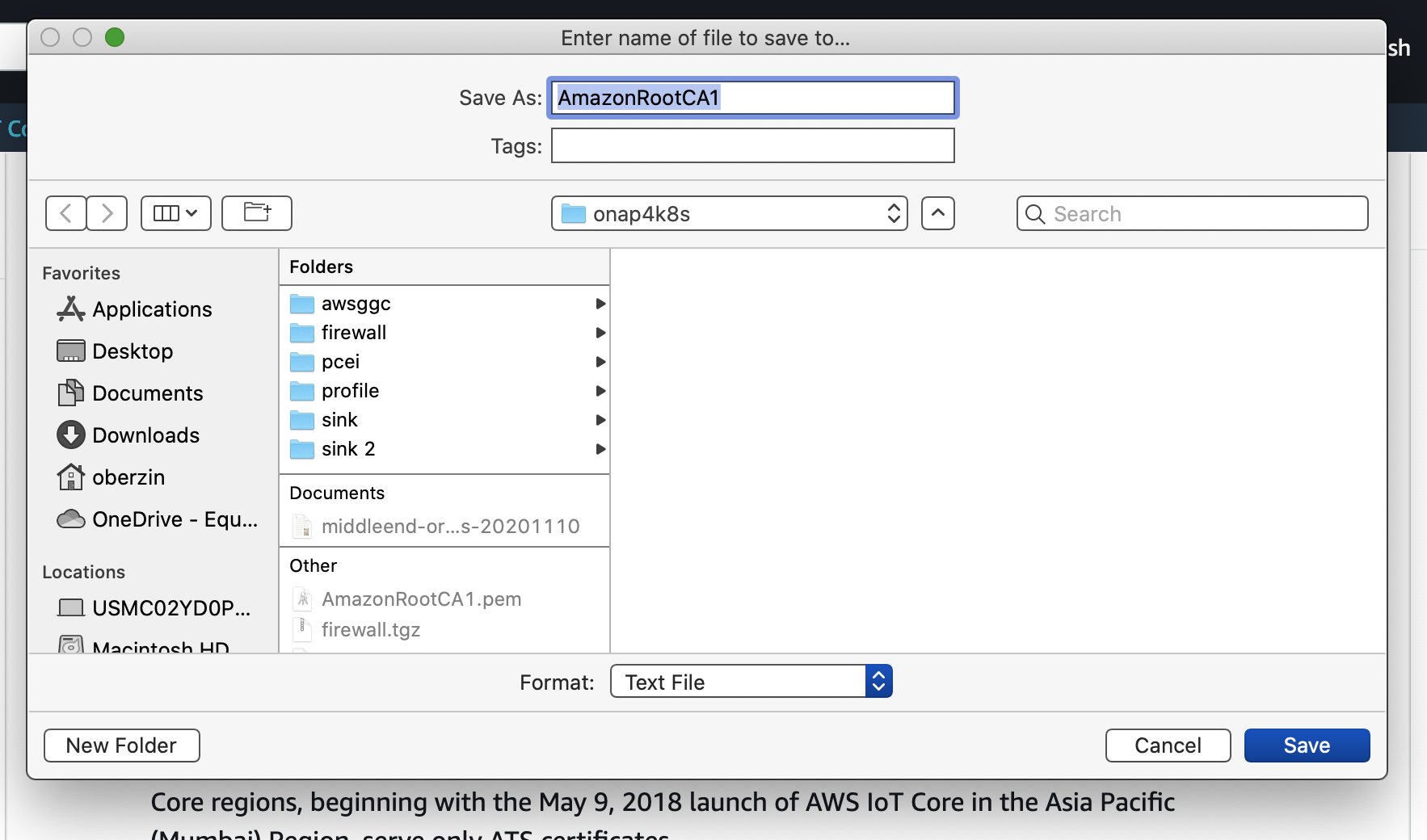

Next, choose a root CA by clicking the button and right-clicking on the "Amazon Root CA" link on the next page. Select "Save Link As" and save the file in your local directory.

At this point your AWS Greengrass Group and Core have been provisioned.

Perform the following tasks on the edge_k8s-2 VM/Cluster. For the purposes of this guide, a local Docker registry is deployed on the edge cluster. Any other registry can be used.

    # SSH to the edge_k8s-2 VM (use the ssh key if ssh-ing from your laptop):
    ssh -i ~/.ssh/pcei-emco onaplab@10.121.7.146

    ## Install AWS CLI on the edge_k8s-2 VM
    curl "https://awscli.amazonaws.com/awscli-exe-linux-x86_64.zip" -o "awscliv2.zip"
    unzip awscliv2.zip
    sudo ./aws/install

    ## Configure AWS auth - supply Access Key and Secret Access Key:
    aws configure
    Access Key ID: XXXXXXX
    Secret Access Key: YYYYYYY

    ## Pull GGC docker image from AWS ECR
    awspass=`sudo aws ecr get-login-password --region us-west-2`
    sudo docker login --username AWS --password $awspass https://216483018798.dkr.ecr.us-west-2.amazonaws.com
    sudo docker pull 216483018798.dkr.ecr.us-west-2.amazonaws.com/aws-iot-greengrass:latest

    ### Start local docker repo on worker cluster
    sudo docker run -d -p 5000:5000 --restart=always --name registry registry:2

    ## Tag AWS GGC docker image
    sudo docker tag 216483018798.dkr.ecr.us-west-2.amazonaws.com/aws-iot-greengrass localhost:5000/aws-iot-greengrass

    ## Push AWS GGC docker image to local registry
    sudo docker push localhost:5000/aws-iot-greengrass

    # Clone PCEI repo to your local directory
    git clone "https://gerrit.akraino.org/r/pcei"
    cd pcei
    mkdir awsggc1
    cp -a awsggc/. awsggc1/
    cd awsggc1
    ls -al
    total 16
    -rw-r--r--  1 oberzin  108493823  116 Nov 23 10:55 Chart.yaml
    drwxr-xr-x  9 oberzin  108493823  306 Nov 24 23:10 templates
    -rw-r--r--  1 oberzin  108493823  376 Nov 24 13:32 values.yaml

    # Modify template files:
    cd templates/
    (base) USMBB6G8WL-3:templates oberzin$ ls -l
    total 56
    -rw-r--r--  1 oberzin  108493823  1042 Nov 23 10:55 _helpers.tpl
    -rw-r--r--  1 oberzin  108493823  1443 Nov 24 22:37 awsggc-cert.yaml
    -rw-r--r--  1 oberzin  108493823  1939 Nov 24 22:34 awsggc-privkey.yaml
    -rw-r--r--  1 oberzin  108493823  1405 Nov 24 22:22 awsggc-rootca.yaml
    -rw-r--r--  1 oberzin  108493823  1086 Nov 24 22:30 configmap.yaml
    -rw-r--r--  1 oberzin  108493823  1701 Nov 24 23:10 deployment.yaml
    -rw-r--r--  1 oberzin  108493823   407 Nov 23 10:55 service.yaml

    # Modify the configmap.yaml file.
    # Update the "thingArn", "iotHost" and "ggHost" values based on the config.json file from your GGC configuration.
    # LEAVE ALL OTHER LINES UNCHANGED.
    vi configmap.yaml

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: {{ include "awsggc.name" .}}-configmap
    data:
      config.json: |-
        {
          "coreThing" : {
            "caPath" : "root.ca.pem",
            "certPath" : "cert.pem",
            "keyPath" : "private.key",
            "thingArn" : "arn:aws:iot:us-west-2:XXXXX",
            "iotHost" : "XXXXX-ats.iot.us-west-2.amazonaws.com",
            "ggHost" : "greengrass-ats.iot.us-west-2.amazonaws.com",
            "keepAlive" : 600
          },
          "runtime" : {
            "cgroup" : {
              "useSystemd" : "yes"
            }
          },
          "managedRespawn" : false,
          "crypto" : {
            "principals" : {
              "SecretsManager" : {
                "privateKeyPath" : "file:///greengrass/keys/private.key"
              },
              "IoTCertificate" : {
                "privateKeyPath" : "file:///greengrass/keys/private.key",
                "certificatePath" : "file:///greengrass/certs/cert.pem"
              }
            },
            "caPath" : "file:///greengrass/ca/root.ca.pem"
          }
        }
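The three values that change in config.json all carry the AWS region, so a quick consistency check can catch copy-and-paste mistakes. The sketch below is illustrative only: the function name is hypothetical, the `ggHost` pattern is taken from the example in this guide, and in practice the values should be copied from the config.json that AWS generates for your Core.

```python
import json


def build_core_thing(thing_arn, iot_host, keep_alive=600):
    """Build the 'coreThing' block, checking that the regions agree."""
    # ARN layout: arn:aws:iot:<region>:<account-id>:thing/<thing-name>
    arn_fields = thing_arn.split(":")
    if len(arn_fields) < 6 or arn_fields[:3] != ["arn", "aws", "iot"]:
        raise ValueError("not an AWS IoT ARN: " + thing_arn)
    region = arn_fields[3]
    if ".iot.%s.amazonaws.com" % region not in iot_host:
        raise ValueError("iotHost is not in region " + region)
    return {
        "caPath": "root.ca.pem",
        "certPath": "cert.pem",
        "keyPath": "private.key",
        "thingArn": thing_arn,
        "iotHost": iot_host,
        # Pattern assumed from the example value in this guide.
        "ggHost": "greengrass-ats.iot.%s.amazonaws.com" % region,
        "keepAlive": keep_alive,
    }


if __name__ == "__main__":
    # Made-up account id, thing name and endpoint prefix.
    block = build_core_thing(
        "arn:aws:iot:us-west-2:123456789012:thing/Example_Core",
        "example-ats.iot.us-west-2.amazonaws.com",
    )
    print(json.dumps({"coreThing": block}, indent=2))
```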

    # Update the awsggc-cert.yaml file.
    # Paste the contents of the "<awsggcid>-setup/cert/<awsggcid>-cert.pem" file
    # into the awsggc-cert.yaml file.
    # Be sure to maintain indentation as shown in the example below:
    vi awsggc-cert.yaml

    apiVersion: v1
    kind: ConfigMap
    metadata:
      # name: {{ include "awsggc.name" .}}-configmap
      name: awsggc-cert
    data:
      cert.pem: |-
        -----BEGIN CERTIFICATE-----
        Paste cert here
        -----END CERTIFICATE-----

    # Update the awsggc-privkey.yaml file.
    # Paste the contents of the "<awsggcid>-setup/cert/<awsggcid>-private.key" file
    # into the awsggc-privkey.yaml file.
    # Be sure to maintain indentation as shown in the example below:
    vi awsggc-privkey.yaml

    apiVersion: v1
    kind: ConfigMap
    metadata:
      # name: {{ include "awsggc.name" .}}-configmap
      name: awsggc-privkey
    data:
      private.key: |-
        -----BEGIN RSA PRIVATE KEY-----
        Paste key here
        -----END RSA PRIVATE KEY-----

    # Update the awsggc-rootca.yaml file.
    # Paste the contents of the "AmazonRootCA1.pem" file
    # into the awsggc-rootca.yaml file.
    # Be sure to maintain indentation as shown in the example below:
    vi awsggc-rootca.yaml

    apiVersion: v1
    kind: ConfigMap
    metadata:
      # name: {{ include "awsggc.name" .}}-configmap
      name: awsggc-rootca
    data:
      root.ca.pem: |-
        -----BEGIN CERTIFICATE-----
        MIIDQTCCAimgAwIBAgITBmyfz5m/jAo54vB4ikPmljZbyjANBgkqhkiG9w0BAQsF
        ADA5MQswCQYDVQQGEwJVUzEPMA0GA1UEChMGQW1hem9uMRkwFwYDVQQDExBBbWF6
        b24gUm9vdCBDQSAxMB4XDTE1MDUyNjAwMDAwMFoXDTM4MDExNzAwMDAwMFowOTEL
        MAkGA1UEBhMCVVMxDzANBgNVBAoTBkFtYXpvbjEZMBcGA1UEAxMQQW1hem9uIFJv
        b3QgQ0EgMTCCASIwDQYJKoZIhvcNAQEBBQADggEPADCCAQoCggEBALJ4gHHKeNXj
        ca9HgFB0fW7Y14h29Jlo91ghYPl0hAEvrAIthtOgQ3pOsqTQNroBvo3bSMgHFzZM
        9O6II8c+6zf1tRn4SWiw3te5djgdYZ6k/oI2peVKVuRF4fn9tBb6dNqcmzU5L/qw
        IFAGbHrQgLKm+a/sRxmPUDgH3KKHOVj4utWp+UhnMJbulHheb4mjUcAwhmahRWa6
        VOujw5H5SNz/0egwLX0tdHA114gk957EWW67c4cX8jJGKLhD+rcdqsq08p8kDi1L
        93FcXmn/6pUCyziKrlA4b9v7LWIbxcceVOF34GfID5yHI9Y/QCB/IIDEgEw+OyQm
        jgSubJrIqg0CAwEAAaNCMEAwDwYDVR0TAQH/BAUwAwEB/zAOBgNVHQ8BAf8EBAMC
        AYYwHQYDVR0OBBYEFIQYzIU07LwMlJQuCFmcx7IQTgoIMA0GCSqGSIb3DQEBCwUA
        A4IBAQCY8jdaQZChGsV2USggNiMOruYou6r4lK5IpDB/G/wkjUu0yKGX9rbxenDI
        U5PMCCjjmCXPI6T53iHTfIUJrU6adTrCC2qJeHZERxhlbI1Bjjt/msv0tadQ1wUs
        N+gDS63pYaACbvXy8MWy7Vu33PqUXHeeE6V/Uq2V8viTO96LXFvKWlJbYK8U90vv
        o/ufQJVtMVT8QtPHRh8jrdkPSHCa2XV4cdFyQzR1bldZwgJcJmApzyMZFo6IQ6XU
        5MsI+yMRQ+hDKXJioaldXgjUkK642M4UwtBV8ob2xJNDd2ZhwLnoQdeXeGADbkpy
        rqXRfboQnoZsG4q5WTP468SQvvG5
        -----END CERTIFICATE-----
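Because the PEM text sits inside a YAML block scalar (`|-`), every pasted line has to be indented deeper than its key. Rather than indenting by hand, the ConfigMap text can be generated. The sketch below is one possible helper, not part of the PCEI repo; the function name and the four-space body indent are assumptions.

```python
import textwrap


def render_pem_configmap(name, key, pem_text, indent=4):
    """Return ConfigMap YAML text with pem_text as an indented block scalar."""
    # textwrap.indent prefixes every non-empty line with the given string.
    body = textwrap.indent(pem_text.strip(), " " * indent)
    lines = [
        "apiVersion: v1",
        "kind: ConfigMap",
        "metadata:",
        "  name: " + name,
        "data:",
        "  " + key + ": |-",
        body,
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Placeholder PEM body; read the real file contents in practice.
    pem = "-----BEGIN CERTIFICATE-----\nQUJD\nREVG\n-----END CERTIFICATE-----\n"
    print(render_pem_configmap("awsggc-cert", "cert.pem", pem))
```

The same helper applies to the certificate, private key and root CA files by changing the `name` and `key` arguments.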

Create a tar file with Helm charts for the AWS Greengrass Core App:

    # Change to the "pcei" directory:
    cd ../..

    # Create the tar file
    tar -czvf awsggc1.tar awsggc1/
    awsggc1
    awsggc1/Chart.yaml
    awsggc1/.helmignore
    awsggc1/templates
    awsggc1/values.yaml
    awsggc1/templates/deployment.yaml
    awsggc1/templates/service.yaml
    awsggc1/templates/awsggc-rootca.yaml
    awsggc1/templates/configmap.yaml
    awsggc1/templates/_helpers.tpl
    awsggc1/templates/awsggc-privkey.yaml
    awsggc1/templates/awsggc-cert.yaml

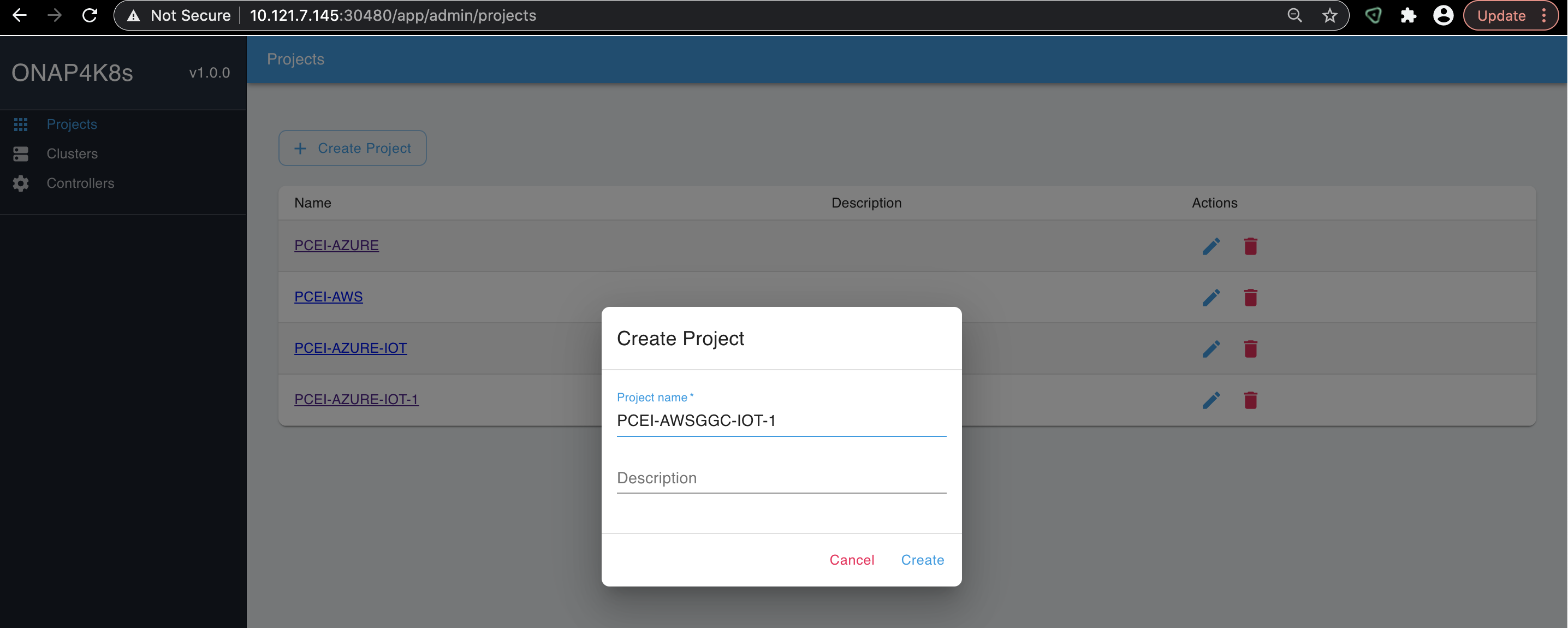

Access EMCO UI → Projects → Create Project:

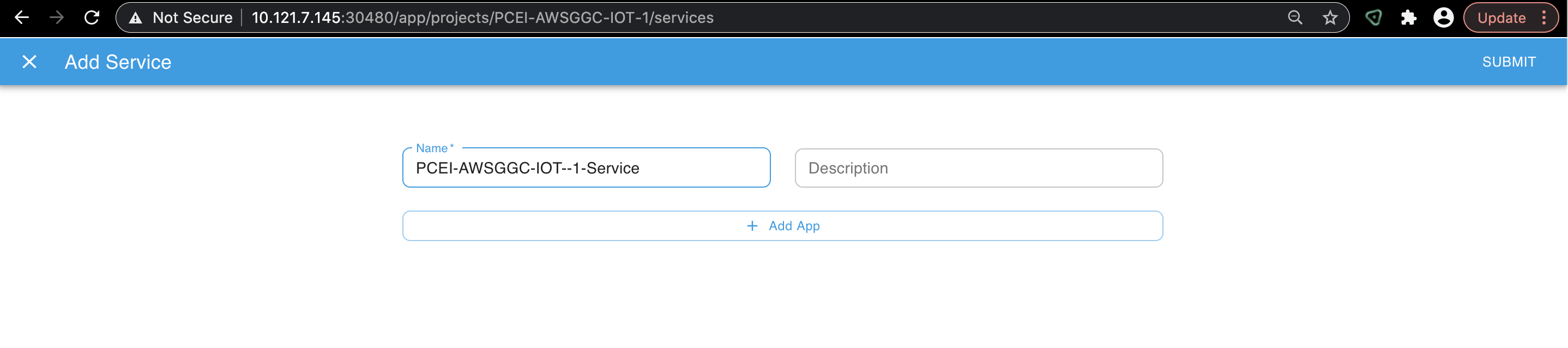

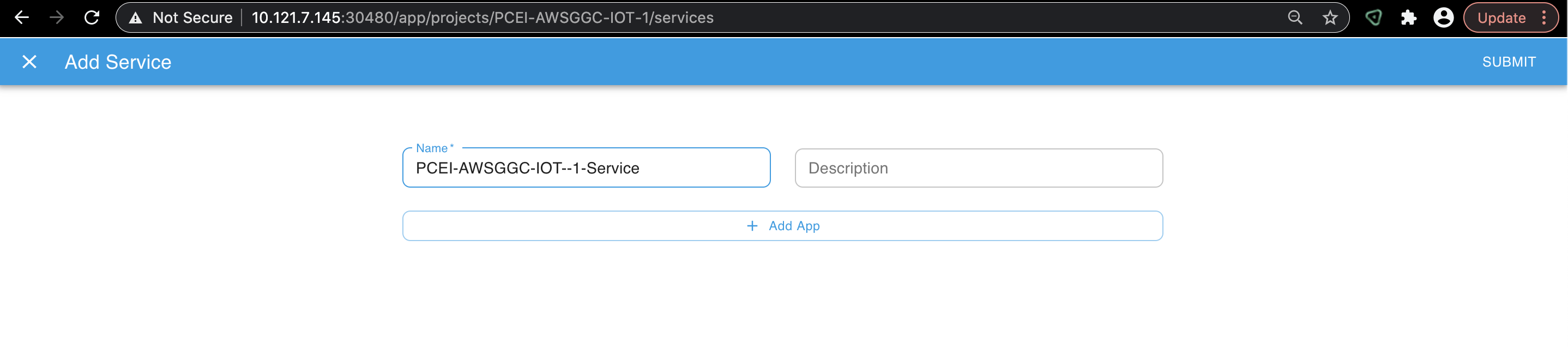

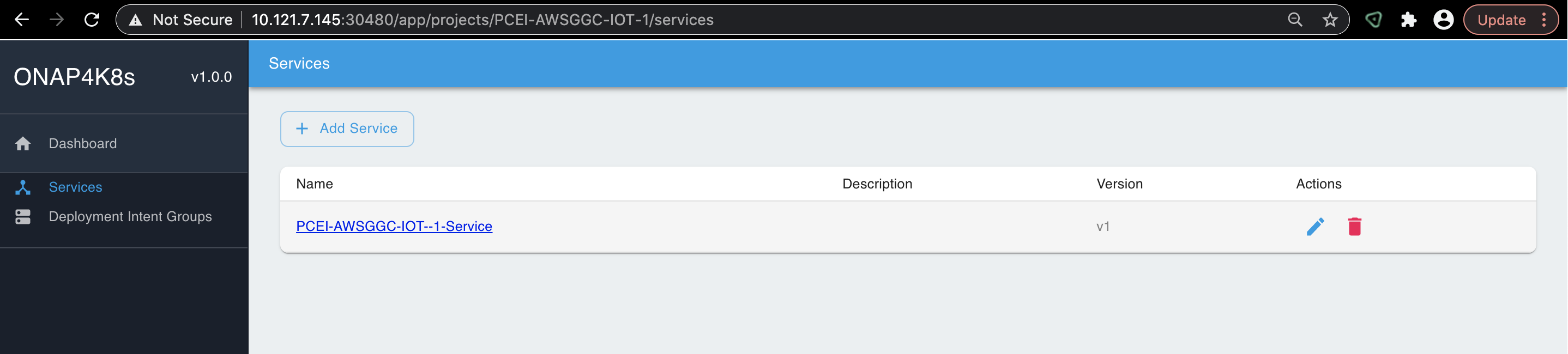

Select the just created Project - "PCEI-AWSGGC-IOT-1" in this example and click "Add Service":

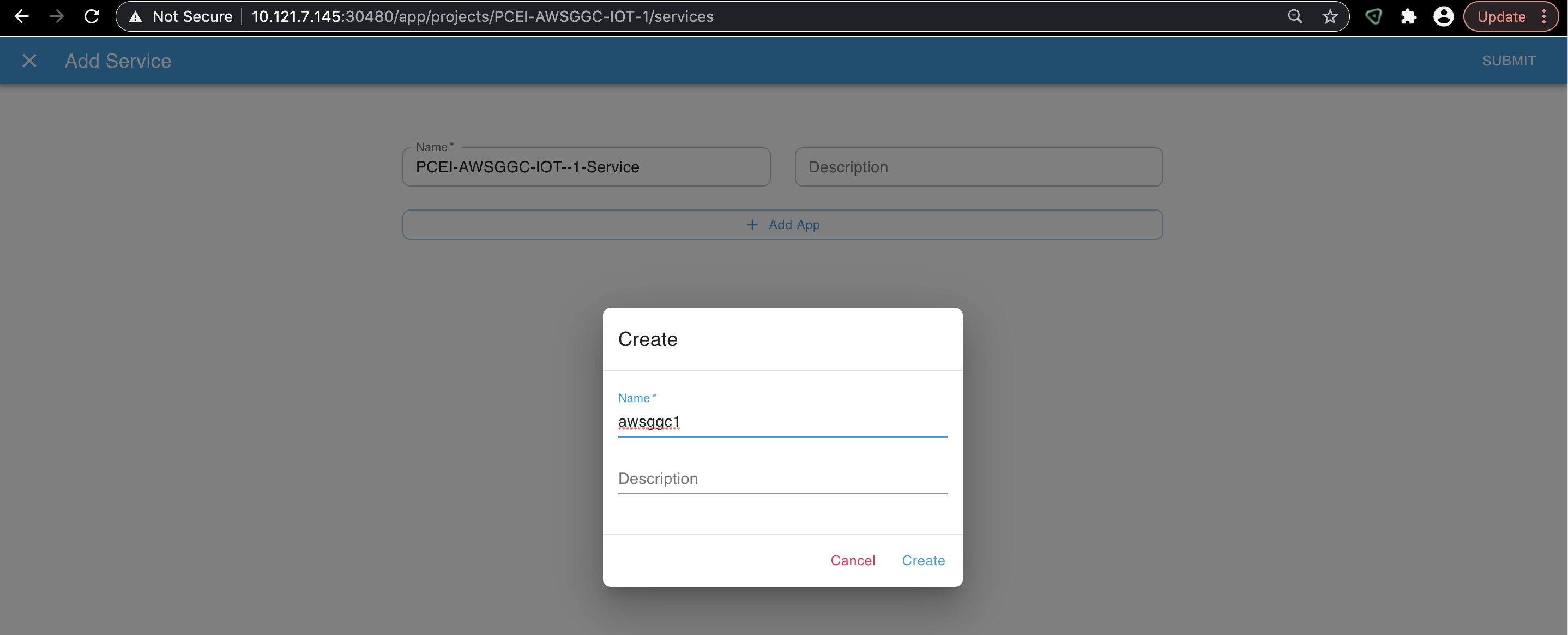

Click on "+ Add App" and add the "awsggc1" app. Make sure to use the "awsggc1" name to match the app tar file name without the extension:

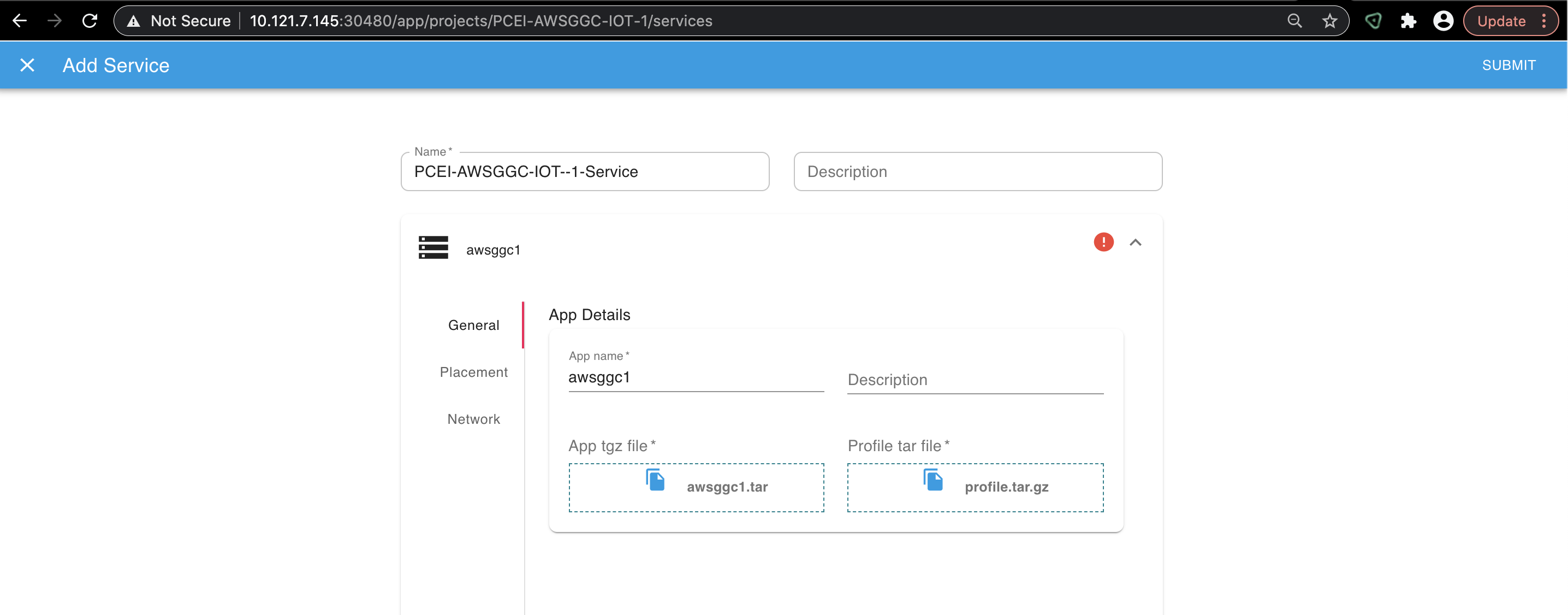


Define the AWS GGC app by adding the App tar file and the Profile tar file:

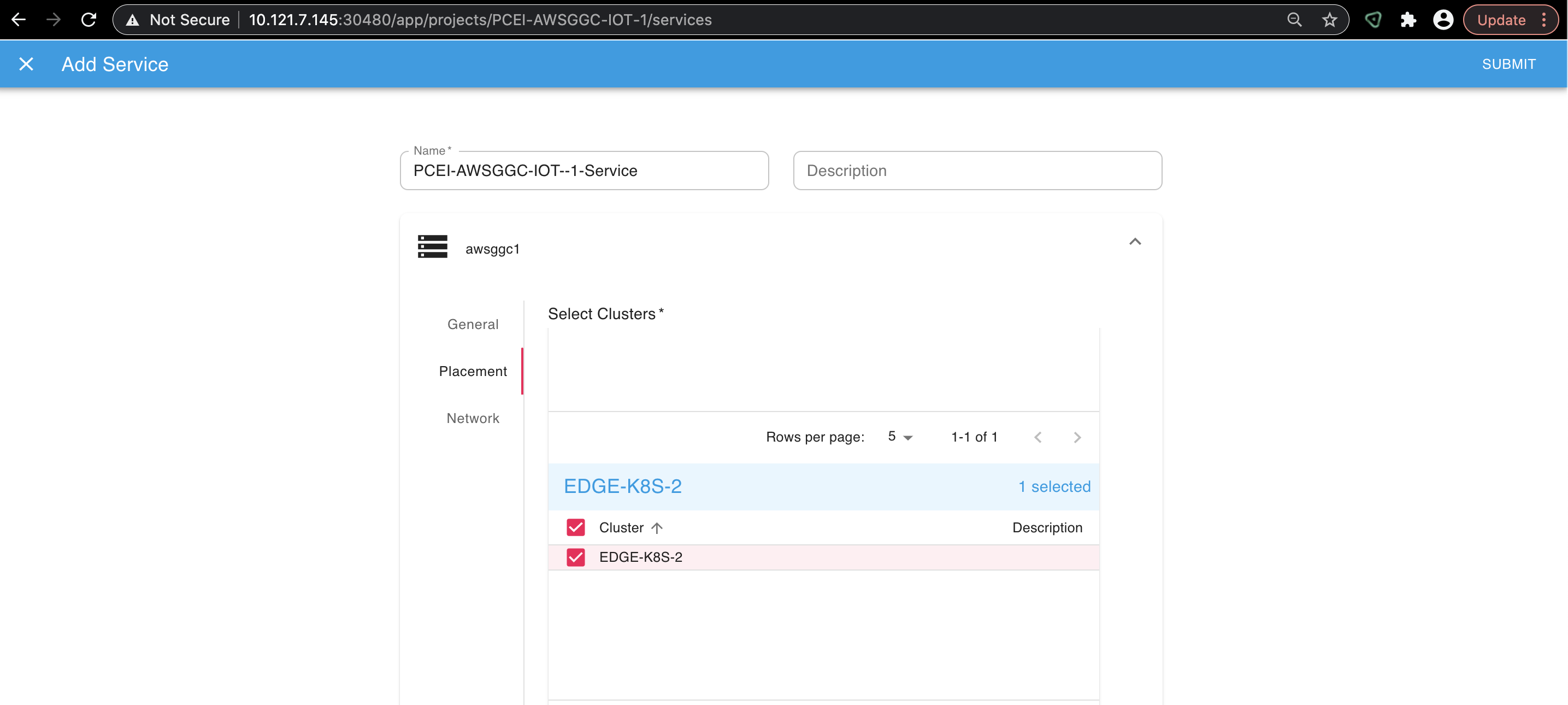

Select "Placement" on the left and select the "EDGE-K8S-2" cluster:

Click "SUBMIT" in the upper right corner.

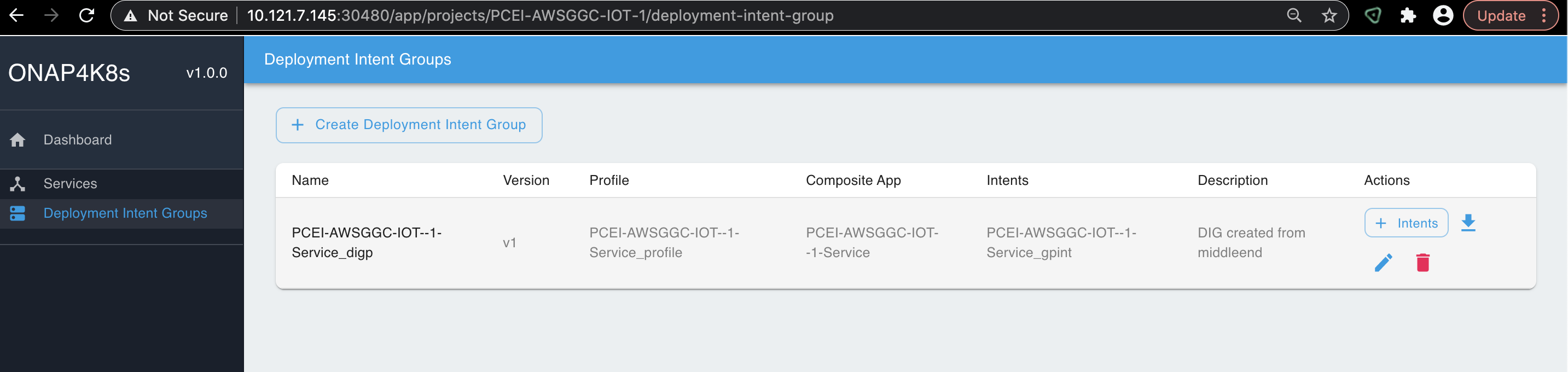

Go to "Deployment Intent Groups" on the left and deploy the AWS GGC App by clicking on the blue down-arrow:

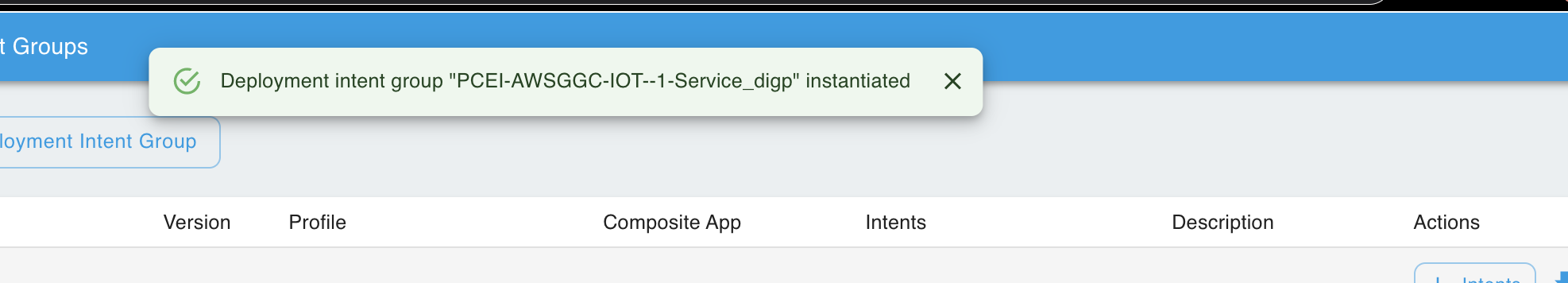

You should see the message in GREEN below:

Connect to the EDGE-K8S-2 cluster (edge_k8s-2 VM):

    ssh onaplab@10.121.7.146

    # Verify that the AWS GGC pod is Running in the default namespace
    kubectl get pods
    NAME                        READY   STATUS    RESTARTS   AGE
    v1-awsggc-5c84b4ccf-vqn5x   1/1     Running   0          3m34s

    # Identify the AWS GGC Docker container:
    onaplab@localhost:~$ sudo docker ps |grep awsggc
    e93b85bbbf04 216483018798.dkr.ecr.us-west-2.amazonaws.com/aws-iot-greengrass "/bin/bash" 4 minutes ago Up 4 minutes k8s_awsggc_v1-awsggc-5c84b4ccf-vqn5x_default_5dfb86a9-fdd2-479c-9852-3341f9b002b0_0

    # Connect to the AWS GGC container:
    sudo docker exec -it e93b85bbbf04 /bin/bash
    bash-4.2#

    # Examine the greengrass core logs:
    bash-4.2# cd /greengrass/ggc/var/log/system/
    bash-4.2# ls -l
    total 12
    drwx------ 2 root root 4096 Dec 30 01:47 localwatch
    -rw------- 1 root root 8015 Dec 30 01:47 runtime.log
    bash-4.2# cat runtime.log

[2020-12-30T01:47:50.015Z][INFO]-===========================================

[2020-12-30T01:47:50.015Z][INFO]-Greengrass Version: 1.11.0-RC2

[2020-12-30T01:47:50.015Z][INFO]-Greengrass Root: /greengrass

[2020-12-30T01:47:50.015Z][INFO]-Greengrass Write Directory: /greengrass/ggc

[2020-12-30T01:47:50.015Z][INFO]-Group File Directory: /greengrass/ggc/deployment/group

[2020-12-30T01:47:50.016Z][INFO]-Default Lambda UID: 999

[2020-12-30T01:47:50.016Z][INFO]-Default Lambda GID: 997

[2020-12-30T01:47:50.016Z][DEBUG]-Go version: go1.12.11

[2020-12-30T01:47:50.016Z][DEBUG]-CoreThing Connection Config:

{

"Region": "us-west-2",

"ThingArn": "arn:aws:iot:us-west-2:XXXXX",

"IoTMQTTEndpoint": "XXXXX-ats.iot.us-west-2.amazonaws.com:8883",

"IoTHTTPEndpoint": "XXXXX-ats.iot.us-west-2.amazonaws.com:8443",

"GGMQTTPort": 8883,

"GGHTTPEndpoint": "greengrass-ats.iot.us-west-2.amazonaws.com:8443",

"GGDaemonPort": 8000,

"GGMQTTKeepAlive": 600,

"GGMQTTMaxConnectionRetryInterval": 60,

"GGMQTTConnectTimeout": 30,

"GGMQTTPingTimeout": 30,

"GGMQTTOperationTimeout": 5,

"GGHTTPTimeout": 60,

"FIPSMode": false,

"CoreClientId": ""

}

[2020-12-30T01:47:50.016Z][DEBUG]-System Config:

{

"tmpDirectory": "",

"shadowSyncTimeout": 0,

"useOverlayWithTmpfs": false,

"disablePivotRoot": false

}

[2020-12-30T01:47:50.016Z][DEBUG]-Runtime Config:

{

"maxWorkItemCount": 1024,

"schedulingFrequency": 1000,

"maxConcurrentLimit": 25,

"lruSize": 25,

"cgroup": {

"useSystemd": true,

"mountPoint": null

},

"postStartHealthCheckTimeout": 60000,

"allowFunctionsToRunAsRoot": false,

"onSystemComponentsFailure": 0,

"systemComponentAuthTimeout": 5000,

"mountAllBlockDevices": false

}

[2020-12-30T01:47:50.016Z][INFO]-===========================================

[2020-12-30T01:47:50.016Z][DEBUG]-[3]Requested certificate load [file:///greengrass/certs/cert.pem] with error: [<nil>]

[2020-12-30T01:47:50.016Z][INFO]-The current core is using the AWS IoT certificates with fingerprint. {"fingerprint": "10f8f09c63075139b8daff9fcbd1ff8d221cb505f421895171ea2b4c85231b82"}

[2020-12-30T01:47:50.016Z][INFO]-Will persist worker process info. {"dir": "/greengrass/ggc/packages/1.11.0/var/worker/processes"}

[2020-12-30T01:47:50.017Z][INFO]-Will persist worker process info. {"dir": "/greengrass/ggc/packages/1.11.0/var/worker/processes"}

[2020-12-30T01:47:50.017Z][DEBUG]-loading subscriptions {"trie": "map[]"}

[2020-12-30T01:47:50.017Z][INFO]-No proxy URL found.

[2020-12-30T01:47:50.018Z][DEBUG]-[3]Requested certificate load [file:///greengrass/ca/root.ca.pem] with error: [<nil>]

[2020-12-30T01:47:50.018Z][DEBUG]-[3]Requested key load [file:///greengrass/keys/private.key] with error: [<nil>]

[2020-12-30T01:47:50.018Z][DEBUG]-[3]Requested certificate load [file:///greengrass/certs/cert.pem] with error: [<nil>]

[2020-12-30T01:47:50.018Z][INFO]-No proxy URL found.

[2020-12-30T01:47:50.021Z][INFO]-Connecting with MQTT. {"endpoint": "a1hd4mq2lxwoaa-ats.iot.us-west-2.amazonaws.com:8883", "clientId": "PCEI_Core1"}

[2020-12-30T01:47:50.021Z][DEBUG]-MQTT connection attempt. {"attemptId": "tcbM", "clientId": "PCEI_Core1"}

[2020-12-30T01:47:50.021Z][DEBUG]-New MQTT connection attempt

[2020-12-30T01:47:50.021Z][DEBUG]-Acquired lock for connection attempt

[2020-12-30T01:47:50.021Z][DEBUG]-Disconnect client {"force": false}

[2020-12-30T01:47:50.022Z][DEBUG]-Reloading function registry.

[2020-12-30T01:47:50.022Z][DEBUG]-[3]Requested certificate load [file:///greengrass/certs/cert.pem] with error: [<nil>]

[2020-12-30T01:47:50.022Z][INFO]-The current core is using the AWS IoT certificates with fingerprint. {"fingerprint": "10f8f09c63075139b8daff9fcbd1ff8d221cb505f421895171ea2b4c85231b82"}

[2020-12-30T01:47:50.212Z][DEBUG]-Connection request is valid

[2020-12-30T01:47:50.212Z][DEBUG]-Finished connection attempt, waiting for OnConnect Handler

[2020-12-30T01:47:50.212Z][DEBUG]-Releasing lock for connection attempt

[2020-12-30T01:47:50.212Z][INFO]-MQTT connection successful. {"attemptId": "tcbM", "clientId": "PCEI_Core1"}

[2020-12-30T01:47:50.212Z][INFO]-MQTT connection established. {"endpoint": "a1hd4mq2lxwoaa-ats.iot.us-west-2.amazonaws.com:8883", "clientId": "PCEI_Core1"}

[2020-12-30T01:47:50.212Z][DEBUG]-Handle new connection event

[2020-12-30T01:47:50.212Z][DEBUG]-Acquired lock for new connection event

[2020-12-30T01:47:50.212Z][DEBUG]-Update status to connected

[2020-12-30T01:47:50.213Z][DEBUG]-Releasing lock for new connection event

[2020-12-30T01:47:50.213Z][DEBUG]-Entering OnConnect. {"clientId": "PCEI_Core1", "count": 1}

[2020-12-30T01:47:50.213Z][INFO]-MQTT connection connected. Start subscribing. {"clientId": "PCEI_Core1", "count": 1}

[2020-12-30T01:47:50.213Z][INFO]-Daemon connected to cloud.

[2020-12-30T01:47:50.213Z][DEBUG]-Subscribe retry configuration. {"IntervalInSeconds": 60, "count": 1}

[2020-12-30T01:47:50.213Z][INFO]-Start subscribing. {"numOfTopics": 5, "clientId": "PCEI_Core1"}

[2020-12-30T01:47:50.213Z][INFO]-Trying to subscribe to topic $aws/things/PCEI_Core1-gda/shadow/get/accepted

[2020-12-30T01:47:50.213Z][DEBUG]-Subscribe {"topic": "$aws/things/PCEI_Core1-gda/shadow/get/accepted", "qos": 0}

[2020-12-30T01:47:50.263Z][DEBUG]-Subscribed to topic. {"topic": "$aws/things/PCEI_Core1-gda/shadow/get/accepted"}

[2020-12-30T01:47:50.263Z][DEBUG]-Publish {"topic": "$aws/things/PCEI_Core1-gda/shadow/get", "qos": 1}

[2020-12-30T01:47:50.289Z][INFO]-Trying to subscribe to topic $aws/things/PCEI_Core1-gda/shadow/update/delta

[2020-12-30T01:47:50.289Z][DEBUG]-Subscribe {"topic": "$aws/things/PCEI_Core1-gda/shadow/update/delta", "qos": 0}

[2020-12-30T01:47:50.373Z][DEBUG]-Subscribed to topic. {"topic": "$aws/things/PCEI_Core1-gda/shadow/update/delta"}

[2020-12-30T01:47:50.373Z][INFO]-Trying to subscribe to topic $aws/things/PCEI_Core1-gcf/shadow/get/rejected

[2020-12-30T01:47:50.373Z][DEBUG]-Subscribe {"topic": "$aws/things/PCEI_Core1-gcf/shadow/get/rejected", "qos": 0}

[2020-12-30T01:47:50.425Z][DEBUG]-Subscribed to topic. {"topic": "$aws/things/PCEI_Core1-gcf/shadow/get/rejected"}

[2020-12-30T01:47:50.425Z][INFO]-Trying to subscribe to topic $aws/things/PCEI_Core1-gcf/shadow/get/accepted

[2020-12-30T01:47:50.425Z][DEBUG]-Subscribe {"topic": "$aws/things/PCEI_Core1-gcf/shadow/get/accepted", "qos": 0}

[2020-12-30T01:47:50.494Z][DEBUG]-Subscribed to topic. {"topic": "$aws/things/PCEI_Core1-gcf/shadow/get/accepted"}

[2020-12-30T01:47:50.494Z][DEBUG]-Publish {"topic": "$aws/things/PCEI_Core1-gcf/shadow/get", "qos": 1}

[2020-12-30T01:47:50.52Z][INFO]-Trying to subscribe to topic $aws/things/PCEI_Core1-gcf/shadow/update/delta

[2020-12-30T01:47:50.52Z][DEBUG]-Subscribe {"topic": "$aws/things/PCEI_Core1-gcf/shadow/update/delta", "qos": 0}

[2020-12-30T01:47:50.535Z][INFO]-Updating with update request. {"aggregationIntervalSeconds": 3600, "publishIntervalSeconds": 86400}

[2020-12-30T01:47:50.535Z][INFO]-Config update is in pending state. {"aggregationIntervalSeconds": 3600, "publishIntervalSeconds": 86400}

[2020-12-30T01:47:50.535Z][INFO]-Start metrics config updating. {"aggregationIntervalSeconds": 3600, "publishIntervalSeconds": 86400}

[2020-12-30T01:47:50.535Z][INFO]-Metric config update succeeded.

[2020-12-30T01:47:50.536Z][INFO]-Received signal to terminate aggregation schedule

[2020-12-30T01:47:50.536Z][INFO]-Received signal to terminate upload schedule

[2020-12-30T01:47:50.536Z][DEBUG]-Report config update to the topic {"topic": "$aws/things/PCEI_Core1-gcf/shadow/update", "data": "{\"state\":{\"reported\":{\"telemetryConfiguration\":{\"publishIntervalSeconds\":86400,\"aggregationIntervalSeconds\":3600}}}}"}

[2020-12-30T01:47:50.536Z][DEBUG]-Publish {"topic": "$aws/things/PCEI_Core1-gcf/shadow/update", "qos": 1}

[2020-12-30T01:47:50.562Z][INFO]-Update metric config reported to the cloud.

[2020-12-30T01:47:50.577Z][DEBUG]-Subscribed to topic. {"topic": "$aws/things/PCEI_Core1-gcf/shadow/update/delta"}

[2020-12-30T01:47:50.577Z][INFO]-All topics subscribed. {"clientId": "PCEI_Core1"}

bash-4.2#
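
The log excerpt above can also be checked mechanically rather than by eye. The following is a minimal sketch, assuming the log text is piped in on stdin; the function name `ggc_log_connected` is hypothetical, and the two marker strings are copied from the log lines shown above.

```shell
# Sketch: confirm that a Greengrass core log shows a completed cloud connection.
# Reads log text on stdin; prints "connected" and returns 0 only if both markers appear.
# The function name is an assumption made for this example.
ggc_log_connected() {
    log="$(cat)"
    # Marker strings taken from the log excerpt in this guide.
    if printf '%s\n' "$log" | grep -q 'MQTT connection successful' &&
       printf '%s\n' "$log" | grep -q 'All topics subscribed'; then
        echo "connected"
        return 0
    fi
    echo "not connected"
    return 1
}

# Example usage (the log file path is deployment-specific and not shown here):
#   cat <path-to-greengrass-runtime-log> | ggc_log_connected
```

Checking for both markers avoids reporting success when the MQTT session came up but the shadow topic subscriptions did not complete.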

The deployment of the Location API App is an example of deploying a 3rd-Party Edge (3PE) App without the Public Cloud Core (PCC) backend/provisioning. The figure below shows the process of deploying the Location API App. Note that the MNO infrastructure and Location Service are shown as an example only (the current implementation of the PCEI Location API App does not support integration with MNO Location Services).

Clone the PCEI repo and build the Docker image for the Location API App. Perform the following tasks on the EDGE-K8S-2 cluster:

    ssh onaplab@10.121.7.146
    # Clone the PCEI repo
    git clone "https://gerrit.akraino.org/r/pcei"
    cd pcei/locationAPI/nodejs
    # Build the Docker image
    sudo docker build -f Dockerfile . -t pceilocapi:latest
    # Run local Docker repository
    sudo docker run -d -p 5000:5000 --restart=always --name registry registry:2
    # Push the PCEI Location API image to local Docker repository:
    sudo docker tag pceilocapi localhost:5000/pceilocapi
    sudo docker push localhost:5000/pceilocapi
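
After the push, it can be useful to confirm that the local registry actually lists the image. The sketch below assumes the registry started above answers the Docker Registry HTTP API v2 catalog request on port 5000; the helper name `registry_has_repo` is hypothetical, and it does a simple string match on the JSON body rather than full JSON parsing.

```shell
# Sketch: check that a repository name appears in a Docker Registry v2 catalog response.
# Reads the JSON body on stdin, e.g. {"repositories":["pceilocapi"]}.
# Simple quoted-string match; not a full JSON parser.
registry_has_repo() {
    repo="$1"
    grep -q "\"${repo}\""
}

# Example usage on the host running the registry container:
#   curl -s http://localhost:5000/v2/_catalog | registry_has_repo pceilocapi && echo "image pushed"
```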

To package the PCEI Location API App, download the Helm charts to a local directory on your laptop and create the App tar file:

    git clone "https://gerrit.akraino.org/r/pcei"
    cd pcei
    mkdir pceilocapi1
    cp -a pceilocapihelm/. pceilocapi1/
    cd pceilocapi1
    ls -l
    total 16
    -rw-r--r-- 1 oberzin staff 120 Dec 29 23:24 Chart.yaml
    drwxr-xr-x 6 oberzin staff 192 Dec 29 23:34 templates
    -rw-r--r-- 1 oberzin staff 376 Dec 29 23:34 values.yaml
    cd ..
    tar -czvf pceilocapi1.tar pceilocapi1/
    a pceilocapi1
    a pceilocapi1/Chart.yaml
    a pceilocapi1/.helmignore
    a pceilocapi1/templates
    a pceilocapi1/values.yaml
    a pceilocapi1/templates/deployment.yaml
    a pceilocapi1/templates/service.yaml
    a pceilocapi1/templates/configmap.yaml
    a pceilocapi1/templates/_helpers.tpl
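
Before uploading the archive to EMCO, its contents can be verified against the file list printed by `tar` above. This is a minimal sketch, assuming a gzip-compressed archive as produced by `tar -czvf`; the function name `chart_archive_ok` and the choice of which files to require are assumptions made for illustration.

```shell
# Sketch: verify that a packaged App archive contains the expected Helm chart files.
# $1 = archive path, $2 = top-level chart directory name inside the archive.
# The list of required files is an assumption based on the tar output in this guide.
chart_archive_ok() {
    archive="$1"
    chart="$2"
    # List archive members; fail early if the archive cannot be read.
    listing="$(tar -tzf "$archive")" || return 1
    for f in Chart.yaml values.yaml templates/deployment.yaml templates/service.yaml; do
        # -F: fixed string, -x: whole-line match, -q: quiet.
        if ! printf '%s\n' "$listing" | grep -Fxq "${chart}/${f}"; then
            echo "missing: ${chart}/${f}"
            return 1
        fi
    done
    echo "ok"
}

# Example usage:
#   chart_archive_ok pceilocapi1.tar pceilocapi1
```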

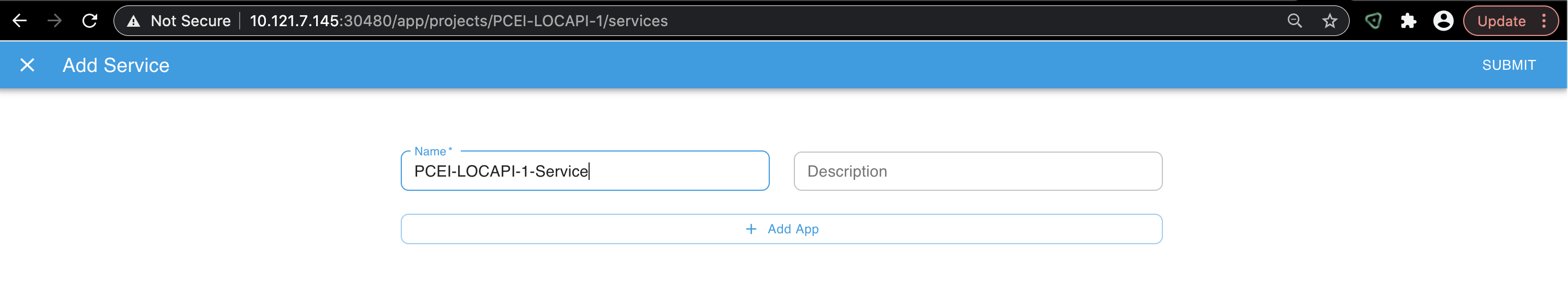

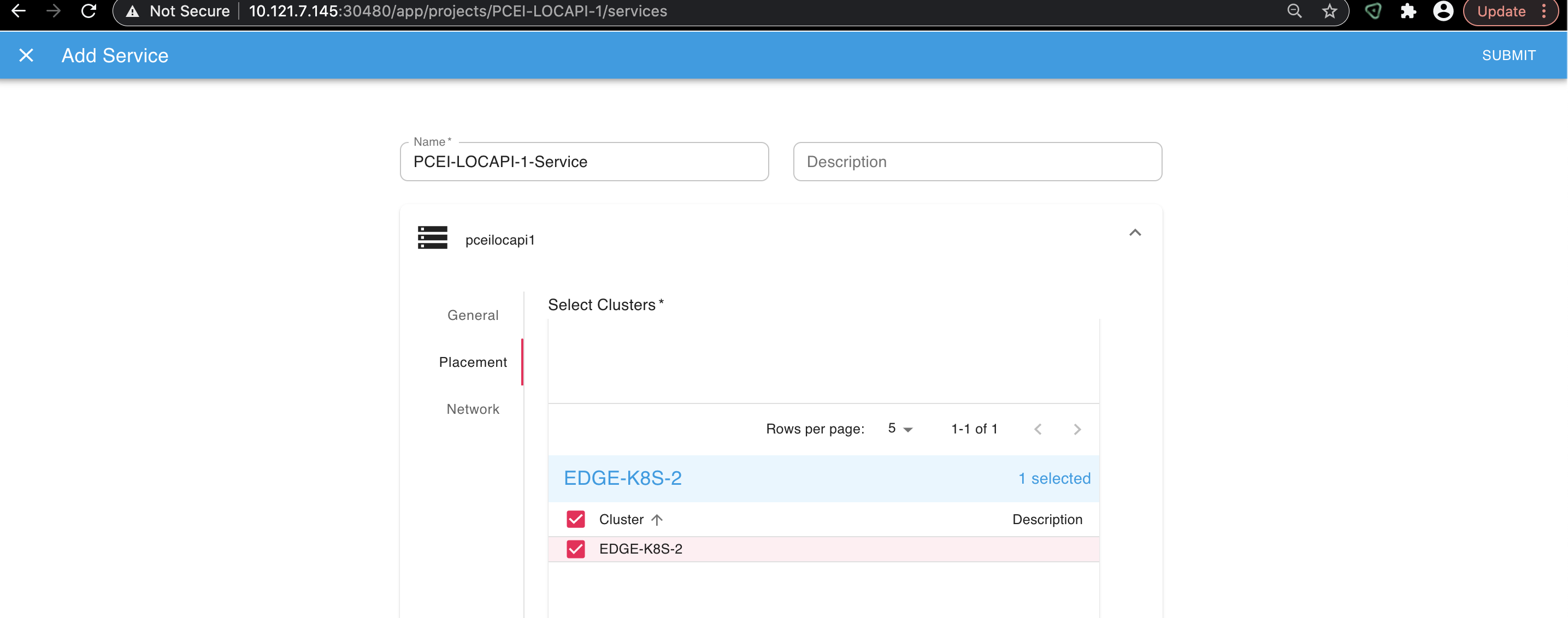

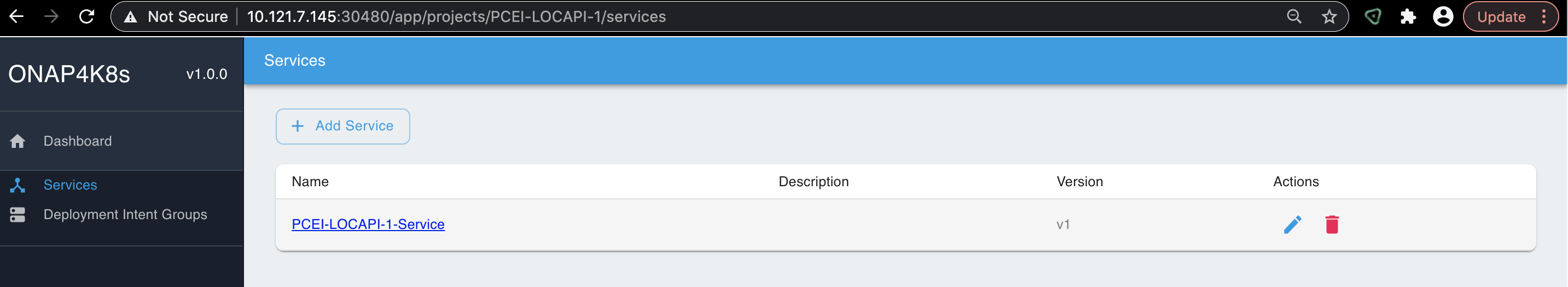

Access EMCO UI. Define a Project and a Service for PCEI Location API:

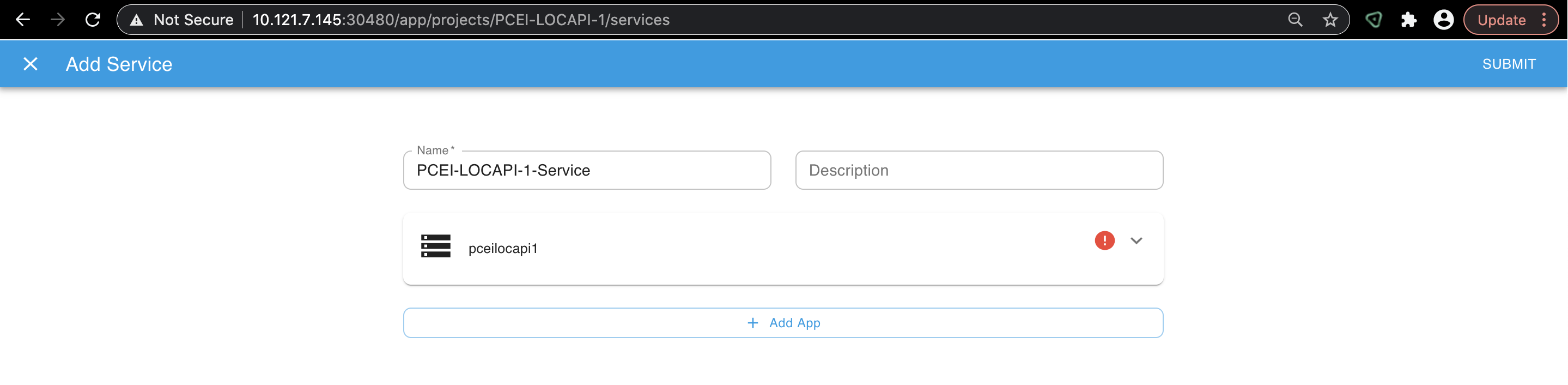

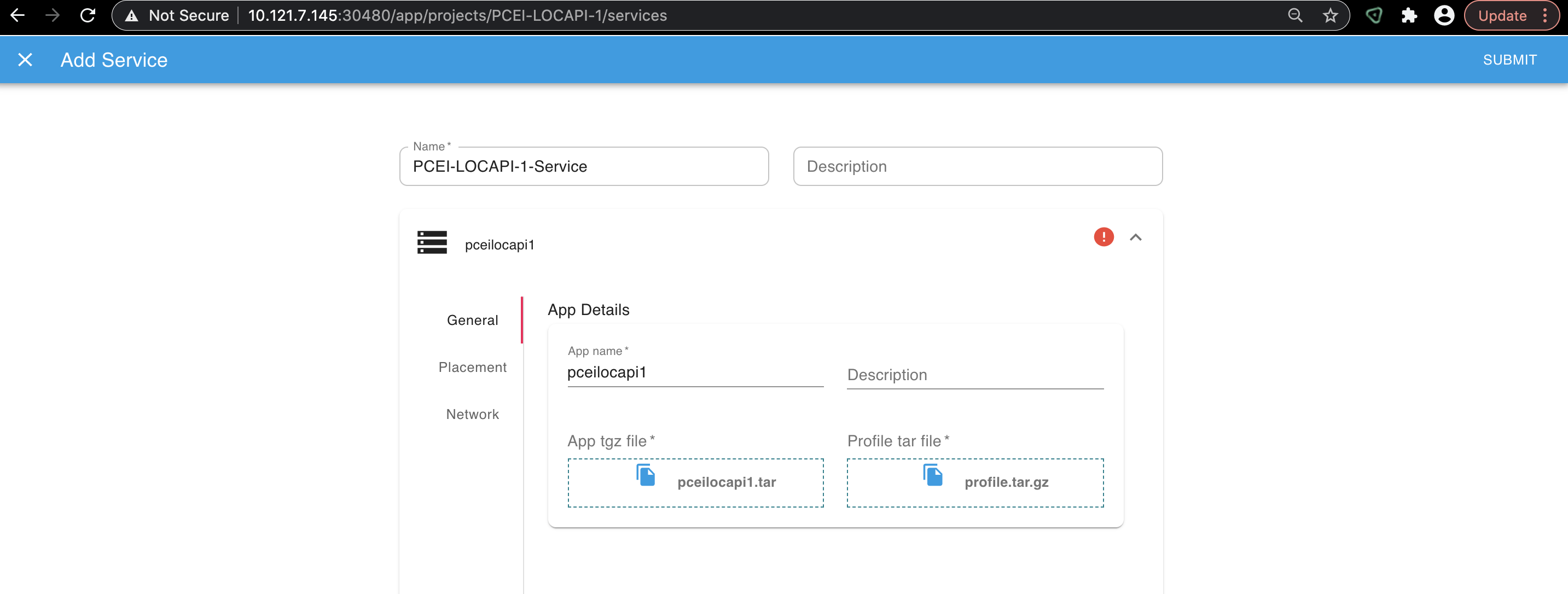

Add the PCEI Location API App:

Associate the App tar file and the Profile tar file with the PCEI Location API App:

Select "Placement" and place the PCEI Location API App onto the "EDGE-K8S-2" cluster:

Click "SUBMIT"

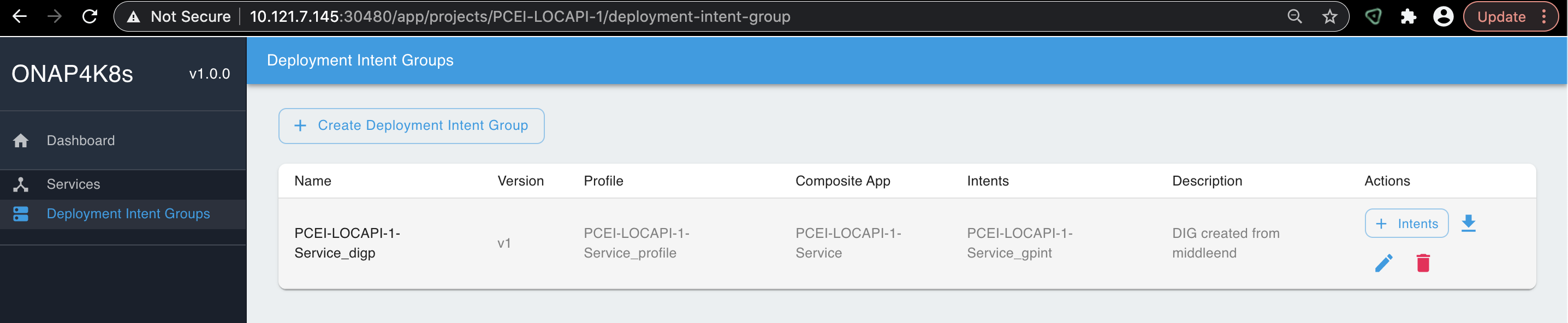

Select "Deployment Intent Groups":

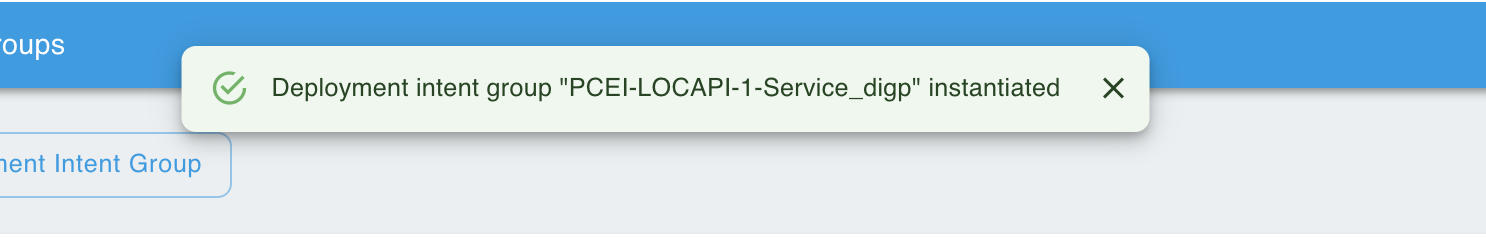

Click the blue down-arrow to deploy the PCEI Location API App:

Verify the PCEI Location API App deployment on the "EDGE-K8S-2" cluster:

    # SSH to edge_k8s-1 VM:
    ssh onaplab@10.121.7.146
    # Verify that PCEI Location API App pod is Running
    kubectl get pods
    NAME READY STATUS RESTARTS AGE
    v1-pceilocapi-64477bb5d8-dzjh7 1/1 Running 0 107s
    onaplab@localhost:~$
    # Verify K8S service
    kubectl get service
    NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
    pceilocapi-service NodePort 10.244.20.128 <none> 8081:30808/TCP 26m
    # Verify PCEI Location API logs
    kubectl logs v1-pceilocapi-64477bb5d8-dzjh7
    > location-api@1.0.0 prestart /usr/src/app
    > npm install
    audited 147 packages in 1.235s
    2 packages are looking for funding
    run `npm fund` for details
    found 8 vulnerabilities (2 low, 2 moderate, 4 high)
    run `npm audit fix` to fix them, or `npm audit` for details
    > location-api@1.0.0 start /usr/src/app
    > node index.js
    Your server is listening on port 8081 (http://localhost:8081)
    Swagger-ui is available on http://localhost:8081/docs
    # Verify PCEI Location API Docker container
    sudo docker ps |grep pcei
    128842966f27 localhost:5000/pceilocapi "docker-entrypoint.s…" 4 minutes ago Up 4 minutes k8s_pceilocapi_v1-pceilocapi-64477bb5d8-dzjh7_default_7732dd43-4e61-48a7-bc2c-70770895fa6f_0
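
The pod status and the NodePort used later to reach the docs page can be read from the `kubectl` table output with small helpers. The sketch below assumes the default column layout shown above (STATUS in the third column of `kubectl get pods`, PORT(S) in the fifth column of `kubectl get service`); the function names `pod_is_running` and `service_node_port` are hypothetical.

```shell
# Sketch: helpers that read `kubectl get` table output on stdin.
# Column positions are assumed to match the default output shown in this guide.

# Succeeds if a pod whose name starts with $1 reports STATUS "Running".
pod_is_running() {
    awk -v prefix="$1" '
        index($1, prefix) == 1 && $3 == "Running" { found = 1 }
        END { exit !found }
    '
}

# Prints the NodePort of service $1 from a PORT(S) value such as 8081:30808/TCP.
service_node_port() {
    awk -v svc="$1" '
        $1 == svc { split($5, parts, "[:/]"); print parts[2] }
    '
}

# Example usage on the edge cluster VM:
#   kubectl get pods | pod_is_running v1-pceilocapi && echo "pod is Running"
#   kubectl get service | service_node_port pceilocapi-service
```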

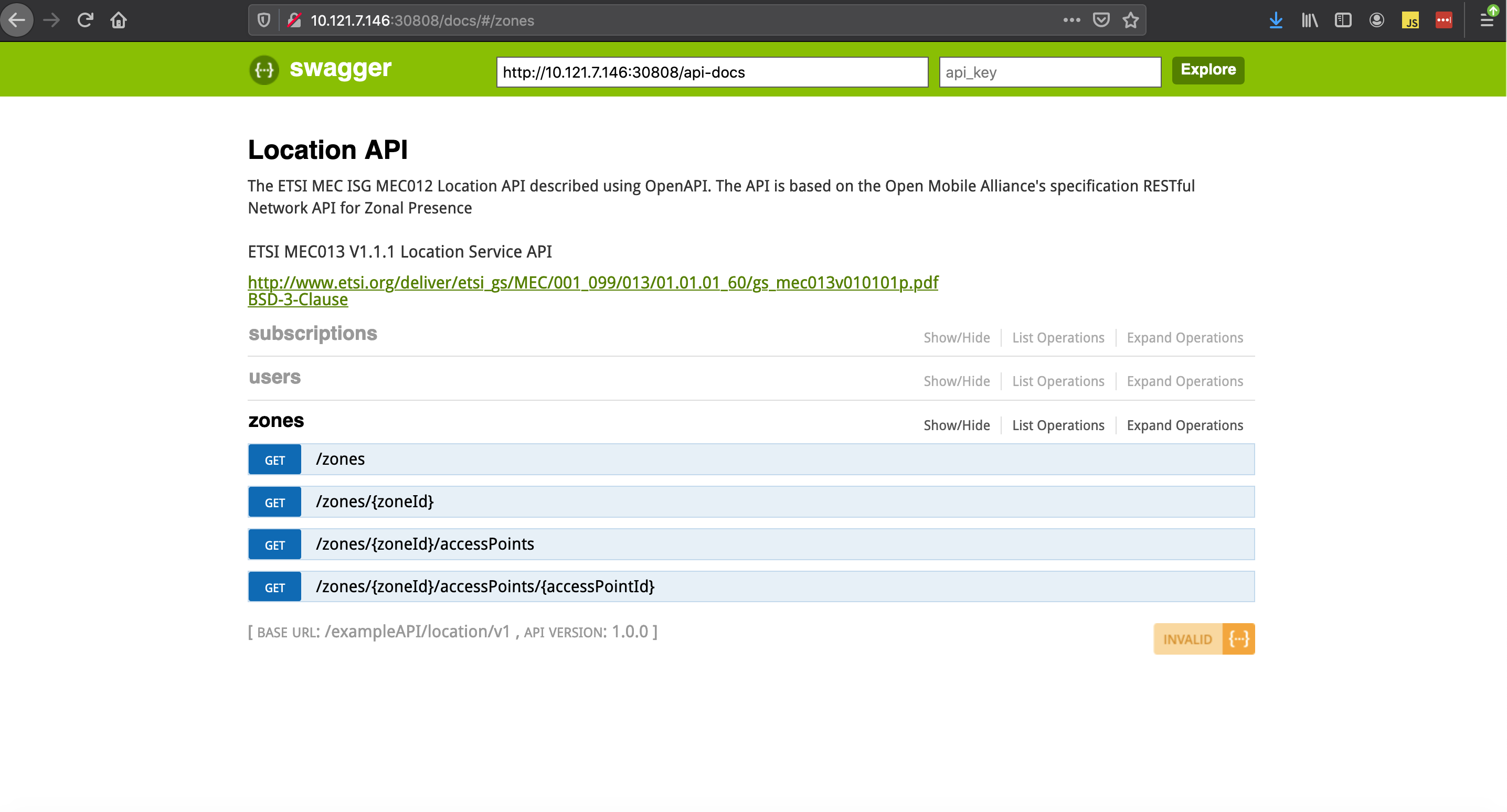

Access the PCEI Location API App doc page:

http://<EDGE-K8S-1 VM IP Address>:<pceilocapi pod service port>/docs

http://10.121.7.146:30808/docs
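
The same page can be probed from a terminal instead of a browser. This is a minimal sketch, assuming `curl` is installed and the VM is reachable from where the command runs; the function names `docs_url` and `docs_reachable` are hypothetical, and the IP address and port are the example values used in this guide.

```shell
# Sketch: build and probe the Location API docs URL.

# Prints the docs URL for a node IP ($1) and NodePort ($2).
docs_url() {
    printf 'http://%s:%s/docs\n' "$1" "$2"
}

# Returns 0 if the docs page answers with HTTP 200.
# -L follows redirects, -s silences progress output, --max-time bounds the wait.
docs_reachable() {
    code="$(curl -s -L -o /dev/null -w '%{http_code}' --max-time 5 "$(docs_url "$1" "$2")")"
    [ "$code" = "200" ]
}

# Example usage with the example address from this guide:
#   docs_reachable 10.121.7.146 30808 && echo "docs page is up"
```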