Goal

Sdewan CRD Controller (config agent) is the controller of Sdewan CRDs. With the CRD Controller, we are able to deploy Sdewan CRs to configure CNF rules. This page uses the following terms, which we define here.

...

To deploy a CNF, the user needs to create one CNF deployment and some Sdewan rule CRs. In a Kubernetes namespace, there could be more than one CNF deployment and many Sdewan rule CRs. We use a label to correlate one CNF with its Sdewan rule CRs. The Sdewan controller watches Sdewan rule CRs and applies them to the correlated CNF by calling the CNF REST API.
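The label correlation described above can be sketched as follows. This is a minimal illustration, not the controller's code: it assumes the label key is sdewanPurpose (as in the samples on this page) and represents deployments and CRs as plain dicts; the function name `correlate` is hypothetical.

```python
from collections import defaultdict

LABEL = "sdewanPurpose"  # label key used by the samples on this page


def correlate(deployments, rule_crs):
    """Group rule CR names under the CNF deployment that shares
    their namespace and sdewanPurpose label value."""
    cnfs = {
        (d["namespace"], d["labels"][LABEL]): d["name"]
        for d in deployments
        if LABEL in d.get("labels", {})
    }
    grouped = defaultdict(list)
    for cr in rule_crs:
        key = (cr["namespace"], cr.get("labels", {}).get(LABEL))
        if key in cnfs:  # CRs without a matching CNF are simply left out
            grouped[cnfs[key]].append(cr["name"])
    return dict(grouped)


deployments = [
    {"name": "cnf-1", "namespace": "default", "labels": {LABEL: "cnf-1"}},
    {"name": "cnf-2", "namespace": "default", "labels": {LABEL: "cnf-2"}},
]
rule_crs = [
    {"name": "balance1", "namespace": "default", "labels": {LABEL: "cnf-1"}},
    {"name": "http_rule", "namespace": "default", "labels": {LABEL: "cnf-1"}},
    {"name": "lan1", "namespace": "default", "labels": {LABEL: "cnf-2"}},
    {"name": "orphan", "namespace": "default", "labels": {LABEL: "cnf-3"}},
]
print(correlate(deployments, rule_crs))
```

The CR named orphan matches no deployment, so nothing is applied for it until a CNF with that label appears.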

Sdewan Design Principle

- There could be multiple tenants/namespaces in a Kubernetes cluster. A user may deploy multiple CNFs in one or more tenants.

- A CNF deployment may have more than one replica for active/backup purposes. Rules must be applied to all the pods under the CNF deployment. (This release doesn't implement VRRP between pods.)

- CNF deployments and Sdewan rule CRs can be created/updated/deleted in any order.

- The Sdewan controller and the CNF process could crash or restart at any time. We need to handle these scenarios.

- Each Sdewan rule CR has labels to identify the type it belongs to. 3 types are available at this time: basic, app-intent and k8s-service. We extend k8s user role permission so that we can set user permission at the type level of Sdewan rule CRs.

- Sdewan rule CR dependencies are checked on creating/updating/deleting. For example, if we create a mwan3_rule CR which uses policy policy-x, but no mwan3_policy CR named policy-x exists, then we block the request.
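The dependency check in the last point can be sketched as below. This is only an illustration under assumptions: CRs are plain dicts, the function names are hypothetical, and the delete-side check (refusing to delete a policy that a rule still uses) is our reading of "checked on deleting", not something this page spells out.

```python
def validate_rule_request(rule_cr, policy_names):
    """Allow a mwan3_rule create/update only if the policy it uses exists."""
    policy = rule_cr["spec"]["policy"]
    if policy not in policy_names:
        return False, f"mwan3_policy '{policy}' not found"
    return True, "ok"


def validate_policy_delete(policy_name, rule_crs):
    """Allow a mwan3_policy delete only if no rule still uses it (assumed behaviour)."""
    users = [r["metadata"]["name"] for r in rule_crs
             if r["spec"]["policy"] == policy_name]
    if users:
        return False, f"policy still used by: {', '.join(users)}"
    return True, "ok"


rule = {"metadata": {"name": "http_rule"}, "spec": {"policy": "policy-x"}}
print(validate_rule_request(rule, policy_names={"balance1"}))   # blocked
print(validate_rule_request(rule, policy_names={"policy-x"}))   # allowed
print(validate_policy_delete("policy-x", [rule]))               # blocked
```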

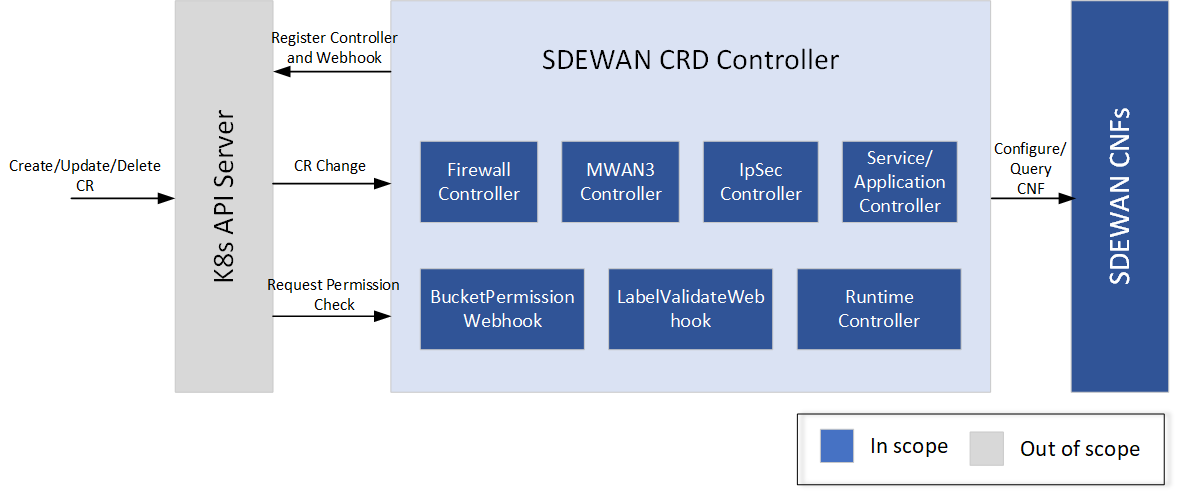

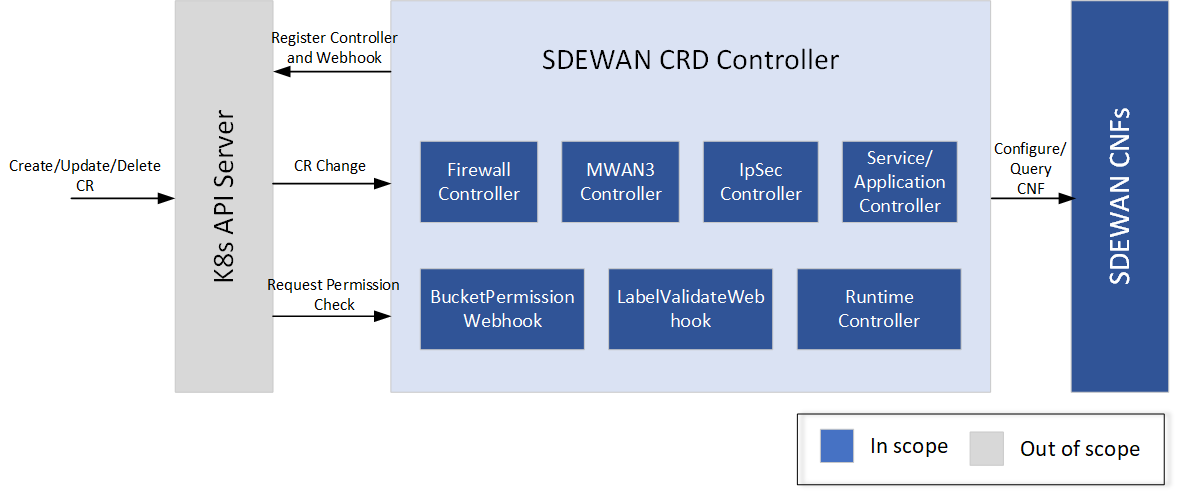

Architecture

The SDEWAN CRD Controller internally calls the SDEWAN RESTful API to configure the CNF. A remote client (e.g. the SDEWAN Overlay Controller) can manage the SDEWAN CNF configuration by creating/updating/deleting SDEWAN CRs. The controller includes the following components:

- MWAN3 Controller: monitors mwan3 related CR changes, then applies the mwan3 configuration in the SDEWAN CNF

- Firewall Controller: monitors firewall related CR changes, then applies the firewall configuration in the SDEWAN CNF

- IpSec Controller: monitors ipsec related CR changes, then applies the ipsec configuration in the SDEWAN CNF

- Service/Application Controller: configures firewall/NAT rules for in-cluster services and applications

- Runtime controller: collects runtime information of the CNF, including IPSec, IKE, firewall/NAT connections, DHCP leases, DNS entries, ARP entries, etc.

- BucketPermission/LabelValidateWebhook: checks the permission of sdewan CR requests based on CR label and user

CNF Deployment

In this section we describe what the CNF deployment should be like, as well as the pod under the deployment.

- The CNF pod should have multiple network interfaces attached. We use the multus and ovn4nfv CNIs to enable multiple interfaces, so in the CNF pod yaml we set the annotations k8s.v1.cni.cncf.io/networks and k8s.plugin.opnfv.org/nfn-network.

- When a user deploys a CNF, they most likely want to deploy it on a specified node instead of a random node, because some nodes may not have a provider network connected. So we set spec.nodeSelector for the pod.

- The CNF pod runs the Sdewan CNF (based on OpenWrt in ICN). We use the image integratedcloudnative/openwrt:dev.

- The CNF pod should be set up with a readiness probe. The Sdewan controller checks pod readiness before calling the CNF REST API.

```yaml
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: cnf-1
  namespace: default
  labels:
    sdewanPurpose: cnf-1
spec:
  replicas: 1
  strategy:
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
    type: RollingUpdate
  template:
    metadata:
      annotations:
        k8s.plugin.opnfv.org/nfn-network: |-
          { "type": "ovn4nfv", "interface": [
            {
              "defaultGateway": "false",
              "interface": "net0",
              "name": "ovn-priv-net"
            },
            {
              "defaultGateway": "false",
              "interface": "net1",
              "name": "ovn-provider-net1"
            },
            {
              "defaultGateway": "false",
              "interface": "net2",
              "name": "ovn-provider-net2"
            }
          ]}
        k8s.v1.cni.cncf.io/networks: '[{ "name": "ovn-networkobj"}]'
    spec:
      containers:
      - command:
        - /bin/sh
        - /tmp/sdewan/entrypoint.sh
        image: integratedcloudnative/openwrt:dev
        name: sdewan
        readinessProbe:
          failureThreshold: 5
          httpGet:
            path: /
            port: 80
            scheme: HTTP
          initialDelaySeconds: 5
          periodSeconds: 5
          successThreshold: 1
          timeoutSeconds: 1
        securityContext:
          privileged: true
          procMount: Default
        volumeMounts:
        - mountPath: /tmp/sdewan
          name: example-sdewan
          readOnly: true
      nodeSelector:
        kubernetes.io/hostname: ubuntu18
```

Sdewan rule CRs

CRDs define all properties of a resource, but they are not human friendly, so we show Sdewan rule CR samples instead of CRDs.

- Each Sdewan rule CR has a label named sdewanPurpose to indicate which CNF the rule should be applied to.

- Each Sdewan rule CR has a status field which indicates whether the latest rule is applied and when it was applied.

- Mwan3Policy.spec.members[].network should match the networks defined in the CNF pod annotation k8s.plugin.opnfv.org/nfn-network. The same holds for FirewallZone.spec[].network.
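The network-name constraint in the last point can be checked mechanically. The sketch below assumes the annotation value is the JSON document shown in the CNF deployment sample (an object with an "interface" list whose entries carry a "name"); the helper names are hypothetical and the network names in the example are illustrative.

```python
import json


def annotation_networks(annotation_value):
    """Network names declared in the k8s.plugin.opnfv.org/nfn-network annotation."""
    return {iface["name"] for iface in json.loads(annotation_value)["interface"]}


def unknown_networks(policy_spec, annotation_value):
    """Return the member networks of a Mwan3Policy spec that the CNF pod does not declare."""
    declared = annotation_networks(annotation_value)
    return [m["network"] for m in policy_spec["members"]
            if m["network"] not in declared]


annotation = json.dumps({
    "type": "ovn4nfv",
    "interface": [
        {"defaultGateway": "false", "interface": "net0", "name": "ovn-priv-net"},
        {"defaultGateway": "false", "interface": "net1", "name": "ovn-provider-net1"},
    ],
})
policy_spec = {"members": [
    {"network": "ovn-provider-net1", "weight": 2, "metric": 2},
    {"network": "ovn-net2", "weight": 3, "metric": 3},
]}
print(unknown_networks(policy_spec, annotation))
```

An empty result means every member network of the policy is attached to the CNF pod.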

CR samples of Mwan3 type:

Mwan3Policy CR

```yaml
apiVersion: batch.sdewan.akraino.org/v1alpha1
kind: Mwan3Policy
metadata:
  name: balance1
  namespace: default
  labels:
    sdewanPurpose: cnf-1
spec:
  members:
    - network: ovn-net1
      weight: 2
      metric: 2
    - network: ovn-net2
      weight: 3
      metric: 3
status:
  appliedVersion: "2"
  appliedTime: "2020-03-29T04:21:48Z"
  inSync: True
```

Mwan3Rule CR

```yaml
apiVersion: batch.sdewan.akraino.org/v1alpha1
kind: Mwan3Rule
metadata:
  name: http_rule
  namespace: default
  labels:
    sdewanPurpose: cnf-1
spec:
  policy: balance1
  src_ip: 192.168.1.2
  dest_ip: 0.0.0.0/0
  dest_port: 80
  proto: tcp
status:
  appliedVersion: "2"
  appliedTime: "2020-03-29T04:21:48Z"
  inSync: True
```

CR samples of Firewall type:

```yaml
apiVersion: batch.sdewan.akraino.org/v1alpha1
kind: FirewallZone
metadata:
  name: lan1
  namespace: default
  labels:
    sdewanPurpose: cnf-1
spec:
  network:
    - ovn-net1
  input: ACCEPT
  output: ACCEPT
status:
  appliedVersion: "2"
  appliedTime: "2020-03-29T04:21:48Z"
  inSync: True
```

```yaml
apiVersion: batch.sdewan.akraino.org/v1alpha1
kind: FirewallRule
metadata:
  name: reject_80
  namespace: default
  labels:
    sdewanPurpose: cnf-1
spec:
  src: lan1
  src_ip: 192.168.1.2
  src_port: 80
  proto: tcp
  target: REJECT
status:
  appliedVersion: "2"
  appliedTime: "2020-03-29T04:21:48Z"
  inSync: True
```

```yaml
apiVersion: batch.sdewan.akraino.org/v1alpha1
kind: FirewallSNAT
metadata:
  name: snat_lan1
  namespace: default
  labels:
    sdewanPurpose: cnf-1
spec:
  src: lan1
  src_ip: 192.168.1.2
  src_dip: 1.2.3.4
  dest: wan1
  proto: icmp
status:
  appliedVersion: "2"
  appliedTime: "2020-03-29T04:21:48Z"
  inSync: True
```

```yaml
apiVersion: batch.sdewan.akraino.org/v1alpha1
kind: FirewallDNAT
metadata:
  name: dnat_wan1
  namespace: default
  labels:
    sdewanPurpose: cnf-1
spec:
  src: wan1
  src_dport: 19900
  dest: lan1
  dest_ip: 192.168.1.1
  dest_port: 22
  proto: tcp
status:
  appliedVersion: "2"
  appliedTime: "2020-03-29T04:21:48Z"
  inSync: True
```

```yaml
apiVersion: batch.sdewan.akraino.org/v1alpha1
kind: FirewallForwarding
metadata:
  name: forwarding_lan_to_wan
  namespace: default
  labels:
    sdewanPurpose: cnf-1
spec:
  src: lan1
  dest: wan1
status:
  appliedVersion: "2"
  appliedTime: "2020-03-29T04:21:48Z"
  inSync: True
```

CR samples of IPSec type:

IPSec Proposal CR

```yaml
apiVersion: sdewan.akraino.org/v1alpha1
kind: IpsecProposal
metadata:
  name: test_proposal_1
  namespace: default
  labels:
    sdewanPurpose: cnf-1
spec:
  encryption_algorithm: aes128
  hash_algorithm: sha256
  dh_group: modp3072
status:
  appliedVersion: "1"
  appliedTime: "2020-04-12T09:28:38Z"
  inSync: True
```

...

IPSec Site CR

```yaml
apiVersion: sdewan.akraino.org/v1alpha1
kind: IpsecSite
metadata:
  name: ipsecsite-sample
  namespace: default
  labels:
    sdewanPurpose: cnf-1
spec:
  remote: xx.xx.xx.xx
  authentication_method: psk
  pre_shared_key: xxx
  local_public_cert:
  local_private_cert:
  shared_ca:
  local_identifier:
  remote_identifier:
  crypto_proposal:
    - test_proposal_1
  connections:
    - connection_name: connection_A
      type: tunnel
      mode: start
      local_subnet: 172.12.0.0/24, 10.239.160.22
      remote_sourceip: 172.12.0.30-172.12.0.45
      remote_subnet:
      crypto_proposal:
        - test_proposal_1
status:
  appliedVersion: "1"
  appliedTime: "2020-04-12T09:28:38Z"
  inSync: True
```

IPSec Host CR

```yaml
apiVersion: sdewan.akraino.org/v1alpha1
kind: IpsecHost
metadata:
  name: ipsechost-sample
  namespace: default
  labels:
    sdewanPurpose: cnf-1
spec:
  remote: xx.xx.xx.xx/%any
  authentication_method: psk
  pre_shared_key: xxx
  local_public_cert:
  local_private_cert:
  shared_ca:
  local_identifier:
  remote_identifier:
  crypto_proposal:
    - test_proposal_1
  connections:
    - connection_name: connection_A
      type: tunnel
      mode: start
      local_sourceip: "%config"
      remote_sourceip: xx.xx.xx.xx
      remote_subnet: xx.xx.xx.xx/xx
      crypto_proposal:
        - test_proposal_1
status:
  appliedVersion: "1"
  appliedTime: "2020-04-12T09:28:38Z"
  inSync: True
```

CNF Service CR

.spec.fullname - The full name of the target service, with which we can get the service IP

.spec.port - The port exposed by CNF, we will do DNAT for the requests accessing this port of CNF

.spec.dport - The port exposed by target service

CNF Service CR

```yaml
apiVersion: batch.sdewan.akraino.org/v1alpha1
kind: CNFService
metadata:
  name: cnfservice-sample
  namespace: default
  labels:
    sdewanPurpose: cnf1
spec:
  fullname: httpd-svc.default.svc.cluster.local
  port: "2288"
  dport: "<target-service-port>"
```
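How the three CNF Service fields could turn into a DNAT rule is sketched below. This is an assumption-laden illustration: the output field names are borrowed from the FirewallDNAT sample above, the protocol default (tcp), the service IP and the dport value "8080" are made up for the example, and the resolver is injected so the sketch runs without a cluster.

```python
def cnf_service_to_dnat(spec, resolve, proto="tcp"):
    """Translate a CNF Service spec into a DNAT rule dict.

    spec["fullname"] is resolved to the service IP, requests hitting
    spec["port"] on the CNF are redirected to that IP on spec["dport"].
    """
    return {
        "src_dport": spec["port"],           # port exposed by the CNF
        "dest_ip": resolve(spec["fullname"]),  # IP of the target service
        "dest_port": spec["dport"],          # port exposed by the target service
        "proto": proto,                      # assumed default, not from this page
    }


def fake_resolve(fullname):
    """Stand-in for a cluster DNS lookup; the address is illustrative."""
    return {"httpd-svc.default.svc.cluster.local": "10.96.0.15"}[fullname]


spec = {
    "fullname": "httpd-svc.default.svc.cluster.local",
    "port": "2288",
    "dport": "8080",  # illustrative value
}
dnat_rule = cnf_service_to_dnat(spec, fake_resolve)
print(dnat_rule)
```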

...

Sdewan rule CRD Reconcile Logic

We have many kinds of CRDs, but they share almost the same reconcile logic, so we only describe the Mwan3Rule logic.

...

```
def Mwan3RuleReconciler.Reconcile(req ctrl.Request):
  rule_cr = k8sClient.get(req.NamespacedName)
  cnf_deployment = k8sClient.get_deployment_with_label(rule_cr.labels.sdewanPurpose)
  if rule_cr DeletionTimestamp exists:
    # The CR is being deleted; the finalizer is still on the CR
    if cnf_deployment exists:
      if cnf_deployment is ready:
        for cnf_pod in cnf_deployment:
          err = openwrt_client.delete_rule(cnf_pod_ip, rule_cr)
          if err:
            return "re-queue req"
        rule_cr.finalizer = nil
        return "ok"
      else:
        return "re-queue req"
    else:
      # Just remove finalizer, because no CNF pod exists
      rule_cr.finalizer = nil
      return "ok"
  else:
    # The CR is not being deleted
    if cnf_deployment not exist:
      return "ok"
    else:
      if cnf_deployment not ready:
        # set appliedVersion = nil if cnf_deployment gets into not_ready status
        rule_cr.status.appliedVersion = nil
        return "re-queue req"
      else:
        for cnf_pod in cnf_deployment:
          runtime_cr = openwrt_client.get_rule(cnf_pod_ip)
          if runtime_cr != rule_cr:
            err = openwrt_client.add_or_update_rule(cnf_pod_ip, rule_cr)
            if err:
              # err could be caused by dependencies not applied or another reason
              return "re-queue req"
        # set appliedVersion only when it's applied for all the cnf pods
        rule_cr.finalizer = cnf_finalizer
        rule_cr.status.appliedVersion = rule_cr.resourceVersion
        rule_cr.status.inSync = True
        return "ok"
```
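To make the control flow easier to experiment with, here is a small runnable simulation of the same logic. It is a sketch under assumptions, not the controller implementation: the CNF REST API is replaced by an in-memory dict per pod that never fails (so the error/re-queue branches for REST calls are not modelled), and the class names and the finalizer string are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional

REQUEUE, OK = "re-queue req", "ok"
CNF_FINALIZER = "rule.finalizer.sdewan"  # hypothetical finalizer name


@dataclass
class FakePod:
    """In-memory stand-in for one CNF pod and its REST API."""
    rules: dict = field(default_factory=dict)


@dataclass
class FakeDeployment:
    pods: list
    ready: bool = True


@dataclass
class RuleCR:
    name: str
    spec: dict
    resource_version: str = "1"
    deleting: bool = False  # True once DeletionTimestamp is set
    finalizers: list = field(default_factory=list)
    applied_version: Optional[str] = None
    in_sync: bool = False


def reconcile(cr, deployment):
    if cr.deleting:
        if deployment is None:
            cr.finalizers.clear()  # no CNF pod exists, just drop the finalizer
            return OK
        if not deployment.ready:
            return REQUEUE
        for pod in deployment.pods:
            pod.rules.pop(cr.name, None)  # delete the rule from every pod
        cr.finalizers.clear()
        return OK
    if deployment is None:
        return OK
    if not deployment.ready:
        cr.applied_version = None
        return REQUEUE
    for pod in deployment.pods:
        if pod.rules.get(cr.name) != cr.spec:  # runtime state differs from the CR
            pod.rules[cr.name] = dict(cr.spec)
    # status is updated only after the rule is applied to all pods
    if CNF_FINALIZER not in cr.finalizers:
        cr.finalizers.append(CNF_FINALIZER)
    cr.applied_version = cr.resource_version
    cr.in_sync = True
    return OK


pod1, pod2 = FakePod(), FakePod()
cnf = FakeDeployment(pods=[pod1, pod2])
rule1 = RuleCR("rule1", {"policy": "policy1"})
print(reconcile(rule1, cnf), pod1.rules, pod2.rules)
pod1.rules.clear()  # pod restarted with default configuration
print(reconcile(rule1, cnf), pod1.rules)
```

Because the reconcile compares the runtime state of each pod with the CR, a pod that restarted with an empty configuration is repaired on the next pass without any special handling.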

Unusual Cases

In the following cases, when we say "call CNF api to create/update/delete rule", it means the logic below:

...

- A deployment (CNF) for a given purpose has two pod replicas (CNF-pod-1 and CNF-pod-2).

- The controller is also brought up.

- CNF-pod-1 and CNF-pod-2 are both running with no/default configuration.

- MWAN3 policy 1 is added.

- MWAN3 rule 1 and rule 2 are added to use MWAN3 policy 1.

- Since the controller, CNF-pod-1 and CNF-pod-2 are all running, CNF-pod-1 and CNF-pod-2 have the configuration MWAN3 policy 1, rule 1 and rule 2.

- The controller is down for 10 minutes.

- After the controller goes down, CNF-pod-1 goes down.

| Info |
|---|
| As the controller is down, there is no event and no reconcile. |

- MWAN3 rule 1 is deleted.

| Info |
|---|
| As the controller is down, there is no event and no reconcile. The rule1 CR is not deleted from etcd because of the finalizer. Instead, DeletionTimestamp is added to the rule1 CR by k8s. |

- MWAN3 rule 3 is added.

| Info |
|---|
| As the controller is down, there is no event and no reconcile. The rule3 CR is added to etcd, but not applied to the CNF. The rule3 status.appliedVersion, status.appliedTime and status.inSync are nil/default values. |

- For MWAN3 rule 2, we don't make any change.

- CNF-pod-1 is up.

| Info |
|---|
| As the controller is down, there is no event and no reconcile. As the pod restarted, CNF-pod-1 is running with no/default configuration. |

- The controller is up.

| Info |
|---|
| The controller reconciles all CRs. For the rule1 CR, the controller calls the cnf api to delete rule1 from both CNF-pod-1 and CNF-pod-2. Then the controller removes the finalizer from the rule1 CR, and the rule1 CR is deleted from etcd by k8s. For rule2, the controller calls the cnf api to update rule2 for both CNF-pod-1 and CNF-pod-2. Then it sets rule2 status.appliedVersion=<current-version>, status.appliedTime=<now-time> and status.inSync=true. For rule3, the controller calls the cnf api to add rule3 for both CNF-pod-1 and CNF-pod-2. Then it sets the rule3 finalizer. It also sets rule3 status.appliedVersion=<current-version>, status.appliedTime=<now-time> and status.inSync=true. |

- Ensure that CNF-pod-1 and CNF-pod-2 have the latest configuration and there is no duplicate information.

| Info |
|---|
| Once the reconcile finishes, both CNF-pod-1 and CNF-pod-2 have the latest configuration. |

Admission Webhook Usage

We use admission webhooks to implement several features.

- Prevent creating more than one CNF with the same label in the same namespace

- Validate CR dependencies. For example, a mwan3 rule depends on a mwan3 policy

- Extend user permission to control the operations on rule CRs. For example, we can ensure that ONAP can't update/delete rule CRs created by the platform.
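The first feature in the list above can be sketched as a validation function. This is a simplified illustration: deployments are plain dicts, the function name is hypothetical, the label key sdewanPurpose is taken from the samples on this page, and the returned dict only mimics the allowed/message shape of an admission response.

```python
def validate_cnf_create(new_deploy, existing_deploys, label="sdewanPurpose"):
    """Reject a CNF deployment if another deployment in the same namespace
    already carries the same sdewanPurpose label value."""
    purpose = new_deploy.get("labels", {}).get(label)
    if purpose is None:
        return {"allowed": True}  # not a CNF deployment, nothing to check
    for d in existing_deploys:
        if (d["namespace"] == new_deploy["namespace"]
                and d.get("labels", {}).get(label) == purpose
                and d["name"] != new_deploy["name"]):
            return {
                "allowed": False,
                "message": f"CNF '{d['name']}' already uses {label}={purpose}",
            }
    return {"allowed": True}


existing = [{"name": "cnf-1", "namespace": "default",
             "labels": {"sdewanPurpose": "cnf-1"}}]
duplicate = {"name": "cnf-1b", "namespace": "default",
             "labels": {"sdewanPurpose": "cnf-1"}}
other_ns = {"name": "cnf-1b", "namespace": "tenant-a",
            "labels": {"sdewanPurpose": "cnf-1"}}
print(validate_cnf_create(duplicate, existing))
print(validate_cnf_create(other_ns, existing))
```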

Sdewan rule CR type level Permission Implementation

K8s supports permission control at the namespace level. For example, user1 may be able to create/update/delete one kind of resource (e.g. pod) in namespace ns1, but not in namespace ns2. For Sdewan, this doesn't meet our requirement: we want label-level control of Sdewan rule CRs. For example, user_onap can create/update/delete Mwan3Rule CRs with label sdewan-bucket-type=app-intent, but not with label sdewan-bucket-type=basic.

...

```
def mwan3rule_webhook_handle_permission(req admission.Request):
  userinfo = req["userInfo"]
  mwan3rule_cr = decode(req)
  roles = k8s_client.get_role_from_user(userinfo)
  for role in roles:
    if mwan3rule_cr.labels.sdewan-bucket-type in role.annotation.sdewan-bucket-type-permission.mwan3rules:
      return {"allowed": True}
  return {"allowed": False}
```
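A runnable version of the permission check might look like the sketch below. It assumes the role annotation sdewan-bucket-type-permission holds a JSON object mapping resource names to the list of allowed bucket types; that encoding, the role name and the function name are assumptions for illustration, and the role lookup for a user is left out (roles are passed in directly).

```python
import json

BUCKET_LABEL = "sdewan-bucket-type"
PERMISSION_ANNOTATION = "sdewan-bucket-type-permission"


def handle_permission(cr, roles, resource="mwan3rules"):
    """Allow the request if any role of the user permits the CR's bucket type."""
    bucket = cr["metadata"].get("labels", {}).get(BUCKET_LABEL)
    for role in roles:
        raw = role["metadata"].get("annotations", {}).get(PERMISSION_ANNOTATION)
        if raw is None:
            continue  # this role grants no bucket-type permission
        if bucket in json.loads(raw).get(resource, []):
            return {"allowed": True}
    return {"allowed": False}


onap_role = {"metadata": {
    "name": "onap-role",  # hypothetical role bound to user_onap
    "annotations": {
        PERMISSION_ANNOTATION: json.dumps({"mwan3rules": ["app-intent"]}),
    },
}}
app_rule = {"metadata": {"name": "r1", "labels": {BUCKET_LABEL: "app-intent"}}}
basic_rule = {"metadata": {"name": "r2", "labels": {BUCKET_LABEL: "basic"}}}
print(handle_permission(app_rule, [onap_role]))
print(handle_permission(basic_rule, [onap_role]))
```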

ServiceRule controller (For next release)

We create a controller that watches the services created in the cluster. For each service, it creates a FirewallDNAT CR. On controller startup, it performs a sync to remove unused CRs.
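The startup sync can be thought of as a set difference between the CRs that should exist and the CRs that do exist. The sketch below is only an illustration for this planned feature: the CR naming scheme (svc-<namespace>-<name>) and the function names are hypothetical.

```python
def dnat_cr_name(service):
    """Hypothetical naming scheme for the FirewallDNAT CR of a service."""
    return f"svc-{service['namespace']}-{service['name']}"


def plan_sync(services, existing_cr_names):
    """Compute which FirewallDNAT CRs to create and which unused ones to remove."""
    desired = {dnat_cr_name(s) for s in services}
    existing = set(existing_cr_names)
    return {
        "create": sorted(desired - existing),
        "delete": sorted(existing - desired),
    }


services = [
    {"namespace": "default", "name": "httpd-svc"},
    {"namespace": "default", "name": "db-svc"},
]
existing_crs = ["svc-default-httpd-svc", "svc-default-old-svc"]
print(plan_sync(services, existing_crs))
```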

References

...