
The ICN blueprint family intends to address deployment of workloads in a large number of edges and also in public clouds using K8S as resource orchestrator in each site and ONAP-K8S as service level orchestrator (across sites). ICN also intends to integrate infrastructure orchestration which is needed to bring up a site using bare-metal servers. Infrastructure orchestration, which is the focus of this page, needs to ensure that the infrastructure software required on edge servers is installed on a per-site basis, but controlled from a central dashboard. Infrastructure orchestration is expected to do the following:

- Installation: First-time installation of all infrastructure software.

- Keep monitoring for new servers and install the software based on the role of the server machine.

- Patching: Continue to install patches (mainly security-related) whenever a new patch release is made for any of the infrastructure software packages.

- May need to work with resource and service orchestrators to ensure that workload functionality does not get impacted.

- Software updates: Updating software due to new releases.

...

- SDWAN, Customer Edge, Edge Clouds – deploy VNFs/CNFs and applications as micro-services (Completed in R2 release using OpenWRT SDWAN Containerized)

- vFW

- EdgeX Foundry

- DAaaS - Distributed Ana

Where on the Edge


Business Drivers

Overall Architecture

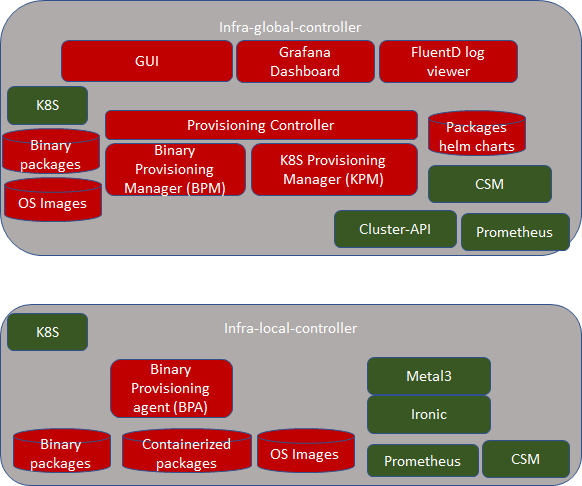

On an edge deployment, there may be multiple edges that need to be brought up. Having the administrator go to each location and use the infra-local-controller to bring up application-K8S clusters on the compute nodes of that location is not scalable. Therefore, an "infra-global-controller" controls multiple "infra-local-controllers", which in turn control the worker nodes. The infra-global-controller is expected to provide a centralized software provisioning and configuration system, giving a single pane of glass for administering the infrastructure of the edge locations. The worker nodes may be bare-metal servers or virtual machines resident on the infra-local-controller. The minimum platform configuration is therefore one global controller and one local controller (although the local controller can be run without a global controller).

Since there are several K8S clusters, let us define them:

- infra-global-controller-K8S : This is the K8S cluster where infra-global-controller related containers are run.

- infra-local-controller-K8S: This is the K8S cluster where the infra-local-controller related containers are run, which bring up compute nodes.

- application-K8S : These are K8S clusters on compute nodes, where application workloads are run.
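The relationship between the three kinds of clusters can be sketched as a small data model. This is only an illustrative Python sketch: the class names, field names, and site names are hypothetical and are not taken from the ICN code base.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ApplicationCluster:
    """An application-K8S cluster running workloads on compute nodes."""
    name: str
    compute_nodes: List[str]


@dataclass
class InfraLocalController:
    """One per edge location; brings up application-K8S clusters there."""
    site: str
    clusters: List[ApplicationCluster] = field(default_factory=list)


@dataclass
class InfraGlobalController:
    """Central point that administers many infra-local-controllers."""
    local_controllers: Dict[str, InfraLocalController] = field(default_factory=dict)

    def register(self, controller: InfraLocalController) -> None:
        self.local_controllers[controller.site] = controller

    def inventory(self) -> Dict[str, List[str]]:
        """Return, per site, the names of its application-K8S clusters."""
        return {
            site: [cluster.name for cluster in local.clusters]
            for site, local in self.local_controllers.items()
        }


# Hypothetical example: one global controller administering two edge sites.
global_ctrl = InfraGlobalController()
global_ctrl.register(
    InfraLocalController("edge-a", [ApplicationCluster("app-0", ["node1", "node2"])])
)
global_ctrl.register(InfraLocalController("edge-b"))
print(global_ctrl.inventory())
```

The sketch keeps the local controller usable on its own, which mirrors the note above that a local controller can run without a global controller.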

Flows & Sequence Diagrams

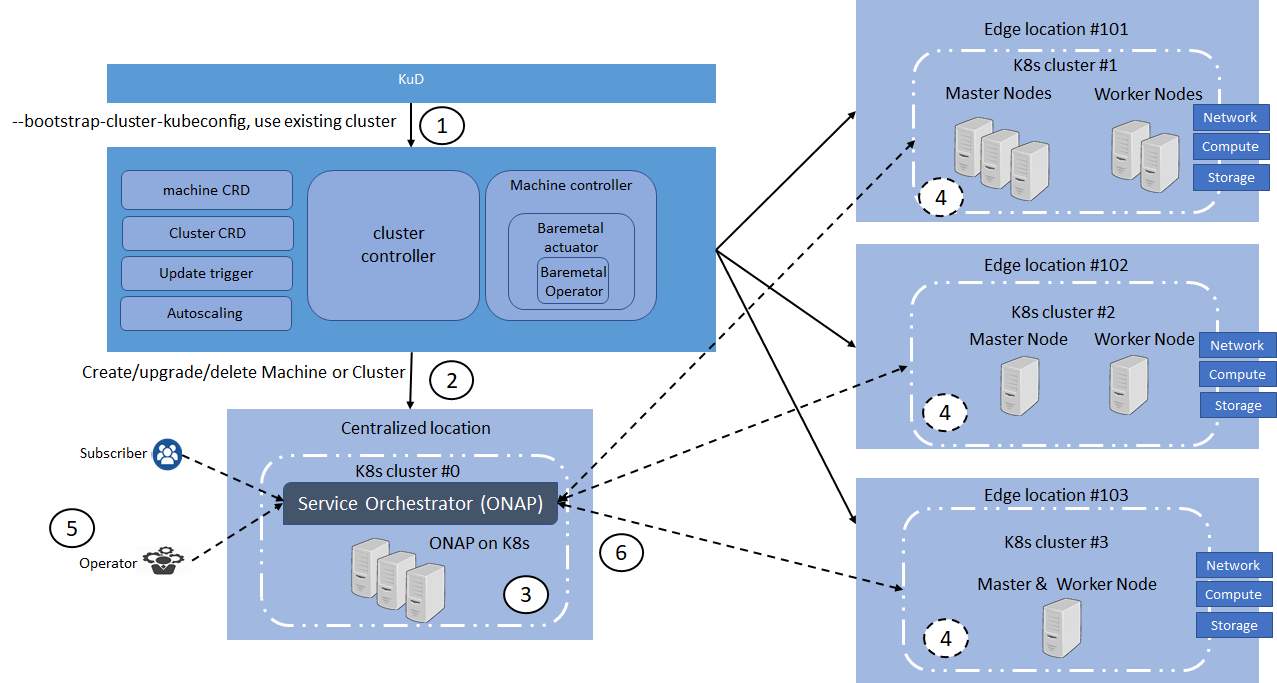


- Use the clusterctl command to create the cluster for the cluster-api-provider-baremetal provider. This step requires KuD to provide a cluster that runs the machine controller and the cluster controller.

- The user's Machine CRD and Cluster CRD are configured to instantiate four clusters: #0, #1, #2, and #3.

- The automation script for OOM deployment is triggered to deploy ONAP on cluster #0.

- The KuD addons script is triggered in all edge locations to deploy the K8s App components, the NFV-specific components, and the NFVi SDN controller.

- A subscriber or operator requests deployment of a VNF workload, such as SDWAN, through service orchestration.

- ONAP places the workload in an edge location based on multi-site scheduling and K8s HPA.

Platform Architecture


Current practice is to keep the cloud-native control plane close to the workload in order to reduce latency and improve performance and fault tolerance. A single orchestration engine should be lightweight and maintain the resources in a cluster of compute nodes, where the customer can deploy multiple network functions, such as VNFs, CNFs, microservices, and Function as a Service (FaaS), and can also scale the orchestration infrastructure according to demand.

ICN targets on-prem edge, IoT, SDWAN, video streaming, and edge gaming cloud: a single deployment model for multiple edge use cases.


Flows & Sequence Diagrams

Each edge location has an infra-local-controller with a bootstrap cluster, which contains all the components required to boot up the compute cluster.

Platform Architecture

Infra-global-controller:

Administration involves

...

As you see above in the picture, the bootstrap machine itself is based on K8S. Note that this K8S is different from the K8S that gets installed in compute nodes. That is, these are two different K8S clusters. In case of the bootstrap machine, it itself is a complete K8S cluster with one node that has both master and minion software combined. All the components of the infra-local-controller (such as BPA, Metal3 and Ironic) are containers.

...

As indicated above, infra-local-controller will bring up K8S clusters on the compute nodes used for workloads. Bringing up a workload K8S cluster normally requires the following steps

- Bring up a Linux operating system.

- Provision the software with the right configuration.

- Bring up basic Kubernetes components (such as kubelet, Docker, kubectl, and kubeadm).

- Bring up components that can be installed using kubectl.

...

The Baremetal Operator provisions compute nodes (either bare-metal or VM) by using the Kubernetes API. It defines a BareMetalHost CRD object that represents a physical server and its hardware inventory. Ironic is responsible for provisioning the physical servers, and the Baremetal Operator is responsible for wrapping Ironic and representing the servers as CRD objects.
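As an illustration of how a physical server is represented, the sketch below builds a minimal `BareMetalHost` manifest as a Python dictionary. The `apiVersion`, `kind`, and `spec` field names follow the Metal3 Baremetal Operator resource; the host name, MAC address, BMC address, and secret name are made-up example values, and a real manifest would usually carry more fields.

```python
import json


def bare_metal_host(name: str, boot_mac: str, bmc_address: str, secret: str) -> dict:
    """Build a minimal BareMetalHost manifest as a plain dictionary."""
    return {
        "apiVersion": "metal3.io/v1alpha1",
        "kind": "BareMetalHost",
        "metadata": {"name": name},
        "spec": {
            # Ask the operator to keep the server powered on.
            "online": True,
            # MAC address of the NIC used for network boot.
            "bootMACAddress": boot_mac,
            # How to reach the baseboard management controller (IPMI here),
            # plus the name of the Secret holding its username and password.
            "bmc": {"address": bmc_address, "credentialsName": secret},
        },
    }


# Hypothetical example values.
manifest = bare_metal_host(
    "node1", "00:11:22:33:44:55", "ipmi://192.0.2.10", "node1-bmc-secret"
)
print(json.dumps(manifest, indent=2))
```

The printed JSON could be applied to the cluster that runs the Baremetal Operator, for example with `kubectl apply -f`.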

...

The job of the BPA is to install all packages on the application-K8S that can't be installed using kubectl. Hence, the BPA is used right after the compute nodes get installed with the Linux operating system, before installing Kubernetes-based packages. The BPA is also implemented as a CRD controller in the infra-local-controller-K8S. We expect to have the following CRs:

- To upload site-specific information - compute nodes and their roles

- To instantiate the binary package installation.

- To get hold of application-K8S kubeconfig file.

- Get status of the installation

...

- To upload binary images that are used to install software on compute nodes.

- To upload the Linux operating system that is needed on compute nodes.

- Get status of installation of all packages as prescribed before.

...

Ironic is expected to bring up Linux on compute nodes. It is also expected to automatically create SSH keys and an SSH user for each compute node. Usernames and passwords are expected to be stored in SMS in the infra-local-controller for security reasons. BPA is expected to leverage these authentication credentials when it installs the software packages.

CSM is used for storing secrets and performing crypto operations.

...


Software Platform Architecture

...

Local Controller: Kubeadm, Metal3, Baremetal Operator, Ironic, Prometheus, CSM, ONAP

Global Controller: Kubeadm, KuD, K8S Provisioning Manager, Binary Provisioning Manager, Prometheus, CSM

...

The R2 release covers only the infra-local-controller:

Baremetal Operator

One of the major challenges for a cloud admin managing multiple clusters in different edge locations is coordinating the control-plane configuration of each cluster remotely and managing patches and updates/upgrades across multiple machines. Cluster-API provides declarative APIs to represent clusters and the machines inside a cluster. It abstracts the common logic found in various cluster providers such as GKE, AWS, and vSphere, consolidating functions such as grouping machines for upgrade and autoscaling.

In the ICN family stack, the Baremetal Operator from the Metal3 project is used as the Cluster-API bare-metal provider. It acts as a machine actuator that uses Ironic to provide a K8s API for managing the physical servers that also run Kubernetes clusters on bare-metal hosts. Cluster-API manages the Kubernetes control plane through the Cluster CRD, and Kubernetes nodes (host machines) through Machine CRDs, MachineSet CRDs, and MachineDeployment CRDs. It also has an autoscaler mechanism that checks the MachineSet CRD, which is analogous to a K8s ReplicaSet, and the MachineDeployment CRD, which is analogous to a K8s Deployment. MachineDeployment CRDs are used to update/upgrade software drivers in

The Cluster-API provider with the Baremetal Operator is used to provision physical servers and to initiate the Kubernetes cluster with the user configuration.

KuD

Kubernetes Deployment (KUD) is a project that uses Kubespray to bring up a Kubernetes deployment and some addons on a provisioned machine. As it is already part of ONAP, it can be effectively reused to deploy the K8s App components (as shown in fig. II), the NFV-specific components, and the NFVi SDN controller in the edge cluster. In the R2 release, KuD is used to deploy K8s addons such as Virtlet, OVN, and NFD, and Intel device plugins such as SRIOV and QAT, in the edge location (as shown in figure I). In the R3 release, KuD will evolve into the "ICN Operator" to install all K8s addons. For more information on the architecture of KuD please find the information here.

ONAP on K8s

One of the high-availability Kubernetes clusters provisioned and configured by Cluster-API will be used to deploy ONAP on K8s. The ICN family uses the ONAP Operations Manager (OOM) to deploy ONAP. OOM provides a set of Helm charts for installing ONAP on a K8s cluster. The ICN family will create the OOM installation and automate the ONAP installation once a Kubernetes cluster has been configured by Cluster-API.

ONAP Block and Modules:

ONAP will be the Service Orchestration Engine in the ICN family. It is responsible for VNF life cycle management, tenant management, and tenant resource quota allocation, and for managing the Resource Orchestration Engine (ROE) to schedule VNF workloads with multi-site scheduler awareness and Hardware Platform abstraction (HPA). An Akraino dashboard that sits on top of ONAP is required to deploy the VNFs.


Kubernetes Block and Modules:

Kubernetes will be the Resource Orchestration Engine in the ICN family, managing network, storage, and compute resources for the VNF applications. The ICN family will use multiple container runtimes: Docker as the de facto container runtime and Virtlet (R2 release), with Kata container, Kubevirt, and gVisor as further runtimes. Each release supports different container runtimes, chosen for the targeted use cases.

...

NFV Specific components: This block is responsible for K8s compute management to support both software and hardware acceleration (including network acceleration) with CPU pinning and device plugins such as QAT, FPGA, SRIOV, and GPU.

SDN Controller components: This block is responsible for managing the SDN controller and providing additional features such as Service Function Chaining (SFC) and a network route manager.

...

Please explain each component & their design/architecture, Please keep maximum 2 paragraph, if possible link your project wiki link for more information

Metal3: Kuralamudhan Ramakrishnan

BPA Operator: Itohan Ukponmwan ramamani yeleswarapu

BPA Rest Agent: Enyinna Ochulor Tingjie Chen

KUD: Akhila Kishore Kuralamudhan Ramakrishnan

ONAP4K8s: Kuralamudhan Ramakrishnan


ICN uses the Metal3 project to provision servers in the edge locations. It uses the IPMI protocol to identify the servers, and Ironic and Ironic Inspector to provision the OS. For the R2 release, ICN provisions Ubuntu 18.04 on each server and uses distinct networks, namely the provisioning network and the bare-metal network, for inspection and IPMI provisioning.

ICN injects user data into each server covering network configuration and remote command execution over SSH, and maintains a common secure mechanism for provisioning all servers. Each local controller maintains IP address management for its edge location. For more information refer - Metal3 Baremetal Operator in ICN stack

BPA Operator:

ICN uses the BPA operator to install KUD. It can install KUD either on baremetal hosts or on virtual machines. The BPA operator is also used to install software on the machines after KUD has been installed successfully.

KUD Installation

Baremetal Hosts: When a new provisioning CR is created, the BPA operator is triggered. It uses a dynamic client to get a list of all baremetal hosts that were provisioned using Metal3, reads the MAC addresses from the provisioning CR, and compares them with the baremetal host list to confirm that a host with each MAC address exists. If the host exists, the operator searches the DHCP lease file for the corresponding IP address. Using the IP addresses of all the hosts in the provisioning CR, it creates a hosts.ini file and triggers a job that installs KUD on the machines using that file. When the job completes successfully, a K8s cluster is running on the baremetal hosts. The BPA operator then creates a configmap using the host names as keys and their corresponding IP addresses as values. If a host with a specified MAC address does not exist, the BPA operator throws an error.
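The MAC-to-IP matching described above can be sketched roughly as follows. This is not the BPA operator's actual code (which is a Kubernetes operator): the function names are hypothetical, the lease file is assumed to use a dnsmasq-style layout (`expiry MAC IP hostname client-id`), and the inventory layout is a simplified stand-in for the real hosts.ini.

```python
from typing import Dict, List


def parse_leases(lease_text: str) -> Dict[str, str]:
    """Map MAC address -> IP address from dnsmasq-style lease lines (assumed format)."""
    leases = {}
    for line in lease_text.splitlines():
        parts = line.split()
        if len(parts) >= 3:
            _expiry, mac, ip = parts[:3]
            leases[mac.lower()] = ip
    return leases


def build_hosts_ini(requested_macs: List[str],
                    provisioned: Dict[str, str],
                    leases: Dict[str, str]) -> str:
    """Return inventory text for the hosts named in the provisioning CR.

    `provisioned` maps MAC address -> host name for hosts known to Metal3.
    Raises LookupError if a requested MAC has no matching host or no lease.
    """
    lines = ["[all]"]
    for mac in (m.lower() for m in requested_macs):
        if mac not in provisioned:
            raise LookupError(f"no baremetal host with MAC {mac}")
        if mac not in leases:
            raise LookupError(f"no DHCP lease for MAC {mac}")
        lines.append(f"{provisioned[mac]} ansible_host={leases[mac]}")
    return "\n".join(lines) + "\n"


# Hypothetical example data.
lease_text = """\
1570000000 00:11:22:33:44:55 172.22.0.10 node1 *
1570000000 00:11:22:33:44:66 172.22.0.11 node2 *
"""
provisioned = {"00:11:22:33:44:55": "node1", "00:11:22:33:44:66": "node2"}
hosts_ini = build_hosts_ini(
    ["00:11:22:33:44:55", "00:11:22:33:44:66"], provisioned, parse_leases(lease_text)
)
print(hosts_ini)
```

Failing early with an error when a MAC address is unknown matches the behaviour described above, where the operator throws an error instead of installing KUD on a partial set of hosts.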

Virtual Machines: The ICN project uses Virtlet for provisioning virtual machines in the edge locations. For this release, this involves a nested Kubernetes implementation. Kubernetes is first installed with Virtlet. Pod spec files are created with cloud-init user data, a network annotation with the MAC address, and CPU and memory requests. Virtlet VMs are created according to the cluster spec or requirement. Corresponding provisioning custom resources are created to match the MAC addresses of the Virtlet VMs.

The BPA operator checks the provisioning custom resource and maps the MAC address(es) to the running Virtlet VM(s). It gets the IP addresses of those VMs and initiates an installer job that runs the KuD scripts in those VMs. Upon completion, the K8s cluster is up and running in the Virtlet VMs.

Software Installation

When a new software CR is created, the reconcile loop is triggered. On seeing that it is a software CR, the BPA operator looks for a configmap whose cluster label matches the one in the software CR. If it finds one, it gets the IP addresses of all the master and worker nodes, connects to the hosts over SSH, and installs the required software. If no corresponding configmap is found, it throws an error.
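The configmap lookup can be illustrated with plain dictionaries. This is a sketch under assumptions: the label key `cluster`, the function name, and the sample objects are hypothetical, and the SSH step is only indicated by a comment.

```python
from typing import Dict, List


def nodes_for_software_cr(software_cr: dict, configmaps: List[dict]) -> Dict[str, str]:
    """Return host name -> IP address for the cluster named in the software CR.

    Raises LookupError when no configmap carries a matching cluster label.
    """
    cluster = software_cr["metadata"]["labels"]["cluster"]
    for configmap in configmaps:
        labels = configmap.get("metadata", {}).get("labels", {})
        if labels.get("cluster") == cluster:
            return dict(configmap.get("data", {}))
    raise LookupError(f"no configmap found for cluster {cluster!r}")


# Hypothetical objects, reduced to the fields used above.
configmaps = [
    {
        "metadata": {"labels": {"cluster": "cluster-a"}},
        "data": {"master-1": "172.22.0.10", "worker-1": "172.22.0.11"},
    },
]
software_cr = {"metadata": {"labels": {"cluster": "cluster-a"}}}

for host, ip in nodes_for_software_cr(software_cr, configmaps).items():
    # A real operator would open an SSH session here and install the packages.
    print(f"would install software on {host} ({ip})")
```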

Refer

BPA Rest Agent:

Provides a straightforward RESTful API that exposes three resources: Binary Images, Container Images, and OS Images. This is accomplished by using MinIO for object storage and MongoDB for metadata.

POST - Creates a new image resource using a JSON file.

GET - Lists available image resources.

PATCH - Uploads images to the MinIO backend and updates MongoDB.

DELETE - Removes the image from MinIO and MongoDB.

More on BPA Restful API can be found at ICN Rest API.
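The division of labour between the four verbs can be modelled in memory. The sketch below is not the agent's implementation and does not reproduce its endpoints: the `ImageStore` class and its method names are hypothetical, with one dictionary standing in for the MongoDB metadata and another for the MinIO objects.

```python
from typing import Dict, List


class ImageStore:
    """In-memory stand-in: `metadata` plays MongoDB, `objects` plays MinIO."""

    def __init__(self) -> None:
        self.metadata: Dict[str, dict] = {}
        self.objects: Dict[str, bytes] = {}

    def post(self, image_id: str, description: dict) -> None:
        """Create the image resource from its JSON description (no data yet)."""
        if image_id in self.metadata:
            raise KeyError(f"image {image_id!r} already exists")
        self.metadata[image_id] = {**description, "uploaded": False}

    def get(self) -> List[str]:
        """List the identifiers of the available image resources."""
        return sorted(self.metadata)

    def patch(self, image_id: str, content: bytes) -> None:
        """Upload the image bytes and update the metadata record."""
        record = self.metadata[image_id]  # KeyError if the resource was never created
        self.objects[image_id] = content
        record["uploaded"] = True
        record["size"] = len(content)

    def delete(self, image_id: str) -> None:
        """Remove the image from both the object store and the metadata."""
        del self.metadata[image_id]
        self.objects.pop(image_id, None)


# Hypothetical usage: create, upload, list, then delete an OS image.
store = ImageStore()
store.post("ubuntu-18.04", {"type": "os", "owner": "admin"})
store.patch("ubuntu-18.04", b"fake image bytes")
print(store.get())
store.delete("ubuntu-18.04")
print(store.get())
```

Creating the record (POST) separately from uploading the bytes (PATCH) means the metadata exists before a potentially large upload starts.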

KuD

Kubernetes Deployment (KUD) is a project that uses Kubespray to bring up a Kubernetes deployment and some addons on a provisioned machine. As it is already part of ONAP, it can be effectively reused to deploy the K8s App components (as shown in fig. II), the NFV-specific components, and the NFVi SDN controller in the edge cluster. In the R2 release, KuD is used to deploy K8s addons such as Virtlet, OVN, and NFD, and Intel device plugins such as SRIOV, in the edge location (as shown in figure I). In the R3 release, KuD will evolve into the "ICN Operator" to install all K8s addons. For more information on the architecture of KuD please find the information here.

ONAP4K8s:

ONAP is used for service orchestration in the ICN BP. A lightweight Golang version of ONAP is developed as part of the Multicloud-k8s project in the ONAP community. The ICN BP developed a containerized, multi-cluster KUD to install ONAP4K8s as a plugin in any cluster provisioned by the BPA operator. ONAP4K8s is used to install the EdgeX Foundry workload and the vFW application in any edge location.

Openness: Chenjie Xu

SDEWAN:

The SDWAN module works as a software-defined router that can be used to define rules for connecting to the external internet. It is implemented as a CNF instead of a VNF for better performance and more effective deployment, and it leverages OpenWRT (an open-source project based on Linux and used on embedded devices to route network traffic) and the mwan3 package (for WAN interface management) to implement its functionality. Detailed information can be found at: ICN - SDEWAN

SDEWAN controller: Huifeng Le Cheng Li Ruoyu Ying

Cloud Storage:

Cloud Storage is used by the BPA Rest Agent to provide a storage service for binary, container, and operating system image objects. There are two candidate solutions, MinIO and GridFS. Considering cloud-native design and data reliability, we propose to use MinIO, a cloud-native object storage project that is compatible with the Amazon S3 API, provides language plugins for client applications, is easy to deploy in Kubernetes, and scales out flexibly. MinIO also provides the storage service for the HTTP server. Because MinIO needs an exported volume at bootstrap, local storage is a simple solution, but it lacks reliability for data safety; we will switch to a reliable volume provided by Ceph CSI RBD in the next release. Detailed information can be found at: Cloud Storage Design

Software components:

| Components | Link | Akraino Release target |
|---|---|---|
| Cluster-API | | R2 |
| Cluster-API-Provider-bare metal | https://github.com/metal3-io/cluster-api-provider-baremetal | R2 |
| Provision stack - Metal3 | https://github.com/metal3-io/baremetal-operator/ | R2 |
| Host Operating system | Ubuntu 18.04 | R2 |
| Quick Access Technology (QAT) drivers | Intel® C627 Chipset - https://ark.intel.com/content/www/us/en/ark/products/97343/intel-c627-chipset.html | R2 |
| NIC drivers | XL710 - https://www.intel.com/content/dam/www/public/us/en/documents/datasheets/xl710-10-40-controller-datasheet.pdf | R2 |
| ONAP | Latest release 3.0.1-ONAP - https://github.com/onap/integration/ | R2 |
| Workloads | OpenWRT SDWAN - https://openwrt.org/ | R2 |
| KUD | https://git.onap.org/multicloud/k8s/ | R2 |
| Kubespray | | R2 |
| K8s | https://github.com/kubernetes/kubeadm - v1.15 | R2 |
| Docker | https://github.com/docker - 18.09 | R2 |
| Virtlet | https://github.com/Mirantis/virtlet - 1.4.4 | R2 |
| SDN - OVN | https://github.com/ovn-org/ovn-kubernetes - 0.3.0 | R2 |
| OpenvSwitch | https://github.com/openvswitch/ovs - 2.10.1 | R2 |
| Ansible | https://github.com/ansible/ansible - 2.7.10 | R2 |
| Helm | https://github.com/helm/helm - 2.9.1 | R2 |
| Istio | https://github.com/istio/istio - 1.0.3 | R2 |
| Kata container | | R3 |
| Kubevirt | https://github.com/kubevirt/kubevirt/ - v0.18.0 | R3 |
| Collectd | | R2 |
| Rook/Ceph | https://rook.io/docs/rook/v1.0/helm-operator.html v1.0 | R2 |
| MetalLB | | R3 |
| Kube - Prometheus | | R2 |
| OpenNESS | Will be updated soon | R3 |
| Multi-tenancy | | R2 |
| Knative | | R3 |
| Device Plugins | https://github.com/intel/intel-device-plugins-for-kubernetes - QAT, SRIOV | R2 |
| Device Plugins | https://github.com/intel/intel-device-plugins-for-kubernetes - FPGA, GPU | R3 |
| Node Feature Discovery | | R2 |
| CNI | https://github.com/coreos/flannel/ - release tag v0.11.0; https://github.com/containernetworking/cni - release tag v0.7.0; https://github.com/containernetworking/plugins - release tag v0.8.1; https://github.com/containernetworking/cni#3rd-party-plugins - Multus v3.3tp, SRIOV CNI v2.0 (with SRIOV Network Device plugin) | R2 |
| Conformance Test for K8s | | R2 |


APIs


APIs with reference to Architecture and Modules

High-level definitions of the APIs are stated here; the full API definitions are assumed to be in the API documentation.

Hardware and Software Management

...


Software Management

Hardware Management

Hostname | CPU Model | Memory | Storage | 1GbE: NIC#, VLAN, (Connected extreme 480 switch) | 10GbE: NIC# VLAN, Network (Connected with IZ1 switch) |

|---|---|---|---|---|---|

Jump | 2xE5-2699 | 64GB | 3TB (Sata) | IF0: VLAN 110 (DMZ) | IF2: VLAN 112 (Private) |

node1 | 2xE5-2699 | 64GB | 3TB (Sata) | IF0: VLAN 110 (DMZ) | IF2: VLAN 112 (Private) |

node2 | 2xE5-2699 | 64GB | 3TB (Sata) | IF0: VLAN 110 (DMZ) | IF2: VLAN 112 (Private) |

node3 | 2xE5-2699 | 64GB | 3TB (Sata) | IF0: VLAN 110 (DMZ) | IF2: VLAN 112 (Private) |

node4 | 2xE5-2699 | 64GB | 3TB (Sata) | IF0: VLAN 110 (DMZ) | IF2: VLAN 112 (Private) |

node5 | 2xE5-2699 | 64GB | 3TB (Sata) | IF0: VLAN 110 (DMZ) | IF2: VLAN 112 (Private) |

Licensing

GNU/common license

...