...

Pre-Installation Requirements

Resource Requirements

The resource requirements for deployment depend on the specific blueprint and deployment target. Please see:

Installer

The current KNI blueprints use the openshift-install tool from the OKD Kubernetes distro to stand up a minimal Kubernetes cluster. All other Day 1 and Day 2 operations are then driven purely through manipulation of declarative Kubernetes manifests. To use this in the context of Akraino KNI blueprints, the project has created a set of light-weight tools that need to be installed first.

...

mkdir -p $GOPATH/src/gerrit.akraino.org/kni

cd $GOPATH/src/gerrit.akraino.org/kni

git clone https://gerrit.akraino.org/r/kni/installer

cd installer

make build

make binary

cp bin/* $GOPATH/bin
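Before running the build steps above, it can help to confirm that the tools they rely on are present. The following is a minimal sketch, not part of the KNI tooling: the function names `need_cmd` and `check_build_prereqs` are hypothetical, and the tool list (git, make, go) is an assumption inferred from the commands above.

```shell
# Report whether a command is available on PATH.
need_cmd() {
    if command -v "$1" >/dev/null 2>&1; then
        return 0
    fi
    echo "missing required command: $1" >&2
    return 1
}

# Check what the build steps above appear to need: git, make, go and GOPATH.
# Assumption: the Go toolchain is required because the build runs under GOPATH.
check_build_prereqs() {
    rc=0
    for cmd in git make go; do
        need_cmd "$cmd" || rc=1
    done
    if [ -z "${GOPATH:-}" ]; then
        echo "GOPATH is not set" >&2
        rc=1
    fi
    return "$rc"
}
```

Running `check_build_prereqs` prints one line per missing item and returns non-zero if anything is missing, so it can gate the clone and build commands.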

Secrets

Most secrets (TLS certificates, Kubernetes API keys, etc.) will be auto-generated for you, but you need to provide at least two secrets yourself:

...

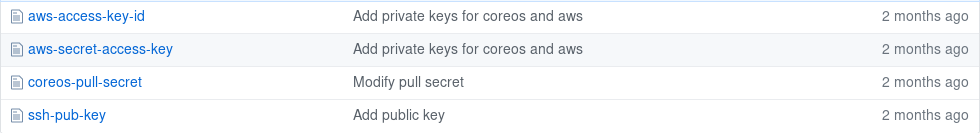

Store the public SSH key (id_rsa_kni.pub) and the pull secret there under the names ssh-pub-key and coreos-pull-secret, respectively.
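Storing the two secrets could be sketched as follows. This is an illustration under assumptions: the helper `store_secret` is hypothetical, the default location of the akraino-secrets folder (the current directory) is a guess, and the source path of the pull secret in the usage comment is a placeholder.

```shell
# Folder holding the secrets. The name akraino-secrets follows this guide;
# defaulting to the current directory is an assumption.
SECRETS_DIR="${SECRETS_DIR:-$PWD/akraino-secrets}"

# Copy one secret file into SECRETS_DIR under the name the deployment expects.
# Usage: store_secret <source-file> <secret-name>
store_secret() {
    src=$1
    name=$2
    if [ ! -s "$src" ]; then
        echo "secret source missing or empty: $src" >&2
        return 1
    fi
    mkdir -p "$SECRETS_DIR" || return 1
    chmod 700 "$SECRETS_DIR"            # keep the folder private
    cp "$src" "$SECRETS_DIR/$name" || return 1
    chmod 600 "$SECRETS_DIR/$name"      # readable by the owner only
}

# Example calls (paths are placeholders):
#   store_secret "$HOME/.ssh/id_rsa_kni.pub" ssh-pub-key
#   store_secret "$HOME/pull-secret.json"    coreos-pull-secret
```

Restricting the permissions is a precaution added here, not a requirement stated by the installer.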

Pre-Requisites for Deploying to AWS

For deploying a KNI blueprint to AWS, you need to

...

Store the aws-access-key-id and aws-secret-access-key in files of the same name in the akraino-secrets folder you created earlier.

Pre-Requisites for Deploying to Bare Metal

For deploying a KNI blueprint to bare metal using Metal3, you need to

- Acquire a server for the Provisioning Host with the following networking topology:
  - One NIC with access to the internet
  - Two NICs that will connect to the Provisioning and Baremetal networks
- Acquire two or more servers to be used as cluster nodes:
  - in the minimal case, 1 master + 1 worker
  - as a base cluster with HA, 3 masters
  - Master nodes should have 16G of RAM
  - Worker nodes should have a minimum of 16G of RAM
  - Each node is required to have 2 independent NICs
- Networking
  - One NIC on each of the masters and workers will be put on the Provisioning LAN
  - One NIC on each of the masters and workers will be put on the Baremetal LAN
  - The Baremetal and Provisioning LANs should be isolated and contain neither a DHCP nor a DNS server.
- Deploy the Provisioning Host server with CentOS 7.6
  - The server requires
    - 16G of memory
    - 1 socket / 12 cores
    - 200G of free space in /
  - Connect one NIC to the internet, one to Baremetal and one to Provisioning
- Prepare the Provisioning Host server for virtualization:
  - source utils/prep_host.sh from the kni-installer repo on the host.
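The Provisioning Host requirements listed above can be checked with a short preflight script. This is a hedged sketch, not part of kni-installer: all function names are hypothetical, it assumes a Linux host with /proc and /sys, it counts logical CPUs rather than physical cores, and the interface count also includes virtual interfaces such as bridges.

```shell
# Minimums for the Provisioning Host, taken from the list above.
MIN_MEM_GB=16
MIN_CORES=12
MIN_ROOT_FREE_GB=200
MIN_NICS=3

# Print PASS/FAIL for one requirement and return non-zero on FAIL.
# Usage: check_min <label> <actual> <minimum>
check_min() {
    if [ "$2" -ge "$3" ]; then
        echo "PASS $1: $2 (minimum $3)"
        return 0
    fi
    echo "FAIL $1: $2 (minimum $3)"
    return 1
}

# Total memory in GiB, rounded up, since the kernel reports slightly less
# than the installed amount.
mem_gb() {
    awk '/^MemTotal:/ { printf "%d\n", ($2 + 1048575) / 1048576 }' /proc/meminfo
}

# Number of online logical CPUs (may exceed the physical core count).
cpu_cores() {
    getconf _NPROCESSORS_ONLN
}

# Free space on / in GiB, rounded down (df -Pk reports 1024-byte blocks).
root_free_gb() {
    df -Pk / | awk 'NR == 2 { printf "%d\n", $4 / 1048576 }'
}

# Number of network interfaces, not counting loopback.
nic_count() {
    ls /sys/class/net 2>/dev/null | grep -vc '^lo$'
}

# Run all checks; returns non-zero if any requirement is not met.
preflight() {
    rc=0
    check_min "memory (GiB)" "$(mem_gb)" "$MIN_MEM_GB" || rc=1
    check_min "logical CPUs" "$(cpu_cores)" "$MIN_CORES" || rc=1
    check_min "free space in / (GiB)" "$(root_free_gb)" "$MIN_ROOT_FREE_GB" || rc=1
    check_min "network interfaces" "$(nic_count)" "$MIN_NICS" || rc=1
    return "$rc"
}
```

Calling `preflight` on the Provisioning Host prints one PASS or FAIL line per requirement. A passing result is only a rough indication, because the script cannot tell which network each NIC is connected to.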

Pre-Requisites for Deploying to Libvirt

For deploying a KNI blueprint to VMs on KVM/libvirt, you need to

...

Please see the upstream documentation for details.

...

Deploying a Blueprint

There is a Makefile in the root directory of this project. To deploy, use the following syntax:

export CREDENTIALS=file://$(pwd)/akraino-secrets

export BASE_REPO="git::https://gerrit.akraino.org/r/kni/templates"

export BASE_PATH="aws/3-node"

export SITE_REPO="git::https://gerrit.akraino.org/r/kni/templates"

export SETTINGS_PATH="aws/sample_settings.yaml"

make deploy

Where:

CREDENTIALS

The location of a private repository that stores your credentials. It is recommended that you keep these credentials in a private repo that only authorized people can access, for example one where access is controlled by SSH keys.

Each setting is stored in an individual file in the repository:

- ssh-pub-key: public key to be used for SSH access into the nodes

- coreos-pull-secret: contents of the OpenShift credentials that you got with https://cloud.openshift.com/clusters/install

- aws-access-key-id: (AWS deployments only) the key ID that you created in AWS

- aws-secret-access-key: (AWS deployments only) the secret for that key ID
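A quick check that a local credentials folder contains the files listed above might look like the sketch below. The function name `check_credentials` and its optional `aws` argument are hypothetical; only the file names come from this guide.

```shell
# Verify that a credentials folder contains every file the deployment needs.
# Usage: check_credentials <dir> [aws]
check_credentials() {
    dir=$1
    required="ssh-pub-key coreos-pull-secret"
    # The AWS key files are only needed for AWS deployments.
    if [ "${2:-}" = "aws" ]; then
        required="$required aws-access-key-id aws-secret-access-key"
    fi
    rc=0
    for name in $required; do
        if [ ! -s "$dir/$name" ]; then
            echo "missing or empty: $dir/$name" >&2
            rc=1
        fi
    done
    return "$rc"
}
```

For example, `check_credentials ./akraino-secrets aws` returns non-zero and names each missing file if the folder is incomplete for an AWS deployment.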

To use a private repo directly, set:

export CREDENTIALS=git@github.com:repo_user/repo_name.git

You can also clone it locally with git first, or create a local folder with the right files, and set:

export CREDENTIALS=file:///path/to/akraino-secrets

| environment variable | description |
|---|---|
| BASE_REPO | Repository for the base manifests. This is the repository where the default manifest templates are stored. For Akraino, it defaults to git::https://gerrit.akraino.org/r/kni/templates |
| BASE_PATH | Inside BASE_REPO, there is one specific folder for each blueprint and provider: aws/1-node, libvirt/1-node, etc. Specify the folder inside BASE_REPO that matches the type of deployment you want to execute. |
| SITE_REPO | Repository for the site manifests. Each site can have different manifests and settings, so this needs to be a per-site repository that stores the individual settings and the manifests generated for that site. For Akraino, it defaults to git::https://gerrit.akraino.org/r/kni/templates |
| SETTINGS_PATH | Specific site settings. Once the site repository is cloned, it needs to contain a settings.yaml file with the per-site settings. This is the path inside SITE_REPO where that settings.yaml is located. In Akraino, sample settings files for AWS and libvirt are provided at aws/sample_settings.yaml and libvirt/sample_settings.yaml in the SITE_REPO. You should create your own settings specific to your deployment. |
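The variables in the table can be set and verified together before calling make. The sketch below is illustrative only: `kni_env` and `require_env` are hypothetical helpers, and the sketch assumes that BASE_PATH follows the pattern provider/footprint and that the sample settings live at provider/sample_settings.yaml, as in the examples in this guide.

```shell
# Set the deployment variables for one provider/footprint pair.
# Assumption: paths follow "<provider>/<footprint>" and
# "<provider>/sample_settings.yaml", as in the examples in this guide.
# Usage: kni_env <provider> <footprint>    e.g. kni_env aws 3-node
kni_env() {
    templates="git::https://gerrit.akraino.org/r/kni/templates"
    export BASE_REPO="${BASE_REPO:-$templates}"
    export BASE_PATH="$1/$2"
    export SITE_REPO="${SITE_REPO:-$templates}"
    export SETTINGS_PATH="$1/sample_settings.yaml"
}

# Fail early if any of the named variables is unset or empty.
# Usage: require_env VAR1 VAR2 ...
require_env() {
    rc=0
    for var in "$@"; do
        eval "val=\${$var:-}"
        if [ -z "$val" ]; then
            echo "variable not set: $var" >&2
            rc=1
        fi
    done
    return "$rc"
}

# Example:
#   export CREDENTIALS=file://$(pwd)/akraino-secrets
#   kni_env aws 3-node
#   require_env CREDENTIALS BASE_REPO BASE_PATH SITE_REPO SETTINGS_PATH && make deploy
```

For a real site, SETTINGS_PATH should point at your own settings file rather than the sample.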

...

Settings Specific for AWS Deployments

How to deploy for AWS

There are two different footprints for AWS: 1 master/1 worker, and 3 masters/3 workers. The Makefile needs to be called with:

...

Currently, the installer fails when adding the console to the cluster for libvirt. To make it work, please follow the instructions at https://github.com/openshift/installer/pull/1371.

...

Accessing the Cluster

After the deployment finishes, a kubeconfig file will be placed inside the build/auth directory:

...

The cluster can then be managed with the kubectl or oc (a drop-in replacement with advanced functionality) CLI tools. To get the oc client, see "Step 5: Access your new cluster" at https://cloud.openshift.com/clusters/install.
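Pointing the CLI tools at the generated kubeconfig can be sketched as below. The helper `use_kubeconfig` is hypothetical, and the sketch assumes the generated file is named kubeconfig inside build/auth. KUBECONFIG is the standard environment variable that kubectl and oc read.

```shell
# Point kubectl/oc at the kubeconfig generated by the deployment.
# Assumption: the file is named "kubeconfig" inside build/auth.
# Usage: use_kubeconfig [project-root]    (defaults to the current directory)
use_kubeconfig() {
    cfg="${1:-$PWD}/build/auth/kubeconfig"
    if [ ! -f "$cfg" ]; then
        echo "kubeconfig not found: $cfg" >&2
        return 1
    fi
    export KUBECONFIG="$cfg"
}

# Example:
#   use_kubeconfig && kubectl get nodes
```

Exporting the variable affects only the current shell session, so it has to be repeated in each new terminal.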

How to destroy the cluster

To destroy the running cluster and clean up your environment, run:

make clean

...


...