
Motivation

In ICN, resources must be shared among multiple users and/or applications. In the web enterprise segment, this resembles multiple deployment teams sharing a Kubernetes (K8s) cluster. In the Telco or Cable segment, there are multiple end users, with ONAP4k8s as the service orchestrator and Kubernetes as the resource orchestrator. In an edge deployment, multiple end users share the same edge compute resources. This proposal reviews the Kubernetes multi-tenancy options, how to use them in the ICN architecture, and how they benefit the multi-tenancy use case in K8s. The challenges are to isolate each end user's deployment and to allocate resources according to their demand and quota. This proposal addresses these challenges by creating a "Logical cloud" for a set of users, which provides logical isolation and a resource quota.

Goal (In Scope)

Focus on a solution within the cluster for tenants, work with the Kubernetes SIG groups, and adapt the solution in the ICN service orchestration.

Goal (Out of Scope)

Work in the Kubernetes core or API is out of the scope of this document. Solutions exist that provide a separate control plane to each tenant in a cluster, but they are expensive and hard to adopt in a cloud-native space. Moreover, creating tenants within a single cluster does not address shared clusters; tenant creation should happen at the service orchestration level instead of the resource orchestration level.

Outline

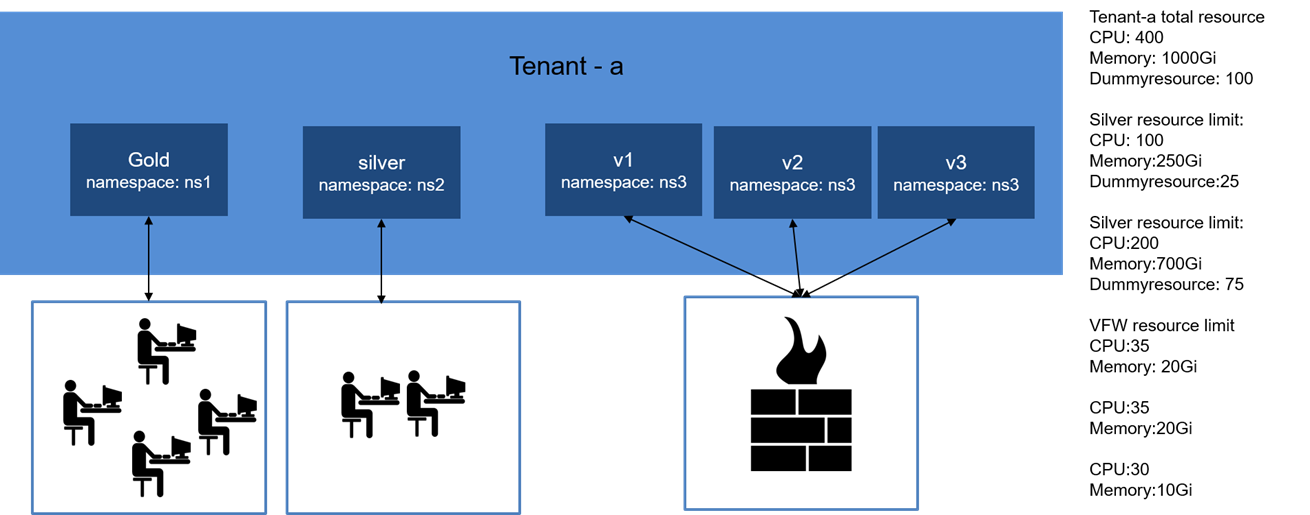

In this section, we define a multi-tenancy Logical cloud in general for the service orchestration engine. A tenant Logical cloud can be defined as a bounded, isolated amount of compute, storage, networking, and control plane resources in a Kubernetes cluster. It can also be defined as a group of users sharing a slice of the bounded resources allocated to them. These resources include:

- CPU, Memory, Extended Resources

- Network bandwidth, I/O bandwidth, and resource orchestration (Kubernetes) cluster resources

- Resource reservation to provide the Guaranteed QoS in Kubernetes

Multi-tenancy can be classified as "Soft Multitenancy" or "Hard Multitenancy":

- In Soft Multitenancy, tenants are trusted (that is, tenants obey the resource boundaries between them). One tenant should not access the resources of another tenant.

- In Hard Multitenancy, tenants cannot be trusted (any tenant may be malicious, so there must be a strong security boundary between them). One tenant should not have access to anything belonging to other tenants.

Requirement

- For a service provider, a tenant is a group of end users sharing the same cluster; the end users' resources must be tracked and accounted for their consumption in the cluster.

- In some cases, an admin or end-user application is shared among multiple tenants; in such cases, the application's resources should be tracked across the cluster.

- A centralized record of resource quotas and allocation limits should be maintained by the admin or for the end user. For example, a single kubectl query to the Kubernetes API should display the resource quota and policy for each end user or tenant.

- In the edge use case, a service orchestrator such as ICN should obtain resource details across multiple clusters from the resource orchestrator, set the resource allocation for each cluster, and decide the scheduling mechanism.

- Centralized user credentials with application orchestration.

Cloud Native Multi-tenancy Proposal - Tenant controller

The Cloud Native Multi-tenancy proposal reuses the Kubernetes multi-tenancy work to bind the tenant at both the service orchestration and resource orchestration levels.

Kubernetes Tenant project

All the material discussed in the following section is documented in the links in the reference section, and the content belongs to the authors of the respective documents.

The Kubernetes community is working on a Tenant controller that defines a tenant as a Custom Resource Definition (CRD) and defines the following elements.

The Kubernetes Tenant controller creates a multi-tenant-ready Kubernetes cluster that allows the creation of the following new types of Kubernetes objects/resources:

- A tenant resource (referred to as “Tenant-CR” for simplicity)

- A namespace template resource (referred to as “NamespaceTemplate-CR” for simplicity)

Tenant resource: a simple CRD object for the tenant, with a namespace associated with the tenant name.

Namespace template resource: defines objects such as Role, RoleBinding, ResourceQuota, and NetworkPolicy for the namespaces associated with a Tenant-CR.

Tenant controller setup

Build the Tenant controller

The following steps explain how to build and run the tenant controller in Kubernetes:

```shell
go get github.com/kubernetes-sigs/multi-tenancy
cd $GOPATH/src/github.com/kubernetes-sigs/multi-tenancy

# Put the project's tools directory on PATH
cat <<EOF > $PWD/.envrc
export PATH="$(pwd)/tools/bin:$PATH"
EOF
source .envrc

# The project has no vendor folder, so install these Go packages manually
go get github.com/golang/glog
go get k8s.io/client-go/kubernetes
go get k8s.io/client-go/kubernetes/scheme
go get k8s.io/client-go/plugin/pkg/client/auth/gcp
go get k8s.io/client-go/tools/clientcmd
go get github.com/hashicorp/golang-lru

devtk setup
devtk build

# Run the tenant controller
$PWD/out/tenant-controller/tenant-ctl -v=99 -kubeconfig=$HOME/.kube/config
```

...

- in resource orchestration (Kubernetes)

Requirement

- For a service provider, a Logical cloud is a group of end users sharing the same cluster; the end users' resources must be tracked and accounted for their consumption in the cluster.

- In some cases, an admin or end-user application is shared among multiple Logical clouds; in such cases, the application's resources should be tracked across the cluster.

- A centralized record of resource quotas and allocation limits should be maintained by the admin or for the end user. For example, a single "Resource query" API call to the service orchestrator (ONAP4K8s) should display the resource quota and policy for each end user or Logical cloud.

- In the edge use case, a service orchestrator such as ICN should obtain resource details across multiple clusters from the resource orchestrator, set the resource allocation for each cluster, and decide the scheduling mechanism.

- Centralized user credentials with application orchestration.

Distributed Cloud Manager

Objectives:

- User creation

- Namespace creation

- Logical cloud creation

- Resource isolation

Assumptions:

- During cluster registration with ONAP4K8s, the ONAP4K8s HPA feature associates each cluster with labels.

- DCM uses these labels to identify the clusters and create the logical cloud.

DCM Block diagram

DCM Flow:

- All components are exposed as DCM microservices, and queries are made through REST APIs.

- The DCM User controller microservice API is used, with the logical cloud admin credentials, to create the users and their associated logical namespace in each cluster selected by the cluster labels.

- The DCM Manager looks up the quota information; if no quota information is available, it applies the default quota for memory, CPU, and Kubernetes resources.

- The DCM Manager microservice queries the database to create the users, the namespace, and the security controller root CA.

- The DCM Manager creates the logical cloud with an Istio control plane, using the namespace and the security controller root CA.

- The quota for the logical cloud can be tuned even after the logical cloud is created.
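The quota defaulting step in the flow above can be illustrated with a short Go sketch. It assumes quotas are maps from resource name to quantity string; the default values and the `applyDefaultQuota` helper are hypothetical, since this page does not specify DCM's actual defaults.

```go
package main

import "fmt"

// defaultQuota holds hypothetical defaults that DCM could apply when a
// logical cloud request carries no quota for CPU, memory, or pod count.
var defaultQuota = map[string]string{
	"cpu":    "10",
	"memory": "32Gi",
	"pods":   "100",
}

// applyDefaultQuota returns a new map in which every resource missing from
// the requested quota is filled in from defaults. Requested values take
// precedence, and neither input map is modified.
func applyDefaultQuota(requested, defaults map[string]string) map[string]string {
	merged := make(map[string]string, len(defaults)+len(requested))
	for name, qty := range defaults {
		merged[name] = qty
	}
	for name, qty := range requested {
		merged[name] = qty
	}
	return merged
}

func main() {
	// No quota supplied: every default applies.
	fmt.Println(applyDefaultQuota(nil, defaultQuota)) // map[cpu:10 memory:32Gi pods:100]

	// Partial quota: cpu is overridden, memory and pods fall back to defaults.
	fmt.Println(applyDefaultQuota(map[string]string{"cpu": "400"}, defaultQuota)) // map[cpu:400 memory:32Gi pods:100]
}
```

Because requested values always win, a partially specified quota inherits only the resources it omits, and later tuning can simply re-run the merge with the new request.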

Logical cloud creation (with default resource quota & users)

The following request creates a logical cloud with its resource quota and users:

URL: `POST /v2/projects/<project-name>/logical-clouds`

POST BODY (`create_logical_cloud-1.json`):

```json
{
  "name": "logical-cloud-1",
  "description": "logical cloud for walmart finance department",
  "cluster-labels": "abc,xyz",
  "resources": {
    "cpu": "400",
    "memory": "1000Gi",
    "pods": "500",
    "dummy/dummyResource": 100
  },
  "user": [{
    "name": "user-1",
    "type": "certificate",
    "certificate": "/path/to/user1/logical-cloud-1-user1.csr",
    "permissions": {
      "apiGroups": ["stable.example.com"],
      "resources": ["secrets", "pods"],
      "verbs": ["get", "watch", "list", "create"]
    },
    "quota": {
      "cpu": "100",
      "memory": "500Gi",
      "pods": "100",
      "dummy/dummyResource": 20
    }
  }]
}
```

Here `name` is the unique name of the new logical cloud and `description` describes it. For each user, `name` is the name of the user for this cloud, `type` is the type of authentication credentials used by the user (certificate, APIKey, or UNPW), and `certificate` is the path to the user certificate (here, the certificate signing request).

```shell
curl -H "Content-Type: application/json" \
  -d @create_logical_cloud-1.json \
  --key ./logical-cloud-t1-admin-key.pem \
  --cert ./logical-cloud-t1-admin.pem \
  https://onap4k8s:<multicloud-k8s_NODE_PORT>/v2/projects/<project-name>/logical-clouds
```

Return Status: 201

Return Body:

```json
{
  "name": "logical-cloud-1",
  "user": "user-1",
  "Message": "logical cloud and associated user successfully created"
}
```
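The request body above can be modeled in Go to make its schema explicit. This is a sketch only: the type names (`LogicalCloudRequest`, `User`, `Permissions`) and the `decodeRequest` and `ClusterLabelList` helpers are hypothetical, and only the JSON keys come from the request. Quota and resource values are decoded as `interface{}` because the example mixes strings (`"100"`) and numbers (`20`).

```go
package main

import (
	"bytes"
	"encoding/json"
	"errors"
	"fmt"
	"strings"
)

// Permissions mirrors the RBAC-style "permissions" object of a user.
type Permissions struct {
	APIGroups []string `json:"apiGroups"`
	Resources []string `json:"resources"`
	Verbs     []string `json:"verbs"`
}

// User is one entry of the "user" list; quota values may be strings or numbers.
type User struct {
	Name        string                 `json:"name"`
	Type        string                 `json:"type"`
	Certificate string                 `json:"certificate"`
	Permissions Permissions            `json:"permissions"`
	Quota       map[string]interface{} `json:"quota"`
}

// LogicalCloudRequest is the body of POST .../logical-clouds.
type LogicalCloudRequest struct {
	Name          string                 `json:"name"`
	Description   string                 `json:"description"`
	ClusterLabels string                 `json:"cluster-labels"`
	Resources     map[string]interface{} `json:"resources"`
	Users         []User                 `json:"user"`
}

// ClusterLabelList splits the comma-separated "cluster-labels" value.
func (r *LogicalCloudRequest) ClusterLabelList() []string {
	var labels []string
	for _, l := range strings.Split(r.ClusterLabels, ",") {
		if l = strings.TrimSpace(l); l != "" {
			labels = append(labels, l)
		}
	}
	return labels
}

// decodeRequest parses a request body, rejects unknown keys, and requires a
// logical cloud name and a name for every user.
func decodeRequest(body []byte) (*LogicalCloudRequest, error) {
	dec := json.NewDecoder(bytes.NewReader(body))
	dec.DisallowUnknownFields()
	var req LogicalCloudRequest
	if err := dec.Decode(&req); err != nil {
		return nil, err
	}
	if req.Name == "" {
		return nil, errors.New("missing logical cloud name")
	}
	for i, u := range req.Users {
		if u.Name == "" {
			return nil, fmt.Errorf("user %d has no name", i)
		}
	}
	return &req, nil
}

func main() {
	body := []byte(`{"name": "logical-cloud-1", "cluster-labels": "abc,xyz",
		"user": [{"name": "user-1", "type": "certificate",
		"quota": {"cpu": "100", "dummy/dummyResource": 20}}]}`)
	req, err := decodeRequest(body)
	if err != nil {
		panic(err)
	}
	fmt.Println(req.Name, req.ClusterLabelList(), len(req.Users)) // logical-cloud-1 [abc xyz] 1
}
```

Rejecting unknown keys catches misspelled fields early; drop `DisallowUnknownFields` if the API must stay lenient. Note that the add-user request later on this page sends `user` as a single object rather than a list, which this type would reject, so the two requests would need a shared convention.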

Creating users:

The logical cloud admin key and certificate should be created by the logical cloud admin (the one who issues the curl command). The curl command requires authentication, so DCM must hold the logical cloud admin's information to authenticate it. Unauthorized users cannot create a logical cloud.

How should the user certificate be created?

- Create a private key for user-1:

  - `openssl genrsa -out logical-cloud-1-user-1.key 2048`

- Create a certificate signing request, logical-cloud-1-user1.csr:

  - `openssl req -new -key logical-cloud-1-user-1.key -out logical-cloud-1-user1.csr -subj "/CN=user-1/O=logical-cloud-1"`

These files should be created before the logical cloud and referenced in the logical cloud creation request.

Binding the user certificates to the clusters

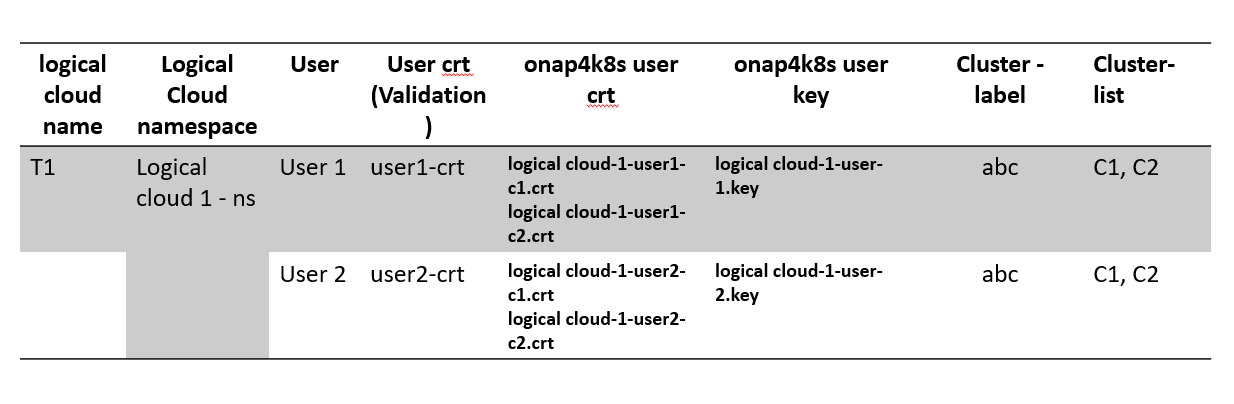

The user controller performs the following steps to bind the user certificate to the clusters selected by the cluster labels abc and xyz. Open item (Itohan Ukponmwan): get the GET URL from the HPA controller that returns the cluster list for the given cluster labels.

DCM queries the HPA controller for the list of clusters with the cluster label abc and gets the cluster list C1 and C2.
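The label lookup can be sketched as a filter over registered clusters. The page does not say whether a cluster must carry any or all of the requested labels, so this sketch supports both through a `matchAll` flag; the `Cluster` type and `clustersWithLabels` function are hypothetical stand-ins for the HPA controller query, whose URL is still an open item.

```go
package main

import (
	"fmt"
	"sort"
)

// Cluster is a hypothetical view of a cluster registered with ONAP4K8s,
// carrying the labels attached at registration time.
type Cluster struct {
	Name   string
	Labels []string
}

// clustersWithLabels returns the sorted names of clusters that carry any of
// the wanted labels, or all of them when matchAll is true. An empty wanted
// list selects nothing.
func clustersWithLabels(clusters []Cluster, wanted []string, matchAll bool) []string {
	if len(wanted) == 0 {
		return nil
	}
	var names []string
	for _, c := range clusters {
		have := make(map[string]bool, len(c.Labels))
		for _, l := range c.Labels {
			have[l] = true
		}
		hits := 0
		for _, w := range wanted {
			if have[w] {
				hits++
			}
		}
		if (matchAll && hits == len(wanted)) || (!matchAll && hits > 0) {
			names = append(names, c.Name)
		}
	}
	sort.Strings(names)
	return names
}

func main() {
	registered := []Cluster{
		{Name: "c1", Labels: []string{"abc"}},
		{Name: "c2", Labels: []string{"abc", "xyz"}},
		{Name: "c3", Labels: []string{"edge"}},
	}
	fmt.Println(clustersWithLabels(registered, []string{"abc"}, false))       // [c1 c2]
	fmt.Println(clustersWithLabels(registered, []string{"abc", "xyz"}, true)) // [c2]
}
```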

Each cluster (C1, C2) has a Kubernetes cluster CA certificate and key (for C1, c1-ca.crt and c1-ca.key). The user controller uses them to sign logical-cloud-1-user1.csr and produce the final certificate logical-cloud-1-user1-c1.crt, and does the same for cluster C2. Once the logical cloud creation request is posted, the user controller performs the following steps through gRPC with the Go client API:

$ openssl x509 -req -in logical-cloud-1-user1.csr -CA CA_LOCATION/c1-ca.crt -CAkey CA_LOCATION/c1-ca.key -CAcreateserial -out logical-cloud-1-user1-c1.crt -days 500

$ kubectl --kubeconfig=/path/to/c1/kubeconfig config set-credentials user-1 --client-certificate=./logical-cloud-1-user1-c1.crt --client-key=./logical-cloud-1-user-1.key

The users of a logical cloud can be listed and deleted with the following API:

GET URL: `/v2/projects/<project-name>/logical-clouds/<name>/users`

RETURN STATUS: 200

RETURN BODY:

```json
{
  "users": [
    {
      "name": "user-1",
      "type": "certificate",
      "certificate": "/path/to/user1/logical-cloud-1-user1.csr",
      "permissions": {
        "apiGroups": ["stable.example.com"],
        "resources": ["secrets", "pods"],
        "verbs": ["get", "watch", "list", "create"]
      },
      "quota": {
        "cpu": "100",
        "memory": "500Gi",
        "pods": "100",
        "dummy/dummyResource": 20
      }
    },
    {
      "name": "user-2",
      "type": "certificate",
      "certificate": "/path/to/user2/logical-cloud-1-user2.csr",
      "permissions": {
        "apiGroups": ["stable.example.com"],
        "resources": ["secrets", "pods"],
        "verbs": ["get", "watch", "list", "create"]
      },
      "quota": {
        "cpu": "100",
        "memory": "500Gi",
        "pods": "100",
        "dummy/dummyResource": 20
      }
    }
  ]
}
```

DELETE

URL: `/v2/projects/<project-name>/logical-clouds/<name>/users`

URL: `/v2/projects/<project-name>/logical-clouds/<name>/user/<user-name>`

RETURN STATUS: 204
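Because users slice the bounded resources of their logical cloud, one natural check when adding or listing users is that the summed per-user quotas do not exceed the logical cloud's quota. This page does not state that rule, so the Go sketch below is an assumption. Its `parseQuantity` helper is hypothetical and handles only plain integers and the binary suffixes `Ki`, `Mi`, `Gi`, and `Ti`; the full Kubernetes quantity syntax is implemented by `k8s.io/apimachinery/pkg/api/resource`.

```go
package main

import (
	"fmt"
	"sort"
	"strconv"
	"strings"
)

// binarySuffixes lists the only quantity suffixes this sketch understands.
var binarySuffixes = []struct {
	suffix string
	factor int64
}{{"Ki", 1 << 10}, {"Mi", 1 << 20}, {"Gi", 1 << 30}, {"Ti", 1 << 40}}

// parseQuantity converts a simplified quantity such as "400" or "500Gi" to
// an integer. Decimal values and other suffixes (for example "m") are rejected.
func parseQuantity(s string) (int64, error) {
	for _, b := range binarySuffixes {
		if strings.HasSuffix(s, b.suffix) {
			n, err := strconv.ParseInt(strings.TrimSuffix(s, b.suffix), 10, 64)
			if err != nil {
				return 0, err
			}
			return n * b.factor, nil
		}
	}
	return strconv.ParseInt(s, 10, 64)
}

// overQuota returns, sorted, the resources whose summed user quotas exceed
// the logical cloud quota. A resource missing from the cloud quota counts as over.
func overQuota(cloud map[string]string, users []map[string]string) ([]string, error) {
	sums := map[string]int64{}
	for _, u := range users {
		for name, qty := range u {
			n, err := parseQuantity(qty)
			if err != nil {
				return nil, fmt.Errorf("user quota %s=%q: %w", name, qty, err)
			}
			sums[name] += n
		}
	}
	var over []string
	for name, used := range sums {
		limit, ok := cloud[name]
		if !ok {
			over = append(over, name)
			continue
		}
		limitN, err := parseQuantity(limit)
		if err != nil {
			return nil, fmt.Errorf("cloud quota %s=%q: %w", name, limit, err)
		}
		if used > limitN {
			over = append(over, name)
		}
	}
	sort.Strings(over)
	return over, nil
}

func main() {
	cloud := map[string]string{"cpu": "400", "memory": "1000Gi", "pods": "500"}
	user := map[string]string{"cpu": "100", "memory": "500Gi", "pods": "100"}
	fmt.Println(overQuota(cloud, []map[string]string{user, user}))                          // [] <nil>
	fmt.Println(overQuota(cloud, []map[string]string{user, user, {"memory": "300Gi"}})) // [memory] <nil>
}
```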

Creating namespaces:

DCM queries the namespace controller through gRPC to create the namespace "logical-cloud-1-ns" in the clusters with cluster labels abc and xyz. The namespace controller performs the following steps through gRPC with the Go client API to create the namespace and associate the user with it:

$ kubectl create namespace logical-cloud-1-ns --kubeconfig=/path/to/c1/kubeconfig

$ kubectl config set-context logical-cloud-1-user-1-context --cluster=c1 --namespace=logical-cloud-1-ns --user=user-1 --kubeconfig=/path/to/c1/kubeconfig
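Kubernetes namespace names must be RFC 1123 DNS labels: at most 63 characters, lowercase alphanumerics or `-`, starting and ending with an alphanumeric. That is why the names used in these commands cannot contain spaces or uppercase letters. The Go sketch below derives the namespace name from the logical cloud name with a `-ns` suffix, as in `logical-cloud-1-ns`, and validates it. `namespaceFor` is a hypothetical helper; in real code, `IsDNS1123Label` from `k8s.io/apimachinery/pkg/util/validation` provides the canonical check.

```go
package main

import (
	"fmt"
	"regexp"
)

// dns1123Label matches RFC 1123 labels as Kubernetes uses them for namespaces.
var dns1123Label = regexp.MustCompile(`^[a-z0-9]([-a-z0-9]*[a-z0-9])?$`)

// namespaceFor derives the namespace name for a logical cloud and rejects
// names Kubernetes would not accept.
func namespaceFor(logicalCloud string) (string, error) {
	ns := logicalCloud + "-ns"
	if len(ns) > 63 || !dns1123Label.MatchString(ns) {
		return "", fmt.Errorf("%q is not a valid namespace name", ns)
	}
	return ns, nil
}

func main() {
	for _, lc := range []string{"logical-cloud-1", "logical cloud-1", "Logical-cloud-1"} {
		ns, err := namespaceFor(lc)
		fmt.Printf("%q -> %q (err: %v)\n", lc, ns, err)
	}
}
```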

The namespaces of a logical cloud can be listed and deleted with the following API:

GET URL: `/v2/projects/<project-name>/logical-clouds/<name>/namespaces`

RETURN STATUS: 200

RETURN BODY:

```json
{
  "clusters": ["c1", "c2"],
  "namespaces": {
    "name": "logical-cloud-1-ns"
  }
}
```

Here `namespaces.name` is the name of the namespace for the logical cloud.

DELETE

URL: `/v2/projects/<project-name>/logical-clouds/<name>/namespaces`

RETURN STATUS: 204

DCM Database

The DCM database is based on MongoDB.

Creating Logical cloud

- The logical cloud controller uses Istio to create the logical cloud control plane, following https://istio.io/docs/setup/install/multicluster/gateways/ .

- The DCM manager queries the security controller at /v1/cadist/projects/{project-name}/logicalclouds/{logicalcloud-name}/clusters/{cluster-name} to get the bundle details for clusters C1 and C2.

- The returned "JSON bundle" is expected to provide the path to the root certificate.

- The logical cloud controller creates the Istio control plane in clusters C1 and C2 for the namespace logical-cloud-1-ns.

URL: `POST /v2/projects/<project-name>/logical-clouds/control-plane`

POST BODY:

```json
{
  "name": "logical-cloud-1",
  "namespace": "logical-cloud-1-istio-system",
  "ca-cert": "",
  "ca-key": "",
  "root-cert": "",
  "cert-chain": "",
  "user": {
    "name": "user-2",
    "type": "certificate",
    "certificate": "/path/to/user2/logical-cloud-1-user2.csr",
    "permissions": {
      "apiGroups": ["stable.example.com"],
      "resources": ["secrets", "pods"],
      "verbs": ["get", "watch", "list", "create"]
    },
    "quota": {
      "cpu": "200",
      "memory": "300Gi",
      "pods": "200",
      "dummy/dummyResource": 30
    }
  }
}
```

Here `name` is the unique name of the logical cloud, and the `user` fields have the same meaning as in the logical cloud creation request.

```shell
curl -H "Content-Type: application/json" \
  -d @create_logical_cloud-1-user-2.json \
  --key ./logical-cloud-t1-admin-key.pem \
  --cert ./logical-cloud-t1-admin.pem \
  https://onap4k8s:<multicloud-k8s_NODE_PORT>/v2/projects/<project-name>/logical-clouds/control-plane
```

Return Status: 201

Return Body:

```json
{
  "name": "logical-cloud-1",
  "user": "user-2",
  "Message": "logical cloud and associated user successfully created"
}
```

Creating new users in an existing Logical cloud

The following request adds user-2 to the existing logical cloud logical-cloud-1:

URL: `POST /v2/projects/<project-name>/logical-clouds`

POST BODY (`create_logical_cloud-1-user-2.json`):

```json
{
  "name": "logical-cloud-1",
  "user": {
    "name": "user-2",
    "type": "certificate",
    "certificate": "/path/to/user2/logical-cloud-1-user2.csr",
    "permissions": {
      "apiGroups": ["stable.example.com"],
      "resources": ["secrets", "pods"],
      "verbs": ["get", "watch", "list", "create"]
    },
    "quota": {
      "cpu": "200",
      "memory": "300Gi",
      "pods": "200",
      "dummy/dummyResource": 30
    }
  }
}
```

Here `name` identifies the existing logical cloud to which the user is added; the `user` fields have the same meaning as in the logical cloud creation request.

```shell
curl -H "Content-Type: application/json" \
  -d @create_logical_cloud-1-user-2.json \
  --key ./logical-cloud-t1-admin-key.pem \
  --cert ./logical-cloud-t1-admin.pem \
  https://onap4k8s:<multicloud-k8s_NODE_PORT>/v2/projects/<project-name>/logical-clouds
```

Return Status: 201

Return Body:

```json
{
  "name": "logical-cloud-1",
  "user": "user-2",
  "Message": "logical cloud and associated user successfully created"
}
```

User creation details:

The Tenant CRD object is defined by the following CRD objects:

...

Block diagram representation of tenant resource slicing

Tenant controller architecture

...

ICN Requirement and Tenant controller gaps

| ICN Requirement | Tenant Controller |
|---|---|
| Multi-cluster tenant controller | Cluster-level tenant controller |
| Identifying K8s clusters for this tenant based on cluster labels | Tenant is created with a CR at cluster level [Implemented] |
| At K8s cluster level | |
| Certificate provisioning with tenant | Suggestion to bind the tenant with a Kubernetes context to see the namespaces associated with it [Not implemented] |

Multi-Cluster Tenant controller

...