Introduction

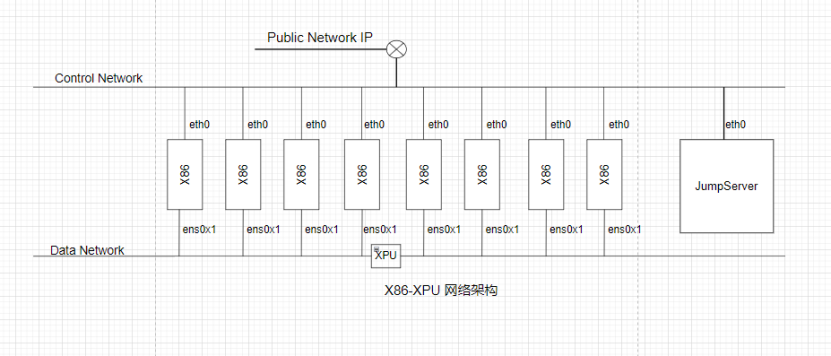

Since a DPU is a PCIe-compatible device, DPUs can be combined with PCIe networking. In R6, we introduce a hardware layer (a physical link/fabric layer) between the DPUs and the CPUs, as shown in the figure above. This layer lets us extend the size of the DPU cluster while still using the DPU management features.

Networking Topology

The CoB design has multiple networks: at least one PCIe network connecting multiple CPUs, plus additional connections such as traditional RJ45 ports for management. As a result, all CoB hardware is cloud-native compatible from the outset.

Networking in the CoB System for Cloud-Native Applications

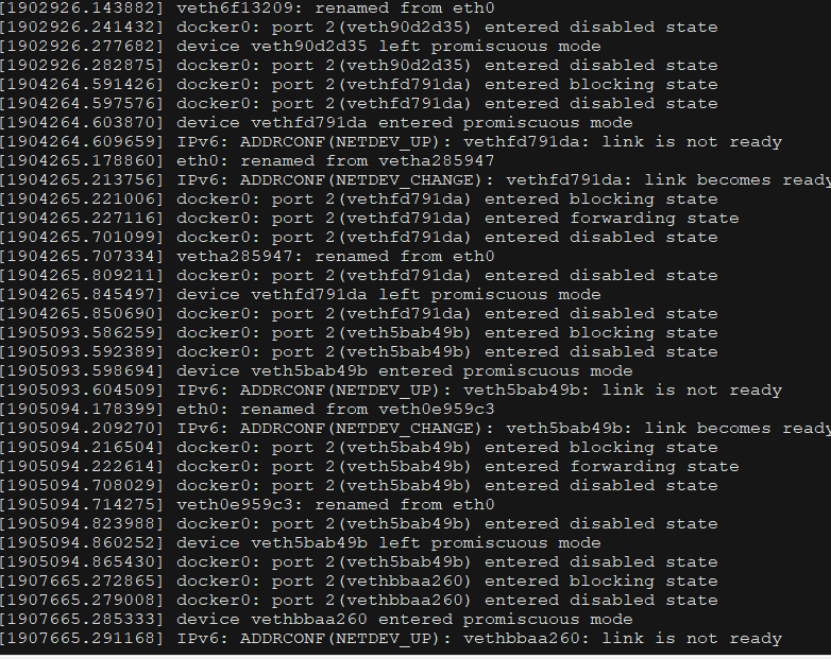

Docker installation log

Docker config

ExecStart=/usr/bin/dockerd -H unix:///var/run/docker.sock -H tcp://0.0.0.0:2375
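The line above is the `ExecStart` of the Docker systemd unit. One common way to apply it without editing the packaged unit file is a systemd drop-in. The sketch below assumes the unit is named `docker.service`; the helper name `write_docker_dropin` and the file name `override.conf` are hypothetical. Note that `tcp://0.0.0.0:2375` exposes the Docker API unencrypted and unauthenticated on every interface, so it is only suitable for an isolated lab network; Docker documents TLS on port 2376 for remote access.

```shell
# Hypothetical helper: write a systemd drop-in that overrides dockerd's ExecStart.
# $1: systemd unit directory (defaults to /etc/systemd/system).
write_docker_dropin() {
  local unit_dir="${1:-/etc/systemd/system}"
  local dropin_dir="$unit_dir/docker.service.d"
  mkdir -p "$dropin_dir" || return 1
  # The empty "ExecStart=" clears the command inherited from docker.service;
  # without it systemd rejects a second ExecStart for a non-oneshot service.
  # WARNING: tcp://0.0.0.0:2375 is plaintext and unauthenticated (lab use only).
  cat > "$dropin_dir/override.conf" << 'EOF'
[Service]
ExecStart=
ExecStart=/usr/bin/dockerd -H unix:///var/run/docker.sock -H tcp://0.0.0.0:2375
EOF
}
```

On the host, run `write_docker_dropin && systemctl daemon-reload && systemctl restart docker`. When the hosts are passed as `-H` flags like this, do not also set `hosts` in `daemon.json`: dockerd refuses to start when the same option is given both as a flag and in the configuration file.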

docker-compose

cat >/etc/docker/daemon.json << EOF
{
  "data-root": "/home/docker",
  "debug": true,
  "default-address-pools": [
    {
      "base": "192.1.0.0/16",
      "size": 24
    }
  ]
}
EOF

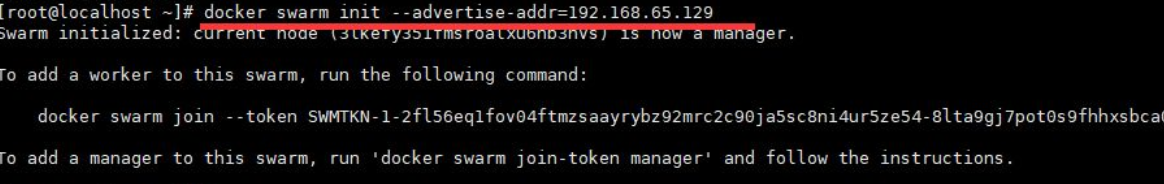
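`dockerd` will not start if `daemon.json` is malformed, so it can help to validate the file before restarting the daemon. The sketch below assumes `python3` is installed and uses its standard `json.tool` module; both helper names are hypothetical. The second helper works out how many networks a `default-address-pools` entry provides: a `/16` base split into `/24` networks gives 2^(24-16) = 256 networks. Note also that `192.1.0.0/16` lies outside the RFC 1918 private ranges (`10.0.0.0/8`, `172.16.0.0/12`, `192.168.0.0/16`), so container networks drawn from it could overlap routable public addresses.

```shell
# Hypothetical helper: exit non-zero if the file is not valid JSON.
# A syntax error in daemon.json prevents dockerd from starting.
validate_daemon_json() {
  python3 -m json.tool "${1:-/etc/docker/daemon.json}" > /dev/null
}

# Hypothetical helper: number of networks a default-address-pools entry yields.
# A /base_prefix pool carved into /size networks gives 2^(size - base_prefix).
pool_subnet_count() {
  local base_prefix="$1" size="$2"
  echo $(( 1 << (size - base_prefix) ))
}
```

For example, `validate_daemon_json && systemctl restart docker` restarts only if the file parses, and `pool_subnet_count 16 24` prints `256`.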

docker swarm init

docker swarm init --advertise-addr 10.3.1.16 # initialize the swarm with this node as manager

docker swarm join-token manager # print the command other nodes run to join 10.3.1.16 as managers

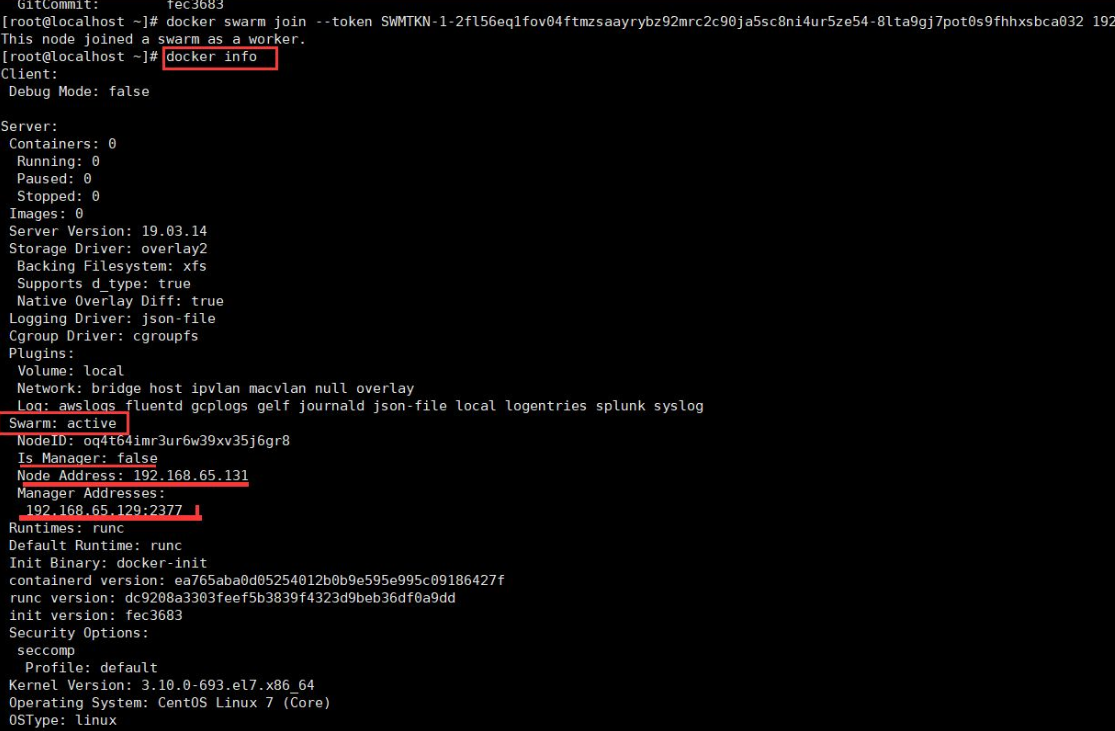
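`docker swarm join-token manager` prints a full `docker swarm join` command for adding another manager. In the node listing below, `slave` has no manager status, which indicates it joined as a worker; the worker token comes from `docker swarm join-token worker`, and its `-q` flag prints only the token. The hypothetical helper below just assembles the join command, assuming the default Swarm management port 2377/tcp.

```shell
# Hypothetical helper: build the command a node runs to join the swarm.
# $1: join token, $2: manager address. 2377/tcp is Swarm's default management port.
swarm_join_cmd() {
  local token="$1" manager_addr="$2"
  printf 'docker swarm join --token %s %s:2377\n' "$token" "$manager_addr"
}
```

On the manager, `swarm_join_cmd "$(docker swarm join-token -q worker)" 10.3.1.16` prints the command to run on the worker node.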

Show cluster nodes

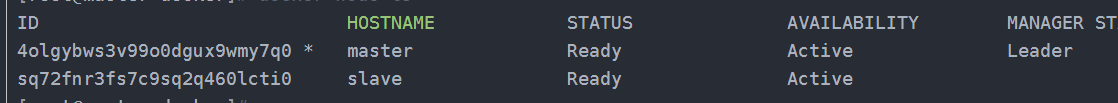

[root@master docker]# docker node ls
ID                            HOSTNAME   STATUS   AVAILABILITY   MANAGER STATUS   ENGINE VERSION
4olgybws3v99o0dgux9wmy7q0 *   master     Ready    Active         Leader           19.03.5
sq72fnr3fs7c9sq2q460lcti0     slave      Ready    Active                          19.03.5

Docker cluster status log
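For scripted health checks, `docker node ls --format` can emit just the fields of interest instead of the table above. The sketch assumes the `{{.Hostname}}` and `{{.Status}}` template placeholders; the function name `check_nodes_ready` is hypothetical. It reads one `HOSTNAME STATUS` pair per line, reports each node that is not `Ready` on stderr, and returns non-zero if any such node exists.

```shell
# Hypothetical helper: read "HOSTNAME STATUS" lines on stdin,
# report nodes that are not Ready, and fail if any were found.
check_nodes_ready() {
  local host status bad=0
  while read -r host status; do
    [ -z "$host" ] && continue          # skip blank lines
    if [ "$status" != "Ready" ]; then
      echo "node $host is $status" >&2
      bad=$((bad + 1))
    fi
  done
  [ "$bad" -eq 0 ]
}
```

Usage on the manager: `docker node ls --format '{{.Hostname}} {{.Status}}' | check_nodes_ready && echo "all nodes Ready"`.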

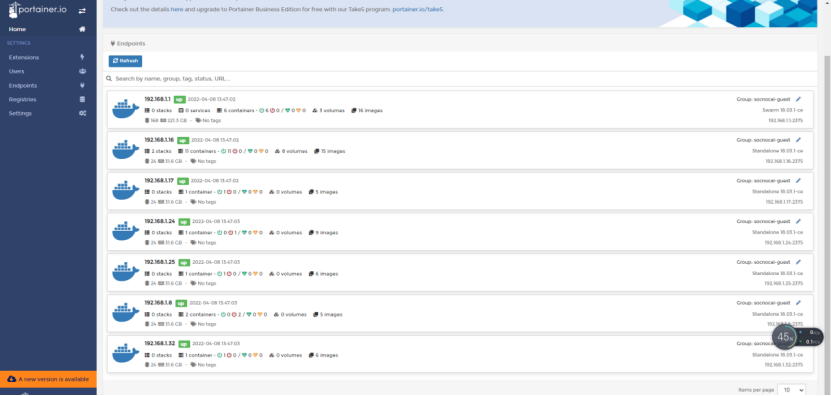

Web portal screenshot

This test evaluates the performance of SmartNIC offloading.

Test API description

No test APIs are currently provided.

Test Dashboards

n/a

Additional Testing

n/a

Bottlenecks/Errata

n/a