...

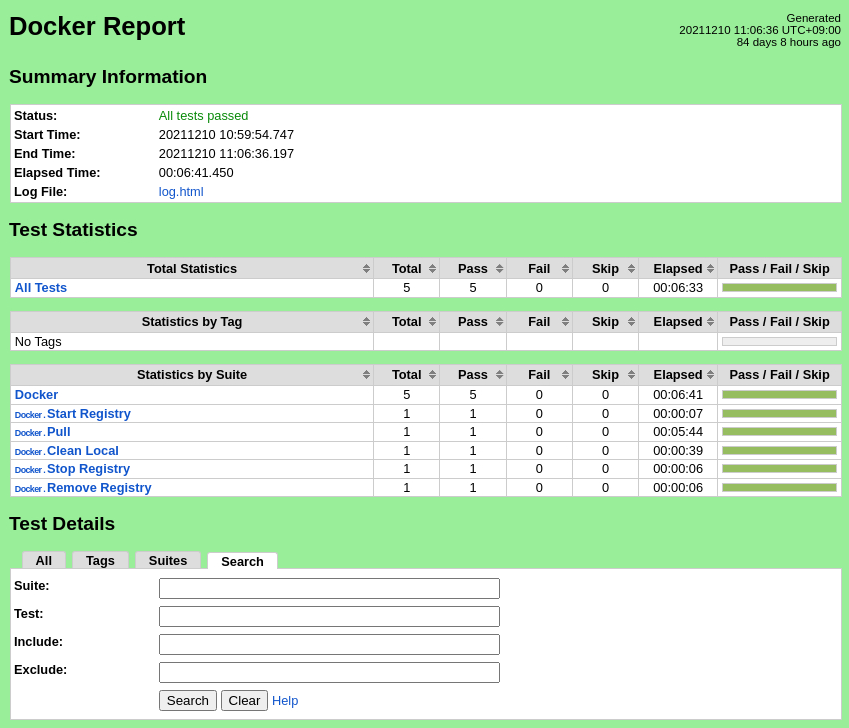

The test script will start the registry, pull upstream images and populate the registry, clean images left over from the pull process, stop the registry, and remove the registry. The robot command should report success for all test cases.
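Robot Framework's exit status equals the number of failed test cases (0 when everything passes, capped at 250, with 251 to 255 reserved for errors), so a CI wrapper can turn it into a one-line verdict. The following is a minimal sketch; the helper name `robot_verdict` is hypothetical and is not part of the project's scripts.

```shell
#!/bin/sh
# Hypothetical helper: translate a Robot Framework exit status into a verdict.
# robot exits with 0 when all tests pass, 1-249 for that many failed tests,
# 250 for 250 or more failures, and 251-255 for errors such as invalid
# arguments or an interrupted run.
robot_verdict() {
    rc=$1
    if [ "$rc" -eq 0 ]; then
        echo "PASS: all test cases succeeded"
    elif [ "$rc" -le 249 ]; then
        echo "FAIL: $rc test case(s) failed"
    elif [ "$rc" -eq 250 ]; then
        echo "FAIL: 250 or more test cases failed"
    else
        echo "ERROR: robot did not complete (exit status $rc)"
    fi
}

# Usage, immediately after the robot command: robot_verdict "$?"
```

The same helper applies unchanged to each of the regression suites in this document, since they are all driven by the robot command.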

Test Results

Pass (5/5 test cases)

CI/CD Regression Tests: Node Setup

...

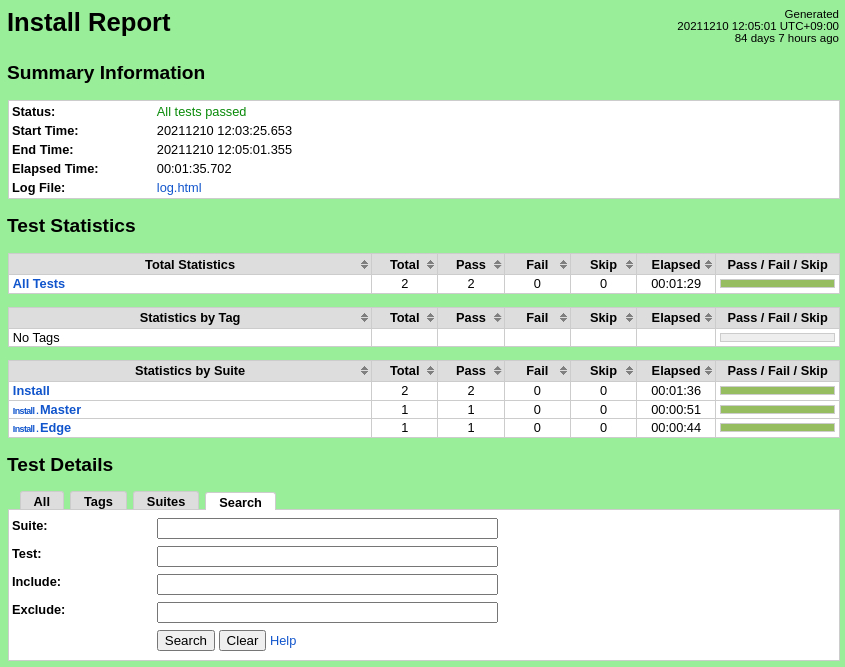

The test scripts will initialize the master and edge nodes and verify the required software is installed. The robot command should report success for all test cases.

Test Results

Pass (2/2 test cases)

CI/CD Regression Tests: Cluster Setup & Teardown

...

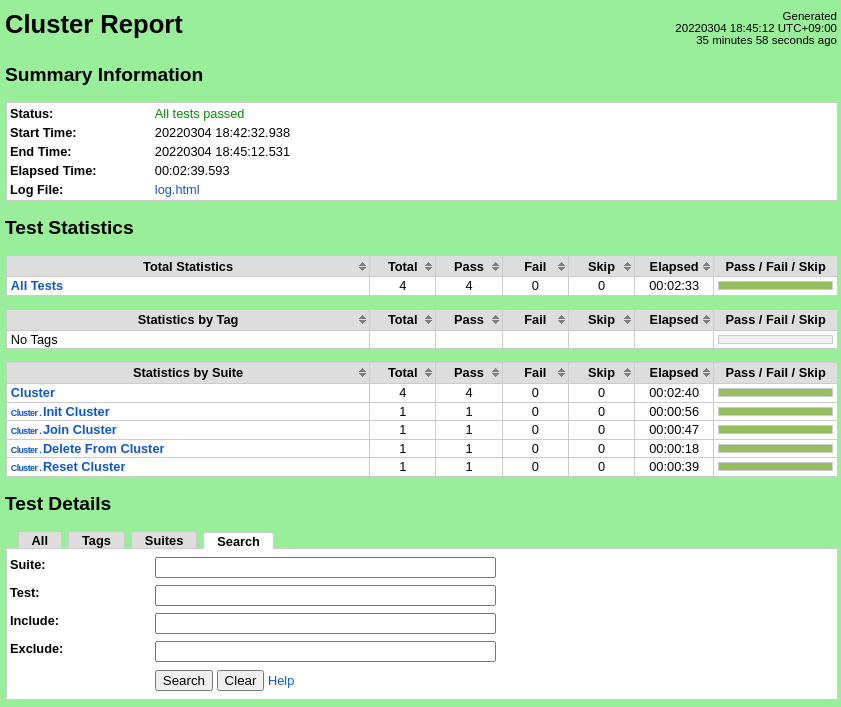

The test scripts will start the cluster, add all configured edge nodes, remove the edge nodes, and reset the cluster. The robot command should report success for all test cases.

Test Results

Pass (4/4 test cases)

CI/CD Regression Tests: EdgeX Services

...

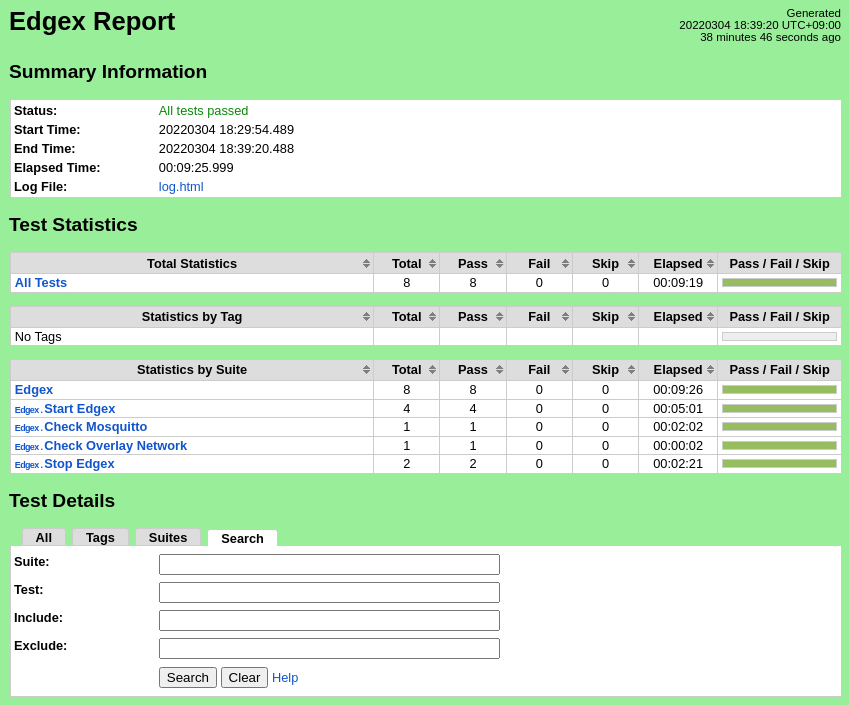

The test scripts will start the EdgeX micro-services on all edge nodes, confirm that MQTT messages are being delivered from the edge nodes, and stop the EdgeX micro-services. The robot command should report success for all test cases.

Test Results

Pass (8/8 test cases)

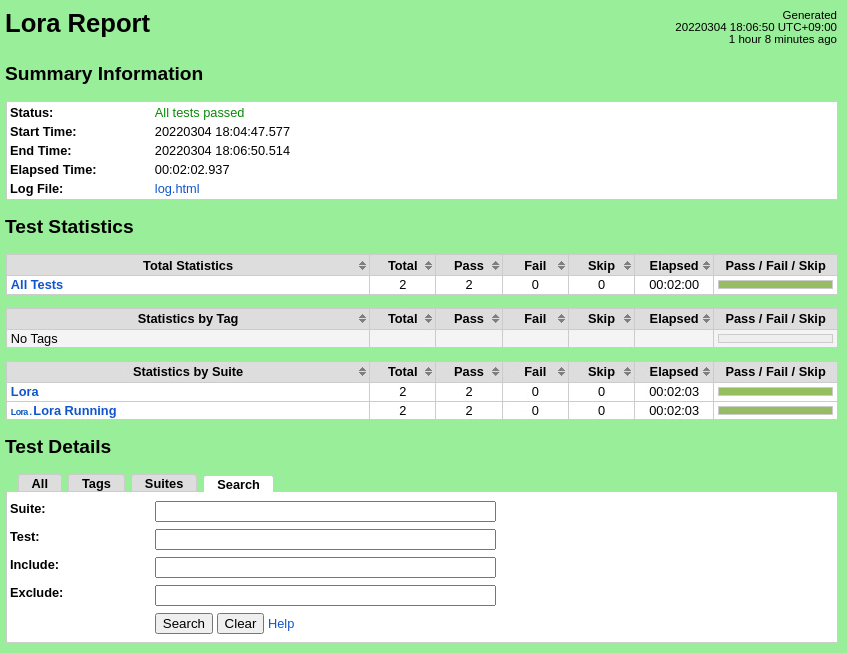

CI/CD Regression Tests: LoRa Device Service

...

The Robot Framework should report success for all test cases.

Test Results

Pass (2/2 test cases)

Feature Project Tests

N/A

BluVal Tests

...

We use Ubuntu 20.04, so we ran the Vuls test as follows:

Create directory

$ mkdir ~/vuls
$ cd ~/vuls
$ mkdir go-cve-dictionary-log goval-dictionary-log gost-log

Fetch NVD

$ docker run --rm -it \
    -v $PWD:/go-cve-dictionary \
    -v $PWD/go-cve-dictionary-log:/var/log/go-cve-dictionary \
    vuls/go-cve-dictionary fetch nvd

Fetch OVAL

$ docker run --rm -it \
    -v $PWD:/goval-dictionary \
    -v $PWD/goval-dictionary-log:/var/log/goval-dictionary \
    vuls/goval-dictionary fetch ubuntu 16 17 18 19 20

Fetch gost

$ docker run --rm -i \
    -v $PWD:/gost \
    -v $PWD/gost-log:/var/log/gost \
    vuls/gost fetch ubuntu

Create config.toml

[servers]
[servers.master]
host = "192.168.51.22"
port = "22"
user = "test-user"
sshConfigPath = "/root/.ssh/config"
keyPath = "/root/.ssh/id_rsa" # path to ssh private key in docker

Start vuls container to run tests

$ docker run --rm -it \
    -v ~/.ssh:/root/.ssh:ro \
    -v $PWD:/vuls \
    -v $PWD/vuls-log:/var/log/vuls \
    -v /etc/localtime:/etc/localtime:ro \
    -v /etc/timezone:/etc/timezone:ro \
    vuls/vuls scan \
    -config=./config.toml

Get the report

$ docker run --rm -it \
    -v ~/.ssh:/root/.ssh:ro \
    -v $PWD:/vuls \
    -v $PWD/vuls-log:/var/log/vuls \
    -v /etc/localtime:/etc/localtime:ro \
    vuls/vuls report \
    -format-list \
    -config=./config.toml
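Before starting the scan, it can help to confirm that the three fetch steps actually produced their databases. The sketch below assumes the fetch containers write to the mounted working directory under the tools' default file names (`cve.sqlite3`, `oval.sqlite3`, `gost.sqlite3`); the helper name `check_vuls_dbs` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: verify that the vulnerability databases exist and are
# non-empty. Assumption: the default database file names are in use and the
# files live in the directory that was mounted into the fetch containers.
check_vuls_dbs() {
    dir=${1:-.}
    missing=0
    for db in cve.sqlite3 oval.sqlite3 gost.sqlite3; do
        if [ ! -s "$dir/$db" ]; then
            echo "missing or empty: $dir/$db" >&2
            missing=1
        fi
    done
    return "$missing"
}

# Usage: check_vuls_dbs ~/vuls && echo "databases ready"
```

If a database file is reported missing, rerunning the corresponding fetch step is usually the first thing to try.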

...


Test Dashboards

The above fixes are implemented in the Ansible playbook deploy/playbook/init_cluster.yml and the configuration file deploy/playbook/k8s/fix.yml.

Fix for CAP_NET_RAW Enabled: Create a PodSecurityPolicy with requiredDropCapabilities: NET_RAW. The policy is shown below. The complete fix is implemented in the Ansible playbook deploy/playbook/init_cluster.yml and configuration files deploy/playbook/k8s/default-psp.yml and deploy/playbook/k8s/system-psp.yml, plus enabling PodSecurityPolicy checking in deploy/playbook/k8s/config.yml.

apiVersion: policy/v1beta1
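For orientation only, a generic PodSecurityPolicy that drops NET_RAW might look like the manifest printed by the sketch below. The policy name `drop-net-raw`, the helper name `print_net_raw_psp`, and every field other than `apiVersion` and `requiredDropCapabilities` are assumptions; this is not the content of default-psp.yml or system-psp.yml.

```shell
#!/bin/sh
# Hypothetical helper: print a generic PodSecurityPolicy that drops NET_RAW.
# Only apiVersion and requiredDropCapabilities come from the text; the rest
# are permissive placeholders chosen to make the manifest complete.
print_net_raw_psp() {
    cat <<'EOF'
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: drop-net-raw          # hypothetical name
spec:
  privileged: false
  requiredDropCapabilities:
    - NET_RAW                 # the setting named in the fix
  seLinux:
    rule: RunAsAny
  runAsUser:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  fsGroup:
    rule: RunAsAny
  volumes:
    - '*'
EOF
}

# Usage: print_net_raw_psp | kubectl apply -f -
```

A policy only takes effect when the PodSecurityPolicy admission check is enabled and the policy is bound to the relevant service accounts, which is what the playbook and config.yml changes described above take care of.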

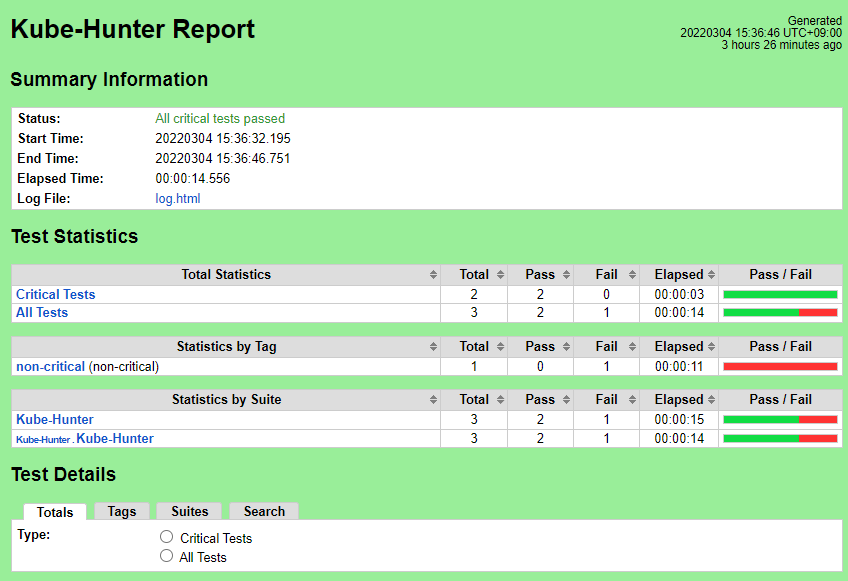

Results after fixes are shown below:

Note that although all Kube-Hunter vulnerabilities have been fixed, the results still show one test failure. The "Inside-a-Pod Scanning" test case reports failure, apparently because the log ends with "Kube Hunter couldn't find any clusters" instead of "No vulnerabilities were found." Because this test case detected and reported vulnerabilities earlier, and those vulnerabilities are no longer reported, we believe the failure is spurious rather than a real finding. It may be caused by this issue: https://github.com/aquasecurity/kube-hunter/issues/358
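The distinction between the two log endings can be checked mechanically. The following sketch classifies a saved kube-hunter log by its last non-empty line; the helper name `classify_kube_hunter_log` and the three labels it prints are hypothetical, while the two messages are the ones quoted above.

```shell
#!/bin/sh
# Hypothetical helper: classify a kube-hunter log by its last non-empty line.
classify_kube_hunter_log() {
    # Drop blank lines, then keep only the final line of the log.
    last=$(grep -v '^[[:space:]]*$' "$1" | tail -n 1)
    case $last in
        *"No vulnerabilities were found"*)
            echo "clean" ;;
        *"Kube Hunter couldn't find any clusters"*)
            echo "no-cluster-found" ;;
        *)
            echo "review" ;;
    esac
}

# Usage: classify_kube_hunter_log path/to/kube-hunter.log
```

A result of `no-cluster-found` means the scanner did not reach a cluster at all, so it should be read as "nothing was scanned" rather than as evidence of a vulnerability.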

Test Dashboards

Single-pane view of the test scores for the blueprint.

| Total Tests | Tests Executed | Pass | Fail | In Progress |
|---|---|---|---|---|
| 26 | 26 | 24 | 2 | 0 |

*Vuls is counted as one test case.

*One Kube-Hunter failure is counted as a pass. See above.

Additional Testing

None at this time.

Bottlenecks/Errata

None at this time.