...

Where on the Edge

Business Drivers


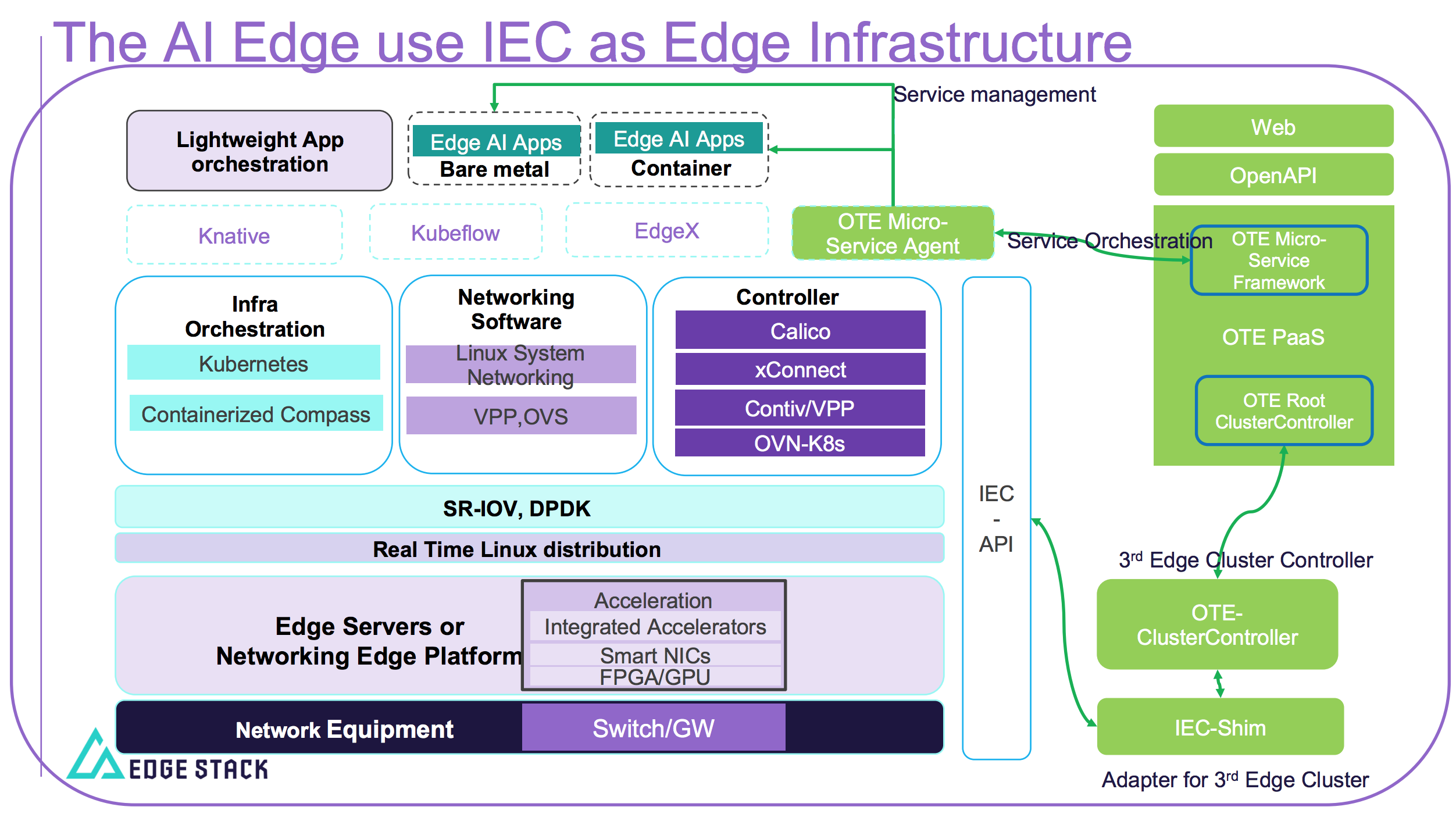

AI Edge provides cluster management for different logical MEC edge clusters. Through a standard API interface, third-party clusters can easily join AI Edge management, so that an AI application can be scheduled and deployed to a specific edge node through unified access. The benefits are:

- Lower cost of managing multiple edge clusters, and better utilization of the computing power of edge devices;

- Lower network load and latency, and better security, because the application runs locally;

- Edge cluster autonomy.

Overall Architecture

The AI Edge blueprint architecture consists of a cluster control manager with a web platform in the cloud and multiple edge clusters. The number of clusters is theoretically unlimited, which helps solve the management and scheduling problems of large-scale mobile edge clusters in the 5G era. In the development environment, we tested with one IEC cluster of 3 nodes.

The cluster control manager, which consists of ote-web, openapi, and a lightweight cluster-controller, manages the orchestration and life cycle of applications on the edge clusters, as well as the hierarchical structure of clusters. While ote-web and openapi provide access to AI Edge, the cluster-controller provides the core capabilities: network connection, metadata synchronization, and message transmission between cloud and edge. It also establishes the routing path for all edges. The edge, which can be a Kubernetes cluster, a k3s cluster, or another private cluster, is deployed with a cluster-controller and a cluster-shim to receive and process messages from the upper cluster. Because each edge cluster is autonomous, it manages its own network/workspace infrastructure and data volumes. Therefore, deployed AI applications can continue to run normally when the connection to the cloud is lost.
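To illustrate the hierarchical structure and routing path described above, here is a minimal sketch in Python. It is not OTE-Stack code: the `Cluster` class, the `find_route` function, and the cluster names are all hypothetical, and the real cluster-controller may build and store routes quite differently. The sketch only shows the idea that a message addressed to an edge cluster travels down a chain of parent clusters.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Cluster:
    """A node in the cluster hierarchy (hypothetical model, not an OTE-Stack type)."""
    name: str
    children: Dict[str, "Cluster"] = field(default_factory=dict)

    def add_child(self, child: "Cluster") -> "Cluster":
        """Register a sub-cluster under this cluster and return it."""
        self.children[child.name] = child
        return child


def find_route(root: Cluster, target: str) -> Optional[List[str]]:
    """Return the cluster names from root down to target, or None if not found."""
    if root.name == target:
        return [root.name]
    for child in root.children.values():
        sub_route = find_route(child, target)
        if sub_route is not None:
            return [root.name] + sub_route
    return None


# Assumed example hierarchy: cloud -> regional cluster -> two edge clusters.
cloud = Cluster("cloud")
region = cloud.add_child(Cluster("region-a"))
region.add_child(Cluster("edge-k8s"))
region.add_child(Cluster("edge-k3s"))

print(find_route(cloud, "edge-k3s"))  # ['cloud', 'region-a', 'edge-k3s']
```

In this model each intermediate cluster only needs to know its direct children, which fits the description of every layer forwarding messages to the clusters below it.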

Cloud-native monitoring applications, such as Prometheus and Elasticsearch, are used to collect container and node resource usage and runtime logs.

The image below shows the overall architecture when IEC is used as the edge infrastructure in AI Edge.

Platform Architecture

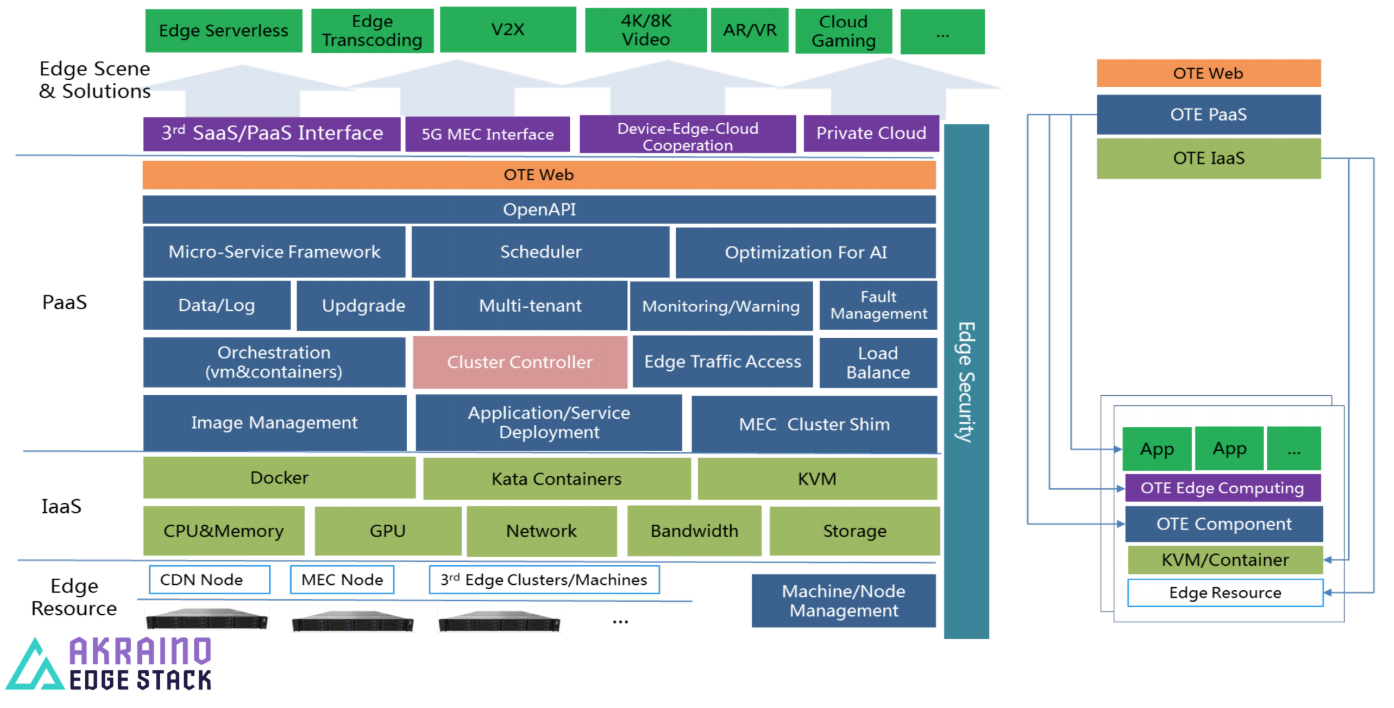

The detailed platform architecture of the AI Edge blueprint is shown in the diagram below.

The components included in the current release are:

- cluster-controller and controller-manager

- k8s-cluster-shim

Other components, such as openapi and ote-web, are currently released as Docker images and will be open-sourced in the future.

Software Platform Architecture

The below image shows the software architecture for this release.

OTE-Stack features a pluggable architecture, making it much easier to build on.

- The global scheduler is fully compatible with Kubernetes, so users can operate it directly with kubectl;

- Edge-cloud communication uses WebSocket;

- In addition to the cluster name, custom cluster tags can be added. An intelligent cluster selector matches on these tags to route messages accurately;

- The k8s-cluster-shim manages a Kubernetes cluster and shields the native implementation inside that cluster;

- A cluster shim for a third-party cluster can be implemented against the OTE-Stack interface, which makes it possible to access and schedule the third-party cluster while shielding its internal implementation;

- Each layer can serve as a control entry for all sub-clusters below it. Users can also use kubectl or the API to implement custom cluster management and scheduling.
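The tag-based routing mentioned in the list above can be sketched as follows. This is an illustration under stated assumptions, not the OTE-Stack selector: the source does not describe the matching rules, so the sketch assumes tags are key/value pairs and that a selector matches a cluster when every pair in the selector is present in the cluster's tags. The function names and the cluster names are hypothetical.

```python
from typing import Dict, List


def matches(tags: Dict[str, str], selector: Dict[str, str]) -> bool:
    """True when every key/value pair in the selector appears in the tags.

    Assumed semantics (exact equality on all selector keys); the real
    selector may support richer expressions.
    """
    return all(tags.get(key) == value for key, value in selector.items())


def select_clusters(clusters: Dict[str, Dict[str, str]],
                    selector: Dict[str, str]) -> List[str]:
    """Return the sorted names of clusters whose tags satisfy the selector."""
    return sorted(name for name, tags in clusters.items()
                  if matches(tags, selector))


# Hypothetical edge clusters with custom tags.
clusters = {
    "edge-bj-01": {"region": "beijing", "gpu": "true"},
    "edge-bj-02": {"region": "beijing", "gpu": "false"},
    "edge-sh-01": {"region": "shanghai", "gpu": "true"},
}

print(select_clusters(clusters, {"region": "beijing", "gpu": "true"}))
# ['edge-bj-01']
```

Under these assumptions an empty selector matches every cluster, which gives a simple way to broadcast a message to all sub-clusters of a layer.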

APIs

Federated ML application at edge R4 API Document

Hardware and Software Management

Software Management: Gerrit Repo

Licensing

Apache 2.0 GNU/common license