
Introduction

The R4 release will evaluate the conntrack performance of Open vSwitch (OVS).

A DPDK-based Open vSwitch (OVS-DPDK) is used as the virtual switch, and network traffic is encapsulated with VXLAN.

We will compare the performance of an optimized software-based OVS with that of OVS running on a Mellanox (MLNX) SmartNIC with hardware offload enabled.
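In OVS terms, one knob that separates the two setups is the `other_config:hw-offload` setting. The sketch below is a minimal, hypothetical illustration of an OVS-DPDK device-under-test configuration with a VXLAN port and a simple conntrack pipeline. The bridge name `br0`, the port name `vxlan0`, the flow-table layout and the remote VTEP address are placeholders, not the testbed's actual configuration. The function only prints the `ovs-vsctl`/`ovs-ofctl` commands so they can be reviewed before being piped to `sh`.

```shell
#!/usr/bin/env bash
# Hypothetical sketch, not the testbed's actual configuration.
# Prints OVS-DPDK commands for a VXLAN + conntrack setup; nothing is executed.

# ovs_dut_config OFFLOAD REMOTE_IP
#   OFFLOAD:   "true" for the SmartNIC (hardware offload) run,
#              "false" for the software-only run
#   REMOTE_IP: VXLAN tunnel endpoint of the peer host (placeholder)
ovs_dut_config() {
  local offload="$1" remote_ip="$2"
  cat <<EOF
ovs-vsctl --no-wait set Open_vSwitch . other_config:dpdk-init=true
ovs-vsctl --no-wait set Open_vSwitch . other_config:hw-offload=${offload}
ovs-vsctl add-br br0 -- set bridge br0 datapath_type=netdev
ovs-vsctl add-port br0 vxlan0 -- set interface vxlan0 type=vxlan options:remote_ip=${remote_ip}
ovs-ofctl add-flow br0 "table=0,priority=10,ip,ct_state=-trk,actions=ct(table=1)"
ovs-ofctl add-flow br0 "table=0,priority=0,actions=NORMAL"
ovs-ofctl add-flow br0 "table=1,priority=10,ip,ct_state=+trk+new,actions=ct(commit),NORMAL"
ovs-ofctl add-flow br0 "table=1,priority=10,ip,ct_state=+trk+est,actions=NORMAL"
EOF
}
```

For example, `ovs_dut_config true 192.0.2.2 | sh` for the offload run and `ovs_dut_config false 192.0.2.2 | sh` for the software run. Changing `hw-offload` only takes effect after `ovs-vswitchd` is restarted.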

Akraino Test Group Information

SmartNIC deployed Architecture

We reuse the SmartNIC test architecture from the R5 release. The description below is the same as in the R5 release test documents.

To deploy the SmartNIC test architecture, we use a private Jenkins instance and a server equipped with a BlueField v2 SmartNIC.

We use Ansible to automatically set up the filesystem (FS) image and install OVS-DPDK on the SmartNICs.

The File Server is a simple Nginx-based web server that stores the BlueField (BF) drivers and the FS image.

The Git repo is our own repository that hosts the OVS-DPDK and DPDK code.

Jenkins uses the Ansible plugin to download the BF drivers and FS image to the test server and set up the environment according to the Ansible playbook.

...

http://www.mellanox.com/downloads/BlueField/BlueField-3.5.0.11563/BlueField-3.5.0.11563.tar.xz
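The download step that Jenkins drives through the Ansible playbook can be approximated in plain shell. This is a minimal sketch, assuming `curl` and a `tar` with xz support are installed on the test server; the function names and the destination directory are hypothetical, and the actual playbook tasks are not reproduced here. The same function should work against the internal File Server if it serves the bundle under a similar URL.

```shell
#!/usr/bin/env bash
# Hypothetical sketch of the "download BF drivers" step; not the actual playbook.

# bundle_name URL -> archive name without the .tar.xz suffix
bundle_name() {
  basename "$1" .tar.xz
}

# fetch_bf_bundle URL DEST_DIR
#   Downloads the .tar.xz bundle into DEST_DIR and unpacks it there.
fetch_bf_bundle() {
  local url="$1" dest="$2" name
  name="$(bundle_name "$url")"
  mkdir -p "$dest" || return 1
  # -f: fail on HTTP errors, -sS: hide progress but show errors, -L: follow redirects
  curl -fsSL -o "$dest/$name.tar.xz" "$url" || return 1
  # -J selects xz decompression
  tar -xJf "$dest/$name.tar.xz" -C "$dest"
}
```

Usage, with a hypothetical destination: `fetch_bf_bundle http://www.mellanox.com/downloads/BlueField/BlueField-3.5.0.11563/BlueField-3.5.0.11563.tar.xz /opt/bf`.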

...

...

Since a DPU is a PCIe-compatible device, we can further combine the DPU with PCIe networking. In R6, we introduce a hardware layer (a physical link/fabric layer) between the DPU and the CPUs, as shown below. This layer lets us extend the DPU cluster size and also use the DPU management features.

OVS-DPDK Test Architecture

OVS-DPDK on BlueField Test Architecture

The testbed setup is shown in the diagram above. DUT stands for Device Under Test.

Networking Topology

The CoB design has multiple networks, including at least one PCIe network connecting multiple CPUs. We can also add further connections, such as traditional RJ45 ports for management. Hence all CoB hardware is cloud-native compatible from the start.

...

Networking in CoB system for Cloud Native Applications

Cloud Native Server Reference Design

Finally, we pack all components together into the reference design shown below.

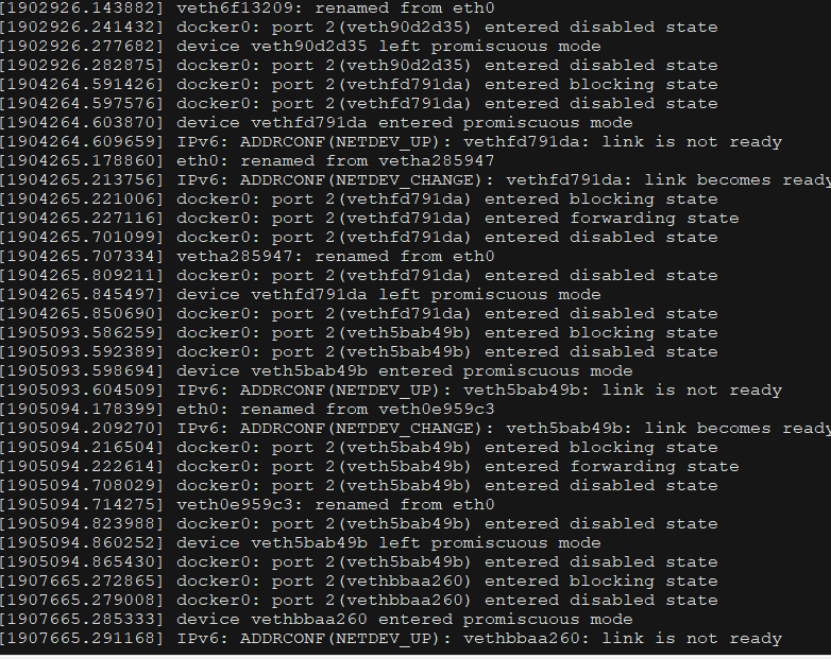

Docker installation log


Docker config

ExecStart=/usr/bin/dockerd -H unix:///var/run/docker.sock -H tcp://0.0.0.0:2375
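The `ExecStart` line above makes `dockerd` listen on both the local Unix socket and TCP port 2375. One common way to apply it is a systemd drop-in for `docker.service`; the sketch below assumes that approach (how the line was installed is not stated here), and the function name is hypothetical. In a drop-in, an empty `ExecStart=` must come first to clear the command inherited from the packaged unit. Because the listen addresses are passed with `-H`, `daemon.json` should not also set `hosts`, since dockerd refuses to start when the same option is given both ways. Port 2375 is Docker's unencrypted, unauthenticated API port, so binding it to `0.0.0.0` is best kept to an isolated test network.

```shell
#!/usr/bin/env bash
# Hypothetical sketch: install the ExecStart override as a systemd drop-in.

# write_docker_dropin [DIR]
#   DIR defaults to the standard drop-in directory for docker.service.
write_docker_dropin() {
  local dir="${1:-/etc/systemd/system/docker.service.d}"
  mkdir -p "$dir" || return 1
  cat > "$dir/override.conf" <<'EOF'
[Service]
# An empty ExecStart= clears the command inherited from docker.service
ExecStart=
ExecStart=/usr/bin/dockerd -H unix:///var/run/docker.sock -H tcp://0.0.0.0:2375
EOF
}
```

After `write_docker_dropin`, run `systemctl daemon-reload && systemctl restart docker` so the new command line is used.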

docker-compose

cat >/etc/docker/daemon.json << EOF
{
  "data-root": "/home/docker",
  "debug": true,
  "default-address-pools": [
    {
      "base": "192.1.0.0/16",
      "size": 24
    }
  ]
}
EOF
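With `"base":"192.1.0.0/16"` and `"size":24`, Docker allocates each new local network as a /24 taken from the /16, which gives 2^(24-16) = 256 networks. The helpers below only spell out that arithmetic and the resulting subnets; their names are hypothetical and the listing assumes a /16 base with /24 pools. Note that 192.1.0.0/16 is not one of the RFC 1918 private ranges (10.0.0.0/8, 172.16.0.0/12, 192.168.0.0/16), so it can shadow real public addresses. As far as we know, this setting governs local (bridge) networks; swarm overlay networks take their pool from `docker swarm init --default-addr-pool` instead.

```shell
#!/usr/bin/env bash
# Hypothetical helpers illustrating "default-address-pools" arithmetic.

# pool_count BASE_LEN SIZE -> number of /SIZE subnets inside a /BASE_LEN block
pool_count() {
  echo $(( 1 << ($2 - $1) ))
}

# list_pools_16_24 FIRST_TWO_OCTETS
#   Lists every /24 inside a /16, e.g. "192.1" -> 192.1.0.0/24 ... 192.1.255.0/24
list_pools_16_24() {
  local prefix="$1" i
  for (( i = 0; i < 256; i++ )); do
    printf '%s.%d.0/24\n' "$prefix" "$i"
  done
}
```

For the values in this `daemon.json`, `pool_count 16 24` prints 256.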

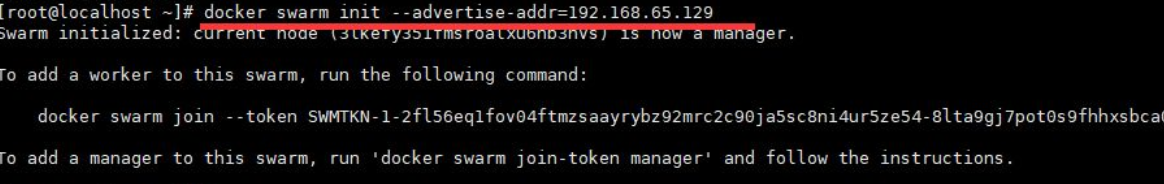

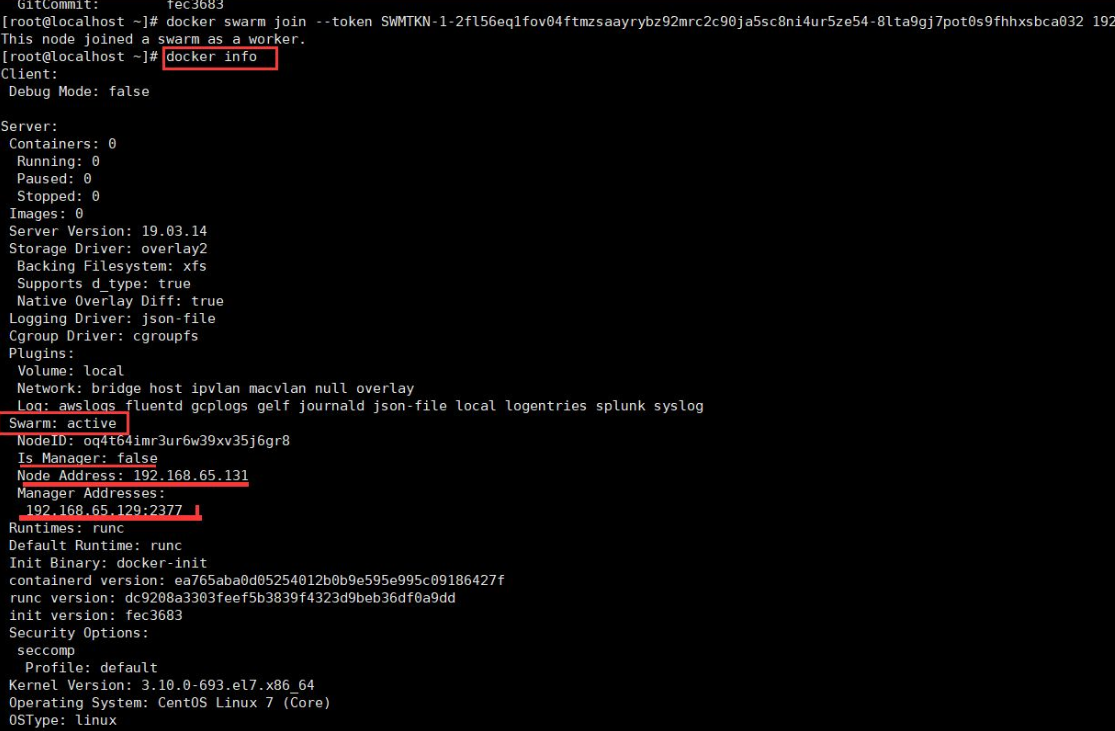

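docker swarm init

docker swarm init --advertise-addr 10.3.1.16 # initialize the swarm; this node (10.3.1.16) becomes the manager

docker swarm join-token manager # print the command other nodes run to join 10.3.1.16 as managers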
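`docker swarm join-token manager` prints the command that lets another node join as a manager. In the `docker node ls` output of this section, the second node (`slave`) has no manager status, which suggests it joined with the worker token instead. A minimal sketch for generating the worker join command on the manager follows; it assumes the default swarm management port 2377, and the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for adding a worker to the swarm managed at 10.3.1.16.

# swarm_join_cmd TOKEN MANAGER_ADDR -> join command to run on the new node
swarm_join_cmd() {
  # 2377 is the default swarm cluster-management port
  printf 'docker swarm join --token %s %s:2377\n' "$1" "$2"
}

# print_worker_join MANAGER_ADDR (run on the manager)
print_worker_join() {
  local token
  # -q prints only the token instead of the full instructions
  token="$(docker swarm join-token -q worker)" || return 1
  swarm_join_cmd "$token" "$1"
}
```

On the manager, `print_worker_join 10.3.1.16` prints a single line to paste on the worker node.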

Show the cluster nodes

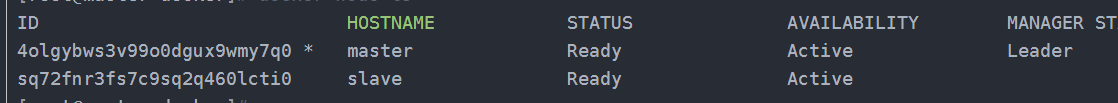

[root@master docker]# docker node ls
ID                            HOSTNAME   STATUS   AVAILABILITY   MANAGER STATUS   ENGINE VERSION
4olgybws3v99o0dgux9wmy7q0 *   master     Ready    Active         Leader           19.03.5
sq72fnr3fs7c9sq2q460lcti0     slave      Ready    Active                          19.03.5

Docker cluster status log
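The cluster status can also be checked from a script, for example before starting a test run. This is a minimal sketch using `docker node ls --format` with the `.Hostname` and `.Status` placeholders; the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical readiness check for the swarm nodes listed by "docker node ls".

# not_ready_nodes: reads "HOSTNAME STATUS" lines on stdin and
# prints the hostname of every node whose status is not "Ready".
not_ready_nodes() {
  awk '$2 != "Ready" { print $1 }'
}

# cluster_ready: succeeds only if every swarm node reports Ready
# (must run on a manager node).
cluster_ready() {
  local out bad
  out="$(docker node ls --format '{{.Hostname}} {{.Status}}')" || return 1
  bad="$(printf '%s\n' "$out" | not_ready_nodes)"
  if [ -n "$bad" ]; then
    echo "not ready: $bad" >&2
    return 1
  fi
}
```

Checking `docker node ls` separately from the parsing keeps a failed Docker call from being mistaken for an all-Ready cluster.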


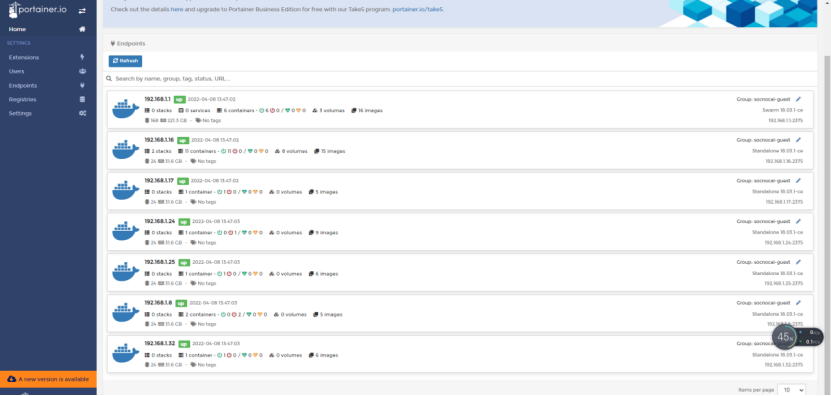

Web portal screenshot

The test evaluates the performance of SmartNIC offloading.

...