
Introduction


Unicycle pods are deployed from an existing Regional Controller and consist of 3 master nodes and 0 to 4 worker nodes. The number of nodes may be increased after validation in subsequent releases.

Several options exist, including the choice of Dell or HP servers and whether tenant VM networking uses an OVS-DPDK or SR-IOV data plane. The option that is deployed is selected by creating a different pod specific YAML input file.

In R1 the options include:

| Blueprint | Servers | Dataplane | Validated HW details | Validated by |

|---|---|---|---|---|

| Unicycle | Dell 740XD | OVS-DPDK | Ericsson Unicycle OVS-DPDK Validation HW, Networking and IP plan | Ericsson |

| Unicycle | Dell 740XD | SR-IOV | ATT Unicycle SR-IOV Validation HW, Networking and IP plan | AT&T |

| Unicycle | Dell 740XD | SR-IOV | Ericsson Unicycle SR-IOV Validation HW, Networking and IP plan | Ericsson |

| Unicycle | HP 380 Gen10 | SR-IOV | ATT Unicycle SR-IOV Validation HW, Networking and IP plan | AT&T |

Preflight Requirements

Servers

Deployment has been validated on two types of servers, Dell and HP.

The exact server specifications used during validation are defined in the links in the table above. Although the blueprint allows for variation in the servers, the installation provisions the BIOS and HW specific components such as NICs, SSDs, HDDs and CPUs, so only these exact server types are supported and validated in the R1 release.

A Unicycle pod deployment consists of at least 3 servers for the master nodes (which can also run tenant VM workloads) and 0 to 4 dedicated worker nodes.

The iDRAC/iLO IP address and subnet of every Unicycle pod server must be manually provisioned on the server before installation begins. This includes the genesis, master and any worker nodes to be deployed in the pod.

Networking

The automated deployment process configures all aspects of the servers, including the BIOS, the Linux operating system and the Network Cloud specific software. The deployment and configuration of all external switching components is outside the scope of the R1 release and must be completed as a prerequisite before attempting a Unicycle pod deployment.

Details of the networking are given in the Network Architecture section. The networking used during validation is described in the ATT Validation Labs and Ericsson Validation Labs sections of the release documentation.

Software

When a Unicycle pod's nodes are installed on new bare metal servers, no software is required on the Target Servers beforehand. All software is installed from the Regional Controller and/or external repos via the internet.

Generate RC ssh Key Pair

To ssh into the Unicycle nodes after the deployment process, an ssh key pair must be generated on the RC, and the public key (located in /root/.ssh/id_rsa.pub) must then be inserted into the Unicycle pod's site specific input file.

| Code Block | ||

|---|---|---|

| ||

root@regional_server# ssh-keygen -t rsa

.....

root@regional_server# cd /root/.ssh

root@regional_server# ls -lrt

total 12

-rw------- 1 root root 399 May 26 00:34 authorized_keys

-rw-r--r-- 1 root root 395 May 26 00:55 id_rsa.pub

-rw------- 1 root root 1679 May 26 00:55 id_rsa

|

Alternatively, any pre-existing ssh key pair can be used. In that case, the key pair must be manually added to the RC and the corresponding public key added to the input file. Instructions for these tasks are beyond the scope of this install guide.
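In the sample input files later in this guide the public key is stored as the value of the `genesis_ssh_public_key` field. The following is a minimal sketch, assuming Python 3 is available on the RC, of how that line could be generated from the key file. The helper name `render_public_key_line` is hypothetical and is not part of the Akraino tooling.

```python
from pathlib import Path


def render_public_key_line(pub_key_path="/root/.ssh/id_rsa.pub"):
    """Return a YAML line holding the RC public key for the pod input file.

    Hypothetical helper for illustration; the field name is taken from the
    sample input files in this guide.
    """
    # An OpenSSH public key file holds one line: "<type> <base64> <comment>".
    key = Path(pub_key_path).read_text().strip()
    if not key or "\n" in key:
        raise ValueError(f"{pub_key_path} does not contain a single public key")
    # The sample input files wrap the key in double quotes, so do the same.
    return f'genesis_ssh_public_key: "{key}"'
```

Calling `print(render_public_key_line())` on the RC prints a line that can be pasted into the input file in place of the existing `genesis_ssh_public_key` entry.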

Preflight Checks

To verify the necessary IP connectivity from the RC to each Target Unicycle Server's BMC, confirm from the RC that at least port 443 is open on the server's iDRAC/iLO BMC IP address. Check the OOB IP addresses of the genesis, master and worker nodes:

| Code Block | ||

|---|---|---|

| ||

root@regional_controller# #nmap -sS <SRV_OOB_IP>

root@regional_controller# nmap -sS 10.51.35.145

Starting Nmap 7.01 ( https://nmap.org ) at 2018-07-10 13:55 UTC

Nmap scan report for 10.51.35.145

Host is up (0.00085s latency).

Not shown: 996 closed ports

PORT STATE SERVICE

22/tcp open ssh

80/tcp open http

443/tcp open https

5900/tcp open vnc

Nmap done: 1 IP address (1 host up) scanned in 1.77 seconds

|

Note: The IP address scanned above (10.51.35.145) is an example iDRAC address of a server deployed in a validation lab.
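Where nmap is not installed, the same reachability check can be approximated with a plain TCP connection attempt. The following is a minimal sketch using only the Python standard library. The function name `port_is_open` is hypothetical, and unlike `nmap -sS` it completes a full TCP handshake rather than a SYN scan.

```python
import socket


def port_is_open(host, port=443, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection completes the TCP handshake; the "with" block
        # closes the socket again straight away.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, unreachable hosts and timeouts.
        return False
```

Calling `port_is_open("<SRV_OOB_IP>")` for the genesis, master and worker OOB addresses should return `True` for each before the deployment is started.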

Preflight Unicycle Pod Specific Input Data

The automated deployment process configures the new Unicycle nodes based on a set of user defined values specific to each Unicycle pod. These values must be defined and stored in a site and pod specific input configuration file before the Unicycle pod deployment process can be started. The input file defines general information such as server names, network, and storage configuration as well as the more complex configuration details like SR-IOV or OVS-DPDK settings.
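Because every value in the input file is entered by hand, a quick consistency check before uploading can catch mistyped addresses. The following is a minimal sketch, assuming the values have already been read from the input file into plain Python strings. The function `check_network` and its argument layout are hypothetical and not part of the deployment tooling.

```python
import ipaddress


def check_network(cidr, netmask, addresses):
    """Return a list of problems found for one network entry of an input file.

    cidr      -- e.g. "10.51.35.128/27"
    netmask   -- dotted netmask from the file, or None if the entry has none
    addresses -- mapping of a label to an address expected inside the cidr
    """
    problems = []
    network = ipaddress.ip_network(cidr)
    # The cidr prefix length and the separate netmask field must agree.
    if netmask is not None and str(network.netmask) != netmask:
        problems.append(f"netmask {netmask} does not match {cidr}")
    for label, value in addresses.items():
        try:
            address = ipaddress.ip_address(value)
        except ValueError:
            problems.append(f"{label}: {value} is not a valid IP address")
            continue
        if address not in network:
            problems.append(f"{label}: {value} is outside {cidr}")
    return problems
```

For example, `check_network("10.51.35.128/27", "255.255.255.224", {"genesis oob": "10.51.35.143"})` uses values from the sample OOB network in this guide, and an empty list means no problems were found.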

Unicycle Pods with OVS-DPDK Dataplane

No additional specific preflight checks are required.

Unicycle Pods with SR-IOV Dataplane

No additional specific preflight checks are required.

Deploying a Unicycle Pod

Deployment of each new Unicycle pod at a given site is performed from the RC's UI.

Warning: Internet Explorer and Edge may not work, so using Chrome is strongly recommended.

If an action appears to fail, click 'Refresh'.

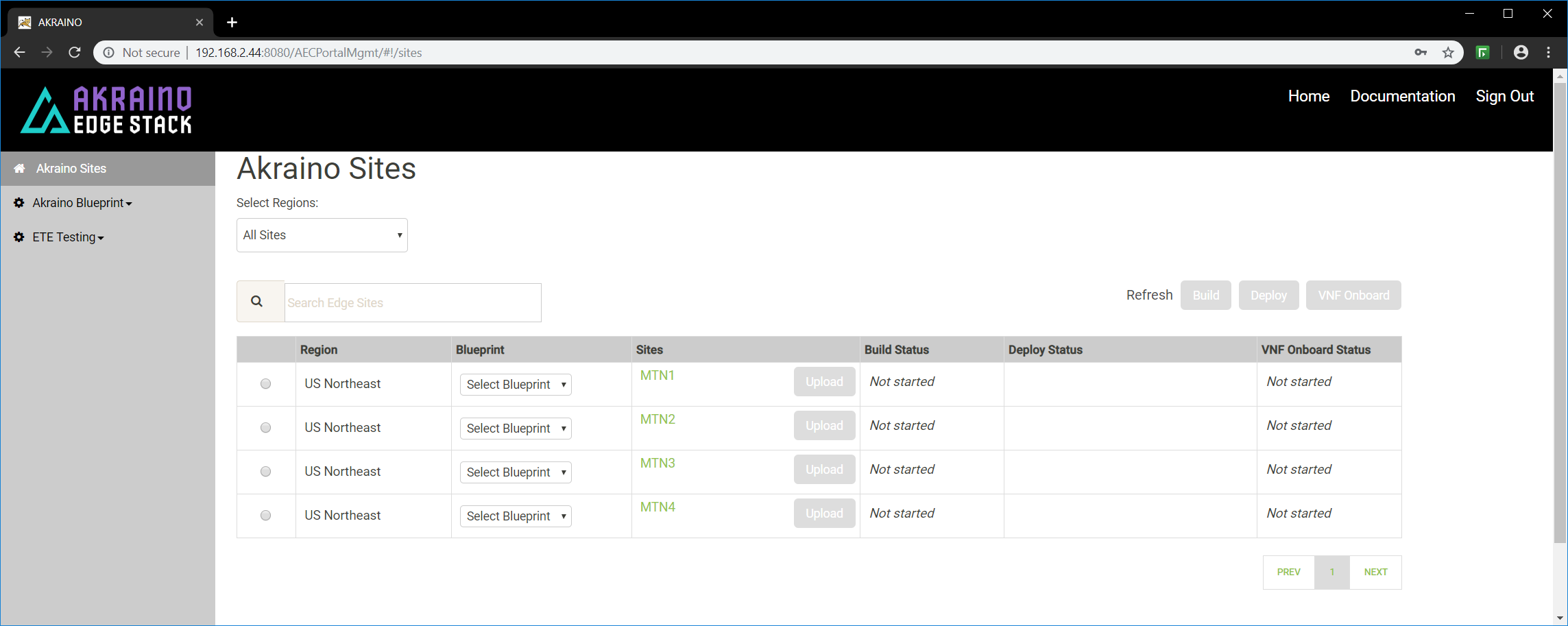

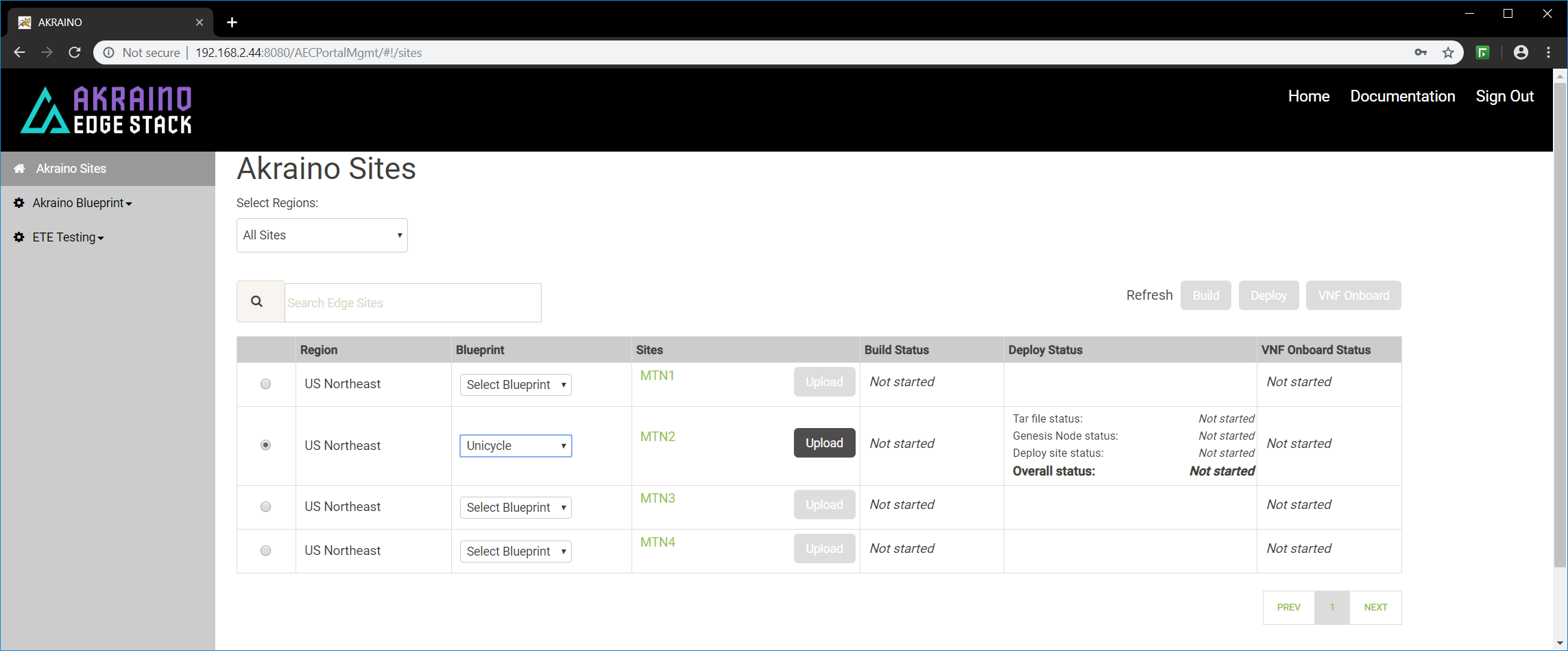

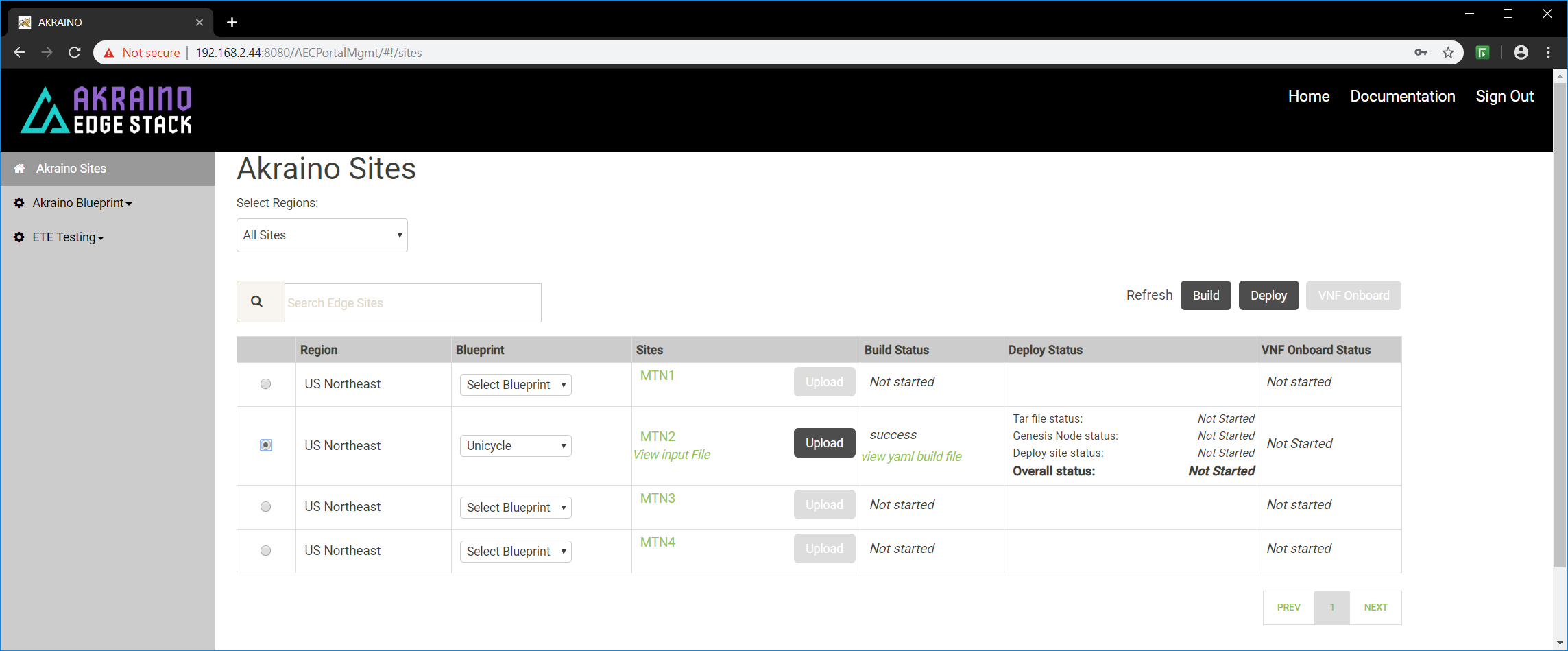

Select a Region and select 'Unicycle' for the blueprint:

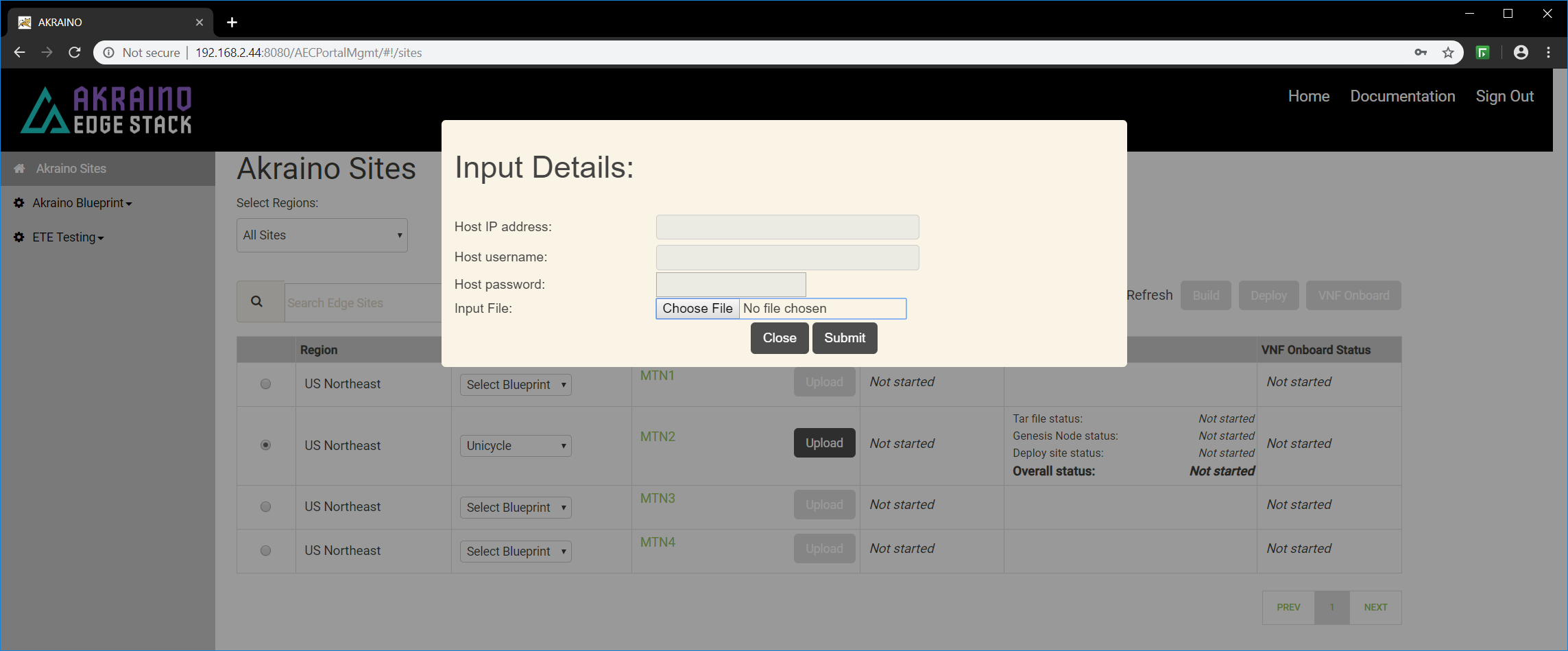

Click 'Upload' to select the Unicycle site and pod specific input file:

Site Specific Input File Selection

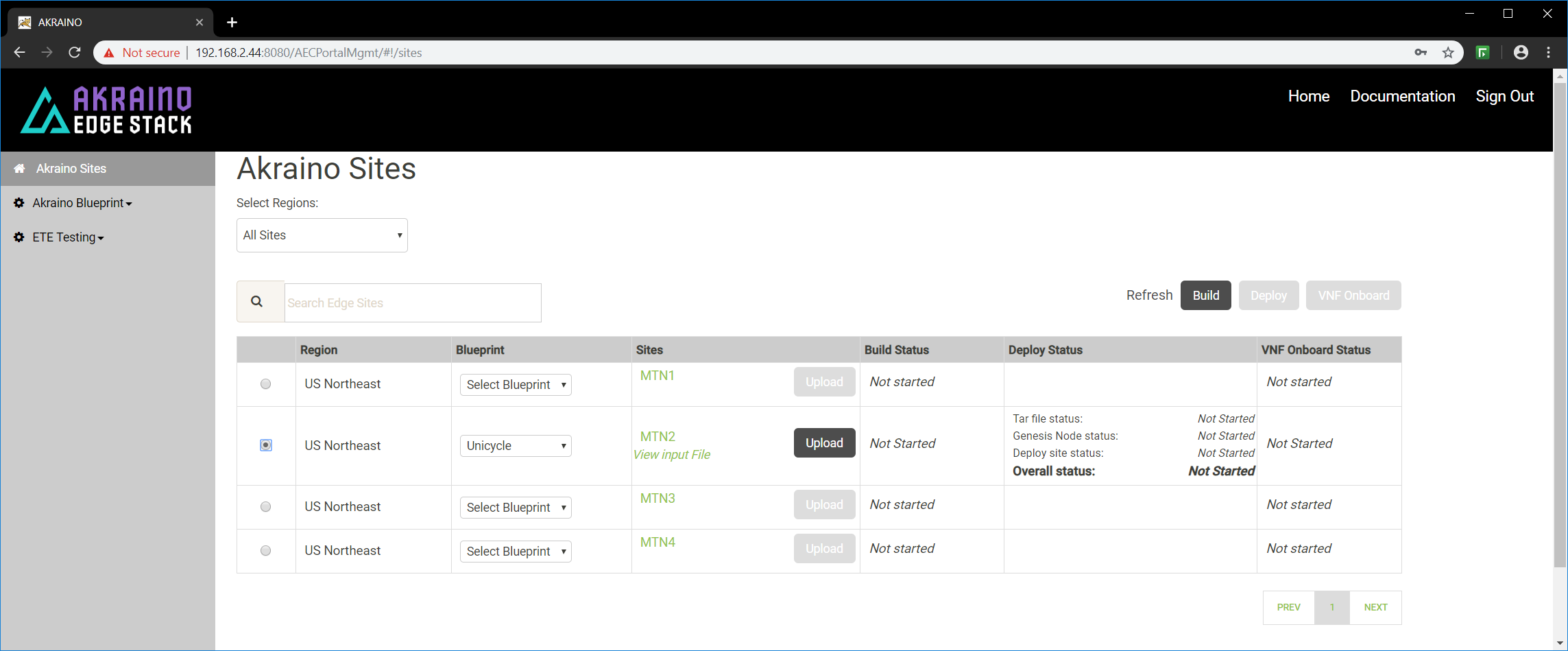

Choose the input file that you have created for the new Unicycle pod you want to deploy then click 'Submit'.

Click 'BUILD' to create the Airship files necessary to deploy the Unicycle site. Then click 'Refresh' and select the site again:

Make sure the pod you intend to deploy is still selected.

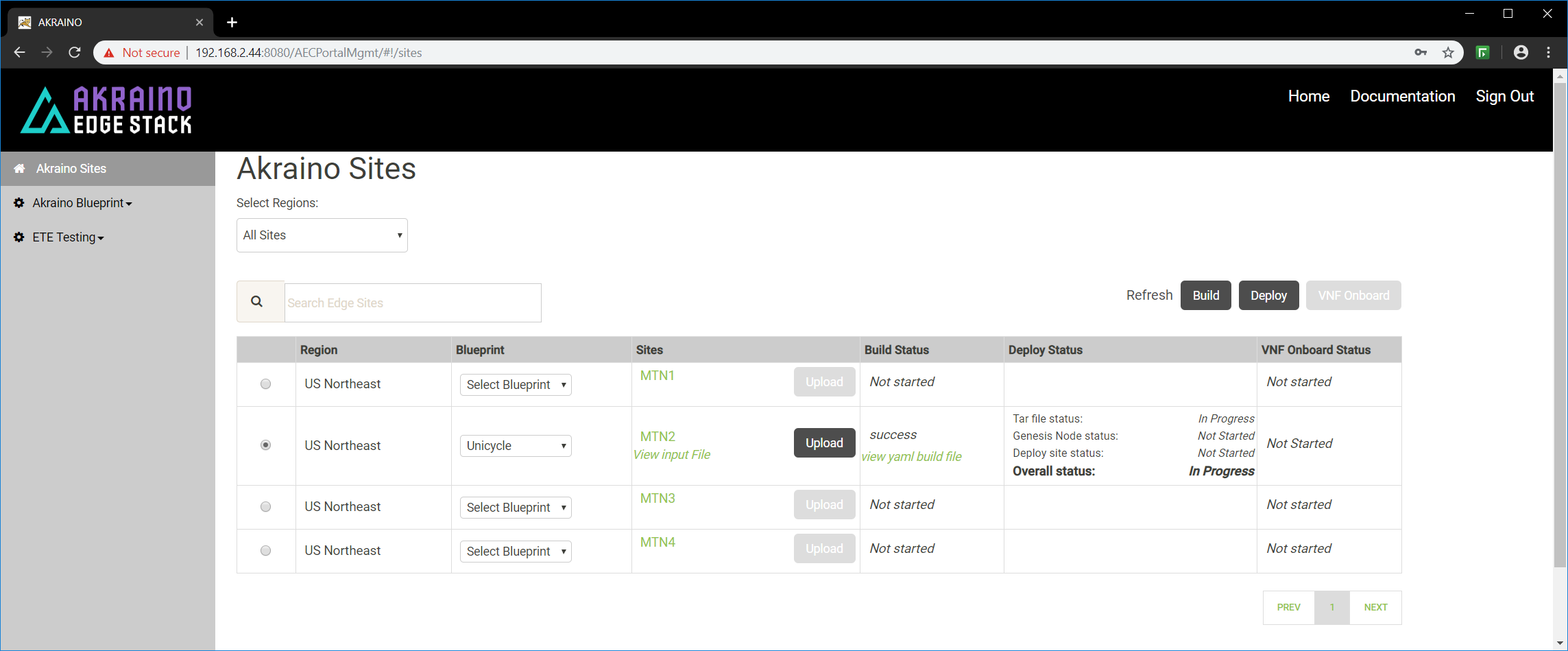

To initiate the automated deployment click 'Deploy'. You should see the status change to 'In Progress'.

The deployment will take a couple of hours to complete. Click 'Refresh' periodically to display the current status of each major step in the deployment.

It is also possible to follow the progress of the deployment in a number of ways, including the logs on the RC, the DHCP server on the RC, and the virtual console of the iDRAC/iLO of the Genesis server being deployed. Details are shown in the Deployment Walk-Throughs section of the release documentation.

One of the simplest approaches is to ssh into the RC's 'host' address and issue the following command:

| Code Block | ||

|---|---|---|

| ||

root@regional_controller# tail -f /var/log/akraino/scriptexecutor.log

2019-05-29 18:35:50.902 DEBUG 18222 --- [SimpleAsyncTaskExecutor-34] .a.b.s.i.DeployResponseSenderServiceImpl : BuildResponse [siteName=MTN2, buildStatus=null, createTarStatus=null, genesisNodeStatus=null, deployToolsStatus=null, deployStatus=exception: problem while executing the script. exit code :1, onapStatus=null, vCDNStatus=null, tempestStatus=null]

2019-05-29 18:35:50.910 DEBUG 18222 --- [SimpleAsyncTaskExecutor-34] .a.b.s.i.DeployResponseSenderServiceImpl : Build response HttpResponseStatus :200

2019-05-29 18:38:21.965 DEBUG 18222 --- [http-nio-8073-exec-9] c.a.b.controller.CamundaRestController : Request received for deploy Deploy [sitename=MTN2, blueprint=Rover, filepath=/opt/akraino/redfish/install_server_os.sh , fileparams=--rc /opt/akraino/server-build/MTN2 --skip-confirm, winscpfilepa, port=22, username=root, password=akraino,d, destdir=/opt, remotefilename=akraino_airship_deploy.sh, remotefileparams=null, deploymentverifier=null, deploymentverifierfileparams=null, noofiterations=0, waittime=0, postverificationscript=null, postverificationScriptparams=null]

2019-05-29 18:38:21.968 DEBUG 18222 --- [SimpleAsyncTaskExecutor-35] c.a.b.d.DeployScriptExecutorTaskDelegate : task execution started :/opt/akraino/redfish/install_server_os.sh --rc /opt/akraino/server-build/MTN2 --skip-confirm

2019-05-29 18:38:21.968 DEBUG 18222 --- [SimpleAsyncTaskExecutor-35] c.a.b.s.impl.ScriptExecutionServiceImpl : Executing the script.............

2019-05-29 18:38:21.972 DEBUG 18222 --- [SimpleAsyncTaskExecutor-35] c.a.b.s.impl.ScriptExecutionServiceImpl : logging to /var/log/akraino/install_server_os_2019-05-29T18-38-21+0000

2019-05-29 18:38:21.973 DEBUG 18222 --- [SimpleAsyncTaskExecutor-35] c.a.b.s.impl.ScriptExecutionServiceImpl : Beginning /opt/akraino/redfish/install_server_os.sh as user [root] in pwd [/opt/akraino/workflow] with home [/root]

2019-05-29 18:38:21.978 DEBUG 18222 --- [SimpleAsyncTaskExecutor-35] c.a.b.s.impl.ScriptExecutionServiceImpl : Checking for known required packages

2019-05-29 18:38:22.266 DEBUG 18222 --- [SimpleAsyncTaskExecutor-35] c.a.b.s.impl.ScriptExecutionServiceImpl : python/xenial-updates,now 2.7.12-1~16.04 amd64 [installed]

2019-05-29 18:38:22.540 DEBUG 18222 --- [SimpleAsyncTaskExecutor-35] c.a.b.s.impl.ScriptExecutionServiceImpl : xorriso/xenial,now 1.4.2-4ubuntu1 amd64 [installed]

2019-05-29 18:38:22.816 DEBUG 18222 --- [SimpleAsyncTaskExecutor-35] c.a.b.s.impl.ScriptExecutionServiceImpl : sshpass/xenial,now 1.05-1 amd64 [installed]

2019-05-29 18:38:23.094 DEBUG 18222 --- [SimpleAsyncTaskExecutor-35] c.a.b.s.impl.ScriptExecutionServiceImpl : python-requests/xenial-updates,xenial-updates,now 2.9.1-3ubuntu0.1 all [installed]

2019-05-29 18:38:23.332 DEBUG 18222 --- [SimpleAsyncTaskExecutor-35] c.a.b.s.impl.ScriptExecutionServiceImpl : python-pip/xenial-updates,xenial-updates,now 8.1.1-2ubuntu0.4 all [installed]

2019-05-29 18:38:23.579 DEBUG 18222 --- [SimpleAsyncTaskExecutor-35] c.a.b.s.impl.ScriptExecutionServiceImpl : python-yaml/xenial,now 3.11-3build1 amd64 [installed]

2019-05-29 18:38:23.817 DEBUG 18222 --- [SimpleAsyncTaskExecutor-35] c.a.b.s.impl.ScriptExecutionServiceImpl : python-jinja2/xenial,xenial,now 2.8-1 all [installed]

2019-05-29 18:38:24.080 DEBUG 18222 --- [SimpleAsyncTaskExecutor-35] c.a.b.s.impl.ScriptExecutionServiceImpl : make/xenial,now 4.1-6 amd64 [installed,automatic]

2019-05-29 18:38:24.318 DEBUG 18222 --- [SimpleAsyncTaskExecutor-35] c.a.b.s.impl.ScriptExecutionServiceImpl : gcc/xenial,now 4:5.3.1-1ubuntu1 amd64 [installed]

2019-05-29 18:38:24.556 DEBUG 18222 --- [SimpleAsyncTaskExecutor-35] c.a.b.s.impl.ScriptExecutionServiceImpl : coreutils/xenial-updates,now 8.25-2ubuntu3~16.04 amd64 [installed]

2019-05-29 18:38:24.556 DEBUG 18222 --- [SimpleAsyncTaskExecutor-35] c.a.b.s.impl.ScriptExecutionServiceImpl : Tools are ready in [/opt/akraino]

2019-05-29 18:38:24.560 DEBUG 18222 --- [SimpleAsyncTaskExecutor-35] c.a.b.s.impl.ScriptExecutionServiceImpl : Using Build Web ip address [10.51.34.231]

2019-05-29 18:38:34.570 DEBUG 18222 --- [SimpleAsyncTaskExecutor-35] c.a.b.s.impl.ScriptExecutionServiceImpl : WARNING: Preparing to build server [aknode29] using oob ip [10.51.35.146]. Beginning in 10 seconds ..........

2019-05-29 18:38:34.572 DEBUG 18222 --- [SimpleAsyncTaskExecutor-35] c.a.b.s.impl.ScriptExecutionServiceImpl : Beginning bare metal install of os at Wed May 29 18:38:34 UTC 2019

2019-05-29 18:38:34.606 DEBUG 18222 --- [SimpleAsyncTaskExecutor-35] c.a.b.s.impl.ScriptExecutionServiceImpl : Found build web ip address [10.51.34.231] on this server!

2019-05-29 18:38:34.608 DEBUG 18222 --- [SimpleAsyncTaskExecutor-35] c.a.b.s.impl.ScriptExecutionServiceImpl : bond0.408 Link encap:Ethernet HWaddr 3c:fd:fe:d2:5b:21

2019-05-29 18:38:34.608 DEBUG 18222 --- [SimpleAsyncTaskExecutor-35] c.a.b.s.impl.ScriptExecutionServiceImpl : inet addr:10.51.34.231 Bcast:10.51.34.255 Mask:255.255.255.224

2019-05-29 18:38:34.608 DEBUG 18222 --- [SimpleAsyncTaskExecutor-35] c.a.b.s.impl.ScriptExecutionServiceImpl : Checking access to server oob ip [10.51.35.146]

2019-05-29 18:38:36.647 DEBUG 18222 --- [SimpleAsyncTaskExecutor-35] c.a.b.s.impl.ScriptExecutionServiceImpl : 64 bytes from 10.51.35.146: icmp_seq=1 ttl=63 time=0.539 ms

2019-05-29 18:38:36.647 DEBUG 18222 --- [SimpleAsyncTaskExecutor-35] c.a.b.s.impl.ScriptExecutionServiceImpl : 64 bytes from 10.51.35.146: icmp_seq=2 ttl=63 time=0.504 ms

|
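The log is verbose, so it can help to pull out only the lines that report a deployment status. The following is a minimal sketch that assumes the log lines keep the layout shown in the excerpt above (a 23 character timestamp, then a `BuildResponse [...]` entry containing `deployStatus=`). The function names are hypothetical, and the set of possible status values is not documented here.

```python
import re

# Captures the deployStatus value up to the next "<name>Status=" field or "]".
DEPLOY_STATUS = re.compile(r"deployStatus=(.*?)(?:, \w+Status=|\])")


def deploy_statuses(lines):
    """Yield (timestamp, status) for each log line reporting a deployStatus."""
    for line in lines:
        match = DEPLOY_STATUS.search(line)
        if match:
            # The first 23 characters hold the "YYYY-MM-DD HH:MM:SS.mmm" stamp.
            yield line[:23], match.group(1)


def failed_deploys(lines):
    """Return the entries whose deployStatus starts with "exception"."""
    return [(stamp, status) for stamp, status in deploy_statuses(lines)
            if status.startswith("exception")]
```

For example, `failed_deploys(open("/var/log/akraino/scriptexecutor.log"))` returns one entry for the `exit code :1` line in the excerpt above, which indicates that an earlier deploy script run failed.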

Unicycle Pod Site Specific Configuration Input Files

This section contains links to the input files used to build the Unicycle pods in ATT's and Ericsson's validation labs for the R1 release. Because the files are pod and site specific, the enumerated values differ between them. Full details of the relevant validation lab setup, which should be referenced when reading these files, are contained in the Validation Labs section of this documentation.

Please note that these files may superficially appear very similar. They are all included because the details differ owing to HW differences such as vendor and NIC slot location, the method of defining whether the pod implements an OVS-DPDK or SR-IOV dataplane, and site specific differences such as VIDs and subnets.


Example Unicycle Pod Configuration Input Files

This section contains the input files used to build the Unicycle pods in ATT's and Ericsson's validation labs for the R1 release. Because the files are pod and site specific, the enumerated values differ between them. Full details of the relevant validation lab setup, which should be referenced when reading these files, are contained in the Validation Labs section of this documentation.

Unicycle Pods with OVS-DPDK Dataplane on Dell 740XD servers

This section includes a sample input file that was used in the Ericsson Validation Lab to deploy a Unicycle pod with an OVS-DPDK dataplane.

Please refer to the following lab configuration: Ericsson Unicycle OVS-DPDK Validation HW, Networking and IP plan.

| Code Block | ||

|---|---|---|

| ||

site_name: akraino-ki20

site_type: ovsdpdk # Note this field defines the dataplane to be deployed as OVS-DPDK

ipmi_admin:

username: root

password: calvin

networks:

bonded: yes

primary: bond0

slaves:

- name: enp95s0f0

- name: enp95s0f1

oob:

vlan: 400 # Note this VID is not used by current network cloud deployment process

interface:

cidr: 10.51.35.128/27 # Note this subnet mask length is used by the deployment scripts

netmask: 255.255.255.224 # Note this subnet mask is used by the deployment scripts

routes:

gateway: 10.51.35.129

ranges:

reserved:

start: 10.51.35.153

end: 10.51.35.158

static:

start: 10.51.35.132

end: 10.51.35.152

host:

vlan: 408

interface: bond0.408

cidr: 10.51.34.224/27 # Note this subnet mask length is used by the deployment scripts

subnet: 10.51.34.224

netmask: 255.255.255.224 # Note this subnet mask is used by the deployment scripts

routes:

gateway: 10.51.34.225

ranges:

reserved:

start: 10.51.34.226

end: 10.51.34.228

static:

start: 10.51.34.229

end: 10.51.34.235

storage:

vlan: 23

interface: bond0.23

cidr: 10.224.174.0/24 # Note this subnet mask length is not used by the deployment scripts and is hard coded to /24 in R1

#netmask: 255.255.255.0 - Not Used

ranges:

reserved:

start: 10.224.174.1

end: 10.224.174.10

static:

start: 10.224.174.11

end: 10.224.174.254

pxe:

vlan: 407

interface: eno3

cidr: 10.224.168.0/24 # Note this subnet mask length is not used by the deployment scripts and is hard coded to /24 in R1

#netmask: 255.255.255.0 - Not Used

gateway: 10.224.168.1

routes:

gateway: 10.224.168.12 #This address is the PXE interface of the Genesis Node.

ranges:

reserved:

start: 10.224.168.1

end: 10.224.168.10

static:

start: 10.224.168.11

end: 10.224.168.200

dhcp:

start: 10.224.168.201

end: 10.224.168.254

ksn:

vlan: 22

interface: bond0.22

cidr: 10.224.160.0/24 # Note this subnet mask length is not used by the deployment scripts and is hard coded to /24 in R1

#netmask: 255.255.255.0 - Not Used

local_asnumber: 65531 # Note: this is the ASN of the k8s calico network

ranges:

static:

start: 10.224.160.134

end: 10.224.160.254

additional_cidrs:

- 10.224.160.200/29

ingress_cidr: 10.224.160.201/32

peers:

- ip: 10.224.160.129 # Note: this is the IP address of the external fabric bgp router

scope: global

asnumber: 65001 # Note: this is the ASN of the external fabric bgp router

vrrp_ip: 10.224.160.129 # keep peers ip address in case of only peer.

neutron:

vlan: 24

interface: bond0.24

cidr: 10.224.171.0/24 # Note this subnet mask length is not used by the deployment scripts and is hard coded to /24 in R1

#netmask: 255.255.255.0 - Not Used

ranges:

reserved:

start: 10.224.171.1

end: 10.224.171.10

static:

start: 10.224.171.11

end: 10.224.171.254

vxlan:

vlan: 1 # Note this VID value defines that the dataplane interface is untagged and is the only supported option in R1

interface: enp134s0f0 # Note this is a single port; LAG is not supported with an OVS-DPDK dataplane in R1

cidr: 10.224.169.0/24 # Note this subnet mask length is not used by the deployment scripts and is hard coded to /24 in R1

#netmask: 255.255.255.0 - Not Used

ranges:

reserved:

start: 10.224.169.1

end: 10.224.169.10

static:

start: 10.224.169.11

end: 10.224.169.254

dns:

upstream_servers:

- 10.64.73.100

- 10.64.73.101

- 10.51.40.100

ingress_domain: vran.k2.ericsson.se

domain: vran.k2.ericsson.se

#################################################################################################################

#Note: The 'dpdk' statement below defines the deployment of an OVS-DPDK dataplane instead of an SR-IOV dataplane

dpdk:

nics:

- name: dpdk0

pci_id: '0000:86:00.0'

bridge: br-phy

migrate_ip: true

#################################################################################################################

storage:

osds:

- data: /dev/sda

journal: /var/lib/ceph/journal/journal-sda

- data: /dev/sdb

journal: /var/lib/ceph/journal/journal-sdb

- data: /dev/sdc

journal: /var/lib/ceph/journal/journal-sdc

- data: /dev/sdd

journal: /var/lib/ceph/journal/journal-sdd

- data: /dev/sde

journal: /var/lib/ceph/journal/journal-sde

- data: /dev/sdf

journal: /var/lib/ceph/journal/journal-sdf

osd_count: 6

total_osd_count: 18

genesis:

name : aknode23

oob: 10.51.35.143

host: 10.51.34.233

storage: 10.224.174.12

pxe: 10.224.168.12

ksn: 10.224.160.135

neutron: 10.224.171.12

vxlan: 10.224.169.12

root_password: akraino,d

masters:

- name: aknode31

oob: 10.51.35.147

host: 10.51.34.229

storage: 10.224.174.13

pxe: 10.224.168.13

ksn: 10.224.160.136

neutron: 10.224.171.13

vxlan: 10.224.169.13

oob_user: root

oob_password: calvin

- name : aknode25

oob: 10.51.35.144

host: 10.51.34.232

storage: 10.224.174.11

pxe: 10.224.168.11

ksn: 10.224.160.134

neutron: 10.224.171.11

vxlan: 10.224.169.11

oob_user: root

oob_password: calvin

#workers:

# - name : aknode32 # Note not verified in this validation lab in R1

# oob: 10.51.35.148

# host: 10.51.34.234

# storage: 10.224.174.14

# pxe: 10.224.168.14

# ksn: 10.224.160.137

# neutron: 10.224.171.14

# oob_user: root

# oob_password: calvin

# - name : aknode33 # Note not verified in this validation lab in R1

# oob: 10.51.35.149

# host: 10.51.34.235

# storage: 10.224.174.15

# pxe: 10.224.168.15

# ksn: 10.224.160.138

# neutron: 10.224.171.15

# oob_user: root

# oob_password: calvin

platform:

# vcpu_pin_set: "4-21,26-43,48-65,72-87"

kernel_params:

hugepagesz: '1G'

hugepages: 32

# default_hugepagesz: '1G'

# transparent_hugepage: 'never'

iommu: 'pt'

intel_iommu: 'on'

# amd_iommu: 'on'

# console: 'ttyS1,115200n8'

###########################################################################################

#Note: This section defines the use of Dell servers and their installed HW and BIOS version

hardware:

vendor: DELL

generation: '10'

hw_version: '3'

bios_version: '2.8'

bios_template: dell_r740_g14_uefi_base.xml.template

boot_template: dell_r740_g14_uefi_httpboot.xml.template

http_boot_device: NIC.Slot.2-1-1

###########################################################################################

disks:

- name : sdg

labels:

bootdrive: 'true'

partitions:

- name: root

size: 20g

mountpoint: /

- name: boot

size: 1g

mountpoint: /boot

- name: var

size: 100g

mountpoint: /var

- name : sdh

partitions:

- name: ceph

size: 300g

mountpoint: /var/lib/ceph/journal

disks_compute:

- name : sdg

labels:

bootdrive: 'true'

partitions:

- name: root

size: 20g

mountpoint: /

- name: boot

size: 1g

mountpoint: /boot

- name: var

size: '>300g'

mountpoint: /var

- name : sdh

partitions:

- name: nova

size: '99%'

mountpoint: /var/lib/nova

# Note: the key below must be generated each time a new RC is installed and inserted into the input file of any pods deployed by that RC

genesis_ssh_public_key: "ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQC+iukrMrXSPOnnz89BHQ0UWMb71OLhSb5byh4wPpjSHtqmTV7xsg0WWUId5K8ejqL+X1FKPdTlozY1Jy6LHq9yWu+nvSdCdcV5CnrodgNCIiY6z4B9Mhf3BoN9ADQtZKH7EpAzGiqYvncrZTwHlmIyc9ff8HxusWut1vKn8EJ96a07wybRpjOlblehSESTD6qyUxUGt6hrJZY/FXHJ+JvpogI55i0La9pt94RQVg8wOi1DqPWavOZzRT35A7bziKk79IoGOGnW9H+K5x7hiRFw1wrPoJrd1ixgLyg+UUwgZlDCf5AhDyajmb8rtDLckVFcw8KPuj7weGGFD7gqOw1N root@aknode29"

kubernetes:

api_service_ip: 10.96.0.1

etcd_service_ip: 10.96.0.2

pod_cidr: 10.98.0.0/16

service_cidr: 10.96.0.0/15

regional_server:

ip: 10.51.34.230 # Note: this is the host IP address of the Regional Controller that is being used to deploy this unicycle pod

|

Unicycle Pods with SR-IOV Dataplane on Dell 740XD servers

This section includes a sample input file that was used in the Ericsson Validation Lab to deploy a Unicycle pod with an SR-IOV dataplane.

Please refer to the following lab configuration: Ericsson Unicycle SR-IOV Validation HW, Networking and IP plan.

| Code Block | ||

|---|---|---|

| ||

site_name: akraino-ki20

ipmi_admin:

username: root

password: calvin

networks:

bonded: yes

primary: bond0

slaves:

- name: enp95s0f0

- name: enp95s0f1

oob:

vlan: 400 # Note this VID is not used by current network cloud deployment process

interface:

cidr: 10.51.35.128/27 # Note this subnet mask length is used by the deployment scripts

netmask: 255.255.255.224

routes:

gateway: 10.51.35.129

ranges:

reserved:

start: 10.51.35.153

end: 10.51.35.158

static:

start: 10.51.35.132

end: 10.51.35.152

host:

vlan: 408

interface: bond0.408

cidr: 10.51.34.224/27 # Note this subnet mask length is used by the deployment scripts

subnet: 10.51.34.224

netmask: 255.255.255.224

routes:

gateway: 10.51.34.225

ranges:

reserved:

start: 10.51.34.226

end: 10.51.34.228

static:

start: 10.51.34.229

end: 10.51.34.235

storage:

vlan: 23

interface: bond0.23

cidr: 10.224.174.0/24 # Note this subnet mask length is not used by the deployment scripts and is hard coded to /24 in R1

#netmask: 255.255.255.0 - Not Used

ranges:

reserved:

start: 10.224.174.1

end: 10.224.174.10

static:

start: 10.224.174.11

end: 10.224.174.254

pxe:

vlan: 407

interface: eno3

cidr: 10.224.168.0/24 # Note this subnet mask length is not used by the deployment scripts and is hard coded to /24 in R1

#netmask: 255.255.255.0 - Not Used

gateway: 10.224.168.1

routes:

gateway: 10.224.168.12 #This address is the PXE interface address of the Genesis Node.

ranges:

reserved:

start: 10.224.168.2

end: 10.224.168.10

static:

start: 10.224.168.11

end: 10.224.168.200

dhcp:

start: 10.224.168.201

end: 10.224.168.254

ksn:

vlan: 22

interface: bond0.22

cidr: 10.224.160.0/24 # Note this subnet mask length is not used by the deployment scripts and is hard coded to /24 in R1

#netmask: 255.255.255.0 - Not Used

local_asnumber: 65531 # Note: this is the ASN of the k8s calico network

ranges:

static:

start: 10.224.160.134

end: 10.224.160.254

additional_cidrs:

- 10.224.160.200/29

ingress_cidr: 10.224.160.201/32

peers:

- ip: 10.224.160.129 # Note: this is the IP address of the external fabric bgp router

scope: global

asnumber: 65001 # Note: this is the ASN of the external fabric bgp router

vrrp_ip: 10.224.160.129 # keep peers ip address in case of only peer.

neutron:

vlan: 24

interface: bond0.24

cidr: 10.224.171.0/24 # Note this subnet mask length is not used by the deployment scripts and is hard coded to /24 in R1

#netmask: 255.255.255.0 - Not Used

ranges:

reserved:

start: 10.224.171.1

end: 10.224.171.10

static:

start: 10.224.171.11

end: 10.224.171.254

dns:

upstream_servers:

- 10.64.73.100

- 10.64.73.101

- 10.51.40.100

ingress_domain: vran.k2.ericsson.se

domain: vran.k2.ericsson.se

####################################################################################################################

#Note: The 'sriovnets' statement below defines the deployment of a SR-IOV dataplane instead of an OVS-DPDK dataplane

sriovnets:

- physical: sriovnet1

interface: enp134s0f0

vlan_start: 2001

vlan_end: 3000

whitelists:

- "address": "0000:86:02.0"

- "address": "0000:86:02.1"

- "address": "0000:86:03.2"

- "address": "0000:86:03.3"

- "address": "0000:86:03.4"

- "address": "0000:86:03.5"

- "address": "0000:86:03.6"

- "address": "0000:86:03.7"

- "address": "0000:86:04.0"

- "address": "0000:86:04.1"

- "address": "0000:86:04.2"

- "address": "0000:86:04.3"

- "address": "0000:86:02.2"

- "address": "0000:86:04.4"

- "address": "0000:86:04.5"

- "address": "0000:86:04.6"

- "address": "0000:86:04.7"

- "address": "0000:86:05.0"

- "address": "0000:86:05.1"

- "address": "0000:86:05.2"

- "address": "0000:86:05.3"

- "address": "0000:86:05.4"

- "address": "0000:86:05.5"

- "address": "0000:86:02.3"

- "address": "0000:86:05.6"

- "address": "0000:86:05.7"

- "address": "0000:86:02.4"

- "address": "0000:86:02.5"

- "address": "0000:86:02.6"

- "address": "0000:86:02.7"

- "address": "0000:86:03.0"

- "address": "0000:86:03.1"

- physical: sriovnet2

interface: enp134s0f1

vlan_start: 2001

vlan_end: 3000

whitelists:

- "address": "0000:86:0a.0"

- "address": "0000:86:0a.1"

- "address": "0000:86:0b.2"

- "address": "0000:86:0b.3"

- "address": "0000:86:0b.4"

- "address": "0000:86:0b.5"

- "address": "0000:86:0b.6"

- "address": "0000:86:0b.7"

- "address": "0000:86:0c.0"

- "address": "0000:86:0c.1"

- "address": "0000:86:0c.2"

- "address": "0000:86:0c.3"

- "address": "0000:86:0a.2"

- "address": "0000:86:0c.4"

- "address": "0000:86:0c.5"

- "address": "0000:86:0c.6"

- "address": "0000:86:0c.7"

- "address": "0000:86:0d.0"

- "address": "0000:86:0d.1"

- "address": "0000:86:0d.2"

- "address": "0000:86:0d.3"

- "address": "0000:86:0d.4"

- "address": "0000:86:0d.5"

- "address": "0000:86:0a.3"

- "address": "0000:86:0d.6"

- "address": "0000:86:0d.7"

- "address": "0000:86:0a.4"

- "address": "0000:86:0a.5"

- "address": "0000:86:0a.6"

- "address": "0000:86:0a.7"

- "address": "0000:86:0b.0"

- "address": "0000:86:0b.1"

####################################################################################################################

storage:

osds:

- data: /dev/sda

journal: /var/lib/ceph/journal/journal-sda

- data: /dev/sdb

journal: /var/lib/ceph/journal/journal-sdb

- data: /dev/sdc

journal: /var/lib/ceph/journal/journal-sdc

- data: /dev/sdd

journal: /var/lib/ceph/journal/journal-sdd

- data: /dev/sde

journal: /var/lib/ceph/journal/journal-sde

- data: /dev/sdf

journal: /var/lib/ceph/journal/journal-sdf

osd_count: 6

total_osd_count: 18

genesis:

name : aknode23

oob: 10.51.35.143

host: 10.51.34.233

storage: 10.224.174.12

pxe: 10.224.168.12

ksn: 10.224.160.135

neutron: 10.224.171.12

root_password: akraino,d

masters:

- name: aknode31

oob: 10.51.35.147

host: 10.51.34.229

storage: 10.224.174.13

pxe: 10.224.168.13

ksn: 10.224.160.136

neutron: 10.224.171.13

oob_user: root

oob_password: calvin

- name : aknode25

oob: 10.51.35.144

host: 10.51.34.232

storage: 10.224.174.11

pxe: 10.224.168.11

ksn: 10.224.160.134

neutron: 10.224.171.11

oob_user: root

oob_password: calvin

#workers:

# - name : aknode32 # Note not verified in this validation lab in R1

# oob: 10.51.35.148

# host: 10.51.34.234

# storage: 10.224.174.14

# pxe: 10.224.168.14

# ksn: 10.224.160.137

# neutron: 10.224.171.14

# oob_user: root

# oob_password: calvin

# - name : aknode33 # Note not verified in this validation lab in R1

# oob: 10.51.35.149

# host: 10.51.34.235

# storage: 10.224.174.15

# pxe: 10.224.168.15

# ksn: 10.224.160.138

# neutron: 10.224.171.15

# oob_user: root

# oob_password: calvin

###########################################################################################

#Note: This section defines the use of Dell servers and their installed HW and BIOS version

hardware:

vendor: DELL

generation: '10'

hw_version: '3'

bios_version: '2.8'

bios_template: dell_r740_g14_uefi_base.xml.template

boot_template: dell_r740_g14_uefi_httpboot.xml.template

http_boot_device: NIC.Slot.2-1-1

###########################################################################################

disks:

- name : sdg

labels:

bootdrive: 'true'

partitions:

- name: root

size: 20g

mountpoint: /

- name: boot

size: 1g

mountpoint: /boot

- name: var

size: 100g

mountpoint: /var

- name : sdh

partitions:

- name: ceph

size: 300g

mountpoint: /var/lib/ceph/journal

disks_compute:

- name : sdg

labels:

bootdrive: 'true'

partitions:

- name: root

size: 20g

mountpoint: /

- name: boot

size: 1g

mountpoint: /boot

- name: var

size: '>300g'

mountpoint: /var

- name : sdh

partitions:

- name: nova

size: '99%'

mountpoint: /var/lib/nova

# Note: the key below must be generated each time a new RC is installed and inserted into the input file of any pods deployed by that RC

genesis_ssh_public_key: "ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQCos1E/53FhubxPOXncxByAI5WCyyt0UBhsBGCPlp5J5gM8vMZEX9pJI3uY/5R8z6crtuW9aneSQ9kZTkksGNoohWZDmLRymfJOOtfs5TlpRGvKAHMnYthDexeCKQixbrf0/9dzAUalzveM025D2ZJSM3mQ9kDr6Pn4220Lbsez50CzH9dKRNKzydDTvuwOi0unTQf9DNNc9WzDTO83UwmGVtR2mIq8ZXOd1G4To3cT0P487NezsRrCtscbdoe4YdAW9h7wxNC1saZNbx2kARIOjO79bKT1U+j9XQZTuHKbzhLxXqqUkEGfmqGvA1H6iAMPl4Hz8itM0veW63Zolwg/ root@aknode29"

kubernetes:

api_service_ip: 10.96.0.1

etcd_service_ip: 10.96.0.2

pod_cidr: 10.98.0.0/16

service_cidr: 10.96.0.0/15

regional_server:

ip: 10.51.34.230 # Note: this is the host IP address of the Regional Controller that is being used to deploy this unicycle pod

|

Unicycle Pods with SR-IOV Dataplane on HP DL380 servers

This section includes a sample input file that was used in the ATT Validation Lab to deploy a Unicycle pod with an SR-IOV dataplane.

...
