...

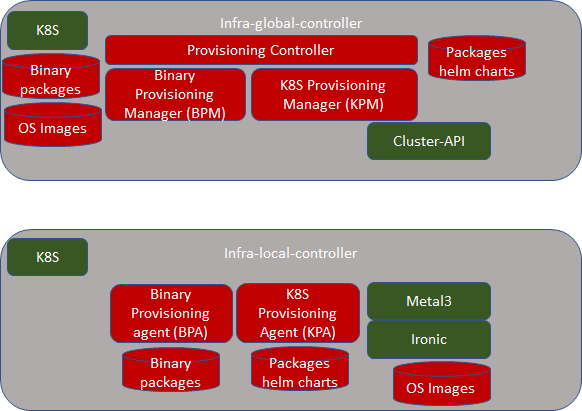

Architecture:

Blocks and Modules

All the green items are existing open source projects. Any enhancements they require are best made in the upstream community.

All the red items are expected to be part of the Akraino BP. In some cases, code from various upstream projects can be leveraged, but we marked these items in red because we do not yet know to what extent the upstream code can be used as is. Some guidance:

- KPM can be borrowed from the Multi-Cloud K8S plugin.

- Part of the provisioning controller (mainly location registration) can be borrowed from the Multi-Cloud K8S plugin.

- The provisioning controller might use Tekton or Argo for workflow management.

Infra-local-controller:

"infra-local-controller" is expected to run on the bootstrap machine of each location. The bootstrap machine installs the required software on the compute nodes used for future workloads. For example, if a location has 10 servers, 1 server can be used as the bootstrap machine and the other 9 servers can be used as compute nodes for running workloads. The bootstrap machine not only installs all required software on the compute nodes, but is also expected to patch and update them with newer versions of the software.

As shown in the picture above, the bootstrap machine itself is based on K8S. Note that this K8S is different from the K8S that gets installed on the compute nodes; these are two different K8S clusters. The bootstrap machine is a complete single-node K8S cluster in which the one node runs both the master and minion software. All the components of infra-local-controller (such as BPA, KPA, Metal3 and Ironic) are themselves containers.
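The 10-server example above can be sketched as a simple role assignment. This is only an illustration: the server names and the `assign_roles` helper are hypothetical, and choosing the first server as the bootstrap machine is an arbitrary assumption, not something the blueprint prescribes.

```python
def assign_roles(servers):
    """Split a location's servers into one bootstrap machine and compute nodes.

    Assumption for illustration: the first server in the list becomes the
    bootstrap machine; every remaining server becomes a compute node.
    """
    if len(servers) < 2:
        raise ValueError("need one bootstrap machine and at least one compute node")
    return {"bootstrap": servers[0], "compute": list(servers[1:])}


# Hypothetical inventory for a location with 10 servers.
servers = [f"server-{i:02d}" for i in range(1, 11)]
roles = assign_roles(servers)

print(roles["bootstrap"])     # server-01
print(len(roles["compute"]))  # 9
```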

Infra-local-controller is expected to be brought up in two ways:

- As a USB bootable disk: One should be able to take any bare-metal server, insert the USB disk and restart the server. This means the USB bootable disk shall bring up basic Linux, K8S and all containers without any user action. It is also expected to carry the packages and OS images required to provision the actual compute nodes. In the example above, these binaries, OS images and packages are installed on the 9 compute nodes.

- As individual entities: Developers shall be able to use any machine without inserting a USB disk. In this case, the developer chooses a machine as the bootstrap machine, installs the Linux OS, installs K8S using kubeadm and then brings up BPA, KPA, Metal3 and Ironic. The developer then uploads packages to the system via the REST APIs provided by BPA.

Note that infra-local-controller can run without infra-global-controller. In the interim release, we expect only infra-local-controller to be supported; infra-global-controller is targeted for the final Akraino R2 release. The goal is that any operations done manually on infra-local-controller in the interim release are automated by infra-global-controller. Hence the interface provided by infra-local-controller is flexible enough to support both manual and automated actions.

As indicated above, infra-local-controller is expected to bring up a K8S cluster on the compute nodes used for workloads. Bringing up the workload K8S cluster normally requires the following steps:

1. Bring up the Linux operating system.

2. Provision the software with the right configuration.

3. Bring up the basic Kubernetes components (such as kubelet, Docker, kubectl, kubeadm, etc.).

4. Bring up the components that can be installed using kubectl.

Steps 1 and 2 are expected to be handled by Metal3 and Ironic, step 3 by BPA, and step 4 by KPA.
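The division of responsibility described above can be written down as a small lookup table. This is a sketch for orientation only; the `BRING_UP_STEPS` structure and the `owner_of` helper are hypothetical names and do not correspond to any interface of BPA, KPA, Metal3 or Ironic.

```python
# Ordered bring-up steps for the workload K8S cluster, paired with the
# component the text names as responsible for each step.
BRING_UP_STEPS = [
    (1, "Bring up the Linux operating system", "Metal3 and Ironic"),
    (2, "Provision the software with the right configuration", "Metal3 and Ironic"),
    (3, "Bring up the basic Kubernetes components", "BPA"),
    (4, "Bring up the components installable with kubectl", "KPA"),
]


def owner_of(step_number):
    """Return the component responsible for the given step number."""
    for number, _description, owner in BRING_UP_STEPS:
        if number == step_number:
            return owner
    raise KeyError(f"unknown step: {step_number}")


# Print the plan in execution order.
for number, description, owner in BRING_UP_STEPS:
    print(f"step {number}: {description} -> {owner}")
```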

The procedure the user would follow is:

- Boot up a bootstrap machine using the USB bootable disk.

- Using kubectl against infra-local-controller, create Metal3 CRs to make Ironic ready for the compute nodes to PXE boot and install Linux.

- Once Linux is installed, go to BPA (which is a K8S operator) and install the binary packages.

- Once the binary packages are confirmed to be installed, go to BPA and ask it to install container packages on the compute nodes (via another CR provided by BPA).
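The second step of the procedure can be illustrated with a Metal3 `BareMetalHost` resource, the CR that Metal3 uses to describe a machine for Ironic to provision. The following is a minimal sketch, not the blueprint's actual configuration: the host name, MAC address, BMC address, secret name and image URLs are all hypothetical placeholders, and the BPA CRs used in the later steps are not shown because their schema is not described here. The script prints JSON, which `kubectl apply -f -` accepts.

```python
import json


def bare_metal_host(name, boot_mac, bmc_address, bmc_secret, image_url, image_checksum):
    """Build a Metal3 BareMetalHost manifest as a plain dictionary."""
    return {
        "apiVersion": "metal3.io/v1alpha1",
        "kind": "BareMetalHost",
        "metadata": {"name": name},
        "spec": {
            "online": True,                    # power the host on
            "bootMACAddress": boot_mac,        # NIC used for PXE boot
            "bmc": {
                "address": bmc_address,        # out-of-band management endpoint
                "credentialsName": bmc_secret, # Secret holding BMC credentials
            },
            "image": {"url": image_url, "checksum": image_checksum},
        },
    }


# All values below are hypothetical placeholders for one compute node.
manifest = bare_metal_host(
    name="compute-node-01",
    boot_mac="00:00:5e:00:53:01",
    bmc_address="ipmi://192.0.2.10",
    bmc_secret="compute-node-01-bmc-secret",
    image_url="http://192.0.2.1/images/compute-node.qcow2",
    image_checksum="http://192.0.2.1/images/compute-node.qcow2.md5sum",
)

rendered = json.dumps(manifest, indent=2)
print(rendered)
```

Assuming the script is saved under a name such as `bmh.py`, its output could be piped to `kubectl apply -f -` on the bootstrap machine's K8S cluster.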

Solution

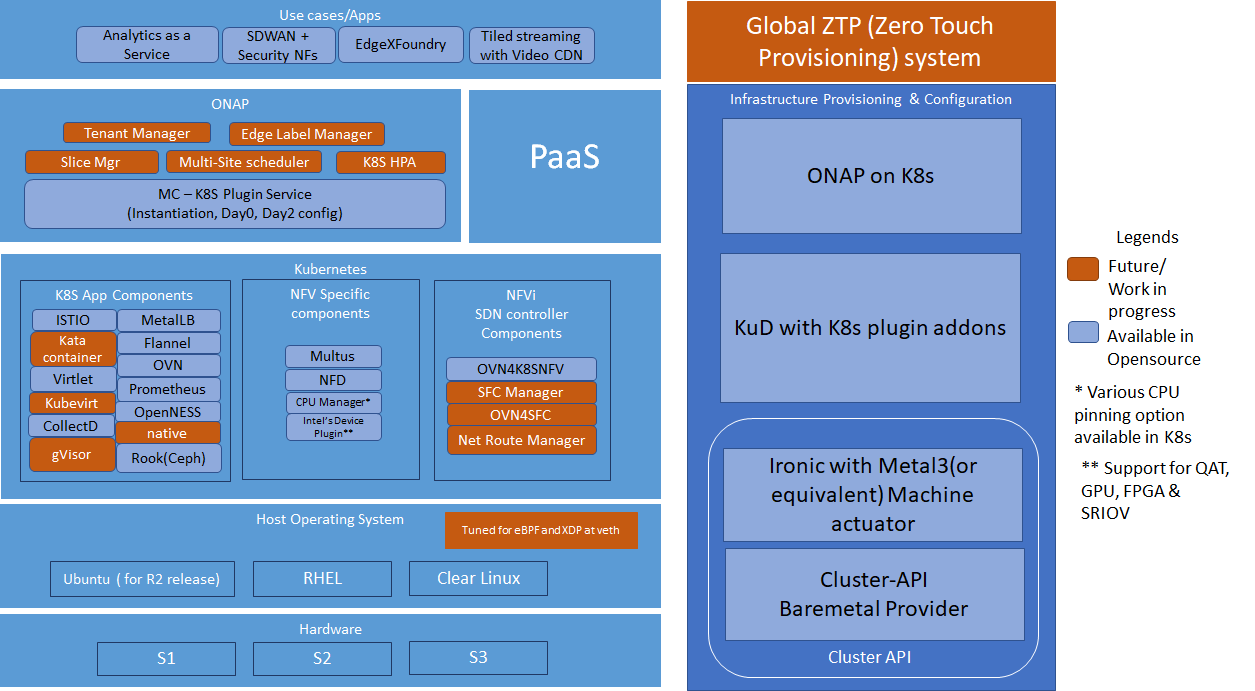

Global ZTP:

The Global ZTP system is used for infrastructure provisioning and configuration in the ICN family. It is subdivided into 3 deployments: Cluster-API, KuD and ONAP on K8s.

...

| Components | Required state of implementation | Expected Result |
|---|---|---|
| ZTP | All-in-one ZTP script with cluster-API and Baremetal operator | |
| ONAP | Should be integrated with the above script | |
| KuD addons | Daemonset yaml should be integrated with the above script. Run an alpine container with Multiple networks, NFD and QAT device plugin | |

Akraino R2 release

| Components | Required state of implementation | Expected Result |
|---|---|---|
| ZTP | All-in-one ZTP script with cluster-API and Baremetal operator | |
| ONAP | Should be integrated with the above script | |
| KuD addons | Daemonset yaml should be integrated with the above script | |
| Tenant Manager | Should be deployed as part of KuD addons | |
| Dashboard | Dashboard run as deployment in ONAP cluster | |
| App | Instantiate 3 workloads from ONAP to show the SFC functionality in Dashboard | |
| CI | End-to-End testing script | |

Akraino R3 release

| Components | Required state of implementation | Expected Result |
|---|---|---|
| ZTP | All-in-one ZTP script with cluster-API and Baremetal operator | |
| ONAP | Should be integrated with the above script | |
| KuD addons | Daemonset yaml should be integrated with the above script | |
| Dashboard | Dashboard run as deployment in ONAP cluster | |
| App | Instantiate 3 workloads from ONAP to show the SFC functionality in Dashboard | |
| CI | End-to-End testing script | |
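The R2 and R3 tables above list almost the same components, so the difference is easy to miss. A small sketch of a comparison follows; the component names are copied from the tables, while the variable names are made up for the illustration.

```python
# Component lists as they appear in the R2 and R3 tables.
R2_COMPONENTS = ["ZTP", "ONAP", "KuD addons", "Tenant Manager", "Dashboard", "App", "CI"]
R3_COMPONENTS = ["ZTP", "ONAP", "KuD addons", "Dashboard", "App", "CI"]

# Components listed for R2 but not for R3, and the other way round.
only_in_r2 = [c for c in R2_COMPONENTS if c not in R3_COMPONENTS]
only_in_r3 = [c for c in R3_COMPONENTS if c not in R2_COMPONENTS]

print(only_in_r2)  # ['Tenant Manager']
print(only_in_r3)  # []
```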

Future releases

Yet to be discussed.

...