Introduction

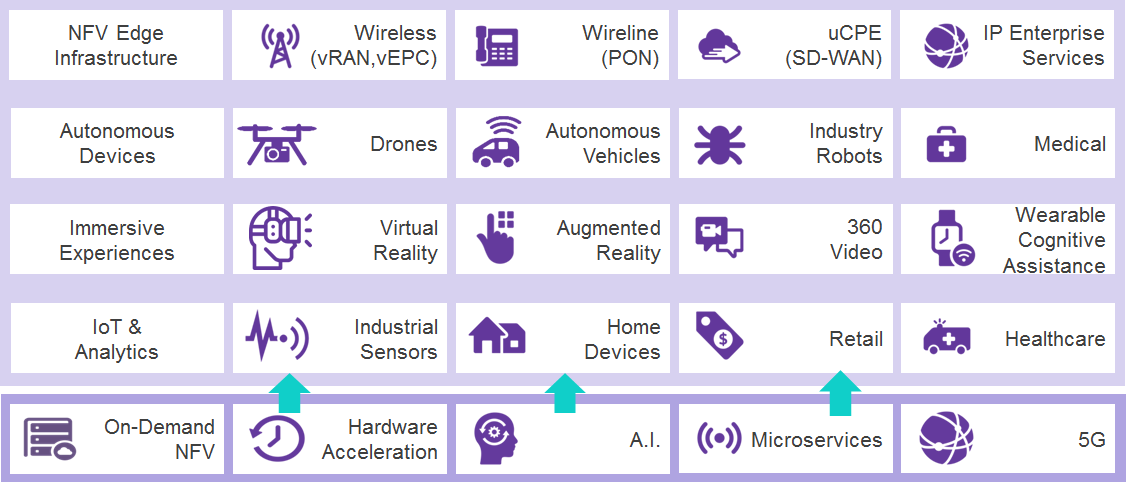

The evolution and rapid progression of new technologies like augmented reality, autonomous vehicles, drones, and smart cities are inevitable. The amount of data produced and processed at the user end of the network is enormous, and the resulting communication between the cloud and distributed endpoints becomes cumbersome. Storing and managing this volume of data, and processing it in real time, becomes a challenge.

The demand for real-time processing at the edge, instead of centralized processing, and the need for high-capacity storage can both be addressed with Edge Computing. Edge Computing places processing and storage capabilities closer to the endpoint using familiar cloud technologies. This approach will reduce the total cost of ownership and enable faster processing to meet application latency requirements (< 20 ms). The Edge Cloud solution will also comply with local and global data privacy requirements.

There are many open source projects that provide component capabilities required for edge computing. However, there is no holistic solution to address the need for fully integrated edge infrastructure.

Akraino Edge Stack, a Linux Foundation project initiated by AT&T and Intel, provides a fully integrated edge infrastructure solution and is focused entirely on Edge Computing. This open source software stack provides critical infrastructure to enable high performance, reduce latency, improve availability, lower operational overhead, provide scalability, address security needs, and improve fault management. The Edge infrastructure solution will support carrier, provider, and IoT networks.

AT&T’s seed code will enable carrier-scale edge computing applications to run in virtual machines and containers. AT&T’s contributions, which will include support for 5G, IoT, and other networking edge services, will enhance reliability and enable high performance.

Intel’s contribution will include open source components, namely its Wind River Titanium Cloud portfolio of technologies and Intel Network Edge Virtualization Software Development Kit (Intel NEV SDK). Wind River Titanium Cloud’s compact and performant technology enables ease of deployment and operational efficiency. The Intel NEV SDK provides a suite of reference libraries and APIs to enable different edge-computing solution deployment architectures for small cells, macro cells, wireline, aggregation sites, and central offices.

The Akraino Edge Stack project community, while embracing several existing open source projects, will continue to focus on the following Community Goals:

▪ Faster Edge Innovation - Focused group facilitating faster innovation, incorporating hardware acceleration, software-defined networking, and other emerging capabilities into a modern Edge stack.

▪ End-to-End Ecosystem - Definition and certification of H/W stacks, configurations, and Edge VNFs.

▪ User Experience - Address both operational and user use cases.

▪ Seamless Edge Cloud Interoperability - Standard to interoperate across multiple Edge Clouds.

▪ Provide End-to-End Stack - End-to-end integrated solution with demonstrable use cases.

▪ Use and Improve Existing Open Source - Maximize the use of existing industry investments while developing and up-streaming enhancements, avoiding further fragmentation of the ecosystem.

▪ Support Production-Ready Code - Security established by design, with support for the full life cycle.

Akraino is complementary to, and interfaces with, existing projects, namely Acumos AI, Airship, Ceph, DANOS, EdgeX Foundry, Kubernetes, LF Networking, ONAP, OpenStack, and StarlingX.

Emerging Technologies

Emerging technologies in the Internet of Things (IoT) demand lower latencies and accelerated processing at the edge.

Running cloud infrastructure at the network edge allows for the virtualization of applications key to running 5G mobility networks at greater scale and density and at lower cost using commodity hardware. This infrastructure also enables the virtualization of wireline services and Enterprise IP services, and even supports the virtualization of customer premises equipment. This shortens the time to provision new services for customers and, in some cases, allows those customers to self-provision their service changes.

Autonomous vehicles, drones, and similar customer devices require substantial compute power to support video processing, analytics, and similar workloads. Edge computing enables these devices to offload processing to the Edge within the required latency limit.

Devices like Virtual Reality (VR) headsets and augmented reality applications on users’ mobile devices also require extremely low latency to prevent lag that would degrade the user experience. To keep this experience optimal, it is critical to place computing resources close to the end user, which keeps latencies to and from their devices as low as possible.

Edge computing also benefits manufacturing and shipping businesses that depend on timely information for data-driven decisions. Receiving and processing this data at the edge allows more timely decision making, leading to better business outcomes.

Network Edge - Optimal Zone for Edge Placement

The processing power demands of customer devices, namely AR/VR, drones, and autonomous vehicles, are ever increasing and require very low latency, typically measured in milliseconds. Where the processing takes place plays a major role in both quality of user experience and cost of ownership. A centralized cloud decreases the total cost of ownership (TCO) but fails to address the low latency requirement. Placement at customer premises is nearly impossible with respect to cost and infrastructure. Considering the cost, low latency, and high processing power requirements, the best available option is to use existing infrastructure such as telco towers, central offices, and other telco real estate. These will be the optimal zones for edge placement.
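As a rough illustration of why placement matters, the sketch below estimates round-trip propagation delay over optical fibre for a given one-way distance. It assumes a fibre refractive index of about 1.5 and ignores queuing, processing, and radio access delays, so real budgets are tighter. The function names and sample distances are illustrative and are not part of Akraino.

```python
# Rough propagation-delay estimate. All names and sample distances are
# illustrative assumptions, not values taken from the Akraino project.
SPEED_OF_LIGHT_KM_PER_S = 299_792.458
FIBRE_REFRACTIVE_INDEX = 1.5  # assumption: typical value for optical fibre

# Signal speed in fibre, expressed in kilometres per millisecond.
FIBRE_SPEED_KM_PER_MS = SPEED_OF_LIGHT_KM_PER_S / FIBRE_REFRACTIVE_INDEX / 1000.0


def round_trip_delay_ms(one_way_km: float) -> float:
    """Round-trip propagation delay in milliseconds over fibre."""
    return 2.0 * one_way_km / FIBRE_SPEED_KM_PER_MS


def max_one_way_km(budget_ms: float) -> float:
    """Largest one-way distance whose propagation delay alone fits the budget."""
    return budget_ms * FIBRE_SPEED_KM_PER_MS / 2.0


if __name__ == "__main__":
    for km in (10, 100, 1000):
        print(f"{km:>5} km one way -> {round_trip_delay_ms(km):.2f} ms round trip")
```

Under these assumptions, propagation adds about 1 ms of round-trip delay per 100 km, so a 20 ms application budget bounds the one-way distance at roughly 2,000 km before any processing or queuing time is counted.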

Akraino Edge Stack

The Akraino Edge Stack is a declarative configuration of solution components. The framework will allow multiple hardware and software SKUs to co-exist with a complete CI/CD process. It will support VM, container, and bare metal workloads. The Software SKU will be a combination of upstream software and components hosted in Akraino. Common components, for example ONAP, will be used across the SKUs. The gating process will ensure that a SKU is accepted only after it passes the guidelines and approval process.
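A minimal sketch of what a declarative SKU description and a gating check could look like is shown below. The `Sku` fields, the `gate` function, and the individual checks are hypothetical and chosen only for illustration; they do not reflect Akraino's actual schema or approval process.

```python
from dataclasses import dataclass

# Workload types named in the text above.
SUPPORTED_WORKLOADS = frozenset({"vm", "container", "bare_metal"})


@dataclass(frozen=True)
class Sku:
    """Hypothetical declarative description of one hardware/software SKU."""
    name: str
    workload_types: frozenset        # subset of SUPPORTED_WORKLOADS
    upstream_components: tuple       # e.g. ("ONAP",)
    akraino_components: tuple = ()   # components hosted in Akraino


def gate(sku: Sku, approved: bool) -> list:
    """Return the reasons a SKU is rejected; an empty list means accepted.

    The checks are illustrative assumptions, not Akraino's real guidelines.
    """
    problems = []
    if not sku.workload_types:
        problems.append("no workload type declared")
    unknown = sku.workload_types - SUPPORTED_WORKLOADS
    if unknown:
        problems.append(f"unsupported workload types: {sorted(unknown)}")
    if not (sku.upstream_components or sku.akraino_components):
        problems.append("no software components declared")
    if not approved:
        problems.append("approval process not completed")
    return problems
```

Returning a list of reasons rather than a boolean keeps the gate's decision explainable, which is useful when the check runs inside a CI/CD pipeline.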

A typical service provider will have thousands of Edge sites. These Edge sites will be deployed at cell tower sites, central offices, and other service provider real estate such as wire centers. End-to-End Edge automation and Zero-Touch provisioning are required to minimize OPEX and meet the requirements for provisioning agility.

In the context of 5G, Edge Computing enables operator and third-party services to be hosted close to the User Equipment's (UE's) access point of attachment, so as to achieve efficient service delivery through reduced end-to-end latency and reduced load on the transport network. 3GPP TS 23.501 contains a section addressing the architecture and requirements, which is summarized below.

The 5G Core Network selects a User Plane Function (UPF) close to the UE and steers traffic from the UPF to the local Data Network via an N6 interface. This selection may be based on the UE's subscription data, the UE location, information from the Application Function (AF), policy, or other related traffic rules. Due to user or Application Function mobility, service or session continuity may be required, depending on the requirements of the service on the 5G network. The 5G Core Network may expose network information and capabilities to an Edge Computing Application Function.

Depending on the operator deployment, certain Application Functions can be allowed to interact directly with the Control Plane Network Functions with which they need to interact, while the other Application Functions need to use the external exposure framework via the Network Exposure Function (NEF).
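The distinction above can be sketched as a small decision function. The `ApplicationFunction` class and its `allowed_direct_access` flag are hypothetical stand-ins for whatever the operator's deployment policy decides; they are not defined by 3GPP or Akraino.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ApplicationFunction:
    name: str
    # Hypothetical flag: set by the operator's deployment policy.
    allowed_direct_access: bool


def exposure_path(af: ApplicationFunction) -> str:
    """Return how the AF reaches the Control Plane Network Functions."""
    return "direct" if af.allowed_direct_access else "via NEF"
```

For example, `exposure_path(ApplicationFunction("third-party-app", False))` returns `"via NEF"`.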

The functionality supporting Edge Computing includes:

- User plane (re)selection: the 5G Core Network (re)selects UPF to route the user traffic to the local Data Network

- Local Routing and Traffic Steering: the 5G Core Network selects the traffic to be routed to the applications in the local Data Network

- Session and service continuity to enable UE and application mobility

- An Application Function may influence UPF (re)selection and traffic routing via Policy Control Function (PCF) or NEF

- Network capability exposure: 5G Core Network and Application Function to provide information to each other via NEF or directly

- QoS and Charging: PCF provides rules for QoS Control and Charging for the traffic routed to the local Data Network

- Support of Local Area Data Network: 5G Core Network provides support to connect to the LADN in a certain area where the applications are deployed
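The user plane (re)selection item in the list above can be sketched as follows. This is a simplified illustration that considers only UE location and Data Network reachability; an actual 5G Core also weighs subscription data, policy, and Application Function input. The `Upf` class, the planar coordinates, and the `select_upf` function are assumptions made for the example.

```python
from dataclasses import dataclass
from math import hypot
from typing import Optional, Sequence, Tuple


@dataclass(frozen=True)
class Upf:
    """Hypothetical UPF record with a planar position in kilometres."""
    name: str
    x_km: float
    y_km: float
    local_data_networks: frozenset  # Data Networks reachable over N6


def select_upf(ue_xy: Tuple[float, float], data_network: str,
               upfs: Sequence[Upf]) -> Optional[Upf]:
    """Pick the UPF closest to the UE that can reach the requested Data Network.

    Returns None when no UPF serves that Data Network.
    """
    candidates = [u for u in upfs if data_network in u.local_data_networks]
    if not candidates:
        return None
    return min(candidates,
               key=lambda u: hypot(u.x_km - ue_xy[0], u.y_km - ue_xy[1]))
```

Reselection in this sketch is simply a second call to `select_upf` with the UE's new position; keeping the session alive across that change is the job of the session and service continuity functionality listed above.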

The Akraino site hierarchy consists of Central sites that deploy Regional sites and Regional sites that deploy Edge sites. The figure below shows Central site C1 deploying Regional sites R1, R2, R3, and R4 and Central site C2 deploying Regional sites R5, R6, R7, and R8. Regional site R1 deploys Edge sites E11, E12, and other sites in the R1 "Edge Flock". Regional site R2 deploys Edge sites E21, E22, and other sites in the R2 "Edge Flock", and so on.

Regional sites serve as the controller for Edge sites in their corresponding "Edge Flock". For instance, R1 is the Regional Controller for Edge sites E11, E12, and others. R1 is responsible for Day 0 site deployment and for Day 1/Day 2 site operations and lifecycle management of E11, E12, and other sites in the R1 "Edge Flock". Similarly, R2 is the Regional Controller for Edge sites E21, E22, and others. R2 is responsible for Day 0 site deployment and for Day 1/Day 2 site operations and lifecycle management of E21, E22, and other sites in the R2 "Edge Flock", and so on.
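The three-level hierarchy described above can be modelled as a small tree. The sketch below is only an illustration: the `Site` class, its `deploy` method, and the `edge_flock` helper are hypothetical names, not Akraino APIs.

```python
from dataclasses import dataclass, field
from typing import List

# Which kind of site each level is allowed to deploy.
DEPLOYS = {"central": "regional", "regional": "edge"}


@dataclass
class Site:
    name: str
    kind: str  # "central", "regional", or "edge"
    children: List["Site"] = field(default_factory=list)

    def deploy(self, child: "Site") -> "Site":
        """Attach a child site, enforcing Central -> Regional -> Edge."""
        if DEPLOYS.get(self.kind) != child.kind:
            raise ValueError(f"{self.kind} site cannot deploy {child.kind} site")
        self.children.append(child)
        return child


def edge_flock(regional: Site) -> List[str]:
    """Names of the Edge sites managed by a Regional Controller."""
    return [site.name for site in regional.children]


if __name__ == "__main__":
    c1 = Site("C1", "central")
    r1 = c1.deploy(Site("R1", "regional"))
    r1.deploy(Site("E11", "edge"))
    r1.deploy(Site("E12", "edge"))
    print(edge_flock(r1))
```

Encoding the allowed parent-child pairs in one table keeps the hierarchy rule in a single place, so an Edge site attempting to deploy anything is rejected.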

To promote high availability of Edge Cloud services, an Akraino Regional Controller can fail over to other Regional Controllers. For instance, Regional Controller R2 can back up Regional Controller R1, Regional Controller R3 can back up Regional Controller R2, and so on. To support this, the portal orchestration at each Regional Controller has visibility into all Edge sites in the hierarchy. Similarly, Central sites C1 and C2, which deploy Regional sites, back each other up and have visibility into all Regional sites in the hierarchy.
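One way to picture the backup arrangement is as a ring in which each Regional Controller is backed up by the next one. The sketch below follows the R2-backs-up-R1 pattern from the text. Two details are assumptions made for the example: the ring wraps around so the last controller is backed up by the first, and a failed backup hands over to its own backup. The function names are hypothetical.

```python
from typing import Dict, Optional, Sequence, Set


def backup_ring(controllers: Sequence[str]) -> Dict[str, str]:
    """Map each controller to the controller that backs it up.

    Assumption: the ring wraps, so the last controller is backed up by the first.
    """
    if len(controllers) < 2:
        raise ValueError("at least two controllers are needed for failover")
    n = len(controllers)
    return {controllers[i]: controllers[(i + 1) % n] for i in range(n)}


def active_controller(primary: str, backups: Dict[str, str],
                      healthy: Set[str]) -> Optional[str]:
    """Return the first healthy controller, starting at the primary and
    following the backup chain; None if every controller is down."""
    current = primary
    for _ in range(len(backups)):
        if current in healthy:
            return current
        current = backups[current]
    return None
```

Because every Regional Controller has visibility into all Edge sites, any controller returned by `active_controller` can, in principle, take over the failed controller's Edge Flock.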