Introduction

Physical Networking Architecture Considerations

The Network Cloud blueprints deploy Rover and Unicycle pods at multiple edge locations, driven by a Regional Controller (RC). As such, the networking architecture spans both the WAN between the RC and the edge pods and the edge sites themselves.

It is not desirable to span L2 broadcast domains over the WAN, nor to span L2 domains between edge sites. The networking architecture therefore allows each edge site to be deployed with its own isolated local L2 domains, with only IP connectivity across the WAN.

Layer 2, IP and BGP Architecture

The diagrams below show the physical, Layer 2, IP and BGP architecture for the Network Cloud family of blueprints in R1.

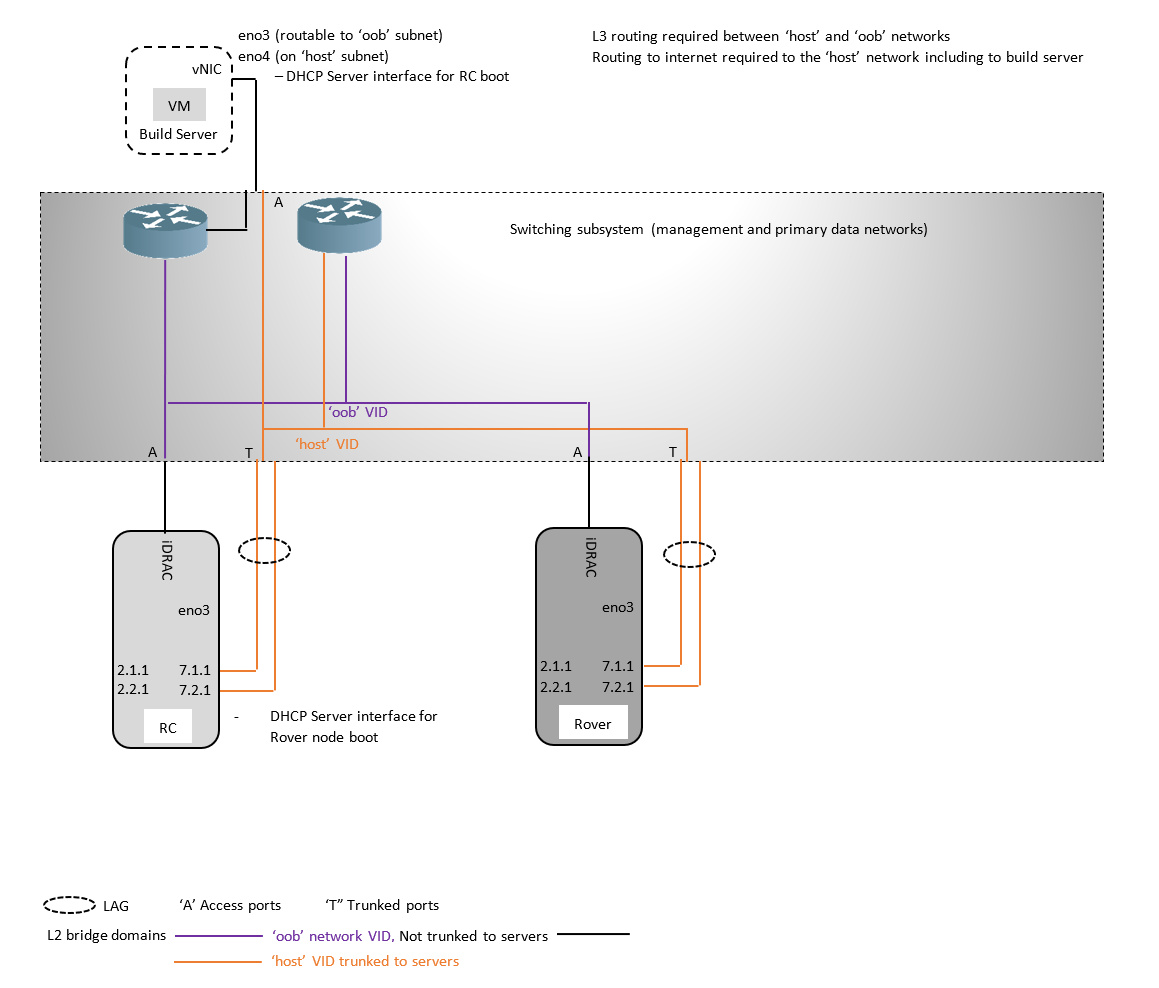

Rover

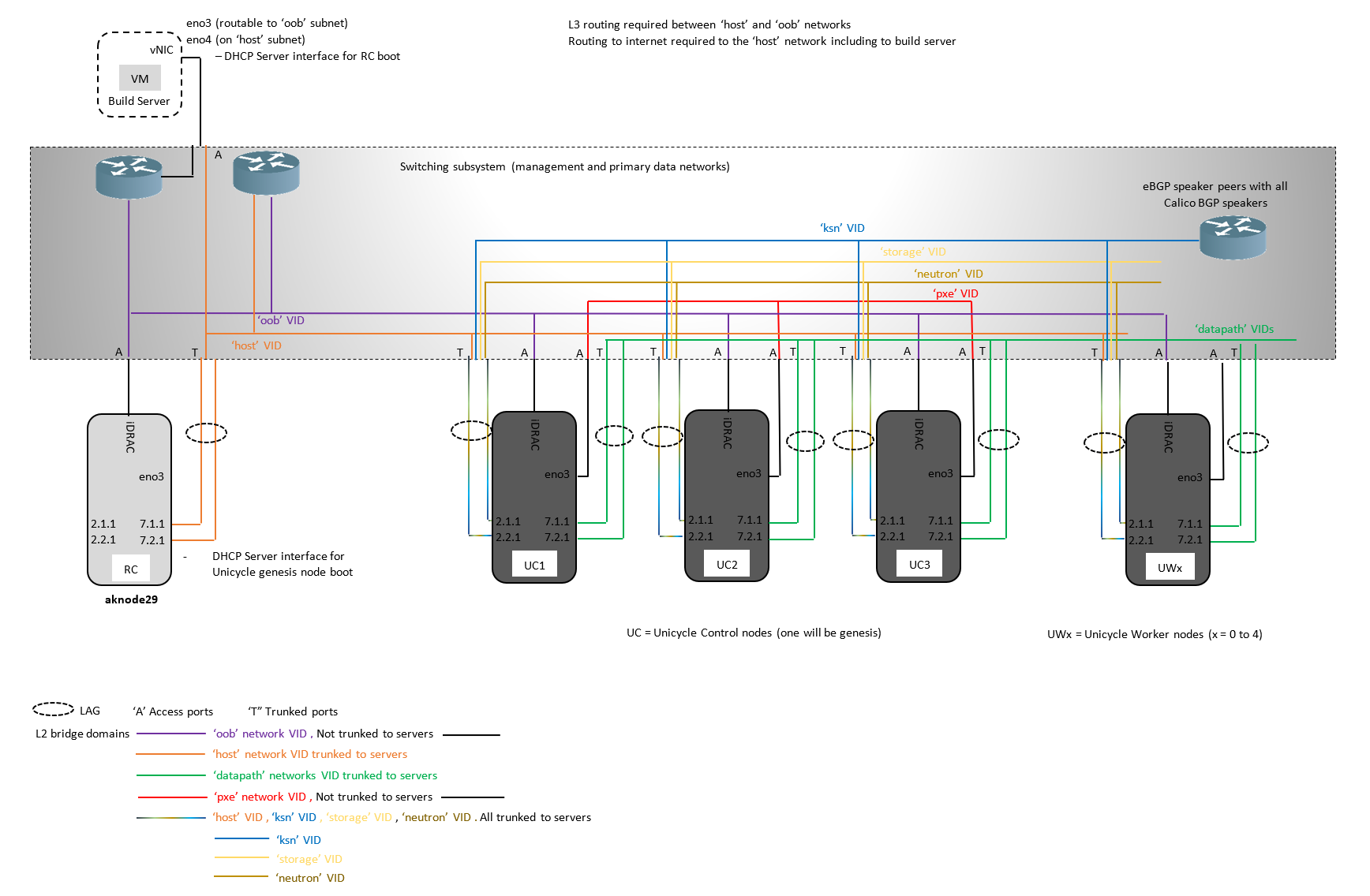

Unicycle with SR-IOV datapath

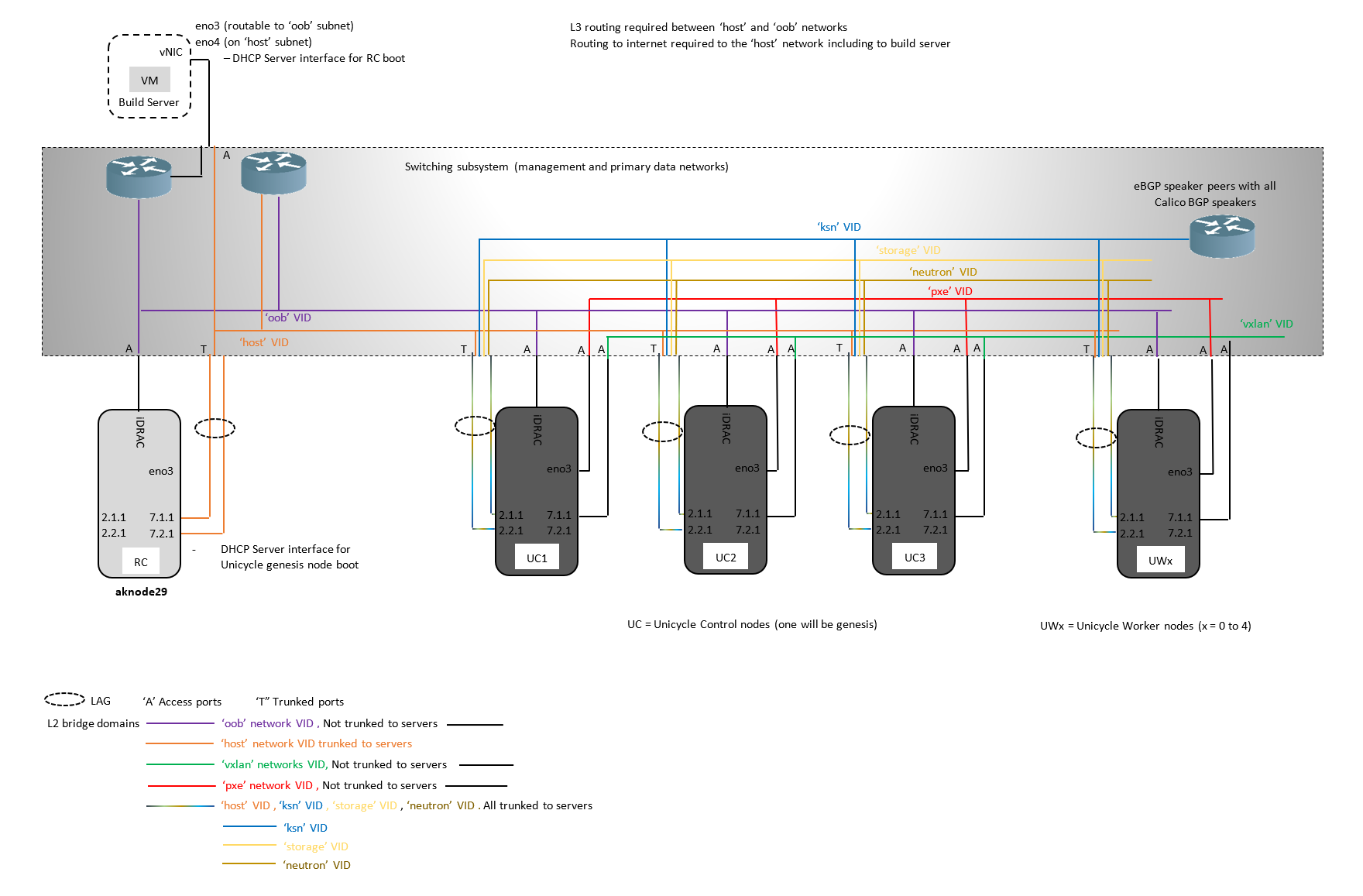

Unicycle with OVS-DPDK datapath

LAG Considerations

The RC (when installed on bare metal), Rover servers and Unicycle Genesis nodes boot via their VLAN-tagged 'host' interfaces, which are pre-provisioned on the serving TOR switches with LAG bonding. Because booting occurs before the Linux kernel can bring up LACP signaling, the TOR switches must be configured to pass traffic on the primary (first) link before the LAG bundle is up.

The switch configuration for the 'host' interface bond must therefore bring up the bond on the first interface before LACP completes negotiation.

- For Junos OS, this option is typically called force-up and should be set on the first interface in the bond.

- For Arista, this option is typically called lacp fallback.

- Ensure that any TOR switch to be used supports this functionality, then refer to the switch vendor's documentation for the correct configuration.
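To make the fallback behaviour concrete, the following Python sketch models which member links of a 'host' bond a TOR forwards on, before and after LACP converges. It is a simplified model, not vendor code: the names BondState and forwarding_links and the port names are hypothetical, and it assumes, as described above, that the primary (first) link is the one brought up by force-up or lacp fallback. Real switches add timeouts and vendor-specific fallback modes.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class BondState:
    """Hypothetical model of a TOR-side LAG serving a node's 'host' interfaces."""
    members: list[str]       # member ports; members[0] is the primary (first) link
    lacp_negotiated: bool    # True once the node's kernel has brought up LACP
    fallback_enabled: bool   # force-up (Junos OS) / lacp fallback (Arista) configured


def forwarding_links(bond: BondState) -> list[str]:
    """Return the member links on which the TOR passes traffic (simplified)."""
    if bond.lacp_negotiated:
        # Normal operation: the whole bundle is up.
        return list(bond.members)
    if bond.fallback_enabled and bond.members:
        # Before LACP (e.g. during DHCP/HTTP PXE boot): only the primary link forwards.
        return bond.members[:1]
    # No fallback: the bundle stays down until LACP converges, so boot traffic is dropped.
    return []


# During PXE boot the kernel is not running yet, so LACP has not converged.
booting = BondState(members=["eth-a", "eth-b"], lacp_negotiated=False, fallback_enabled=True)
print(forwarding_links(booting))  # ['eth-a']
```

With fallback_enabled=False in the same booting state the function returns an empty list, which corresponds to the failure mode the fallback option avoids: the booting node's DHCP Requests never reach the server.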

The 'host' network interfaces on all DHCP clients and servers should be located on the same L2 network so that the DHCP Request/Reply messages broadcast from the client reach the server and vice versa.

Alternatively, DHCP helper/relay functionality may be implemented on the TOR to which the DHCP clients are attached, allowing inter-subnet DHCP operation between the DHCP client and server.
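The two options above can be sketched in Python with the standard ipaddress module. This is an illustrative model under stated assumptions: a shared subnet stands in for a shared L2 domain, the function names and packet fields are hypothetical, and the relay step follows the generic DHCP relay agent behaviour of RFC 1542/RFC 2131 (fill in giaddr, increment hops, unicast to the server) rather than any specific TOR implementation. The addresses are documentation placeholders.

```python
import ipaddress


def dhcp_path(client_if: str, server_if: str, relay_on_tor: bool) -> str:
    """Classify how a client's DHCP broadcast can reach the server (simplified)."""
    client_net = ipaddress.ip_interface(client_if).network
    server_net = ipaddress.ip_interface(server_if).network
    if client_net == server_net:
        # Assumption: same subnet means same L2 broadcast domain.
        return "broadcast"
    if relay_on_tor:
        # The TOR's helper/relay forwards the broadcast to the server as unicast.
        return "relay"
    # Broadcasts are not routed, so the server never sees the request.
    return "unreachable"


def relay_forward(packet: dict, relay_ip: str, server_ip: str) -> dict:
    """Model a relay agent forwarding a client's DHCP message to the server."""
    fwd = dict(packet)
    if fwd.get("giaddr", "0.0.0.0") == "0.0.0.0":
        # giaddr tells the server which client subnet to allocate from.
        fwd["giaddr"] = relay_ip
    fwd["hops"] = fwd.get("hops", 0) + 1
    fwd["dst"] = server_ip
    return fwd


print(dhcp_path("198.51.100.20/24", "192.0.2.1/24", relay_on_tor=True))  # relay
```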

DHCP, HTTP PXE and PXE Booting

Network Cloud family blueprint deployments involve several different DHCP servers, as well as both HTTP PXE and non-HTTP PXE boot procedures, during the automated deployment process.
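One way a DHCP server can tell these boot procedures apart is the vendor class identifier (DHCP option 60) sent by the client: UEFI HTTP Boot clients identify as "HTTPClient…" and PXE clients as "PXEClient…". The Python sketch below classifies a request on that basis. It illustrates the general mechanism only and is an assumption, not a description of how the Build Server, RC or MaaS are actually configured; the function name and return labels are hypothetical.

```python
from typing import Optional


def boot_method(vendor_class_id: Optional[str]) -> str:
    """Classify a DHCP request by its option 60 vendor class identifier.

    Typical values look like 'HTTPClient:Arch:xxxxx:UNDI:yyyzzz' (UEFI HTTP Boot)
    or 'PXEClient:Arch:xxxxx:UNDI:yyyzzz' (PXE).
    """
    if vendor_class_id is None:
        return "dhcp-only"        # e.g. an OS-level DHCP client after boot
    if vendor_class_id.startswith("HTTPClient"):
        return "http-pxe"         # reply with a boot file URL
    if vendor_class_id.startswith("PXEClient"):
        return "pxe"              # reply with next-server and boot file name
    return "dhcp-only"


print(boot_method("HTTPClient:Arch:00016:UNDI:003001"))  # http-pxe
```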

Regional Controller Deployment

When installed on a bare metal server, the RC is configured by Redfish API calls issued by the Build Server to make DHCP Requests and then HTTP PXE boot over its VLAN-tagged 'host' network interface. (When the RC is installed in a VM, the BIOS configuration and operating system installation, and thus these steps, are skipped.)
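As an illustration of the kind of Redfish calls involved, the Python sketch below builds (but does not send) a one-time UEFI HTTP boot override followed by a reset, using properties and actions from the DMTF Redfish ComputerSystem schema. The system URI is a placeholder, the function name is hypothetical, support for the UefiHttp target varies by BMC vendor and firmware, and this is not necessarily the exact sequence the Build Server issues.

```python
def http_boot_requests(system_uri: str) -> list:
    """Return (method, URI, JSON body) tuples for a one-time HTTP boot (sketch)."""
    boot_override = {
        "Boot": {
            "BootSourceOverrideEnabled": "Once",      # revert after the next boot
            "BootSourceOverrideTarget": "UefiHttp",   # boot via UEFI HTTP (HTTP PXE)
            "BootSourceOverrideMode": "UEFI",
        }
    }
    # ForceRestart assumes the server is already powered on.
    reset = {"ResetType": "ForceRestart"}
    return [
        ("PATCH", system_uri, boot_override),
        ("POST", f"{system_uri}/Actions/ComputerSystem.Reset", reset),
    ]


for method, uri, body in http_boot_requests("/redfish/v1/Systems/1"):
    print(method, uri)
# PATCH /redfish/v1/Systems/1
# POST /redfish/v1/Systems/1/Actions/ComputerSystem.Reset
```

In practice these requests would be sent over HTTPS, with credentials, to the server's BMC on the 'oob' network; that transport step is omitted here.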

The Build Server acts as the DHCP and HTTP PXE boot server for the RC via its own 'host' network interface.

The 'host' network must provide connectivity for these processes between the RC and the Build Server.

In R1 the 'host' network has been verified as a single L2 broadcast domain.

The RC DHCP/HTTP PXE process flow is summarized below.

Rover Deployment

The Rover server is configured by Redfish API calls issued by the RC to make DHCP Requests and then HTTP PXE boot over its VLAN-tagged 'host' network interface.

The RC acts as the DHCP and HTTP PXE boot server for the Rover server via its own tagged 'host' network interface.

The 'host' network must provide connectivity across the WAN between the Rover edge site and the RC in a more centralized location.

In R1 the 'host' network has been verified as a single L2 broadcast domain.

The Rover DHCP/HTTP PXE process flow is summarized below.

It is possible to split the 'host' network spanning the WAN into multiple routed L2 domains using DHCP helper/relay functionality on the Rover's TOR, but this is unverified in R1.

Unicycle Pod Deployment

A Unicycle pod's Genesis node is configured by Redfish API calls issued by the RC to make DHCP Requests and then HTTP PXE boot over its VLAN-tagged 'host' network interface.

The RC acts as the DHCP and HTTP PXE boot server for the Unicycle Genesis node via its own tagged 'host' network interface.

The 'host' network must provide connectivity across the WAN between the Unicycle pod's edge site and the RC in a more centralized location.

In R1 the 'host' network has been verified as a single L2 broadcast domain.

Unicycle masters and workers (all Unicycle nodes other than Genesis) make DHCP Requests on the L2 broadcast domain of the edge site's local 'pxe' network. MaaS on the Genesis node acts as the DHCP server.

Unicycle masters and workers are configured by the MaaS server running on the Genesis node to PXE boot over the edge site's local 'pxe' network.
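The DHCP/boot relationships described in the deployment sections above can be summarized as a small lookup table. The Python sketch below encodes them (the role names are hypothetical labels) and derives the order in which servers must already be up before a given node can boot. It assumes a bare metal RC and the R1-verified topology.

```python
# role -> (role that serves its DHCP/boot, network used, boot method)
# Derived from the deployment descriptions; role names are hypothetical labels.
BOOT_PATHS = {
    "rc": ("build-server", "host", "http-pxe"),             # bare metal RC only
    "rover": ("rc", "host", "http-pxe"),
    "unicycle-genesis": ("rc", "host", "http-pxe"),
    "unicycle-master": ("unicycle-genesis", "pxe", "pxe"),  # served by MaaS on Genesis
    "unicycle-worker": ("unicycle-genesis", "pxe", "pxe"),  # served by MaaS on Genesis
}


def boot_chain(role: str) -> list:
    """Return the boot order from the first server down to `role` itself."""
    chain = [role]
    while chain[-1] in BOOT_PATHS:
        chain.append(BOOT_PATHS[chain[-1]][0])
    return list(reversed(chain))


print(boot_chain("unicycle-worker"))
# ['build-server', 'rc', 'unicycle-genesis', 'unicycle-worker']
```

Only the roles whose entry uses the 'host' network (the RC, Rover and Unicycle Genesis) depend on 'host' connectivity; Unicycle masters and workers only need the edge site's local 'pxe' network.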

The Unicycle DHCP/HTTP PXE/PXE process flow is summarized below.

It is possible to split the 'host' network spanning the WAN into multiple routed L2 domains using functionality such as DHCP helper/relay on the Unicycle Genesis node's TOR, but this is unverified in R1. Note: this is not applicable to the other nodes in the Unicycle pod, because their DHCP and PXE boot process runs over the local 'pxe' network.

IP Address Plan

In the R1 release, the subnets marked "/24 fixed in R1" below must have a prefix length of /24.

| Network name | Subnet size | Needs to be externally routable*? | Function |
|---|---|---|---|
| 'oob' | Any* | No | Provides the connectivity between the Build Server and the RC, and then between the RC and the Rover/Unicycle nodes, for Redfish API calls to configure/interrogate the servers' BIOS. |
| 'host' | Any* | Yes | Provides the edge site's isolated network for MaaS provisioning (MaaS runs on the Genesis node once provisioned). It does not need to be routable to any other network. |
| 'pxe' | /24 fixed in R1 | No | Provides the connectivity and BGP route exchange for the Calico network. |
| 'ksn' | /24 fixed in R1 | No | Provides the OpenStack storage network. |
| 'neutron' | /24 fixed in R1 | No | Provides the OpenStack Neutron network. |
| 'datapath' | Defined in OpenStack | No ** | Provides the OpenStack datapath network for the SR-IOV variant VMs. |
| 'vxlan' | /24 fixed in R1 | No | Provides the OpenStack storage network for the OVS-DPDK variant VMs. |
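A per-site address plan can be sanity-checked against the /24 constraint with Python's standard ipaddress module. The sketch below is a hypothetical helper, not part of the blueprint tooling: it checks that the networks marked "/24 fixed in R1" are /24 and, as an added assumption, that no two networks in the plan overlap. 'datapath' is not checked against /24 because its subnet is defined in OpenStack, and the example addresses are placeholders.

```python
from __future__ import annotations

import ipaddress
from itertools import combinations

# Networks whose subnet size is fixed at /24 in R1, per the table above.
FIXED_24 = {"pxe", "ksn", "neutron", "vxlan"}


def check_plan(plan: dict[str, str]) -> list[str]:
    """Return a list of problems found in a {network name: CIDR} plan."""
    problems = []
    nets = {name: ipaddress.ip_network(cidr) for name, cidr in plan.items()}
    for name in sorted(FIXED_24 & set(nets)):
        if nets[name].prefixlen != 24:
            problems.append(f"{name}: must be /24 in R1, got /{nets[name].prefixlen}")
    # Assumption: distinct networks at one site should not overlap.
    for (a, net_a), (b, net_b) in combinations(sorted(nets.items()), 2):
        if net_a.overlaps(net_b):
            problems.append(f"{a} overlaps {b}")
    return problems


site_plan = {  # hypothetical placeholder addresses
    "oob": "10.0.0.0/26",
    "host": "10.0.1.0/24",
    "pxe": "10.0.2.0/24",
    "ksn": "10.0.3.0/24",
    "neutron": "10.0.4.0/24",
    "vxlan": "10.0.4.128/25",
}
print(check_plan(site_plan))
# ['vxlan: must be /24 in R1, got /25', 'neutron overlaps vxlan']
```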