Overview

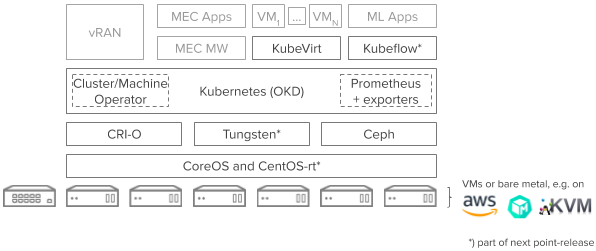

The Provider Access Edge blueprint is part of Akraino's Kubernetes-Native Infrastructure family of blueprints. As such, it uses best practices and tools from the Kubernetes community to declaratively manage edge computing stacks at scale. The aim is a consistent user experience from the infrastructure up to the services, and from developer environments to production environments on bare metal or public cloud.

This blueprint targets small-footprint deployments able to host NFV workloads (in particular vRAN) and MEC workloads (e.g. AR/VR, machine learning). Its key features are:

- Lightweight, self-managing clusters based on CoreOS and Kubernetes (OKD distro).

- Support for VMs (via KubeVirt) and containers on a common infrastructure.

- Application lifecycle management using the Operator Framework.

- Support for multiple networks using Multus.

- Support for real-time workloads using CentOS-rt.
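
As an illustration of the Multus feature listed above, the following minimal sketch builds a `NetworkAttachmentDefinition` manifest for a secondary macvlan network, plus the pod annotation that requests it. The network name `fronthaul`, the host interface `eth1`, and the subnet are hypothetical values chosen for the example; they are not part of the blueprint.

```python
import json


def network_attachment(name: str, master: str, subnet: str) -> dict:
    """Build a NetworkAttachmentDefinition for a macvlan secondary network."""
    # CNI configuration for the macvlan plugin; Multus stores it as a JSON string.
    cni_config = {
        "cniVersion": "0.3.1",
        "type": "macvlan",
        "master": master,  # host interface to attach to (hypothetical here)
        "mode": "bridge",
        "ipam": {"type": "host-local", "subnet": subnet},
    }
    return {
        "apiVersion": "k8s.cni.cncf.io/v1",
        "kind": "NetworkAttachmentDefinition",
        "metadata": {"name": name},
        "spec": {"config": json.dumps(cni_config)},
    }


def pod_annotation(*network_names: str) -> dict:
    """Return the pod annotation that asks Multus for extra interfaces."""
    return {"k8s.v1.cni.cncf.io/networks": ",".join(network_names)}


if __name__ == "__main__":
    # All three arguments are example values, not blueprint defaults.
    manifest = network_attachment("fronthaul", "eth1", "192.168.10.0/24")
    print(json.dumps(manifest, indent=2))
    print(pod_annotation("fronthaul"))
```

The printed manifest can be saved as JSON and applied to a cluster where Multus is installed; a pod carrying the annotation then receives one additional interface per listed network.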

Architecture

Resource Requirements

Deployments to AWS

| Nodes | Instance type |
|---|---|
| 1x bootstrap (temporary) | EC2: m4.xlarge, EBS: 120GB GP2 |
| 3x masters | EC2: m4.xlarge, EBS: 120GB GP2 |
| 3x workers | EC2: m4.large, EBS: 120GB GP2 |

Deployments to Bare Metal

| Nodes | Requirements |
|---|---|
| 1x provisioning host (temporary) | 12 cores, 16GB RAM, 200GB disk free, 3 NICs (1 internet connectivity, 1 provisioning+storage, 1 cluster) |
| 3x masters | 12 cores, 16GB RAM, 200GB disk free, 2 NICs (1 provisioning+storage, 1 cluster) |
| 3x workers | 12 cores, min. 16GB RAM, 200GB disk free, 2 SR-IOV-capable NICs (1 provisioning+storage, 1 cluster) |
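
The bare-metal requirements above lend themselves to a simple pre-flight check. The sketch below encodes the table as minimums per role and reports which fields of a host fall short. The host dictionary layout and field names (`cores`, `ram_gb`, `disk_free_gb`, `nics`, `sriov_nics`) are assumptions made for this example; the blueprint does not define an inventory format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Requirement:
    cores: int
    ram_gb: int
    disk_free_gb: int
    nics: int
    sriov_nics: int = 0  # number of NICs that must be SR-IOV capable


# Minimums copied from the bare-metal requirements table.
REQUIREMENTS = {
    "provisioning": Requirement(12, 16, 200, nics=3),
    "master": Requirement(12, 16, 200, nics=2),
    "worker": Requirement(12, 16, 200, nics=2, sriov_nics=2),
}


def check_host(role: str, host: dict) -> list[str]:
    """Return a list of shortfalls for a host in the given role (empty if none)."""
    req = REQUIREMENTS[role]
    problems = []
    for field in ("cores", "ram_gb", "disk_free_gb", "nics", "sriov_nics"):
        needed = getattr(req, field)
        actual = host.get(field, 0)  # a missing field counts as zero
        if actual < needed:
            problems.append(f"{field}: have {actual}, need at least {needed}")
    return problems


if __name__ == "__main__":
    # Hypothetical worker candidate whose NICs lack SR-IOV support.
    candidate = {"cores": 24, "ram_gb": 128, "disk_free_gb": 400,
                 "nics": 2, "sriov_nics": 0}
    for problem in check_host("worker", candidate):
        print(problem)
```

Treating the table values as lower bounds keeps the check permissive: a host that exceeds every minimum passes, and only the worker role requires SR-IOV-capable NICs.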

The blueprint validation lab uses seven Supermicro SuperServer 1028R-WTR (Black) servers with the following specs:

| Units | Type | Description |
|---|---|---|
| 2 | CPU | BDW-EP 12C E5-2650V4 2.2G 30M 9.6GT QPI |
| 8 | Mem | 16GB DDR4-2400 2RX8 ECC RDIMM |
| 1 | SSD | Samsung PM863, 480GB, SATA 6Gb/s, VNAND, 2.5" SSD - MZ7LM480HCHP-00005 |
| 4 | HDD | Seagate 2.5" 2TB SATA 6Gb/s 7.2K RPM 128M, 512N (Avenger) |
| 2 | NIC | Standard LP 40GbE with 2 QSFP ports, Intel XL710 |

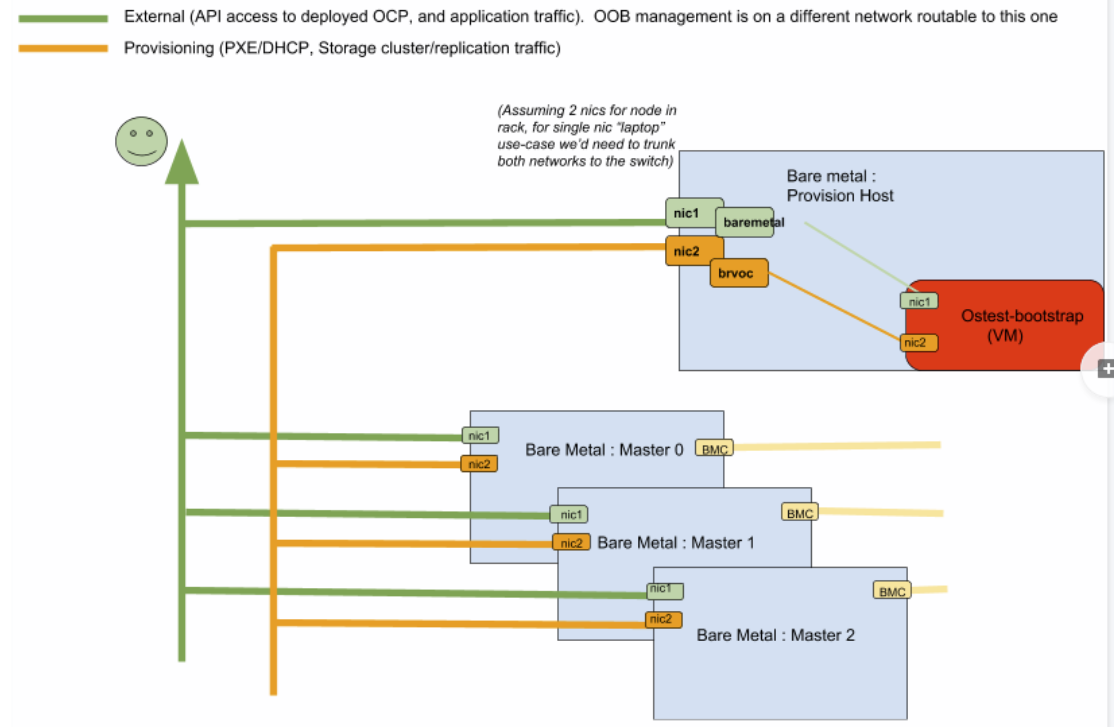

Networking for the machines has to be set up as follows:

Deployments to libvirt

| Nodes | Requirements |
|---|---|
| 1x bootstrap (temporary) | 2 vCPUs, 2GB RAM, 2GB disk (sparse) |
| 3x masters | 2 vCPUs, 8GB RAM, 2GB disk (sparse) |
| 3x workers | 2 vCPUs, 4GB RAM, 2GB disk (sparse) |
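
To size a libvirt host, the per-node figures above can be added up. The sketch below does this arithmetic for vCPUs and RAM only. Disk is left out because the images are sparse and their eventual size is not stated here. The totals assume no vCPU or memory overcommit on the host, which is an assumption of this example rather than a blueprint requirement.

```python
# Per-role sizing copied from the libvirt table:
# (node count, vCPUs per node, RAM in GB per node).
SIZING = {
    "bootstrap": (1, 2, 2),
    "master": (3, 2, 8),
    "worker": (3, 2, 4),
}


def totals(roles):
    """Return (total vCPUs, total RAM in GB) for the given roles."""
    vcpus = sum(SIZING[role][0] * SIZING[role][1] for role in roles)
    ram_gb = sum(SIZING[role][0] * SIZING[role][2] for role in roles)
    return vcpus, ram_gb


if __name__ == "__main__":
    # The bootstrap node is temporary, so the peak occurs during installation.
    print("during install:", totals(SIZING))
    print("after bootstrap removal:", totals(["master", "worker"]))
```

With the figures from the table this comes to 14 vCPUs and 38GB of RAM during installation, and 12 vCPUs and 36GB once the temporary bootstrap node is removed.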

Documentation

See KNI Blueprint User Documentation.

Project Team

| Member | Company | Contact | Role | Photo & Bio |
|---|---|---|---|---|
| Andrew Bays | Red Hat | | Committer | |
| Frank Zdarsky | Red Hat | | Committer | Senior Principal Software Engineer, Red Hat Office of the CTO; Edge Computing and |
| Jennifer Koerv | Intel | | Committer | |
| Manjari Asawa | Wipro | Manjari Asawa <manjari.asawa@wipro.com> | Committer | |
| Mikko Ylinen | Intel | | Committer | |
| Ned Smith | Intel | | Committer | |
| Ricardo Noriega | Red Hat | Ricardo Noriega | Committer | Red Hat NFVPE - CTO office - Networking |
| Sukhdev Kapur | Juniper | | Committer | Distinguished Engineer; Contrail Software - CTO Org |
| Yolanda Robla | Red Hat | Yolanda Robla Mota | PTL, Committer | Red Hat NFVPE - Edge, baremetal provisioning |

Use Case Template

| Attributes | Description | Informational |
|---|---|---|
| Type | New | |
| Industry Sector | Telco and carrier networks | |
| Business Driver | | |
| Business Use Cases | | |
| Business Cost - Initial Build Cost Target Objective | | |
| Business Cost - Target Operational Objective | | |
| Security Need | | |
| Regulations | | |
| Other Restrictions | | |
| Additional Details | | |

Blueprint Template

| Attributes | Description | Informational |
|---|---|---|
| Type | New | |
| Blueprint Family - Proposed Name | Kubernetes-Native Infrastructure for Edge (KNI-Edge) | |
| Use Case | Provider Access Edge (PAE) | |
| Blueprint - Proposed Name | Provider Access Edge (PAE) | |
| Initial POD Cost (CAPEX) | less than $150k (TBC) | |
| Scale & Type | 3 to 7 x86 servers (Xeon class) | |
| Applications | vRAN (RIC), MEC apps (CDN, AI/ML, …) | |
| Power Restrictions | less than 10kW (TBC) | |
| Infrastructure orchestration | End-to-end Service Orchestration: ONAP | |
| SDN | Tungsten Fabric (with SR-IOV, DPDK, and multi-interface support); leaf-and-spine fabric management | |
| SDS | Ceph | |
| Workload Type | containers, VMs | |
| Additional Details | | |