The Continuous Deployment Lab provided by Enea for IEC has two ThunderX1 servers on which we run nightly virtual deployments of IEC Type 2, run validation, and install the SEBA use case.

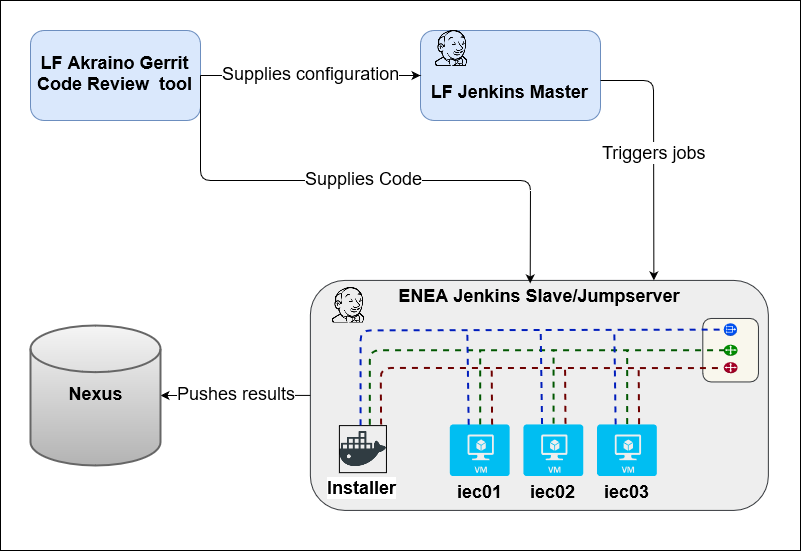

Continuous Integration → Continuous Deployment Flow

Enea's CI/CD validation lab is run using Jenkins and is connected to the Akraino Linux Foundation Jenkins master.

The Jenkins jobs are configured via JJB in the Akraino ci-management project: https://gerrit.akraino.org/r/gitweb?p=ci-management.git;a=tree;f=jjb/iec

These jobs are loaded in the LF Jenkins master and triggered periodically: https://jenkins.akraino.org/view/iec/

Each deployment has a master job that calls four subsequent jobs (e.g. https://jenkins.akraino.org/view/iec/job/iec-type2-fuel-virtual-centos7-daily-master/):

- Deploy IEC
  - The installer creates the cluster VMs (using KVM), clones the IEC repo, and installs the IEC platform on them (e.g. https://jenkins.akraino.org/view/iec/job/iec-type2-deploy-fuel-virtual-centos7-daily-master/)
- Run validation k8s conformance tests
  - The k8s conformance tests are run against the cluster using the validation project (e.g. https://jenkins.akraino.org/view/iec/job/validation-enea-daily-master/)
- Install SEBA use case
  - The scripts that install the SEBA use case are run (e.g. https://jenkins.akraino.org/view/iec/job/iec-type2-install-seba_on_arm-fuel-virtual-centos7-daily-master/)
- Cleanup
  - Destroy the setup (e.g. https://jenkins.akraino.org/view/iec/job/iec-type2-destroy-fuel-virtual-centos7-daily-master/)
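The sequence driven by the master job can be sketched in Python as follows. This is only an illustration of the flow, not the actual JJB definition. The stage names and the `run_stage` callable are hypothetical. Skipping the remaining stages after a failure while still running cleanup is an assumption that the job descriptions above do not confirm.

```python
from typing import Callable, Dict, List

# Hypothetical stage names mirroring the four sub-jobs listed above.
STAGES: List[str] = ["deploy-iec", "k8s-conformance", "install-seba"]
CLEANUP = "destroy"


def run_master_job(run_stage: Callable[[str], bool]) -> Dict[str, str]:
    """Run the stages in order and report a status per stage.

    run_stage is a hypothetical callable that triggers one sub-job and
    returns True on success. Assumption: a failed stage causes the
    remaining stages to be skipped, but cleanup always runs.
    """
    results: Dict[str, str] = {}
    failed = False
    for stage in STAGES:
        if failed:
            results[stage] = "skipped"
            continue
        ok = run_stage(stage)
        results[stage] = "success" if ok else "failure"
        failed = not ok
    # Cleanup runs last regardless of earlier outcomes (assumption).
    results[CLEANUP] = "success" if run_stage(CLEANUP) else "failure"
    return results
```

For example, calling `run_master_job(lambda name: name != "k8s-conformance")` simulates a conformance failure: the SEBA installation is reported as skipped and the destroy stage still runs.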

Logs from the CD installation of Integrated Edge Cloud (IEC) are available at: https://nexus.akraino.org/content/sites/logs/production/vex-yul-akraino-jenkins-prod-1/

Akraino Continuous Deployment Hardware

| Sales Item | Description | QTY |
| --- | --- | --- |
| cn8890 | Gigabyte ThunderX R120-T32 (1U) | 2 |

Chassis Level Specification

Total Physical Compute Cores: 48

Total Physical Compute Memory: 256GB

Total SSD-based OS Storage: 480GB

Networking per Server: 2x 1G and 2x 10G

IEC Cabling

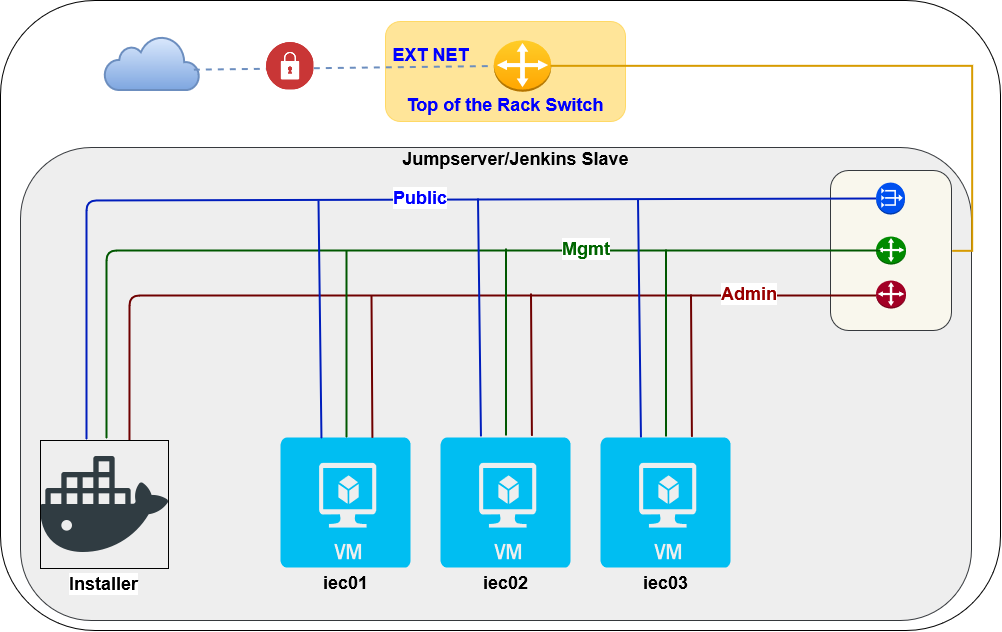

Virtual Deploy using Fuel@OPNFV installer

Based on the configuration passed to it, the installer creates the VMs and virtual networks, then installs the OS and IEC.

The setup is created based on a Pod Descriptor File (PDF) and an Installer Descriptor File (IDF). The files for the two servers in the Enea lab are at https://gerrit.akraino.org/r/gitweb?p=iec.git;a=tree;f=ci/labs/arm

The PDF contains information about the VMs (RAM, CPUs, Disks). The IDF contains the virtual subnets that need to be created for the cluster and the interfaces on the Jumphost that are going to be connected to the clusters. The first two interfaces on the Jumphost will be used for Admin and Public subnets.
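The interface rule can be illustrated with a small Python sketch. It assumes the first interface maps to Admin and the second to Public, in that order. The function name, the returned dictionary, and the interface names in the example are hypothetical and do not reflect the real IDF schema.

```python
from typing import Dict, List


def map_jumphost_interfaces(interfaces: List[str]) -> Dict[str, str]:
    """Pair the first two Jumphost interfaces with the Admin and Public subnets.

    Assumption: the list is in the same order as in the IDF, with Admin
    first and Public second. The returned shape is illustrative only.
    """
    if len(interfaces) < 2:
        raise ValueError("at least two Jumphost interfaces are required")
    return {"admin": interfaces[0], "public": interfaces[1]}
```

With a hypothetical list such as `["eth0", "eth1", "eth2"]`, the function pairs `eth0` with Admin and `eth1` with Public, and ignores any further interfaces.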

An installation creates 3 VMs and installs the OS given as a parameter. The supported OSes are Ubuntu 16.04, Ubuntu 18.04 and CentOS 7. Each VM is attached to three subnets:

- Admin: used during installation

- Mgmt: used by k8s

- Public: used for external access
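The resulting layout (three VMs, each attached to the Admin, Mgmt and Public subnets) can be modelled with a short Python sketch. The OS identifiers, node names and class name are hypothetical and are not the values the installer actually accepts.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical identifiers for the three supported operating systems.
SUPPORTED_OSES = ("ubuntu1604", "ubuntu1804", "centos7")
# The three subnets every VM is attached to.
SUBNETS = ("admin", "mgmt", "public")


@dataclass
class VirtualNode:
    name: str
    os: str
    subnets: Tuple[str, ...] = SUBNETS


def plan_cluster(os_name: str, node_count: int = 3) -> List[VirtualNode]:
    """Return the VMs a virtual deploy would create for the given OS."""
    if os_name not in SUPPORTED_OSES:
        raise ValueError(f"unsupported OS: {os_name!r}")
    return [
        VirtualNode(name=f"node{i:02d}", os=os_name)
        for i in range(1, node_count + 1)
    ]
```

Calling `plan_cluster("centos7")` yields three nodes, each listing all three subnets, while an OS outside the supported set raises a `ValueError`.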