Introduction

The R4 release will evaluate the conntrack performance of Open vSwitch (OVS).

A DPDK-based Open vSwitch (OVS-DPDK) is used as the virtual switch, and network traffic is virtualized with VXLAN encapsulation.

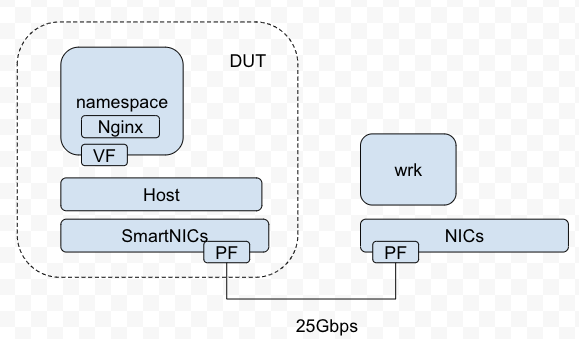

We will compare the performance of an optimized software-based OVS with that of OVS running on a Mellanox (MLNX) SmartNIC with hardware offload enabled.

Akraino Test Group Information

SmartNIC Test Architecture

We reuse the SmartNIC test architecture from the R3 release. The description below is the same as in the R3 release test documents.

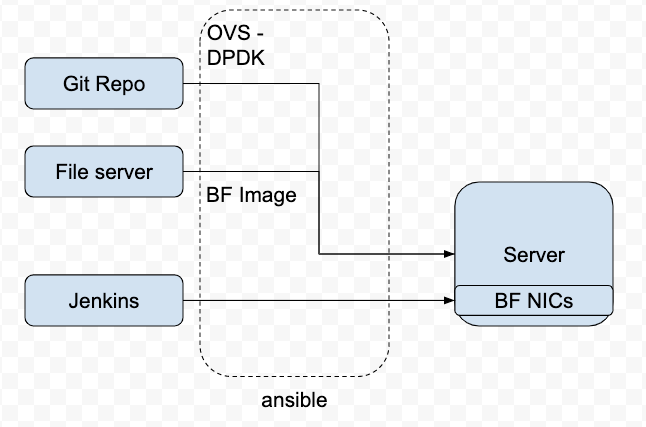

To deploy the SmartNIC test architecture, we use a private Jenkins instance and an Intel server equipped with a BlueField v2 SmartNIC.

We use Ansible to automatically set up the filesystem image and install OVS-DPDK on the SmartNICs.

The File Server is a simple Nginx-based web server that stores the BF drivers and the FS image.

The Git repo is our own repository, which hosts the OVS-DPDK and DPDK code.

Jenkins uses the Ansible plugin to download the BF drivers and FS image to the test server and sets up the environment according to the Ansible playbook.

| Image | Download link |
|---|---|
| BlueField-2.5.1.11213.tar.xz | https://www.mellanox.com/products/software/bluefield |
| core-image-full-dev-BlueField-2.5.1.11213.2.5.3.tar.xz | https://www.mellanox.com/products/software/bluefield |
| mft-4.14.0-105-x86_64-deb.tgz | |
| MLNX_OFED_LINUX-5.0-2.1.8.0-debian8.11-x86_64.tgz | |

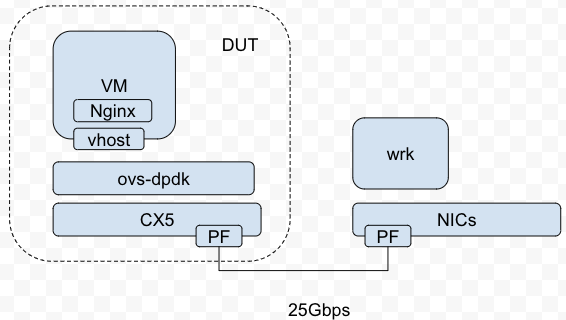

OVS-DPDK Test Architecture

OVS-DPDK on BlueField Test Architecture

The testbed setup is shown in the diagram above. DUT stands for Device Under Test.

Test Framework

The software used and the OVS-DPDK setup are shown below.

| Type | Description |
|---|---|
| SmartNICs | BlueField v2, 25 Gbps |
| DPDK | version 20.11 |
| vSwitch | OVS-DPDK 2.12 with VXLAN DECAP/ENCAP offload enabled. |

    Bridge br-int
        datapath_type: netdev
        Port br-int
            Interface br-int
                type: internal
        Port vhost-user-01
            Interface vhost-user-01
                type: dpdkvhostuserclient
                options: {vhost-server-path="/var/run/openvswitch/dpdkvhostclient01"}
        Port vxlan-vtp
            Interface vxlan-vtp
                type: vxlan
                options: {dst_port="4789", key=flow, local_ip="YOUR IP", remote_ip=flow, tos=inherit}
        Port br-ex-patch
            Interface br-ex-patch
                type: patch
                options: {peer=br-int-patch}
    Bridge br-ex
        datapath_type: netdev
        Port br-int-patch
            Interface br-int-patch
                type: patch
                options: {peer=br-ex-patch}
        Port eth2
            Interface eth2
                type: dpdk
                options: {dpdk-devargs="PCIE", n_rxq="4"}
        Port br-ex
            Interface br-ex
                type: internal
    ovs_version: "2.13.90"

    root:/home/ovs-dpdk# ovs-vsctl --format=csv --data=bare --no-headings --column=other_config list open_vswitch
    "dpdk-extra=-w [PCIE] -l 70 dpdk-init=true dpdk-socket-mem=2048,2048 emc-insert-inv-prob=0 n-handler-threads=1 n-revalidator-threads=4 neigh-notifier-enable=true pmd-cpu-mask=0xc00000000000c00000 pmd-pause=false pmd-rxq-assign=roundrobin smc-enable=true tx-flush-interval=0 userspace-tso-enable=true"
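The `pmd-cpu-mask` value is a hexadecimal bitmask in which bit N selects CPU core N for a PMD thread. As a minimal sketch (the helper name `mask_to_cores` is ours and is not part of OVS), the mask from the `other_config` output above can be decoded as follows:

```python
def mask_to_cores(mask: str) -> list[int]:
    """Return the CPU core IDs whose bits are set in a hex bitmask."""
    value = int(mask, 16)  # int() accepts the "0x" prefix when base is 16
    return [bit for bit in range(value.bit_length()) if (value >> bit) & 1]


# pmd-cpu-mask copied from the other_config output above
print(mask_to_cores("0xc00000000000c00000"))  # → [22, 23, 70, 71]
```

Under this reading, four cores are reserved for PMD threads, and core 70 is also the one named by `-l 70` in `dpdk-extra`.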

Traffic Generator

We will use DPDK pktgen as the Traffic Generator.

CT Ruleset

Br-ex rules

    table=0 priority=300 in_port=eth2,tcp,tp_dst=32768/0x8000 actions=output:br-int-patch
    table=0 priority=300 in_port=eth2,udp,tp_dst=32768/0x8000 actions=output:br-int-patch
    table=0 priority=200 in_port=eth2 actions=output:LOCAL
    table=0 priority=200 in_port=LOCAL actions=eth2
    table=0 priority=200 in_port=br-int-patch actions=eth2
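The `tp_dst=32768/0x8000` match in the two priority-300 rules is a value/mask pair: only the bits set in the mask are compared, so the rules match every destination port whose top bit is set, that is, ports 32768 through 65535. A small sketch of that comparison (the function name `masked_match` is illustrative only):

```python
def masked_match(port: int, value: int = 32768, mask: int = 0x8000) -> bool:
    """Return True when `port` equals `value` on every bit set in `mask`."""
    return (port & mask) == (value & mask)


# Enumerate all 16-bit ports and keep the ones the rule would match.
matching = [p for p in range(65536) if masked_match(p)]
print(matching[0], matching[-1], len(matching))  # → 32768 65535 32768
```

Traffic to lower ports falls through to the priority-200 rules and is delivered to `LOCAL` instead of `br-int-patch`.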

Br-int rules

Nginx configuration

    user nginx;
    worker_processes auto;
    error_log /var/log/nginx/error.log warn;
    pid /var/run/nginx.pid;

    events {
        worker_connections 2000000;
    }

    http {
        include /etc/nginx/mime.types;
        default_type application/octet-stream;
        log_format main '$remote_addr - $remote_user [$time_local] "$request" '
                        '$status $body_bytes_sent "$http_referer" '
                        '"$http_user_agent" "$http_x_forwarded_for"';
        access_log off;
        sendfile on;
        keepalive_timeout 65;
        include /etc/nginx/conf.d/*.conf;
    }

    server {
        listen 80 backlog=8192 reuseport;
        server_name localhost;
        location / {
            return 200 "hello";
        }
        error_page 500 502 503 504 /50x.html;
        location = /50x.html {
            root /usr/share/nginx/html;
        }
    }

WRK configuration

Connection setup rate:

    ./wrk -t 32 -c 640 -d30s http://10.0.1.127/ -H "Connection: Close"

Performance Results

For the optimized software-based OVS, we tested on a 48C24q VM running NGINX as the server, with CT enabled on OVS-DPDK. Each result is given as upstream version / our version.

We also ran 50 2c2q VMs as clients, generating HTTP GET traffic to evaluate the performance.

| pps (not closing connection after each query) | pps (closing connection after each query) | Connection setup rate (closing connection after each query) | QPS (not closing connection after each query) |
|---|---|---|---|
| 1.66Mpps/2Mpps | 1.66Mpps/2Mpps | 140Kcps/200Kcps | 889Kqps/1.14Mqps |
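To make the upstream / our-version pairs easier to compare, the relative gain can be computed directly from the table. The sketch below uses only the figures reported above; the dictionary keys and the helper name `gain_percent` are our own labels, and the two pps columns are collapsed into one entry because they report the same values.

```python
# (upstream, ours) pairs taken from the results table
results = {
    "pps": (1.66e6, 2.0e6),
    "connection setup rate (cps)": (140e3, 200e3),
    "qps": (889e3, 1.14e6),
}


def gain_percent(upstream: float, ours: float) -> float:
    """Relative improvement of `ours` over `upstream`, in percent."""
    return (ours / upstream - 1.0) * 100.0


for name, (upstream, ours) in results.items():
    print(f"{name}: +{gain_percent(upstream, ours):.1f}%")
# → pps: +20.5%
# → connection setup rate (cps): +42.9%
# → qps: +28.2%
```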

Test API description

The purpose of the test is to evaluate the performance of SmartNIC offloading, so we currently do not provide any test APIs.

Test Dashboards

Functional Tests

Open vSwitch ships its own functional test suite, documented at http://docs.openvswitch.org/en/latest/topics/testing/

We ran the basic test suite as described there, using:

    make check TESTSUITEFLAGS=-j8

The results are shown below.

| Total Tests | Tests Executed | Pass | Fail | In Progress |
|---|---|---|---|---|
| 2225 | 2200 | 2198 | 2 | 0 |

The two failed cases involve sFlow sampling; we are investigating the root cause.

25 cases were skipped because of the configuration.
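As a quick consistency check on the reported counts, the following sketch (variable names are ours) confirms that executed plus skipped cases add up to the total and derives the pass rate among executed cases:

```python
# Counts taken from the functional test results above
total, executed, passed, failed, skipped = 2225, 2200, 2198, 2, 25

# The reported figures should be internally consistent.
assert executed + skipped == total
assert passed + failed == executed

pass_rate = passed / executed * 100
print(f"pass rate: {pass_rate:.2f}%")  # → pass rate: 99.91%
```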

Additional Testing

n/a

Bottlenecks/Errata

n/a