This is a draft and a work in progress.

Introduction

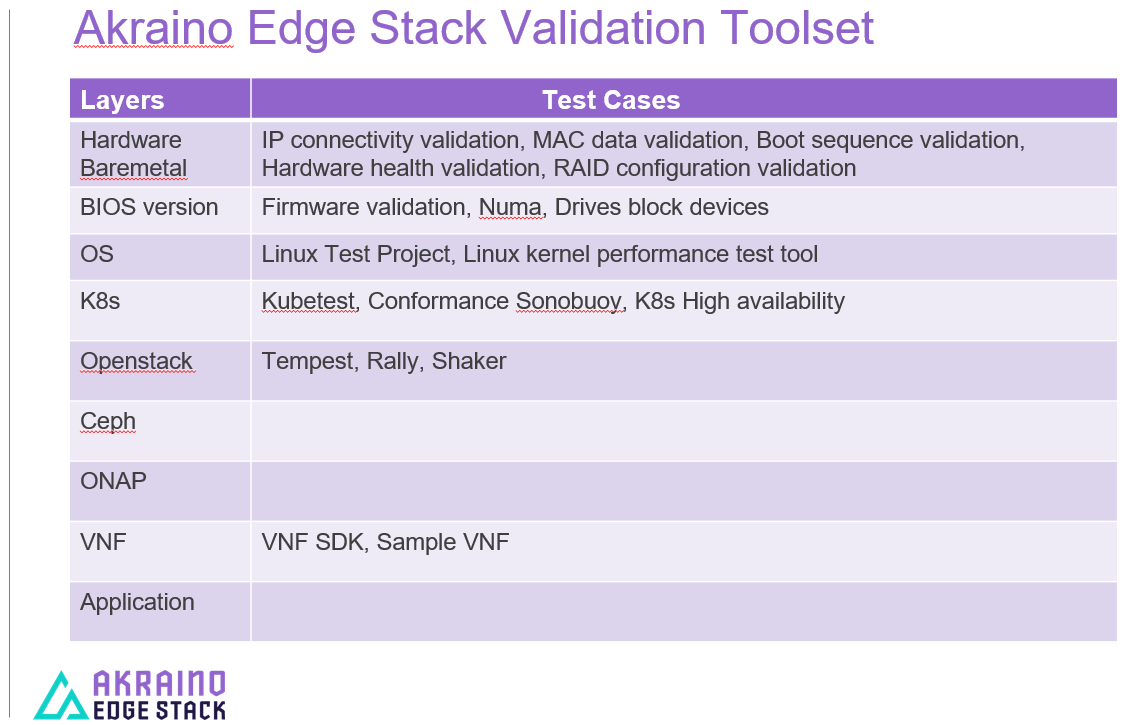

BluVal is a diagnostic toolset framework for validating the different layers of the Akraino infrastructure; it is developed and used in the Akraino Edge Stack. BluVal integrates different test cases, and its development follows a declarative approach that is version-controlled in LF Gerrit. The test cases are integrated into the CI/CD toolchain, where peer Jenkins jobs can run them and report the results to the LF repository (Nexus). The test cases cover all blueprint layers in the cluster.

Requirements

- Support Kubernetes

- Integrate with LF Gerrit

- Run well in an Akraino validation lab

- Store test results in a database

Technical guidelines

- Support Python 3

- Be fully covered by unit tests

- Provide Docker containers and manifests for both supported architectures: amd64 and arm64

- Publish the documentation online

Test case bundles for the different layers

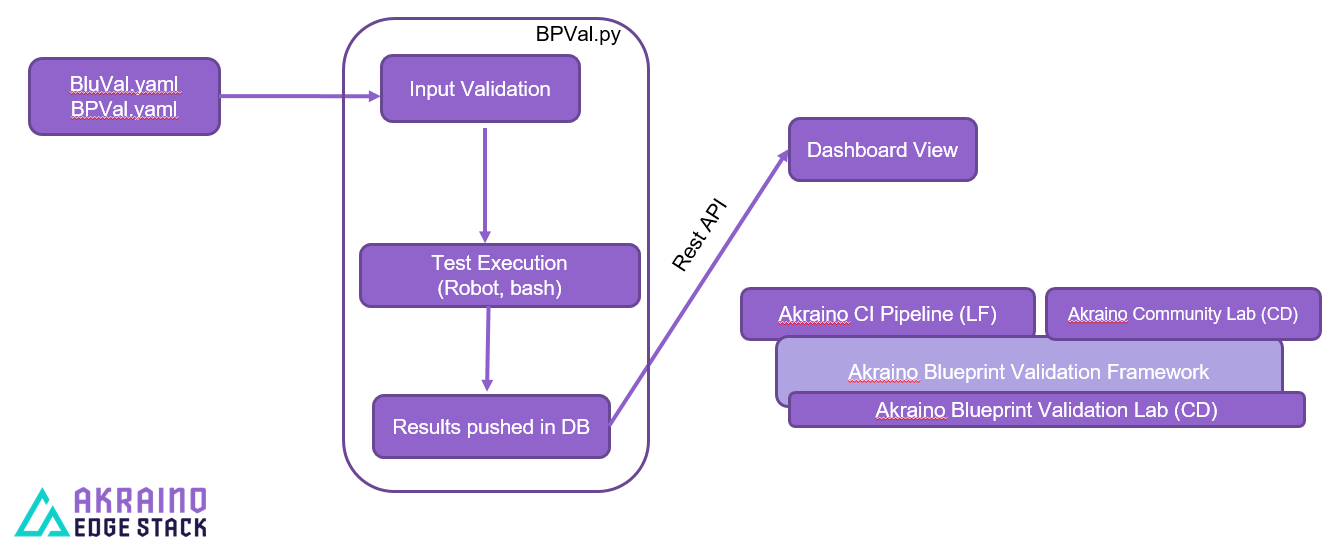

BluVal implementation overview

bluval.py runs the BluVal toolset against a blueprint (for example, REC) in the Akraino validation framework. It performs the following actions:

1. Input validation

2. Test execution

3. Results pushed to the database

4. Dashboard view
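The four actions above can be pictured as a simple pipeline. The sketch below is a hypothetical skeleton, not the actual bluval.py code: the names Run, validate_input and run_pipeline, and the set of accepted layer names, are assumptions made for illustration. The test execution, database push and dashboard steps are passed in as callables so that the flow can be exercised without a lab.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical layer names; the real list comes from the blueprint definition.
KNOWN_LAYERS = {"hardware", "os", "k8s"}


@dataclass
class Run:
    blueprint: str
    layers: List[str]
    results: Dict[str, str] = field(default_factory=dict)


def validate_input(run: Run) -> Run:
    """Step 1: reject an empty blueprint name and unknown layers."""
    if not run.blueprint:
        raise ValueError("blueprint name is required")
    unknown = [layer for layer in run.layers if layer not in KNOWN_LAYERS]
    if unknown:
        raise ValueError(f"unknown layer(s): {unknown}")
    return run


def run_pipeline(run: Run,
                 execute: Callable[[str, str], str],
                 push: Callable[[Run], None],
                 show: Callable[[Run], None]) -> Run:
    """Run the four actions in order: validate, execute, push, display."""
    validate_input(run)
    for layer in run.layers:
        # Step 2: execute the test cases of one layer and record its status.
        run.results[layer] = execute(run.blueprint, layer)
    push(run)  # Step 3: store the results in the database
    show(run)  # Step 4: make the results visible on the dashboard
    return run
```

For example, `run_pipeline(Run("dummy", ["hardware"]), execute=lambda bp, layer: "PASS", push=print, show=lambda r: None)` validates the input, records a PASS status for the hardware layer and hands the run to the storage step.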

BluVal installation and execution

The bluval.py toolset project is hosted in the Akraino Gerrit (Linux Foundation credentials are required). It should be cloned into /opt/akraino on the designated Regional Controller or test host.

The following procedure clones the repository with the 'git clone' command, sets up a Python virtual environment and runs a first validation.

The same procedure also validates the BluVal engine setup: with proper connectivity, some test cases should be reported in PASS status.

ns156u@aknode82:~$ git clone https://gerrit.akraino.org/r/validation.git

ns156u@aknode82:~$ cd validation

ns156u@aknode82:~/validation$ python3 -m venv .py35 # First time only

ns156u@aknode82:~/validation$ source .py35/bin/activate

(.py35) ns156u@aknode82:~/validation$ pip install -r bluval/requirements.txt # First time only

(.py35) ns156u@aknode82:~/validation$ python bluval/bluval.py -l hardware dummy # runs the hardware test cases of the dummy blueprint

(.py35) ns156u@aknode82:~/validation$ deactivate
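When BluVal has to be started from another Python script (for example, a lab automation wrapper), the shell invocation above can be reproduced with subprocess. This is a minimal sketch: the helper names bluval_command and run_bluval are hypothetical, only the `-l <layer> <blueprint>` form shown above is used, and the script is assumed to run with the virtual environment's interpreter so that bluval's requirements are importable.

```python
import subprocess
import sys
from typing import List


def bluval_command(blueprint: str, layer: str) -> List[str]:
    """Argument list equivalent to `python bluval/bluval.py -l <layer> <blueprint>`."""
    # sys.executable is the interpreter running this script; start it from the
    # activated virtual environment so that bluval's requirements are available.
    return [sys.executable, "bluval/bluval.py", "-l", layer, blueprint]


def run_bluval(blueprint: str, layer: str, repo_dir: str = "validation") -> int:
    """Run bluval.py from the root of the cloned repository and return its exit code."""
    completed = subprocess.run(bluval_command(blueprint, layer), cwd=repo_dir)
    return completed.returncode
```

For example, `run_bluval("dummy", "hardware")` runs the same test cases as the command above. The sketch only returns the process exit code; how bluval.py reports individual test results is not covered here.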

Akraino Blueprint Validation UI

This project contains the source code of the Akraino Blueprint Validation UI.

The UI consists of a front-end part and a back-end part.

The front-end part is based on HTML, CSS, and AngularJS technologies.

The back-end part is based on Spring MVC and Apache Tomcat technologies.

The following instructions describe how to provide the prerequisites, compile the source code, and deploy the UI.

Prerequisites

- Database

A PostgreSQL database instance with the appropriate relations is needed so that the back-end system can store and retrieve data.

Set the PostgreSQL root password in the POSTGRES_PASSWORD variable and execute the following commands to build and deploy the database container:

$ cd validation/docker/postgresql

$ make build

$ ./deploy.sh POSTGRES_PASSWORD=password

Currently, the following data is initialized in the aforementioned database (mainly for testing purposes):

Timeslots:

id: 1, start date and time: now() (i.e. the time of the PostgreSQL container deployment), duration: 10 (sec), lab: 0 (i.e. AT&T)

id: 2, start date and time: now() (i.e. the time of the PostgreSQL container deployment), duration: 1000 (sec), lab: 0 (i.e. AT&T)

id: 3, start date and time: now() (i.e. the time of the PostgreSQL container deployment), duration: 10000 (sec), lab: 0 (i.e. AT&T)

id: 4, start date and time: now() (i.e. the time of the PostgreSQL container deployment), duration: 100000 (sec), lab: 0 (i.e. AT&T)

id: 5, start date and time: now() (i.e. the time of the PostgreSQL container deployment), duration: 100000 (sec), lab: 0 (i.e. AT&T)
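Each timeslot starts at its start date and time and lasts for its duration in seconds, so it ends at start + duration; for example, timeslot 3 (10000 s) ends 2 h 46 min 40 s after the container deployment. The sketch below makes this concrete; the class and field names are illustrative and do not mirror the actual database columns. Note that, as listed under Limitations, the back-end currently does not take timeslots into account.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Timeslot:
    """One timeslot row (illustrative field names, not the real column names)."""
    timeslot_id: int
    start: datetime
    duration_sec: int
    lab: int  # 0 = AT&T in the initial data

    @property
    def end(self) -> datetime:
        """End of the slot: start time plus duration."""
        return self.start + timedelta(seconds=self.duration_sec)

    def is_open(self, at: datetime) -> bool:
        """True if `at` falls inside the half-open interval [start, end)."""
        return self.start <= at < self.end
```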

Blueprints:

id: 1, name: 'dummy'

id: 2, name: 'Unicycle'

id: 3, name: 'REC'

Blueprint Instances:

id: 1, blueprint_id: 1 (i.e. dummy), version: "0.0.2-SNAPSHOT", layer: 0 (i.e. Hardware), layer_description: "Dell Hardware", timeslot id: 1

id: 2, blueprint_id: 2 (i.e. Unicycle), version: "0.0.1-SNAPSHOT", layer: 0 (i.e. Hardware), layer_description: "Dell Hardware", timeslot id: 2

id: 3, blueprint_id: 2 (i.e. Unicycle), version: "0.0.7-SNAPSHOT", layer: 1 (i.e. OS), layer_description: "CentOS Linux 7 (Core)", timeslot id: 3

id: 4, blueprint_id: 3 (i.e. REC), version: "0.0.4-SNAPSHOT", layer: 2 (i.e. K8s), layer_description: "K8s with High Availability Ingress controller", timeslot id: 4

id: 5, blueprint_id: 3 (i.e. REC), version: "0.0.8-SNAPSHOT", layer: 2 (i.e. K8s), layer_description: "K8s with High Availability Ingress controller", timeslot id: 5
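Blueprint instances refer to blueprints and layers through numeric codes. A minimal sketch of how these codes could be resolved into a readable label, using only the codes listed in the initial data above, is shown below; the class and method names are hypothetical, and the UI back-end (a Spring application) is not claimed to work this way.

```python
from dataclasses import dataclass

# Codes taken from the initial data above.
LAYERS = {0: "Hardware", 1: "OS", 2: "K8s"}
BLUEPRINTS = {1: "dummy", 2: "Unicycle", 3: "REC"}


@dataclass
class BlueprintInstance:
    instance_id: int
    blueprint_id: int
    version: str
    layer: int
    layer_description: str
    timeslot_id: int

    def describe(self) -> str:
        """Resolve the numeric codes, falling back to the raw code if unknown."""
        name = BLUEPRINTS.get(self.blueprint_id, f"blueprint {self.blueprint_id}")
        layer = LAYERS.get(self.layer, f"layer {self.layer}")
        return f"{name} {self.version} / {layer} ({self.layer_description})"
```

For instance 4 above, `describe()` returns `REC 0.0.4-SNAPSHOT / K8s (K8s with High Availability Ingress controller)`.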

Based on this data, the UI enables the user to select an appropriate blueprint instance for validation.

Currently, the UI cannot obtain or create this data dynamically; it must be configured manually in the PostgreSQL database (see the Limitations subsection).

For this reason, if a user wants to define a new timeslot with the following data:

start date and time: now, duration: 123 (sec), lab: 2 (i.e. Community)

the following file should be created (the id must not collide with an existing timeslot; ids 1 to 5 are already initialized):

name: dbscript

content:

insert into akraino.timeslot values(6, now(), 123, 2);

Then, the following command should be executed:

$ psql -h <IP of the PostgreSQL container> -p 6432 -U admin -f ./dbscript

Furthermore, if a user wants to define a new blueprint, namely "newBlueprint", and a new instance of this blueprint with the following data:

version: "0.0.1-SNAPSHOT", layer: 2 (i.e. K8s), layer_description: "K8s with High Availability Ingress controller", timeslot id: 6 (i.e. the new timeslot)

the following file should be created:

name: dbscript

content:

insert into akraino.blueprint (blueprint_id, blueprint_name) values(4, 'newBlueprint');

insert into akraino.blueprint_instance (blueprint_instance_id, blueprint_id, version, layer, layer_description, timeslot_id) values(6, 4, '0.0.1-SNAPSHOT', 2, 'K8s with High Availability Ingress controller', 6);

Then, the following command should be executed:

$ psql -h <IP of the PostgreSQL container> -p 6432 -U admin -f ./dbscript

The UI will automatically retrieve this new data and display it to the user.
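Writing these dbscript files by hand is error-prone, especially the quoting of string values. The sketch below generates the same statements as the examples above; the helper names are hypothetical, the timeslot insert keeps the positional column order used in the example (id, start, duration, lab), and the caller is responsible for choosing ids that are not already in use.

```python
from typing import Iterable


def sql_literal(value) -> str:
    """Render a value as a SQL literal, doubling single quotes inside strings."""
    if isinstance(value, str):
        return "'" + value.replace("'", "''") + "'"
    return str(value)


def timeslot_insert(timeslot_id: int, duration_sec: int, lab: int) -> str:
    # Positional values as in the example; the start time is set by now() in the database.
    return f"insert into akraino.timeslot values({timeslot_id}, now(), {duration_sec}, {lab});"


def blueprint_insert(blueprint_id: int, name: str) -> str:
    return ("insert into akraino.blueprint (blueprint_id, blueprint_name) "
            f"values({blueprint_id}, {sql_literal(name)});")


def instance_insert(instance_id: int, blueprint_id: int, version: str, layer: int,
                    layer_description: str, timeslot_id: int) -> str:
    values = ", ".join(sql_literal(v) for v in (
        instance_id, blueprint_id, version, layer, layer_description, timeslot_id))
    return ("insert into akraino.blueprint_instance (blueprint_instance_id, "
            "blueprint_id, version, layer, layer_description, timeslot_id) "
            f"values({values});")


def write_dbscript(path: str, statements: Iterable[str]) -> None:
    """Write one statement per line, ready to be passed to psql -f."""
    with open(path, "w") as f:
        f.write("\n".join(statements) + "\n")
```

For example, `write_dbscript("dbscript", [blueprint_insert(4, "newBlueprint"), instance_insert(6, 4, "0.0.1-SNAPSHOT", 2, "K8s with High Availability Ingress controller", 6)])` produces a file that can then be loaded with the psql command shown above.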

- Jenkins Configuration

The Blueprint validation UI will trigger job executions in a Jenkins instance.

This instance must have the following option enabled: "Manage Jenkins -> Configure Global Security -> Prevent Cross Site Request Forgery exploits".

Also, the corresponding Jenkins job must currently accept the following input parameters: "SUBMISSION_ID", "BLUEPRINT", "LAYER" and "UI_IP".

The "SUBMISSION_ID" and "UI_IP" parameters are created and provided by the back-end part of the UI. "UI_IP" is the IP address of the UI host machine; the Jenkins instance needs it in order to send back the job completion notification.

The "BLUEPRINT" and "LAYER" parameters are configured by the UI user.
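To make the parameter passing concrete, the sketch below triggers such a job through the Jenkins remote access API (`buildWithParameters`). It is an illustration in Python, not the UI back-end's actual code. Because CSRF protection is enabled, it first requests a crumb from `crumbIssuer/api/json` and sends it back as a header, keeping the session cookie between the two requests. It bypasses the system proxy, since the Jenkins instance must be reachable from the UI host without it. The function names and the example parameter values are assumptions.

```python
import base64
import http.cookiejar
import json
import urllib.parse
import urllib.request


def build_trigger_url(jenkins_url: str, job_name: str, submission_id: str,
                      blueprint: str, layer: str, ui_ip: str) -> str:
    """URL of the job's buildWithParameters endpoint with the four job parameters."""
    params = urllib.parse.urlencode({
        "SUBMISSION_ID": submission_id,
        "BLUEPRINT": blueprint,
        "LAYER": layer,
        "UI_IP": ui_ip,
    })
    job = urllib.parse.quote(job_name, safe="")
    return f"{jenkins_url.rstrip('/')}/job/{job}/buildWithParameters?{params}"


def trigger_job(trigger_url: str, jenkins_url: str, user: str, password: str) -> int:
    """POST the trigger request with basic auth and a CSRF crumb; return the HTTP status."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    auth = {"Authorization": f"Basic {token}"}
    # The cookie jar keeps the crumb and the build request in the same Jenkins session;
    # the empty ProxyHandler disables any system proxy.
    opener = urllib.request.build_opener(
        urllib.request.HTTPCookieProcessor(http.cookiejar.CookieJar()),
        urllib.request.ProxyHandler({}))
    crumb_url = f"{jenkins_url.rstrip('/')}/crumbIssuer/api/json"
    with opener.open(urllib.request.Request(crumb_url, headers=auth)) as resp:
        crumb = json.load(resp)
    headers = {**auth, crumb["crumbRequestField"]: crumb["crumb"]}
    request = urllib.request.Request(trigger_url, data=b"", headers=headers, method="POST")
    with opener.open(request) as resp:
        return resp.status
```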

Moreover, as the Jenkins notification plugin (https://wiki.jenkins.io/display/JENKINS/Notification+Plugin) seems to ignore proxy settings, the corresponding Jenkins job must be configured to execute the following command at the end (in its Post-build Actions):

$ curl -v -H "Content-Type: application/json" -X POST --insecure --silent http://$UI_IP:8080/AECBlueprintValidationUI/api/jenkinsJobNotification/ --data '{"submissionId": "'"$SUBMISSION_ID"'" , "name":"'"$JOB_NAME"'", "buildNumber":"'"$BUILD_NUMBER"'"}'

Finally, the Jenkins instance must be accessible from the UI host without using the system proxy.
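For reference, the notification sent by the curl command can also be built in Python, for example in a job whose post-build step runs a Python script. This is a sketch with hypothetical function names: it sends the same JSON body to the same endpoint, and, like curl, `urlopen` honours the `http_proxy`/`no_proxy` environment variables.

```python
import json
import urllib.request


def notification_request(ui_ip: str, submission_id: str, job_name: str,
                         build_number: str) -> urllib.request.Request:
    """Build the same POST request as the curl post-build command."""
    url = f"http://{ui_ip}:8080/AECBlueprintValidationUI/api/jenkinsJobNotification/"
    body = json.dumps({
        "submissionId": submission_id,
        "name": job_name,
        "buildNumber": build_number,
    }).encode("utf-8")
    return urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}, method="POST")


def send_notification(request: urllib.request.Request, timeout: float = 30) -> int:
    """Send the notification and return the HTTP status code."""
    # urlopen picks up proxy settings from the environment, as curl does.
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return resp.status
```

Inside a Jenkins job, the arguments would come from the environment, e.g. `send_notification(notification_request(os.environ["UI_IP"], os.environ["SUBMISSION_ID"], os.environ["JOB_NAME"], os.environ["BUILD_NUMBER"]))`, where `JOB_NAME` and `BUILD_NUMBER` are standard Jenkins variables.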

- Nexus server

All the blueprint validation results are stored in the Nexus server.

These results must be available at the following URL:

https://nexus.akraino.org/content/sites/logs/<lab>-blu-val/job/validation/<Jenkins job number>/results

where <lab> is the name of the lab (for example 'att') and <Jenkins job number> is the number of the Jenkins job that produced the results.
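The URL pattern above can be captured in a small helper. This sketch uses the base URL from the nexus_results_url deployment example in this document; the function names are hypothetical, and since the storage format of the results is still TBD, fetch_results returns the raw bytes without parsing them.

```python
import urllib.request
from urllib.parse import quote

# Base URL taken from the nexus_results_url deployment example.
NEXUS_LOGS = "https://nexus.akraino.org/content/sites/logs"


def results_url(lab: str, job_number: int, base: str = NEXUS_LOGS) -> str:
    """Results location of one validation run, following the documented pattern."""
    return f"{base.rstrip('/')}/{quote(lab)}-blu-val/job/validation/{job_number}/results"


def fetch_results(lab: str, job_number: int, timeout: float = 30) -> bytes:
    """Download the raw results of one run."""
    # urlopen uses the system proxy settings from the environment, if any.
    with urllib.request.urlopen(results_url(lab, job_number), timeout=timeout) as resp:
        return resp.read()
```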

Moreover, the Nexus server must be accessible from the UI (with or without using system proxy).

Finally, the results should be stored using the following format:

TBD

Compiling

$ cd validation/ui

$ mvn clean install

Deploying

Deployment requires the following data:

- The PostgreSQL root user password

- The Jenkins URL

- The Jenkins username and password

- The name of the Jenkins job

- The URL of the Nexus results

- The host system's proxy IP and port

These values are passed to the deploy script as input parameters. Execute the following commands to build and deploy the UI container:

$ cd validation/docker/ui

$ make build

$ ./deploy.sh postgres_db_user_pwd=password jenkins_url=http://192.168.2.2:8080 jenkins_user_name=name jenkins_user_pwd=jenkins_pwd jenkins_job_name=job1 nexus_results_url=https://nexus.akraino.org/content/sites/logs proxy_ip=172.28.40.9 proxy_port=3128

If no proxy exists, omit the proxy_ip and proxy_port parameters.
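When the deployment is scripted, the key=value arguments of deploy.sh can be assembled programmatically so that the proxy parameters are added only when a proxy exists. In this sketch the helper name is hypothetical, and treating the six non-proxy parameters as mandatory is an assumption based on the list of required data above.

```python
from typing import Dict, List, Optional

# Parameter names as used in the deploy.sh example.
REQUIRED = ("postgres_db_user_pwd", "jenkins_url", "jenkins_user_name",
            "jenkins_user_pwd", "jenkins_job_name", "nexus_results_url")


def deploy_args(settings: Dict[str, str], proxy_ip: Optional[str] = None,
                proxy_port: Optional[int] = None) -> List[str]:
    """Build the ./deploy.sh command line; proxy parameters only when both are set."""
    missing = [key for key in REQUIRED if key not in settings]
    if missing:
        raise ValueError(f"missing deploy parameters: {missing}")
    if (proxy_ip is None) != (proxy_port is None):
        raise ValueError("proxy_ip and proxy_port must be given together")
    args = ["./deploy.sh"] + [f"{key}={settings[key]}" for key in REQUIRED]
    if proxy_ip is not None:
        args += [f"proxy_ip={proxy_ip}", f"proxy_port={proxy_port}"]
    return args
```

The resulting list can be run with `subprocess.run(args, cwd="validation/docker/ui", check=True)` after `make build`.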

The UI should then be available at the following URL:

http://localhost:8080/AECBlueprintValidationUI

Limitations

- The UI is not connected to any LDAP server. Currently, any user can log in.

- The UI and PostgreSQL containers must be deployed on the same host.

- The back-end part of the UI ignores the configured timeslot and triggers the corresponding Jenkins job immediately.

- Results data manipulation (filtering, graphical representation, indexing in time order, etc.) is not supported.

- Only the following labs are supported: AT&T, Ericsson and Community.

- Only the following tabs are functional: 'Committed Submissions', 'Blueprint Validation Results -> Get by submission id'.

- The UI configures only the "BLUEPRINT" and "LAYER" input parameters of the Jenkins job.

- The available blueprints and timeslots must be manually configured in the PostgreSQL database.

- The Jenkins instance must be accessible from the UI host without using system proxy.