This document covers both Integrated Edge Cloud (IEC) Type 1 and Type 2.

Integrated Edge Cloud (IEC) is an Akraino-approved blueprint family and part of the Akraino Edge Stack. It aims to develop a fully integrated edge infrastructure solution and is focused entirely on edge computing. This open source software stack provides critical infrastructure to enable high performance, reduce latency, improve availability, lower operational overhead, provide scalability, address security needs, and improve fault management. The IEC project will address multiple edge use cases and industries, not just the telco industry. IEC intends to develop solutions for, and support, carrier, provider, and IoT networks.

Use Case

The first use case of IEC is SDN Enabled Broadband Access (SEBA) on Arm. Under the provisional plan, more use cases will be added in the future, such as REC, Edge AI, vCDN, and Autonomous Vehicles.

Where on the Edge

Business Drivers

The Integrated Edge Cloud (IEC) will enable new functionalities and business models on the network edge. The benefits of running applications on the network edge are:

- Better latencies for end users

- Less load on the network, since more data can be processed locally

- Full use of the computation power of the edge devices

Overall Architecture

Currently, the chosen operating system (OS) is Ubuntu 16.04 and/or 18.04. The infrastructure orchestration of IEC is based on Kubernetes, a production-grade container orchestration system with a rich ecosystem. The container network interface (CNI) solution currently chosen for Kubernetes is Project Calico, a high-performance, scalable, policy-enabled, and widely used container networking solution that is fairly easy to install and supports arm64. In the future, Contiv/VPP or OVN-Kubernetes would also be candidates for Kubernetes networking.

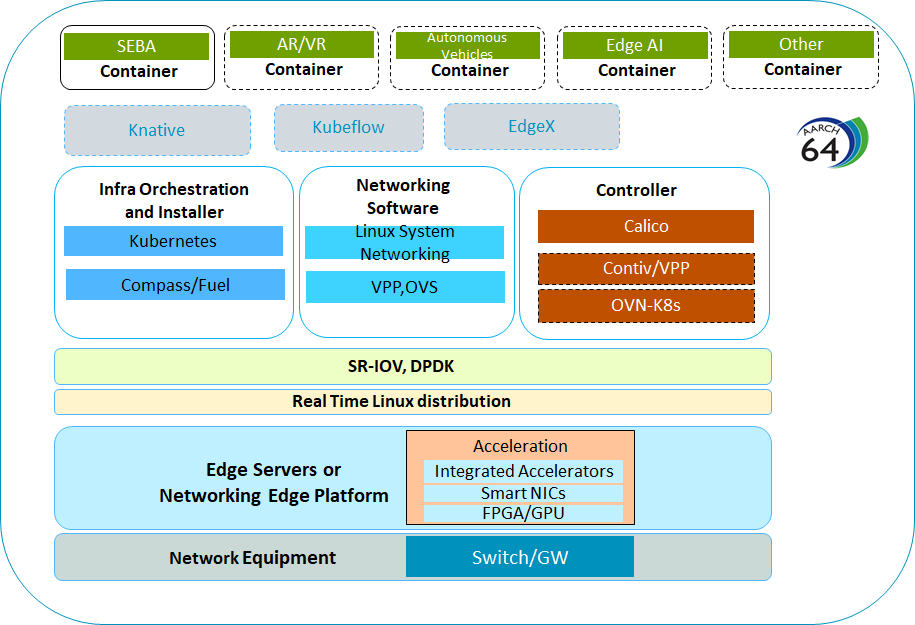

The high-level design of the IEC architecture uses a containerized networking environment:

- The edge applications run as containers, with a container orchestration engine and high-performance networking support.

- The integrated edge cloud platform provides a management interface and a programming interface to deploy and manage edge applications quickly and conveniently.

- The platform supports applications such as IoT gateway, SD-WAN, and edge AI.

Under the current architecture, two kinds of use cases are supported:

- Telco/enterprise edge cloud: for example, MEC or a branch office data center.

- Telco/enterprise remote edge locations: edge platforms with limited resources, for example, SD-WAN or IoT gateway.

The IEC reference stack architecture is shown in the following figure:

Fig 1 IEC Reference Stack

Currently, IEC provides the following functions under its reference architecture:

- The hardware supported by IEC consists mainly of arm64-based edge servers, such as Huawei Taishan, Marvell ThunderX, and Ampere Arm64 servers. At the far edge, the supported end devices are the Marvell MACCHIATObin Double Shot or other Arm-based boxes and devices. The desired network connections are above 10 Gbit/s, which should satisfy the requirements of most current IEC applications.

- The installation scripts deploy a Kubernetes cluster, the Calico CNI, Helm/Tiller, and related Kubernetes applications/services for verification, on 1 master and 2 worker nodes. The scripts can be run from the jumpserver, or the installation can be done manually on the servers themselves. The installation methods are introduced in IEC Blueprints Installation Overview.

- Currently IEC uses Project Calico as the main container networking solution. It provides high performance and rich network policy, is widely supported on Linux systems, and is easy to install. In the future, Contiv/VPP and OVN-Kubernetes can be used as high-performance substitutes, since both solutions support DPDK-enabled high-speed interface access.

- IEC supports Akraino CI/CD requests: IEC daily jobs (scheduled to run recurrently) deploy IEC using one of the agreed installers, run the test suites, and collect and publish the logs.

- IEC Type 1 & 2 is building a second CI/CD validation lab and pushing logs to the Akraino Nexus server.

- Currently IEC supports SDN Enabled Broadband Access (SEBA) as a use case. The installation scripts for SEBA on Arm and the related source repositories are developed and/or integrated in the IEC source code repository. SEBA components have been ported to arm64 servers with Helm chart installation support.
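As a rough illustration of the three-node layout mentioned in the list above (one master plus two further nodes), the following Python sketch counts node roles in the JSON printed by `kubectl get nodes -o json`. It is not part of the IEC installation scripts. The function name `count_roles` and the sample node names are hypothetical, and the sketch assumes that master nodes carry the `node-role.kubernetes.io/master` label, as kubeadm-style clusters of the Kubernetes 1.13 era do.

```python
import json

# Assumption: master nodes are marked with this kubeadm-style label.
MASTER_LABEL = "node-role.kubernetes.io/master"


def count_roles(nodes_json: str) -> dict:
    """Count master and non-master nodes in `kubectl get nodes -o json` output."""
    items = json.loads(nodes_json).get("items", [])
    masters = sum(
        1 for node in items
        if MASTER_LABEL in node["metadata"].get("labels", {})
    )
    return {"master": masters, "worker": len(items) - masters}


# Hypothetical sample resembling the default three-node layout.
SAMPLE = json.dumps({"items": [
    {"metadata": {"name": "iec-master", "labels": {MASTER_LABEL: ""}}},
    {"metadata": {"name": "iec-worker-1", "labels": {}}},
    {"metadata": {"name": "iec-worker-2", "labels": {}}},
]})

if __name__ == "__main__":
    print(count_roles(SAMPLE))
```

In practice the input would come from running `kubectl get nodes -o json` on the master after the deployment finishes; the sample string only stands in for that output.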

Main Progress after Release 1

| Item | Name | Category | Description |
|---|---|---|---|
| 1 | Installation support for a specific Kubernetes version | Function enhancement | Supports the stable version 1.13.x (default) and a user-specified version, such as 1.16.x |
| 2 | CentOS/Red Hat OS support | Function enhancement | The IEC installation now supports CentOS and RHEL in addition to Ubuntu |
| 3 | Multiple CNIs (Flannel, Contiv/VPP) support | Function enhancement | Added support for Flannel and Contiv/VPP for IEC Type 1 & 2. Added installation options for deploying the IEC cluster. For IEC Type 1, added support for the Marvell PP2 interface in Contiv/VPP |
| 4 | VM deployment tools integration | Function enhancement | Supports deploying VMs and installing the IEC cluster into those VMs automatically |
| 5 | Initial RESTful API server framework | Function enhancement | Initial commit adding a RESTful API service (HTTP server) framework to IEC |
| 6 | IEC Type 1 information about the MACCHIATObin board | Function enhancement | The Marvell MACCHIATObin is a family of cost-effective, high-performance networking community boards targeting 64-bit Arm high-end networking and storage applications. Adds the kernel config file, system boot parameters, and Linux kernel setup scripts on MACCHIATObin for edge infrastructure |
| 7 | Conformance test | Test | Adds and enables Sonobuoy on the IEC foundation to diagnose the state of a Kubernetes cluster, mainly on the arm64 platform |
| 8 | Update seba-charts submodule; force apt-get to use IPv4 | Bug Fix | Switches the submodule to the latest commit containing the fix for freeradius. To avoid docker-ce installation failures, we specify the apt to |
| 9 | Use multi-arch etcd yaml to support Calico | Function enhancement | Uses a single etcd yaml file to support Calico installation |
| 10 | Add PONSim installation scripts | Function enhancement | Adds PONSim installation scripts for the SEBA use case |

Platform Architecture

The IEC project is committed to openness. It aims to develop a fully integrated edge infrastructure solution and provides a reference implementation of hardware and software to help users build their projects.

Below is a list of hardware that the IEC community has tested over time in lab trials, mainly on Arm machines. For the hardware requirements of x86 servers, refer to the following link:

https://guide.opencord.org/cord-6.1/prereqs/hardware.html#bom-examples

Bill of Materials (BOM) / Hardware requirements

Generic Hardware Guidelines

Compute Machines: Observation of actual memory utilization on a ThunderX2 shows that deploying IEC on a single node requires at least 15G of memory and 62G of disk, which is very demanding for embedded devices. For more realistic deployments, we suggest using at least three machines (preferably all identical). The characteristics of these machines depend on several factors. At the very minimum, each machine should have a 4-core CPU, 32GB of RAM, and 60G of disk capacity.

Network: The machines have to download a large quantity of software from different sources on the Internet, so they need to be able to reach the Internet. Whatever server is used, it should have at least a 1G network interface for management. A 40G NIC is required if performance testing is needed.

Optics and Cabling: Some hardware may be picky about the optics. Both optics and cable models tested by the community are provided below.
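The per-machine minimums above can be turned into a simple pre-flight check. The following Python sketch is only an illustration, not an IEC tool: the `Machine` class and `shortfalls` function are hypothetical names, and it assumes that "G" in the guidelines means gigabytes for memory and disk and Gbit/s for the management interface.

```python
from dataclasses import dataclass


@dataclass
class Machine:
    cores: int
    ram_gb: float
    disk_gb: float
    mgmt_nic_gbps: float


# Minimums quoted in the guidelines above (units are an assumption, see lead-in).
MINIMUM = Machine(cores=4, ram_gb=32, disk_gb=60, mgmt_nic_gbps=1)


def shortfalls(machine: Machine, minimum: Machine = MINIMUM) -> list:
    """Return the names of the fields in which `machine` is below `minimum`."""
    return [
        name
        for name in ("cores", "ram_gb", "disk_gb", "mgmt_nic_gbps")
        if getattr(machine, name) < getattr(minimum, name)
    ]


if __name__ == "__main__":
    # Hypothetical machine: enough CPU, disk and network, but too little RAM.
    candidate = Machine(cores=8, ram_gb=16, disk_gb=120, mgmt_nic_gbps=10)
    print(shortfalls(candidate))
```

An empty list means the machine meets every minimum; the 40G data NIC needed for performance testing is left out because it is only a conditional requirement.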

Recommended Hardware

The following is a list of hardware that people from the IEC blueprint community have tested over time in lab trials.

Note: No performance testing of IEC has been done so far; it is planned as follow-up work.

Device 1

| Quantity | Category | Brand | Model | P/N |
|---|---|---|---|---|
| 1 | Compute | Cavium | ThunderX2 | ThunderX2 |
| 4 | Memory | Micron Technology | 9ASF1G72PZ-2G6D1 | 9ASF1G72PZ-2G6D1 8GB*4 |
| 1 | Management switch (L2 with VLAN support) | * | * | * |
| 1 | Network interface card (for mgmt) | Intel | 10-Gigabit X540-AT2 | 10-Gigabit X540-AT2 |
| 1 | Network interface card (for data) | Intel | XL710 40 GbE | XL710 40 GbE |
| 2 | SFP (for mgmt) | Intel | FTLX8571D3BCV-IT | INTEL FTLX8571D3BCV-IT Finisar 10GB s 850nm Multimode SFP SR Transceiver |
| | Fabric switch | N/A | N/A | N/A |

Device 2

| Quantity | Category | Brand | Model | P/N |
|---|---|---|---|---|
| 1 | Compute | Ampere | eMAG server | eMAG server |
| 8 | Memory | Samsung | M393A4K40CB2-CTD | M393A4K40CB2-CTD 32GB*8 |
| 1 | Management switch (L2 with VLAN support) | * | * | * |
| 1 | Network interface card (for mgmt) | Mellanox | MT27710 Family | ConnectX-4 Lx |
| 1 | Network interface card (for data) | Intel | XL710 40 GbE | XL710 40 GbE |
| 2 | SFP (for mgmt) | Intel | FTLX8571D3BCV-IT | INTEL FTLX8571D3BCV-IT Finisar 10GB s 850nm Multimode SFP SR Transceiver |
| | Fabric switch | N/A | N/A | N/A |

Device 3

| Quantity | Category | Brand | Model | P/N |
|---|---|---|---|---|
| 2 | Compute | Marvell | Marvell ARMADA 8040 | MACCHIATObin Double Shot |
| 1 | Memory | System memory | Marvell ARMADA 8040 | DDR4 DIMM slot with optional ECC and single/dual chip select support, 16GB |
| 1 | Management switch (L2 with VLAN support) | * | * | * |
| 1 | Network interface card (for mgmt) | Marvell | Marvell ARMADA 8040 | Dual 10GbE (1/2.5/10GbE) via copper or SFP; 2.5GbE (1/2.5GbE) via SFP; 1GbE via copper |
| 2 | SFP (for mgmt) | Cisco | Passive Direct Attach Copper Twinax Cable | SFP-H10GB-CU3M Compatible 10G SFP+ |
| | Fabric switch | N/A | N/A | N/A |

Platform Features Added/Improved

Contiv-VPP CNI for IEC Blueprint

Brief Introduction

Contiv-VPP is a Kubernetes CNI network plugin designed and built to address pod networking 2.0 requirements. It uses FD.io VPP to provide policy and service-aware dataplane functionality consistent with k8s orchestration and lifecycle management.

Contiv-VPP Highlights

The important features of Contiv-VPP:

- Maps k8s policy and service mechanisms (i.e. label selectors) to the FD.io/VPP dataplane. In effect, said policies and services are network-aware leading to the ability for containerized applications to benefit from optimal transport resources.

- Implemented in user space for rapid innovation and immutability.

- Programmability enabled by a Ligato-based VPP agent that expedites the mapping of k8s policies and services into a FD.io/VPP dataplane configuration.

Setting up on IEC

The automatic deployment script provided by IEC uses the Calico CNI by default. To enable the Contiv-VPP network solution for Kubernetes, some minor modifications are needed. In addition, the deployment methods for IEC Type 1 and Type 2 differ slightly. IEC provides details on how to install Kubernetes with Contiv-VPP networking on one or more bare-metal servers; please refer to the contiv-vpp_setup.rst document.

IEC Type1 Specifics

| Item | Name | Description |
|---|---|---|
| 1 | Setting up MACCHIATObin | Prerequisites: IEC provides a detailed document that introduces how to set up MACCHIATObin. Its contents include building and updating the bootloader, setting U-Boot parameters, and kernel compilation and update. The default kernel configuration provided by Marvell does not meet the system requirements of containers. A modified kernel config enables the necessary modules to meet those requirements, such as NETFILTER, IPTABLES, OVERLAY_FS, VHOST, TAP, and other related kernel modules. |
| 2 | MUSDK Introduction | MUSDK version used: musdk-armada-18.09. Marvell User-Space SDK (MUSDK) is a lightweight user-space I/O driver for Marvell's embedded networking SoCs. The following kernel modules from MUSDK must be loaded for VPP to work: |
| 3 | Contiv-VPP usage | A section of the document contiv-vpp_setup.rst describes how to run Contiv-VPP on Type 1. |
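The kernel features named in the table above can be checked against a kernel `.config` file before the kernel is built. The Python sketch below is an illustration under stated assumptions, not the IEC procedure: the mapping from the feature names in the table to Kconfig symbols (for example TAP to `CONFIG_TUN` and VHOST to `CONFIG_VHOST_NET`) is our assumption, and `missing_features` and the sample config are hypothetical.

```python
import re

# Assumed mapping from the feature names in the table to Kconfig symbols.
REQUIRED = {
    "NETFILTER": "CONFIG_NETFILTER",
    "IPTABLES": "CONFIG_IP_NF_IPTABLES",
    "OVERLAY_FS": "CONFIG_OVERLAY_FS",
    "VHOST": "CONFIG_VHOST_NET",
    "TAP": "CONFIG_TUN",
}

# Matches lines such as CONFIG_FOO=y or CONFIG_FOO=m (built in or module).
_ENABLED = re.compile(r"^(CONFIG_\w+)=([ym])$")


def missing_features(config_text: str) -> list:
    """Return the features whose symbol is not set to y or m in a kernel .config."""
    enabled = set()
    for line in config_text.splitlines():
        match = _ENABLED.match(line.strip())
        if match:
            enabled.add(match.group(1))
    return [feature for feature, symbol in REQUIRED.items() if symbol not in enabled]


# Hypothetical excerpt of a kernel .config with overlayfs disabled.
SAMPLE = """\
CONFIG_NETFILTER=y
CONFIG_IP_NF_IPTABLES=m
# CONFIG_OVERLAY_FS is not set
CONFIG_VHOST_NET=m
CONFIG_TUN=y
"""

if __name__ == "__main__":
    print(missing_features(SAMPLE))
```

To check a real build tree, the text of its `.config` file would be passed to `missing_features` instead of the sample string.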

Notes

IEC Type 1 targets low-power devices. The MACCHIATObin board is currently the main hardware platform, but the Contiv-VPP master version does not support running on this board. The IEC team has developed new features based on version v3.2.1 that enable Contiv-VPP on the MACCHIATObin board. This work involves code changes to fd.io/VPP, ligato/vpp-agent, and contiv/VPP, and we will submit the relevant modifications to the upstream community later.