Overview

The Ampere Pod consists of 3 Ampere HR330A servers with the following specifications per server:

| Ampere HR330A | Specification |
|---|---|
| CPU | 1x 32-core 3.3 GHz Ampere ARMv8 64-bit Processor |
| RAM | 8x 16 GB DDR4 DIMM |
| Storage | 1x 480 GB SSD |
| Networking | 2x 25GbE SFP+ (connected); 1x 1GbE BASE-T (not connected); 1x IPMI / Lights-out Management |

Usage & Users

Blueprint(s) / Description(s): Connected Vehicle Blueprint

Primary Contact: Robert.Qiu (robertqiu@tencent.com)

Server Access

For the time being, when you request access to the Akraino Pods at UNH-IOL, we will ask you to send us your public SSH key so that we can add it to the root user on those machines.

If you would like your own user account, we can set that up, but it is much quicker and easier to stick with the root user.

IPMI Access

Once you have connected to the VPN, you will find a file named ipmi_info.txt in /opt on each machine. This file contains the username and password for the IPMI interfaces.

IMPORTANT:

Please write down this information or copy the file somewhere safe, because the file will be gone if the machines are reinstalled. We are trying to avoid sending emails that contain passwords. If the file was wiped before you saved this information, email akraino-lab@iol.unh.edu and we will create a new file on the machines with the IPMI username and password.
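One possible way to keep a copy off the servers is to pull the file to your own workstation over SSH. The sketch below is only an illustration under several assumptions: `scp` is installed locally, your public key has already been added to the root user, and you are connected to the VPN. The function names and the destination directory are hypothetical and not part of the lab setup.

```python
import subprocess
from pathlib import Path

REMOTE_FILE = "/opt/ipmi_info.txt"  # location stated on this page


def scp_command(host: str, dest_dir: Path) -> list[str]:
    """Build the scp invocation that pulls the credentials file from one server."""
    # One file per host so copies from different servers do not overwrite each other.
    dest = dest_dir / f"{host}-ipmi_info.txt"
    return ["scp", f"root@{host}:{REMOTE_FILE}", str(dest)]


def backup(hosts: list[str], dest_dir: Path) -> None:
    """Copy the credentials file from every host into dest_dir (hypothetical helper)."""
    dest_dir.mkdir(parents=True, exist_ok=True)
    dest_dir.chmod(0o700)  # the files contain passwords, so keep the directory private
    for host in hosts:
        subprocess.run(scp_command(host, dest_dir), check=True)
```

For example, `backup(["10.11.6.11", "10.11.6.13", "10.11.6.15"], Path.home() / "akraino-ipmi")` would fetch one copy per server using the first public address of each machine.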

You can access an IPMI interface like the example below:

Then enter the username and password provided from the file in /opt and now you can manage the system on your and will even be able to add your own public keys in the event of a reinstall of the machine.

If you have any issues with any of the IPMI interfaces please email akraino-lab@iol.unh.edu and we will assist you in anyway we can.

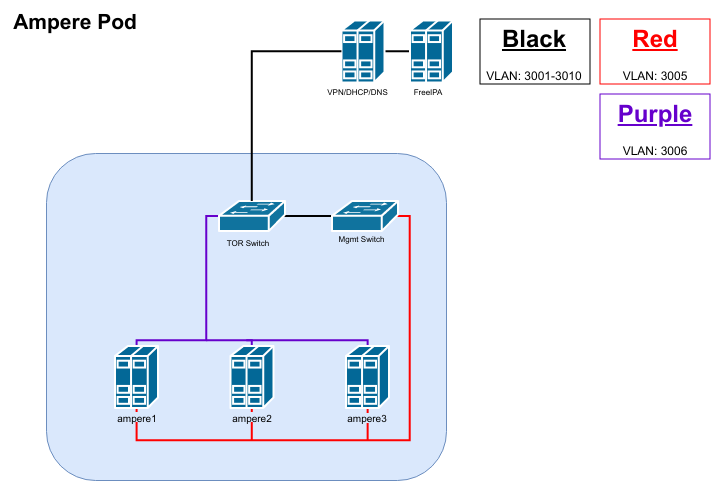

Networking

| Network | IP Network | VLAN ID | Description |
|---|---|---|---|
| IPMI / Management | 10.11.5.0/24, GW: 10.11.5.1 | 3005 | Connections to all IPMI interfaces on each server and the switch management interface |
| Public | 10.11.6.0/24, GW: 10.11.6.1 | 3006 | Public network (able to reach the Internet), available to each server |

Servers and Switches

| Server Name | IPMI Address | IPMI VLAN ID | Public Network Address | Public Network VLAN ID | Switch Port(s) | OS Installed |
|---|---|---|---|---|---|---|
| ampere1 | 10.11.5.11 | 3005 | 10.11.6.11, 10.11.6.12 | 3006 | Cisco TOR: Left 25GbE Port 41, Right 25GbE Port 42 | CentOS 7.6 |
| ampere2 | 10.11.5.12 | 3005 | 10.11.6.13, 10.11.6.14 | 3006 | Cisco TOR: Left 25GbE Port 43, Right 25GbE Port 44 | CentOS 7.6 |
| ampere3 | 10.11.5.13 | 3005 | 10.11.6.15, 10.11.6.16 | 3006 | Cisco TOR: Left 25GbE Port 45, Right 25GbE Port: not connected | CentOS 7.6 |
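When scripting against the pod, it can help to keep the addressing in one place and sanity-check it. The following is a minimal sketch that transcribes the values from the two tables above and checks that every address falls inside its documented subnet. The dictionary layout and the helper names are assumptions made for illustration, and picking the first public address as the SSH target is an arbitrary choice.

```python
import ipaddress

# Values transcribed from the Networking and Servers and Switches tables.
NETWORKS = {
    "ipmi": ipaddress.ip_network("10.11.5.0/24"),
    "public": ipaddress.ip_network("10.11.6.0/24"),
}

SERVERS = {
    "ampere1": {"ipmi": ["10.11.5.11"], "public": ["10.11.6.11", "10.11.6.12"]},
    "ampere2": {"ipmi": ["10.11.5.12"], "public": ["10.11.6.13", "10.11.6.14"]},
    "ampere3": {"ipmi": ["10.11.5.13"], "public": ["10.11.6.15", "10.11.6.16"]},
}


def misplaced_addresses(servers=SERVERS, networks=NETWORKS):
    """Return (server, network, address) triples whose address is outside its subnet."""
    problems = []
    for name, nets in servers.items():
        for net_name, addresses in nets.items():
            for addr in addresses:
                if ipaddress.ip_address(addr) not in networks[net_name]:
                    problems.append((name, net_name, addr))
    return problems


def ssh_target(server, servers=SERVERS):
    """Return the root SSH target for a server's first public address."""
    return f"root@{servers[server]['public'][0]}"
```

An empty list from `misplaced_addresses()` indicates that the transcribed tables are internally consistent; it does not verify that the hosts are reachable.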

Usage

This pod is used for the Connected Vehicle Blueprint. Refer to the blueprint documentation for additional blueprint-specific information.

The Tars framework is deployed on Ampere Server 1.

TarsNode is deployed on Ampere Server 2 and Ampere Server 3.

To make CI/CD work, we set up the Jenkins master on Ampere Server 1 and Jenkins slaves on Ampere Servers 2 and 3.

Beyond that, we created a folder in the CI/CD Gerrit repo (path: ci/ci-management/jjb/connected-vehicle) and wrote a YAML file there, so that Jenkins controls the Tars framework through that YAML file.

Finally, we upload the CI/CD logs to the Akraino community so that the Connected Vehicle Blueprint can pass the R2 release review.