Licensing

Radio Edge Cloud is Apache 2.0 licensed. The goal of the project is the packaging and installation of upstream Open Source projects, each of which is separately licensed. For a full list of packages included in REC, refer to https://logs.akraino.org/production/vex-yul-akraino-jenkins-prod-1/ta-ci-build-amd64/313/work/results/rpmlists/rpmlist (313 in this URL is the Akraino REC/TA build number; see https://logs.akraino.org/production/vex-yul-akraino-jenkins-prod-1/ta-ci-build-amd64/ for the latest build). All of the upstream projects that are packaged into the REC/TA build image are Open Source.

Introduction

This document outlines the steps to deploy a Radio Edge Cloud (REC) cluster. A cluster has a minimum of three controller nodes and may optionally include worker nodes. REC was designed from the ground up to be a highly available, flexible, and cost-efficient system for the use and support of Cloud RAN and 5G networks. The production deployment of Radio Edge Cloud is intended to be done using the Akraino Regional Controller, which has been significantly enhanced during the Akraino Release 1 timeframe, but for evaluation purposes it is possible to deploy REC without the Regional Controller. Regardless of whether the Regional Controller is used, the installation process is cluster oriented. The Regional Controller or a human operator initiates the process on the first controller in the cluster; that controller then automatically installs an image onto every other server in the cluster, using IPMI and Ironic (from OpenStack) to perform a zero touch install.

In a Regional Controller based deployment, the Regional Controller API is used to upload the REC Blueprint YAML (available from the REC repository). The blueprint tells the Regional Controller where to obtain the REC ISO images, the REC workflows (executable code for creating, modifying and deleting REC sites) and the REC Remote Installer component. The Remote Installer is a container image that is instantiated by the create workflow; it invokes the REC Deployer (located in the ISO DVD disc image file), which conducts the rest of the installation.

The instructions below skip most of this and directly invoke the REC Deployer from the Baseboard Management Controller (BMC), Integrated Lights-Out (iLO) or integrated Dell Remote Access Controller (iDRAC) of a physical server. The basic workflow of the REC Deployer is to copy a base image to the first controller in the cluster and then read the contents of a configuration file (typically called user_config.yaml) to deploy the base OS and all additional software to the rest of the nodes in the cluster.

Although the purpose of the Radio Edge Cloud is to run the RAN Intelligent Controller (RIC), the RIC software (which was written from scratch starting in the spring of 2019) is not yet stable enough to incorporate into the REC ISO image. The manual installation procedure described below does not install the RIC. In the REC Continuous Deployment system, as of the Akraino Release 1 and 2 timeframe, a Jenkins job deploys a snapshot of the RIC and runs a small set of tests. These steps may be executed manually; the procedure is described in the last section of this wiki page. Fully automated installation of the RIC as part of REC will not be complete until sometime in 2020. If you are interested in interfacing a REC appliance with eNodeB/gNodeB and radio infrastructure, you should join the weekly Radio Edge Cloud Project Meetings and let the REC team know of your interest. We will be happy to coordinate with you and welcome any testing that you can do.

Pre-Installation Requirements for REC Cluster

Hardware Requirements:

REC is a fully integrated stack from the hardware up to and including the application, so for best results it is necessary to use one of the tested hardware configurations. Although REC is intended to run on a variety of hardware platforms, it includes a hardware detector component that customizes each installation based on the hardware present, and that component will need (possibly minor) changes to support additional hardware configurations. Preliminary support is present in Akraino Release 1 of REC for HP DL380 generation 9 and 10, Dell R740xd and Nokia Open Edge servers, but the primary focus of Release 1 testing is the Nokia Open Edge servers, so some issues may be encountered with other server types.

- Minimum of 3 nodes

- Total Physical Compute Cores: 60 (120 vCPUs)

- Total Physical Compute Memory: 192GB minimum per node

- Total SSD-based OS Storage: 2.8 TB (6 x 480GB SSDs)

- Total Application-based Raw Storage: 5.7 TB (6 x 960GB SSDs)

- Networking Per Server: Apps: 2 x 25GbE (per server); DCIM: 2 x 10GbE + 1 x 1GbE (shared)

The specific recommended configuration as of the Release 1 timeframe is the Open Edge configuration documented in the Radio Edge Cloud Validation Lab.

BIOS Requirements:

- BIOS set to Legacy (Not UEFI, although UEFI support is partially implemented and should be available in 2020)

- CPU Configuration/Turbo Mode Disabled

- Virtualization Enabled

- IPMI Enabled

- Boot Order set with Hard Disk listed as first in the list.

As of Release 1 and 2, Radio Edge Cloud does not yet include automatic configuration for a pre-boot environment. The following versions were manually loaded on the Open Edge servers in the Radio Edge Cloud Validation Lab using the incomplete but functional script available here. In the future, automatic configuration of the pre-boot environment is expected to be a function of the Regional Controller under the direction of the REC pod create workflow script.

- BIOS1: 3B06

- BMC1: 3.13.00

- BMC2: 3.08.00

- CPLD: 0x01

Network Requirements:

The REC cluster requires the following segmented (VLAN), routed networks accessible by all nodes in the cluster:

- External Operations, Administration and Management (OAM) Network

- Out Of Band (OOB) (iLO/iDRAC) network(s)

- Storage/Ceph network(s)

- Internal network for Kubernetes connectivity

- NTP and DNS accessibility

The REC installer will configure NTP and DNS using the parameters entered in the user_config.yaml. However, the network must be configured for the REC cluster to be able to access the NTP and DNS servers prior to the install.

About user_config.yaml

The user_config.yaml file contains details for your REC cluster such as required network CIDRs, usernames, passwords, DNS and NTP server IP addresses, etc. The REC configuration is flexible, but there are dependencies: e.g., using DPDK requires a networking profile with ovs-dpdk type, a performance profile with CPU pinning & hugepages and performance profile links on the compute node(s).

The following link points to the latest user_config template with descriptions and examples for every available parameter:

Note: the version number in your user_config.yaml must closely follow the version of the template. The deployer strictly checks the first two parts of the version number. The following rules apply to the YAML's version parameter:

    ### Version numbering:
    ###    X.0.0
    ###        - Major structural changes compared to the previous version.
    ###        - Requires all users to update their user configuration to
    ###          the new template
    ###    a.X.0
    ###        - Significant changes in the template within current structure
    ###          (e.g. new mandatory attributes)
    ###        - Requires all users to update their user configuration according
    ###          to the new template (e.g. add new mandatory attributes)
    ###    a.b.X
    ###        - Minor changes in template (e.g. new optional attributes or
    ###          changes in possible values, value ranges or default values)
    ###        - Backwards compatible
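As an illustration of the rules above, the following bash sketch compares the version in your user_config.yaml with the template's version and accepts them only when the first two parts match. It is a hypothetical pre-flight helper (versions_compatible is not part of the REC deployer) and assumes plain dotted X.Y.Z strings; the deployer's own check remains authoritative.

```shell
#!/usr/bin/env bash
# Hypothetical helper, not part of REC: compare the template version
# with the version in user_config.yaml. Only the first two parts
# (major.minor) must match; the third part may differ because a.b.X
# changes are backwards compatible. Assumes dotted "X.Y.Z" strings.
versions_compatible() {
    local template="$1" user="$2"
    local t_major t_minor u_major u_minor rest
    IFS=. read -r t_major t_minor rest <<< "$template"
    IFS=. read -r u_major u_minor rest <<< "$user"
    [ "$t_major" = "$u_major" ] && [ "$t_minor" = "$u_minor" ]
}
```

For example, versions_compatible 2.0.5 2.0.2 succeeds, while versions_compatible 2.0.5 2.1.0 fails because the middle part differs.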

Example user_config.yaml

YAML Requirements

- The YAML files need to be edited/created using a Linux editor or Notepad++ on Windows

- YAML does not allow TAB characters for indentation. Use spaces to indent the text.

Note: You have a better chance at creating a working YAML by editing an existing file or using the template rather than starting from scratch.
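A quick way to catch tab characters before uploading the file is a grep-based check such as the hypothetical yaml_has_no_tabs helper below. It is a minimal sketch that only looks for tabs; it does not validate the YAML structure or the REC template.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight check: print every line of a YAML file that
# contains a TAB character (as "line-number:content") and return 1 if
# any were found, 0 if the file is tab-free.
yaml_has_no_tabs() {
    local file="$1"
    if grep -n "$(printf '\t')" "$file"; then
        echo "ERROR: TAB characters found in $file; replace them with spaces" >&2
        return 1
    fi
    return 0
}
```

For example, run yaml_has_no_tabs user_config.yaml before copying the file to controller-1.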

Installing REC

Obtaining the ISO Image

Recent builds can be obtained from the Akraino Nexus server. Choose either "latest" or a specific build number from the old release images directory (for builds prior to the AMD/ARM split), the AMD64 builds or the ARM64 builds, and download the file install.iso.

| Akraino Release | REC or TA ISO Build | Build Date | Notes |
|---|---|---|---|
| 1 | Build 9. This build has been removed from Nexus (probably due to age) | 2019-05-30 | Build number 9 is known to NOT work on Dell servers or any of the ARM options listed below. If attempting to install on Dell servers, it is suggested to use builds from no earlier than June 10th. |
| 2 | Build 237. This build has been removed from Nexus (probably due to age) | 2019-11-18 | It is possible that there may still be some issues on Dell servers. Most testing has been done on Open Edge. Some builds between June 10th and November 18th have been successfully used on Dell servers, but because of a current lack of Remote Installer support for Dell (or indeed anything other than Open Edge), the manual testing is not as frequent as the automated testing of REC on Open Edge. If you are interested in testing or deploying on platforms other than Open Edge, please join the Radio Edge Cloud Project Meetings. |
| 3 - AMD64 | Build 237. This build has been removed from Nexus (probably due to age) | 2020-05-29 | This is a minor update to Akraino Release 2 of AMD64 based Radio Edge Cloud |
| 3 - ARM64 | Arm build 134. This build has been removed from Nexus (probably due to age) | 2020-04-13 | This is the first ARM based release of Radio Edge Cloud |
| 4 - AMD64 | | 2020-11-03 | The ARM build is unchanged since Release 3 |

Options for booting the ISO on your target hardware include NFS, HTTP, or a USB memory stick. You must place the ISO in a suitable location (e.g., an NFS server, HTTP(S) server or USB memory stick) before starting the boot process. The file bootcd.iso, which is in the same directory, is used only when deploying via the Akraino Regional Controller using the Telco Appliance Remote Installer. You can ignore bootcd.iso when following the manual procedure below.

Preparing for Boot from ISO Image

Nokia OpenEdge Servers

Using the BMC, configure a userid and password on each blade and ensure that the VMedia Access checkbox is checked.

The expected physical configuration, as described in Radio Edge Cloud Validation Lab, is that each server in the cluster has two 480GB SATA 1 DWPD M.2 2280 SSDs on a riser card inside the server and two 960GB SATA 3 DWPD 2.5-inch SSDs on the front panel. No RAID configuration is used. The reference implementation in the Radio Edge Cloud Validation Lab uses one M.2 drive as the physical volume for LVM and both 2.5-inch SSDs as Ceph volumes.

HP Servers

Dell Servers

Provision the disk configuration of the server via iDRAC such that the desired disks will be visible to the OS in the desired order. The installation will use /dev/sda as the root disk and /dev/sdb and /dev/sdc as the Ceph volumes.

Ampere Servers

Download and print hardware configuration guide for REC test installation

Each server requires 2 SSDs

Each server requires 3 NIC ports and 1 BMC connection

A "dumb" switch or VLAN is connected to two NIC ports

1 NIC port and the BMC are connected to the router via a switch

The REC ISO will recognize Ampere based Lenovo HR330 1U, HR350 2U or openEDGE sleds with the "Hawk" motherboard

Designate 1 server as Node 1. It runs all code to complete the installation

Boot each server with a monitor attached to the VGA port. Note the BMC IP address.

Boot Node 1 into the operating system. Record the Linux names of all Ethernet ports on the hardware guide.

Download and edit user_config.yaml

cidr is the range of IP addresses

infra_external is the network with external network access

infra_internal is a VLAN or dumb switch without external network access

infra_storage contains the IP addresses on the internal network used for storage

Interface_net_mapping must be set with the NIC port names previously obtained for Node 1

hwmgmt contains the IP addresses of all BMCs. Node 1 is the master

Marvell Servers

@ Carl Yang <carlyang@marvell.com>

Booting from the ISO Image

Nokia OpenEdge Servers

Log in to the controller-1 BMC IP address using a web browser (https://xxx.xxx.xxx.xxx).

Go to Settings/Media Redirection/General Settings.

Select the Remote Media Support.

Select the Mount CD/DVD.

Type the NFS server IP address.

Type the NFS share path.

Select nfs as the Share Type for CD/DVD.

Click Save.

Click OK to restart the VMedia Service.

Go to Settings/Media Redirection/Remote Images.

Select the image for the first CD/DVD device from the drop-down list.

Click the play button to map the image with the server’s CD/DVD devices. The Redirection Status changes to Started when the image redirection succeeds.

Go to Control & Maintain/Remote Control to open the Remote Console.

Reset the server.

Press F11 to enter the boot menu and select boot from the CD/DVD device.

HP Servers

Log in to iLO for Controller 1 for the installation

Go to Remote Console & Media

Scroll to HTML 5 Console

- URL

http://XXX.XXX.XXX.XX:XXXX/REC_RC1/install.iso -> Virtual Media URL →

- NFS

<IP to connect for NFS file system>/<file path>/install.iso

Check “Boot on Next Reset” -> Insert Media

Reset System

Dell Servers

Go to Configuration/Virtual Media

Scroll down to Remote File Share and enter the URL of the ISO in the Image File Path field.

- URL:

http://XXX.XXX.XXX.XX:XXXX/REC_RC1/install.iso

- NFS

<IP to connect for NFS file system>/<file path>/install.iso

Select Connect.

Open Virtual Console, and go to Boot

Set Boot Action to Virtual CD/DVD/ISO

Then Power/Reset System

Be sure to read the note below on Dell servers

Ampere Servers

Download install.aarch64.iso from the latest Telco Appliance build. A complete list of ARM aarch64 builds is available here.

Place install.aarch64.iso on an NFS share on a Linux host in the same network as the REC cluster

Download and unzip ampere_virtual_media_v2.zip

Edit mount_media.sh and dismount_media.sh. Set IPMI_LOCATION to IP address of Node 1 BMC, NFS_IP to IP of NFS server, ISO_LOCATION to NFS path for install.aarch64.iso
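If you prefer to edit the two scripts non-interactively, a sed-based helper such as the one below can set the variables. This is a sketch under an assumption: that mount_media.sh and dismount_media.sh define IPMI_LOCATION, NFS_IP and ISO_LOCATION as plain NAME=value lines at the start of a line. Check the scripts in ampere_virtual_media_v2.zip before relying on it; set_script_var is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: rewrite an assignment of the form NAME=value in
# a script, in place, keeping a backup as <file>.bak.
# Assumption: each variable is defined on its own line starting with
# "NAME=". Values must not contain '|' or '&' (special to sed here).
set_script_var() {
    local file="$1" name="$2" value="$3"
    if ! grep -q "^${name}=" "$file"; then
        echo "ERROR: ${name}= not found in $file" >&2
        return 1
    fi
    # '|' is used as the sed delimiter because NFS paths contain '/'
    sed -i.bak "s|^${name}=.*|${name}=\"${value}\"|" "$file"
}
```

For example: set_script_var mount_media.sh IPMI_LOCATION <Node 1 BMC IP>, then the same for NFS_IP and ISO_LOCATION, and repeat for dismount_media.sh.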

Run mount_media.sh. This will connect install.aarch64.iso as a CDROM on Node 1

Boot Node 1 into the BIOS. Force boot from CD by selecting the "Save & Exit" tab in the BIOS, then Boot Override → CDROM

REC Telco Appliance will begin installation

See instructions below

Marvell Servers

@ Carl Yang <carlyang@marvell.com>

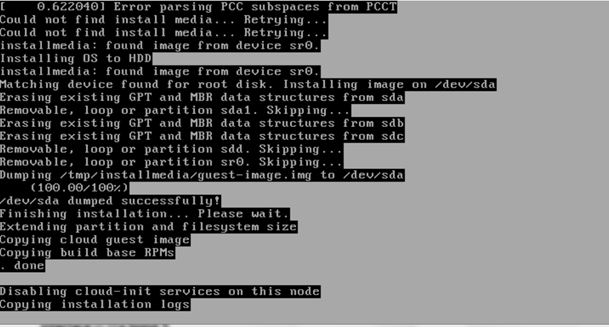

After rebooting, the installation will bring up the Akraino Edge Stack screen.

The first step is to clean all the drives discovered before installing the ISO image.

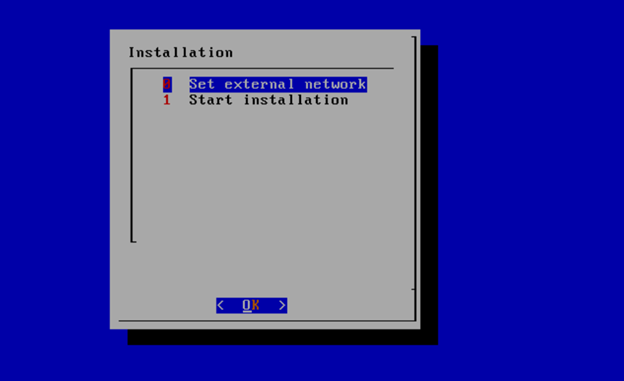

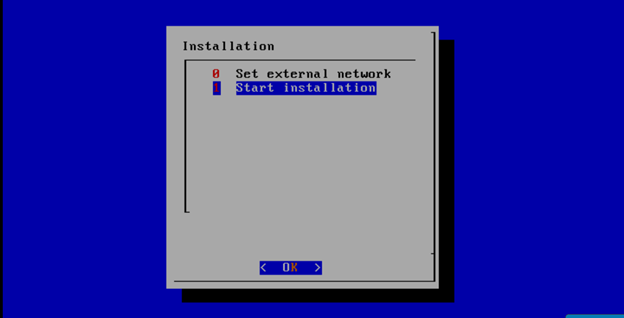

At the Installation window, select "0 Set external network" and press OK.

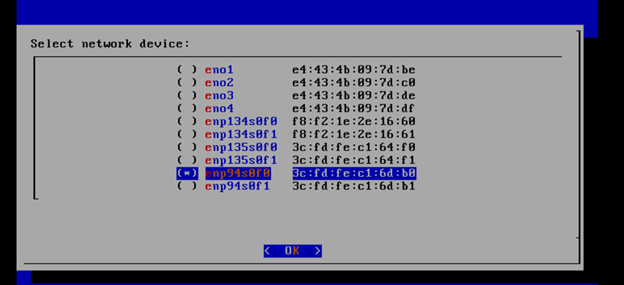

Arrow down to and press the spacebar to select the network interface to be used for the external network.

If using bonded nics, select the first interface in the bond.

Enter the external ip address with CIDR for controller-1: 172.28.15.211/24

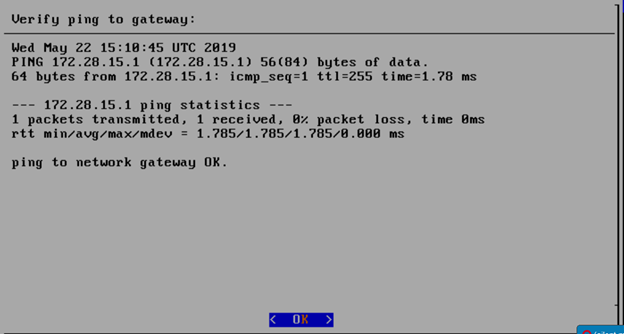

Enter the gateway ip address for the external ip address just entered: 172.28.15.1

Enter the VLAN number: 141

The installation will check the link and connectivity of the IP addresses entered.

If the connectivity test passes, the Installation window is displayed again.
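Before typing the values into the installer, you can sanity-check that the gateway lies in the same subnet as the address you give controller-1. The bash sketch below does this with integer arithmetic; ip_to_int and gateway_in_subnet are hypothetical helpers that assume well-formed IPv4 dotted quads, and they do not replace the installer's own link and connectivity test.

```shell
#!/usr/bin/env bash
# Hypothetical helper: convert a dotted-quad IPv4 address to an integer.
ip_to_int() {
    local a b c d
    IFS=. read -r a b c d <<< "$1"
    # 10# forces base 10 so an octet such as "08" is not read as octal
    echo $(( (10#$a << 24) | (10#$b << 16) | (10#$c << 8) | 10#$d ))
}

# Hypothetical helper: succeed if GATEWAY is inside the subnet of
# ADDRESS/PREFIX, e.g. gateway_in_subnet 172.28.15.211/24 172.28.15.1
gateway_in_subnet() {
    local cidr="$1" gateway="$2"
    [[ "$cidr" == */* ]] || return 2        # expect ADDRESS/PREFIX
    local addr="${cidr%/*}" prefix="${cidr#*/}"
    # 32-bit netmask; bash arithmetic is 64-bit, so the shift does not
    # overflow for prefix lengths 0..32
    local mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
    local net_addr=$(( $(ip_to_int "$addr") & mask ))
    local net_gw=$(( $(ip_to_int "$gateway") & mask ))
    [ "$net_addr" -eq "$net_gw" ]
}
```

For example, gateway_in_subnet 172.28.15.211/24 172.28.15.1 && echo "gateway OK" prints the message, while a gateway of 172.28.16.1 would be rejected.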

Uploading user_config.yaml

Go to your RC or jump server and scp (or sftp) your user_config.yaml to controller-1’s /etc/userconfig directory.

Initial credentials: root/root.

scp user_config.yaml root@<controller-1 ip address>:/etc/userconfig/

Back in the Installation window, select "1 Start installation" and press OK.

After selecting Start Installation, the installation should start automatically, and the content of /srv/deployment/log/bootstrap.log should be displayed on the remote console.

Monitoring Deployment Progress/Status

You can monitor the REC deployment by checking the remote console screen or by tailing the logs on controller-1 node's /srv/deployment/log/ directory.

There are two log files:

bootstrap.log: deployment status log

cm.log: ansible execution log

tail -f /srv/deployment/log/cm.log

tail -f /srv/deployment/log/bootstrap.log

Note: When the deployment to all the nodes has completed, “controller-1” will reboot automatically.
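Rather than watching the console, you can poll bootstrap.log for the "Installation Succeeded" message quoted under Verifying Deployment. The sketch below only recognizes that success message, so a failed deployment ends in a timeout and you need to read the logs yourself; wait_for_install is a hypothetical name, and the default timeout and interval are arbitrary assumptions. Because controller-1 reboots at the end of the deployment, the loop may be interrupted; reconnect and run it again, and it returns immediately if the message is already in the log.

```shell
#!/usr/bin/env bash
# Hypothetical helper: poll bootstrap.log until the success message
# appears or the timeout expires. Failure messages are not matched.
wait_for_install() {
    local log="${1:-/srv/deployment/log/bootstrap.log}"
    local timeout="${2:-14400}"   # seconds; arbitrary default, not from REC
    local interval="${3:-60}"     # seconds between checks
    local waited=0
    while true; do
        if grep -q "Installation Succeeded" "$log" 2>/dev/null; then
            echo "Deployment finished successfully"
            return 0
        fi
        if [ "$waited" -ge "$timeout" ]; then
            echo "Timed out after ${waited}s; inspect $log and cm.log" >&2
            return 1
        fi
        sleep "$interval"
        waited=$(( waited + interval ))
    done
}
```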

Special Attention Required on Dell

A Note on deploying on DELL severs:

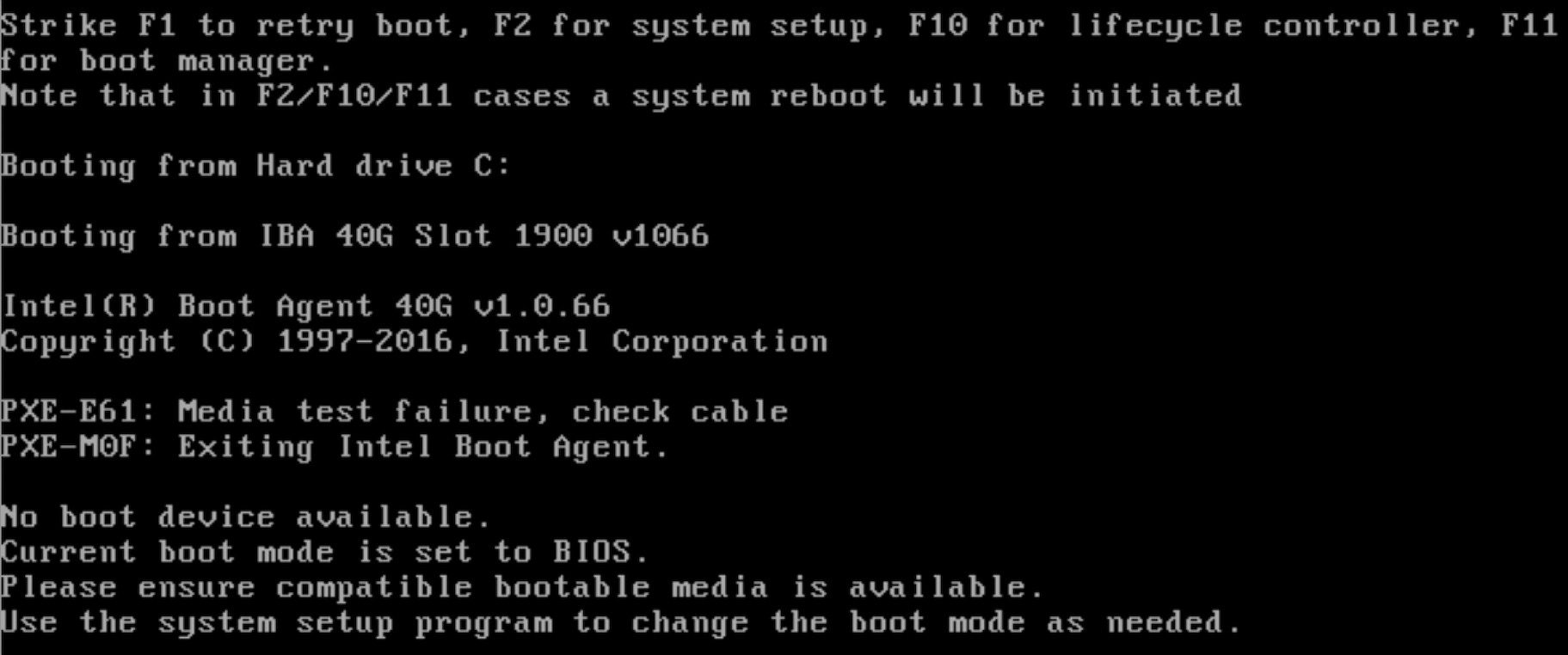

Currently, a manual step is required when doing an installation on Dell servers. After the networking has been set up and the deployment has started, the following message will be shown on the console screen on controller-2 and controller-3:

At this point, both controller-2 and controller-3 should be set to boot from virtual CD/DVD/ISO.

To do this:

- Log on to the iDRAC web interface

- Select "Launch Virtual Console"

- In the Virtual Console:

  - Select "Boot | Virtual CD/DVD/ISO" and confirm

  - Select "Power | Reset System (Warm Boot)" and confirm

Again, this needs to be done for both controller-2 and controller-3. After this, the installation should continue normally.

As a reference, during this time, viewing the file /srv/deployment/log/cm.log on controller-1 will show the following:

FAILED - RETRYING: Verify node provisioning state. Waiting for 60mins max. (278 retries left).

FAILED - RETRYING: Verify node provisioning state. Waiting for 60mins max. (277 retries left).

FAILED - RETRYING: Verify node provisioning state. Waiting for 60mins max. (276 retries left).

This will continue until the above manual step is completed or a timeout happens. After the manual step, the following messages will appear:

ok: [controller-2 -> localhost]

ok: [controller-3 -> localhost]
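While waiting for the manual step on Dell servers, it can help to know how much of the 60 minute window remains. The hypothetical helper below extracts the most recent retry count from the cm.log lines shown above; it assumes the message format matches those lines exactly and prints nothing if the retry loop has not started.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the latest "(N retries left)" count from
# the "Verify node provisioning state" retry loop in cm.log.
provisioning_retries_left() {
    local log="${1:-/srv/deployment/log/cm.log}"
    grep "Verify node provisioning state" "$log" \
        | sed -n 's/.*(\([0-9][0-9]*\) retries left).*/\1/p' \
        | tail -n 1
}
```

For example, run provisioning_retries_left on controller-1 after resetting controller-2 and controller-3; once the "ok:" lines appear, the count stops changing.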

Verifying Deployment

A post-installation verification is required to ensure that all nodes and services were properly deployed.

You need to establish an SSH connection to the controller VIP address and log in with administrative rights.

tail /srv/deployment/log/bootstrap.log

You should see: Installation complete, Installation Succeeded.

Go to REC Test Document and follow the steps outlined there to ensure that all nodes and services were properly deployed.

Deployment Failures

Sometimes failures happen, usually due to misconfigurations or incorrect addresses entered.

To re-launch a failed deployment

There are two options for redeploying. (Execute as root)

- /opt/cmframework/scripts/bootstrap.sh /etc/userconfig/user_config.yaml --install &

- openvt -s -w /opt/start-menu/start_menu.sh &

Note: In some cases modifications to the user_config.yaml may be necessary to resolve a failure.

If re-deployment is not possible, the deployment must be restarted by booting from the REC ISO image (install.iso).

RIC R0 Installation onto REC

REC Release R1 includes the ability to run the R0 version of the RIC.

R0 provides only limited RIC platform functionality, but it demonstrates the basic RIC platform components: appmgr, rtmgr, redis, e2term and e2mgr.

Additionally it is possible to load robot test suites to verify functionality, but not all test cases will work in this version of RIC.

As more functionality becomes available, more test cases will work and more tests will be added.

Onboarding RIC R0 is currently a manual step; in the future it will be included in the REC build process.

RIC R0 includes scripts to bring up the RIC on a generic Kubernetes platform.

To bring it up on the REC, follow the steps below.

Step 1:

Log in to controller-1 as cloudadmin and clone the repository containing the scripts used to bring up the RIC on a REC cluster.

git clone https://gerrit.akraino.org/r/rec.git

Step 2:

Copy the scripts to your home directory

cp rec/workflows/ric_automation.sh rec/workflows/robot_test_ric.sh rec/workflows/nanobot.sh ./

Step 3:

Run the ric_automation.sh script.

bash ric_automation.sh

Once the script completes, verify the output below indicating successful deployment of the RIC helm charts.

+ helm install localric/ric --namespace ricplatform --name ric-full --set appmgr.appmgr.service.appmgr.extport=30099 --set e2mgr.e2mgr.service.http.extport=30199

    LAST DEPLOYED: Fri Jun 28 17:42:53 2019
    NAMESPACE: ricplatform
    STATUS: DEPLOYED

    RESOURCES:
    ==> v1/ConfigMap
    NAME                              DATA  AGE
    ric-full-appmgr-appconfig         1     0s
    ric-full-appmgr-appenv            1     0s
    ric-full-e2mgr-router-configmap   1     0s
    ric-full-e2term-router-configmap  1     0s

    ==> v1/Deployment
    NAME             READY  UP-TO-DATE  AVAILABLE  AGE
    ric-full-appmgr  0/1    1           0          0s
    ric-full-dbaas   0/1    1           0          0s
    ric-full-e2mgr   0/1    1           0          0s
    ric-full-e2term  0/1    1           0          0s
    ric-full-rtmgr   0/1    1           0          0s

    ==> v1/Pod(related)
    NAME                              READY  STATUS             RESTARTS  AGE
    ric-full-appmgr-74b4f68459-rhwf6  0/1    ContainerCreating  0         0s
    ric-full-dbaas-877f5788d-rpg87    0/1    ContainerCreating  0         0s
    ric-full-e2mgr-f6956b9f8-kxc6q    0/1    ContainerCreating  0         0s
    ric-full-e2term-f6556544c-pzxgv   0/1    ContainerCreating  0         0s
    ric-full-rtmgr-95f7cb5cc-bfhdx    0/1    ContainerCreating  0         0s

    ==> v1/Service
    NAME             TYPE       CLUSTER-IP      EXTERNAL-IP  PORT(S)            AGE
    ric-full-appmgr  ClusterIP  10.254.254.49   <none>       8080/TCP           0s
    ric-full-dbaas   ClusterIP  10.254.188.227  <none>       6379/TCP           0s
    ric-full-e2mgr   ClusterIP  10.254.93.207   <none>       3800/TCP,3801/TCP  0s
    ric-full-e2term  ClusterIP  10.254.208.204  <none>       38000/TCP          0s
    ric-full-rtmgr   ClusterIP  10.254.70.83    <none>       5656/TCP           0s

Step 4:

Verify the RIC pods are all coming up and running.

kubectl get pods -n ricplatform

    NAME                              READY  STATUS   RESTARTS  AGE
    ric-full-appmgr-74b4f68459-rhwf6  1/1    Running  0         2m5s
    ric-full-dbaas-877f5788d-rpg87    1/1    Running  0         2m5s
    ric-full-e2mgr-f6956b9f8-kxc6q    1/1    Running  0         2m5s
    ric-full-e2term-f6556544c-pzxgv   1/1    Running  0         2m5s
    ric-full-rtmgr-95f7cb5cc-bfhdx    1/1    Running  0         2m5s

Note: The pods may take a few minutes to start, so repeat the command periodically. If they do not reach the Running state, use standard Kubernetes commands such as kubectl describe and kubectl logs to troubleshoot and resolve the issue.
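Instead of repeating the command by hand, the check can be scripted. all_pods_running below is a hypothetical awk-based helper that reads kubectl get pods output on stdin and succeeds only when every pod is Running with all of its containers ready. It assumes the default column layout (NAME READY STATUS RESTARTS AGE) shown above; pods in a Completed state, for example from jobs, would be reported as not ready.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get pods` output on stdin and
# succeed only if there is at least one pod and every pod is Running
# with READY "n/n". Pods that are not ready are listed on stderr.
all_pods_running() {
    awk '
        NR == 1 { next }   # skip the header row
        {
            split($2, ready, "/")
            if ($3 != "Running" || ready[1] != ready[2]) {
                print $1, $2, $3 > "/dev/stderr"
                bad = 1
            }
        }
        END { exit (NR < 2 || bad) }
    '
}
```

For example, until kubectl get pods -n ricplatform | all_pods_running; do sleep 15; done waits until all RIC pods are up, printing the pods that are still starting on each pass.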

Step 5:

Now that RIC R0 is running on the REC, you can install robot test scripts to do further verification of the RIC components. At present these provide only limited testing, but more tests will be added as more functionality is developed in later RIC releases.

11 Comments

Jingzhao Ni

Hi Paul Carver,

We want to port REC to the AArch64 platform. Could you please give more detailed information about install.iso so that we can install that software on an AArch64 platform step by step? And what is the base OS? Thanks.

Paul Carver

Please review Gerrit Code Repository Overview and the TA Jenkins jobs https://jenkins.akraino.org/view/ta/ especially https://jenkins.akraino.org/view/ta/job/ta-ci-build/configure

The basic process is that many of the TA repos build one or more RPMs containing Docker images and those RPMs are ultimately included in the ISO that is built using Dracut https://en.wikipedia.org/wiki/Dracut_(software)

Pay particular attention to https://gerrit.akraino.org/r/gitweb?p=ta/build-tools.git;a=tree and https://gerrit.akraino.org/r/gitweb?p=ta/rpmbuilder.git;a=tree

The base OS is configured in https://gerrit.akraino.org/r/gitweb?p=ta/manifest.git;a=blob;f=build_config.ini;h=1153a8b491dacd0bd00454d8b4bc36ea8068afa2;hb=HEAD#l10

You're also going to need to add to https://gerrit.akraino.org/r/gitweb?p=ta/hw-detector.git;a=tree;f=src/hw_detector/hw_types;h=73a463fbdc0d411f42f61caa8f7aea2e10b982b9;hb=HEAD

Ankit Arora

Hi Paul Carver,

We are building a REC cluster in our lab. I need to understand why we need 3 controllers in a 3 node cluster. What is the purpose of 3 controllers? And can we use these controllers for hosting container workloads like the RIC or xApps?

Levente Kálé

Three controller nodes is the minimum number of nodes required to provide a highly available infrastructure, which is a basic requirement in telco. With a 3-controller setup the installation can seamlessly survive, and recover from, the loss of a whole physical node.

but yes, containerized workloads can be instantiated on controller nodes too!

Paul Carver

Ankit Arora I agree with Levente Kálé 's reply and I would also add that for development purposes it would be nice to be able to deploy on a 3 VM cluster running on a single physical server, but we would never deploy that type of cluster in production. Since the priority for REC is to develop a blueprint for production deploy-able clusters we aren't focusing any effort on VMs. We focus on developing and testing for our target edge site hardware. However, we would welcome anyone who wants to help create a "devstack" for REC if doing so is helpful to them. But it can't be at the expense of the main goal of producing a production deploy-able system. So removing the quorum based cluster redundancy mechanisms isn't an acceptable change.

Tarun Jain

Hi,

I am trying to create a lab installation using Dell servers.

But I was not able to find any ISO image for the installation.

All of them have been removed from Nexus repository.

Kindly help in locating the ISO image required for installation or redirect to any changed procedure for installation if done.

Thanks.

Paul Carver

Unfortunately the Linux Foundation expires the builds after some period of time. Since we are no longer developing REC there haven't been any recent commits which would trigger the CI/CD system to generate new builds. I can try making a dummy commit just to get the CI/CD system to do a new build, but if there has been any bit rot in the system I can't guarantee I will have the time to debug it. I also can't guarantee the build will work on Dell servers because our primary test platform was the Nokia OpenEdge servers. Although we have installed on Dell servers in the past, they were not part of the CI/CD pipeline and didn't have any end users actively resolving bugs. As of Akraino release 4 we have concluded our work on the REC platform and are investigating other platforms going forward due to issues such as the CentOS 7/8 situation and a variety of legacy code in REC that is getting harder to keep up to date.

Paul Carver

Unfortunately the build failed https://jenkins.akraino.org/view/ta/job/ta-ci-build-amd64/347/console with the following error:

I think maybe the rename command (which is a perl script that supports renaming files based on regular expression matching) got removed from the Ubuntu repos. I haven't researched this today, but I have a vague recollection about that. Unfortunately, if that is true then fixing the build will require figuring out where and why it was necessary to batch rename files based on regex and create a workaround. This is the bit rot that I was referring to. If it is no longer possible to apt install the rename script then it requires work to fix the REC build for a reason that has nothing to do with the inherent functionality of the blueprint.

Tarun Jain

Thanks Paul for triggering the build.

Since the build failed due to some issues, is it possible to share an old image which can be used for deployment?

I need to do installation in my lab environment to understand the complete architecture and working.

Regards,

Tarun

Paul Carver

You're probably going to need to do some tweaking to get REC working on Dell. As I mentioned, the primary platform we used and the only platform that was tested by the CI/CD process was the Nokia OpenEdge. I would suggest running your own build locally using the instructions here How to Build a REC or Telco Appliance ISO.

Because REC's primary goal was to do a zero touch bare metal install, it is quite sensitive to the specific details of the hardware platform's preboot quirks, including things like how hard drives are enumerated.

You can take a look at Gerrit for examples of the work that Dave did, some of which (particularly the changes in 2019) related to Dell compatibility.

https://gerrit.akraino.org/r/q/owner:davek%2540research.att.com

d k

As Paul said, I think you'll find attempting to install on Dell hardware a challenge at best — we've really only tested anything at all recent on the Nokia OpenEdge hardware. If you'd still like to take a swing at it, I think your best bet is building the images yourself following the instructions here: How to Build a REC or Telco Appliance ISO

I think you'll need a bunch of tools to make the build work that aren't really mentioned on that page. Looking back at what I've got installed on my build box, it looks like at a minimum you'll likely need a CentOS 7 with the following packages (in addition to the standard development tools):

- docker-ce

- createrepo

- qemu-image

- isohybrid

- libguestfs-tools

- libguestfs

- python2-pip

There may be others I've missed. Again, though, I don't think we can promise that what you'll end up with is a REC that works, or even boots, on Dell hardware. If it does work, I'd love to hear about it though!